Archives:History of IEEE Since 1984 - TECHNICAL TOPICS

International Congresses

The first International Exposition of Electricity (Exposition internationale d'électricité) was held in 1881 at the Palais de l'Industrie on the Champs-Élysées in Paris, France. Exhibitors came from France, Germany, Italy, the Netherlands, the United Kingdom, and the United States. They displayed the newest electrical technologies, such as arc and incandescent lamps, meters, light fixtures, generators, boilers, steam engines, and related equipment. (See Appendix 2: Select Electrical Congresses, Exhibitions, and Conferences.) As part of the exhibition, the first International Congress of Electricians featured numerous scientific and technical papers, including proposed definitions of the standard practical units of volt, ohm, and ampere. This congress was a decisive step in the building of the modern International System of Units (SI).

On 8 March 1881, Alexander Ramsey, U.S. Secretary of War, ordered Captain David P. Heap, Corps of Engineers, to sail for Europe in July for “the purpose of acquiring information respecting late inventions and improvements in electricity” by attending the International Exhibition of Electricity to be held at Paris from early August to mid-November 1881. On his return, he was to submit a detailed report for the use of the War Department.[1] The U.S. State Department appointed him honorary commissioner from the United States, and military delegate to the Congress of Electricians.

The first International Electrical Congress was held in conjunction with the electrical exhibition. The 1881 Electrical Congress was one in a series of international meetings held between 1881 and 1904 in the then-new field of applied electricity. The first meeting was initiated by the French government and included official national representatives, leading scientists, engineers, industrialists, entrepreneurs, and others. Often, countries sent official representatives and companies sent displays of their newest products. Subsequent meetings also included official representatives, leading scientists, and others. The primary aim was to develop reliable standards for both electrical units and electrical apparatus.

Pre-1884 Electrical History

Earlier Discoveries

Before there were electrical engineers, and before anyone coined the word “electron,” there were observations of certain physical phenomena that needed explaining. Charles Coulomb showed that the force of attraction between two charged spheres was inversely proportional to the square of the distance between them, lending precision to the field of electrical studies. In 1799, Alessandro Volta developed the first electrical battery. This battery, known as the Voltaic cell, consisted of two plates of different metals immersed in a chemical solution. Volta's development of the first continuous and reproducible source of electrical current was an important step in the study of electromagnetism and in the development of electrical equipment.[2] He built a battery, known as a Voltaic pile, made of alternating copper and zinc discs, with each pair of metals separated by flannel soaked in weak acid. The Voltaic pile stimulated so much scientific inquiry that, by 1831, when Michael Faraday built the first dynamo, the basis of electricity had been established.

INSERT Fig. 1-7. Five Pioneers in the Development of the Electric Dynamo and Motor. (Jehl, v. 2, p. 587.)

Volta’s battery made electricity easily available in the laboratory. Hans Ørsted, in 1820, used one to pass current through a wire near a compass, causing its needle to swing to a position at right angles to the wire, the first indication that electric current and magnetism were related. Soon after, mathematician André-Marie Ampère observed that a live coiled wire acts as a magnet, and developed equations predicting the force between two current-carrying conductors. This established the field of charges-in-motion, or electrodynamics. In 1827, schoolteacher Georg Simon Ohm wrote a simple equation relating potential, current, and circuit resistance, now known as Ohm's law.

One more discovery would be needed to set the stage for a new age of electrical invention. In 1821, Michael Faraday demonstrated that a magnet placed near a current-carrying wire caused the wire to move. That concept formed the basis of the electric motor. A decade later, he passed a current through one of two coils wrapped around an iron ring. When he varied the current in the first coil, it induced a current in the second. This experiment established the principles of the transformer and the rotating generator.

The theoretical and experimental basis for “engineering the electron” arrived with the work of James Clerk Maxwell. Between 1860 and 1871, at his family home of Glenlair in Scotland and at King’s College London, where he was Professor of Natural Philosophy, Maxwell conceived and developed his unified theory of electricity, magnetism, and light. A cornerstone of classical physics, the Theory of Electromagnetism is summarized in four key equations that now bear his name. Maxwell’s equations underpin all modern information and communication technologies. Maxwell built on the earlier work of many giants, including Ampère, Gauss, and Faraday, but he revolutionized the fields of electrical and optical physics, and laid the groundwork for electrical, radio, and photonic engineering, with his experiments, theories, and publications. The unification of the theories of electricity, magnetism, and light, which comes directly from Maxwell’s equations, clearly sets Maxwell’s work apart from similar achievements of the time.[3]

In 1865, Maxwell mathematically unified the laws of Ampère and Faraday, and expressed in a set of equations the principles of the electromagnetic field. He showed the electric field and the magnetic field to be indissolubly bound together. Maxwell also predicted that acceleration or oscillation of an electric charge would produce an electromagnetic field that radiated outward from the source at a constant velocity. This velocity was calculated to be about 300,000 km/s, the velocity of light. From this coincidence, Maxwell reasoned that light, too, was an electromagnetic phenomenon.

Maxwell's prediction of propagating electromagnetic fields was experimentally proven in 1887, when Heinrich Hertz, a young German physicist, discovered electric waves at a distance from an oscillating charge produced across a spark gap. By placing a loop of wire with a gap near the oscillator, he obtained a spark across the second gap whenever the first gap sparked. He showed that waves of energy produced by the spark behaved as light waves do; today we call these radio waves. Faraday established the foundation for electrical engineering and the electrical industry with his discovery of induction; Maxwell, in predicting the propagation of electromagnetic fields through space, prepared the theoretical base for radio and its multitude of uses. With an understanding of the fundamental laws of electricity established, the times were ripe for the inventor, the entrepreneur, and the engineer.

The Electric Telegraph and Telephone: Instantaneous Communication

At the time of Faraday's discoveries in the 1820s, information traveled at the speed of a ship or a messenger on either foot or horseback. Not much had changed since 490 B.C.E., when the runner Pheidippides carried the news of the battle of Marathon to Athens. Semaphore relay systems could overcome distances, but only if the weather cooperated. Messages by sea traveled so slowly that British and American forces met in the Battle of New Orleans two weeks after the Treaty of Ghent was signed ending the War of 1812. However, by the middle of the nineteenth century, advances in communication and transportation technology created a more interconnected world in which steam-powered ships and railways accelerated the pace of travel, and the telegraph and underwater cables quickened the pace of communication.

William Cooke, an Englishman studying anatomy in Germany, turned his thoughts to an electric telegraph after he saw some electrical experiments at Heidelberg. In London, he sought the help of Michael Faraday, who introduced him to Charles Wheatstone of King’s College, London, who was already working on a telegraph. In 1838, they transmitted signals over 1.6 km (1 mi). Their system used five wires to position magnetic needles to indicate letters. Because of discussions with the American Joseph Henry in England in 1837, Wheatstone later adapted electromagnets to a form of telegraph that indicated directly the letters of the alphabet.

In September 1837, while visiting New York University (NYU), Alfred Vail saw Professor Samuel F.B. Morse, the artist-inventor, demonstrate his "Electro-Magnetic Telegraph," an apparatus that could send coded messages through a copper wire using electrical impulses from an electromagnet. A skilled mechanic, Vail saw the machine's potential for communications and offered to assist Morse in his experiments for a share of the inventor's profits. Morse was delighted when Alfred Vail persuaded his father, Judge Stephen Vail, a well-to-do industrialist, to advance $2,000 to underwrite the cost of making the machine practical. The judge provided them room in his Speedwell Iron Works at Morristown, New Jersey, for their experiments. Thus, while Morse continued to teach in New York, Alfred went to work eliminating errors from the telegraph equipment.

Alfred had a written agreement with Morse to produce a working, practical telegraph, at Vail's expense, by 1 January 1838. Vail and his assistant, William Baker, worked feverishly behind closed doors to meet the deadline. Morse kept track of their progress, mostly by mail. New Year’s Day came without a completed, working model. Morse, now visiting, became insistent. Alfred knew that if success did not come soon his father would cut off his financial support and the experiments would end. Five days later, the telegraph was ready for demonstration. Alfred and Morse invited the judge to the workshop.

Judge Vail wrote down a sentence on a slip of paper and handed it to his son. Alfred sat at the telegraph machine, manipulated metal knobs, and transmitted, by a numerical code for words, the judge's message. As the electrical impulses surged through the two miles of wire looped through the building, Morse, at the other end, scribbled down what he received. The message read, "A patient waiter is no loser." (IEEE commemorated the demonstration of practical telegraphy with IEEE Milestone 13, dedicated on 7 May 1988.[4])

INSERT Fig. 1-9. Photograph of Practical Telegraphy IEEE Milestone No. 13 Ceremony.

In 1839, Morse was encouraged by a discussion with Henry, who backed Morse’s project enthusiastically. Morse adopted a code of dots and dashes for each letter and his assistant, Alfred Vail, devised a receiving sounder. Morse sought and received a U.S. federal government grant of $30,000 in 1843 for a 60 km (38 mi) line from Baltimore, Maryland, to Washington, D.C., along the Baltimore and Ohio railroad that would prove the efficacy of his system. On 24 May 1844, this line was opened with the famous message, "What hath God wrought?" Telegraph lines were rapidly built, and by 1861, they spanned the North American continent, many parts of the developed world, and Europe’s empires.

In the mid-nineteenth century, inventors and entrepreneurs looked to span continents, rivers, and oceans, but geography and distance, as well as the limits of existing theory and technology, were obstacles to the expansion of telegraph communication networks. Morse had laid a cable under New York harbor in 1842, and in 1851 another was laid across the English Channel from Dover, England, to Calais, France. In the 1850s, a number of attempts were also made to lay a cable between Ireland and Newfoundland. In 1858, the American Cyrus Field and his English associates installed a cable from Ireland to Newfoundland. It successfully carried a congratulatory message from Queen Victoria to U.S. President James Buchanan. However, it failed after a few weeks because the mechanical design and insulation of this 3,200-km (2,000-mi) line were faulty. In 1864, two investors put up the capital and the Great Eastern was offered to Cyrus Field to lay another cable. The Great Eastern was five times larger than any vessel afloat at the time. She was able to carry the entire new cable, which weighed 7,000 tons. Its 2,600 miles could be lowered in a continuous line from Valentia, Ireland, to Heart’s Content, Newfoundland. In June 1865, the Great Eastern arrived at Valentia and began laying the cable. Within 600 miles of Newfoundland, the cable snapped and sank. The Great Eastern, after making several attempts to recover the cable without success, returned to Ireland.

William Thomson (later Lord Kelvin) had developed an effective theory of cable transmission, including the weakening and the delay of the signal to be expected. It took seven years of study of cable structure, cable-laying procedures, insulating materials, receiving instruments, and other details before a fully operational transoceanic cable was laid in 1866. A new company, the Anglo-American Telegraph Co., raised £600,000 to make another attempt at laying the cable. On Friday 13 July 1866, the Great Eastern steamed westward from Valentia with 2,730 nautical miles of telegraph cable in her hold.[5] Fourteen days later, 1,852 miles of this cable had been laid across the bottom of the ocean, and the ship dropped anchor in Trinity Bay, Newfoundland.[6] The successful landing of the cable established a permanent electrical communications link that altered personal, commercial, and political relations between people across the Atlantic Ocean. The expedition also succeeded in raising from the seabed the broken end of the cable lost in 1865, and connected it to its terminus in Newfoundland. By 1870, approximately 150,000 km (90,000 mi) of underwater cable had been laid, linking all inhabited continents and most major islands. Later, additional cables were laid from Valentia, and new stations opened at Ballinskelligs (1874) and Waterville (1884), making County Kerry a focal point for Atlantic communications. Five more cables between Heart's Content and Valentia were completed between 1866 and 1894. This station continued in operation until 1965.

INSERT Fig. 1-13 and 1-14. Photos, IEEE Milestone No. 5 & No. 32 dedication ceremonies.

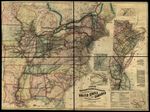

After the U.S. Civil War, the telegraph business expanded in conjunction with industrial and commercial growth in the United States. An economic problem emerged: simultaneous messages required individual lines, so more and more wires were needed over each route to handle the increased number of messages. This multiplicity of lines tied up capital, and the networks of wires cluttered the space above the city streets. Great rewards were envisioned for an inventor who could make one line carry several messages simultaneously. In 1872, Western Union paid handsomely for the duplex patent of Joseph Stearns, which doubled the capacity of a telegraph line. Telegraph networks continued to expand, and by 1876, there were 400,000 km (250,000 mi) of lines on 178,000 km (110,000 mi) of routes in the United States.

In 1872, Alexander Graham Bell read a newspaper account of the Stearns duplex telegraph and saw an opportunity for a quick fortune in a multiplex telegraph line. By extending the work of Hermann von Helmholtz, who used vibrating reeds to generate frequencies, Bell could make each frequency carry an individual telegraph message. The son of a professor of elocution at the University of Edinburgh, Bell had arrived in Boston the year before to teach at the School for the Deaf.

Elisha Gray, superintendent of the Western Electric Manufacturing Company in Chicago, also saw the possibilities for a multiplex telegraph, especially one using different frequencies. In 1874, Gray discovered that an induction coil could produce musical tones from currents created by a rubbing contact on a wire, so he resigned his post to become a full-time inventor. He used magnets connected to a diaphragm and located near an induction coil as receivers and transmitters. Gray demonstrated some of his equipment to Joseph Henry in Washington in June 1874. Henry, in a letter to John Tyndall at the Royal Institution in London, stated Gray was "one of the most ingenious practical electricians in the country."

In March 1875, Bell met Henry and impressed him with his understanding of acoustical science. Henry told Bell that Gray “was by no means a scientific man,” and he encouraged Bell to complete his work. When Bell protested that he did not have sufficient electrical knowledge, Henry exclaimed, “Get it.” Nevertheless, in 1875, Gray’s multiplex equipment was more advanced than Bell’s. Gray, the practical man, saw the rewards of the multiplex telegraph and dismissed the telephone as a development of merely scientific interest, whereas Bell, the man with the scientific background, saw the telephone’s potential as a device that could transmit speech.

INSERT Fig. 1-15. Photo, Bell telephone exhibited at Centennial Exhibition, 1876.

On 14 February 1876, Gray’s lawyers filed a caveat in the U.S. Patent Office, which as a defensive measure included a description of a telephone transmitter, but Bell’s lawyer had filed a patent application on the telephone a few hours earlier. Less than one month later, on 10 March 1876, at his laboratory in Boston, Bell transmitted the human voice with the famous sentences "Mr. Watson, come here. I want you," addressed to his assistant down the hall. Bell developed instruments for exhibition at the Centennial Exhibition held that summer in Philadelphia, which attracted great interest and fostered commercial development. In early 1877, commercial installations were made, and the first Bell switchboard was placed in operation in New Haven, Connecticut, in January 1878, with twenty-two subscriber lines. Indeed, it seemed some people were willing to pay for speech communication, albeit primarily for business purposes. In time, the telephone became a common household technology for the upper and middle classes.

The potential of the telegraph and telephone attracted another young inventor to the field of electrical communication, and he was to have a profound impact. Thomas Alva Edison was a self-taught, highly successful inventor who had established a laboratory at Menlo Park, New Jersey. He had produced a carbon microphone for the telephone system, and was always interested in projects in which he could see commercial prospects. Edison was attracted to the possibilities of an incandescent lamp. George Barker, Professor of Physics at the University of Pennsylvania, was an acquaintance who was fascinated by the work of Joseph Swan on incandescent lamps, and he passed this enthusiasm to Edison. In October 1878, Edison embarked on what was to be a hectic year of incandescent lamp research. Edison's first lamps used a platinum filament and operated in series at ten volts each. He soon realized that parallel connections, with each lamp separately controlled, would be more practical. This increased the current to the sum of currents taken, and this made larger conductors necessary.

INSERT Fig. 1-17. Edison’s Menlo Park Laboratory, 22 Feb. 1880. (Jehl, v. 2, p. 459.)

INSERT Fig. 1-18. The steamship S.S. Columbia built in 1880 for Henry Villard and equipped with an Edison isolated electric light plant. (Jehl, v. 2, p. 556.)

INSERT Fig. 1-19. Original Edison dynamo used on S.S. Columbia, and later a part of the restored Menlo Park collection at Henry Ford’s museum in Dearborn, Michigan. (Jehl, v. 2, p. 559.)

The Menlo Park laboratory staff increased as more men came in to speed the work on electric light research. Among them was Francis Upton, a recent M.S. in physics from Princeton University, who was added at the urging of Grosvenor Lowrey, Edison's business and financial adviser. Upton focused on mathematical problems and analyses of equipment. By the fall of 1878, Edison and Upton realized that without a high electrical resistance in the lamp filament, the current in a large parallel system would require conductors so large that the investment in copper would be prohibitive. They considered the 16-candlepower gas light their competition, and they found that an equivalent output required about 100 watts. Using a 100-volt distribution voltage (later 220 V in a three-wire system), each lamp would need to have a resistance of about one hundred ohms. Although they had a platinum filament of sufficient resistance, Upton’s calculations indicated that the cost of platinum would make the lamp uncompetitive. Edison turned his attention to searching for a high-resistance filament, and after trials of many carbonized materials, he had success on 21 October 1879 with a filament made from a carbonized cotton thread. In November 1879, a longer-lived filament was made from carbonized bristol board, and a little later Edison produced even longer-lived lamps made with carbonized split bamboo.
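The resistance figure follows from the power relation P = V²/R. The sketch below reproduces that arithmetic from the round numbers quoted above (about 100 W per lamp on a 100 V line). The function names and the 10 V comparison case are illustrative assumptions, not a reconstruction of Upton's actual calculations.

```python
def lamp_resistance_ohms(volts: float, watts: float) -> float:
    """Resistance of a lamp that dissipates `watts` at `volts` (P = V^2 / R)."""
    return volts ** 2 / watts


def lamp_current_amps(volts: float, watts: float) -> float:
    """Current drawn by one lamp (P = V * I)."""
    return watts / volts


# Round figures quoted in the text.
LAMP_WATTS = 100.0
LINE_VOLTS = 100.0

r_high = lamp_resistance_ohms(LINE_VOLTS, LAMP_WATTS)
i_high = lamp_current_amps(LINE_VOLTS, LAMP_WATTS)

# Hypothetical comparison (an assumption for illustration only):
# the same lamp power delivered at 10 V instead of 100 V.
r_low = lamp_resistance_ohms(10.0, LAMP_WATTS)
i_low = lamp_current_amps(10.0, LAMP_WATTS)

print(r_high, i_high)  # 100.0 1.0
print(r_low, i_low)    # 1.0 10.0
```

In a parallel system the feeder carries the sum of the lamp currents, so under these assumptions the low-resistance lamp would put ten times the current into the conductors for the same number of lamps.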

INSERT Fig. 1-20. Thomas Edison and Menlo Park Laboratory Staff, 1879.

INSERT Fig. 1-21. New Year’s Eve demonstration at Menlo Park, 31 Dec. 1879.

INSERT Fig. 1-22. Collage of electric light inventors – Brush, Swan, Maxim, etc.

INSERT Fig. 1-23. English Contemporaries of Edison. (Jehl, v. 2, p. 538.)

INSERT Fig. 1-24. Great European Contemporaries of Edison. (Jehl, v. 2, p. 539.)

INSERT Fig. 1-25. American Contemporaries of Edison. (Jehl, v. 2, p. 461.)

INSERT Fig. 1-26. Scientific Contemporaries of Edison. (Jehl, v. 2, p. 463.)

During the fall of 1879, Edison held many public demonstrations of his light bulb and electric light system at his laboratory in Menlo Park, New Jersey, for friends, investors, reporters, and others eager to see the newest efforts of the Wizard of Menlo Park. On 20 December 1879, Edison welcomed reporters and many members of the New York City Board of Aldermen, who had the power to grant franchises to construct an electric light system in the city. The Edison Electric Light Company, which funded Edison’s lamp research, placed enormous pressure on him to bring the light bulb to market. Edison, ever the showman and master of public relations, arranged for the press to announce the invention on 21 December. Later, an impressive public demonstration with five hundred lamps was arranged at Menlo Park, and the Pennsylvania Railroad ran special trains from New York City for the event.

In the fall of 1881, Edison displayed lamps and his "Jumbo" generator of 50 kW at the International Electrical Exhibition in Paris, to a very positive reception. Although the lengthy field structure of the Edison machines was criticized as poor magnetic design by Edward Weston, who also manufactured generators at that time, Edison and Upton had grasped the necessity for high-power efficiency, obtainable in a generator having low internal resistance.

Generator design was not well understood at the time. This was particularly true of the magnetic circuit. The long magnets of Edison's "Jumbo" were an extreme example of the attempt to produce a maximum field. In 1873, Henry Rowland had proposed a law of the magnetic circuit analogous to Ohm's law. However, it was not until 1886 that the British engineers, John and Edward Hopkinson, presented a paper before the Royal Society that developed generator design from the known properties of iron. The full development of theories of magnetic hysteresis had to wait for Charles Proteus Steinmetz in 1892. Again, inventive engineering was outpacing scientific understanding, but for the impact of an invention to be completely realized, more discovery would be needed.

The first central power station was that of the California Electric Light Company in San Francisco in 1879. It was designed to supply twenty-two arc lamps. The first major English central station was opened by Edison on Holborn Viaduct in London in January 1882, supplying 1,000 lamps. The gas lighting monopoly, however, lobbied successfully for certain restrictive provisions in the British Electric Lighting Act, which delayed development of electrical illumination in the country.

In the late 1800s, manufactured gas and kerosene dominated lighting, but Edison’s invention of a practical incandescent bulb created a powerful incentive for electrical distribution.

Edison and Upton were also working on plans for a central power station to supply their American lamp customers. They estimated a capital investment of $150,000 for boilers, engines, generators, and distribution conductors. The buried conductors were the largest item at $57,000, of which the copper itself was estimated to require $27,000. This made clear to Edison that the copper cost for his distribution system was to be a major capital expense. To reduce the copper cost, Edison contrived first (in 1880) a main-and-feeder system and then (in 1882) a three-wire system. Annual operating expenses were placed at $46,000, and the annual income from 10,000 installed lamps was expected to be $136,000. This left a surplus of $90,000 for return on patent rights and interest on capital.
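The estimate above can be checked with a few lines of arithmetic. This is only a sketch using the dollar figures quoted in the text. The variable names are illustrative, and the percentage shares are derived here rather than taken from Edison's or Upton's records.

```python
# Dollar figures as quoted in the text.
CAPITAL_INVESTMENT = 150_000  # boilers, engines, generators, conductors
CONDUCTOR_COST = 57_000       # buried conductors (largest single item)
COPPER_COST = 27_000          # copper within the conductor item
ANNUAL_EXPENSES = 46_000
ANNUAL_INCOME = 136_000

# Annual surplus available for patent rights and interest on capital.
surplus = ANNUAL_INCOME - ANNUAL_EXPENSES

# Derived shares of the capital estimate (not stated in the source).
conductor_share = CONDUCTOR_COST / CAPITAL_INVESTMENT
copper_share = COPPER_COST / CAPITAL_INVESTMENT

print(f"surplus: ${surplus:,}")                      # surplus: $90,000
print(f"conductors: {conductor_share:.0%} of capital")  # conductors: 38% of capital
print(f"copper: {copper_share:.0%} of capital")         # copper: 18% of capital
```

On these numbers the conductors account for more than a third of the planned investment, which is consistent with the attention Edison gave to the main-and-feeder and three-wire schemes.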

To use his system to best advantage, Edison sought a densely populated area. He selected the Wall Street section of New York. He placed in service the famous Pearl Street station in New York on 4 September 1882, for which another "Jumbo" of larger size was designed. Six of these machines were driven by reciprocating steam engines supplied with steam from four boilers. They produced an output of about 700 kW to supply a potential load of 7,200 lamps at 110 V. The line conductors were of heavy copper, in conduit installed underground.

INSERT Fig. 1-29. Lamps of Edison’s Principal Rivals, including Sawyer-Mann, Farmer, Maxim, and Swan. (Jehl, v. 2, p. 476.)

INSERT Fig. 1-30. The Edison dynamo, large, displayed at the International Electrical Exhibition, Paris, 1881. (David Porter Heap)

INSERT Fig. 1-32. Pearl Street central station, 1882. The building at 257 Pearl St. housed the generators and all the accessory equipment, while the offices and storehouses were at 255. (Jehl, v. 3, p. 1040.)

The first hydroelectric power plant (at first an isolated plant rather than a true central station) followed quickly, going into service on 30 September 1882, on the Fox River at Appleton, Wisconsin, with a 3-m (10-ft) head and two Edison generators of 12.5 kW each. It had a direct current generator capable of lighting 250 sixteen-candlepower lamps, each equivalent to 50 watts. The generator operated at 110 volts and was driven through gears and belts by a water wheel operating under a ten-foot fall of water. As the dynamo gained speed, the carbonized bamboo filaments slowly changed from a dull glow to incandescence. The “miracle of the age” had been performed, and the newspapers reported that the illumination was “as bright as day.” The Vulcan Street Plant was the precursor of many projects to follow, in which civil, mechanical, and electrical engineers cooperated to provide hydroelectric power for the United States.[7]
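The lamp count and the generator rating quoted for the Appleton plant agree with each other, as a quick check shows. The sketch assumes all lamps are connected in parallel at the full 110 V and ignores line losses. The variable names are illustrative.

```python
# Figures quoted in the text for one Appleton generator.
LAMPS = 250
WATTS_PER_LAMP = 50.0
LINE_VOLTS = 110.0

# Total lamp load, to compare with the 12.5 kW generator rating.
load_kw = LAMPS * WATTS_PER_LAMP / 1000.0

# Assumption: lamps in parallel at full line voltage, no line losses.
lamp_current = WATTS_PER_LAMP / LINE_VOLTS
total_current = LAMPS * lamp_current

print(load_kw)                  # 12.5
print(f"{total_current:.1f}")   # 113.6
```

Under these assumptions the full lamp load matches the 12.5 kW rating and corresponds to a line current of roughly 114 A.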

INSERT Fig. 1-34. Photo, IEEE Milestone dedication ceremony on 15 Sept. 1977.

Power generating stations proliferated in locations close to the potential consumers of electricity. Over a few decades they became consolidated into a handful of large companies (see below). The telegraph industry also grew rapidly. By 1880, the Western Union Company, with its network of telegraph lines, controlled the largest segment of the electrical industry. The rise of big electrical companies set the stage for the formation of a profession and high-profile executives who would lead the formation of what eventually became IEEE.

Central Stations

Central Stations: Roots of the Electric Utility Industry

Edison, as an entrepreneur in the incandescent electric light field, contended with three main factors: cost, quality, and reliability. In order to replace other forms of artificial illumination, an incandescent system had to provide a superior product at a competitive price. Edison, Swan, Maxim, and other manufacturers of electric lamps intended to sell more than a simple light bulb. Central electrical generating stations, the pioneers of today's utility industry, were developed in conjunction with the electric lamp. The number of local illuminating companies operating commercial electric light central stations grew rapidly because low-voltage direct current could serve only small areas. Because of the limitation on transmission distance, many stations were needed to supply cities such as New York or London. By 1900, there were more than 2,800 small DC stations in the United States. However, by 1885 inventors were already exploring a new option, alternating current (AC).

The wide reach of electricity enabled elevators, thus stimulating the building of skyscrapers. Electric lights, installed in many city streets by the 1890s, made them safer. Lighting also allowed businesses to operate longer hours and gave rise to new forms of entertainment, such as movies, amusement park rides and—eventually—radio and television. At the same time, electrification of society also exacerbated social and economic inequities between the cities and the countryside, where entrepreneurs saw no profit in extending electric power to widely dispersed households.

Although Edison patented alternating current equipment and developed a high-resistance lamp filament to reduce line losses, he remained committed to direct current, and the companies he founded to manufacture components continued to flourish. Indeed, he laid the foundation for the electrical manufacturing industry. The so-called Wizard of Menlo Park and his associated companies had much invested, and, all things considered, contact with a direct current line was less likely to be fatal than contact with an alternating current transmission line. In early 1888, the Edison companies went on the offensive, arguing that DC was more reliable, more compatible with existing equipment, and, most importantly, safer. In addition, DC could be metered, while AC customers paid by the lamp, which gave them no incentive to turn lamps off, even when not needed. However, in 1888, Oliver B. Shallenberger of Westinghouse developed a magnetic disk meter for AC power.

In 1888, Nikola Tesla received patents on a polyphase AC motor and presented a paper at an AIEE meeting. Westinghouse purchased the patents and hired the inventor as a consultant. It took a few more years to solve some of the problems of the induction motor and to develop the two- and three-phase systems of distribution for AC power. Benjamin Lamme makes clear in his memoir that it took a lot of work by him and by Westinghouse’s teams of engineers to turn the polyphase dynamo described in Tesla’s paper into commercial products. Tesla was certainly useful, but he never commercialized any of his patents himself.

The promise of a solution to the motor problem removed a second major argument of Edison’s interests for DC, and the furor over the safety of AC began to subside as well. In the summer of 1891, Westinghouse constructed a 19-km (12-mi) transmission line from Willamette Falls to Portland, Oregon, operating at 4,000 V. In the same year, a 3,000-V, 5-km (3-mi) line supplied a motor at Telluride, Colorado.

Alternating current power stations, with their coal smoke and particulates, could be located near industrial plants to supply their loads, and transmission lines could supply residential and office lighting and elevator loads elsewhere in town. Industrial use of electricity developed rapidly after Tesla, aided by C. F. Scott and B. G. Lamme at Westinghouse, commercialized the AC induction motor in 1892 and Elihu Thomson developed the repulsion-induction motor for GE in 1897.

In 1895, the Niagara Falls project showed that it was practical to generate large quantities of AC power and to transmit it to loads elsewhere. While some of the electricity was transmitted twenty miles to Buffalo, most of the electricity generated by this power station was used in close proximity to the falls at electrochemical and aluminum processing plants.

Westinghouse won the contract based partly on its extensive AC experience. After much debate, the specifications called for three alternators, 5,000 hp each, two-phase, 2,200 V, and 25 Hz. Frequency had previously been chosen based on characteristics of the steam engines that drove the turbines, but AC motors required lower frequencies. In 1889-1890, Lewis B. Stillwell proposed 3600 cycles per minute or 60 Hz, which was acceptable for both lamps and motor loads. When the Niagara alternators were being designed, a compromise was reached at 25 Hz, which was suited to motor applications or electrolytic refining, but was within the flicker rate of incandescent lamps. In later years it produced a very objectionable flicker in fluorescent lamps, and areas supplied with 25 Hz have since largely been changed to 60 Hz operation.

On the European continent, progress also continued apace. In 1891, soon after Willamette, the installation of a three-phase AC line at 30,000 V, carrying 100 kW for 160 km (100 mi) between Lauffen and Frankfurt in Germany, created much interest and further advanced the AC cause. Technically, Oerlikon of Switzerland and Allgemeine Elektricitats-Gesellschaft (AEG) showed that their oil-immersed transformers could safely operate at 40,000 V.

Even after Niagara Falls cemented the American lead in technology, many advances continued to come from Europe. Walther Nernst of Göttingen developed the Nernst lamp, a light source of heated refractory oxides, in 1899. Siemens and Halske patented a tantalum filament lamp in 1902, and Carl Welsbach produced the osmium filament lamp. These challenges to the lucrative carbon lamp business alerted Steinmetz and General Electric (GE) at Schenectady, and the General Electric Research Laboratory was established in 1901, with Leipzig-trained Willis Whitney in charge. Steinmetz saw competitive threats to the company's lucrative lamp business, which led him to propose a laboratory, divorced from production problems, devoted to work on scientific fundamentals underlying company products. In the fall, Willis R. Whitney, an assistant professor of chemistry at M.I.T., arrived as the first laboratory director. Although Edison had already pioneered what might be considered the first research and development laboratory at Menlo Park, New Jersey (and later in West Orange, New Jersey), the fact that the now large and growing electrical industry saw the need to do research at the corporate level shows the significance of the new electrical industry.

The reciprocating steam engine, usually directly connected to the generator, reached 5,000 hp by 1900. The 10,000-hp engines built for Ferranti's Deptford Station required the casting of the heaviest steel ingots in the history of British steelmaking, indicating that a much greater engine size might not be possible. The challenge was that, fundamentally, the reciprocating engine and the electric generator were a mechanical mismatch. The reciprocating engine supplied an intermittent torque, whereas the electrical generator and its load called for a continuously applied torque. This inequality was smoothed by storing rotational energy in large flywheels, but these took up space and were necessarily heavy and costly. The constant torque of the steam turbine was an obvious answer to the need. Turbine development had been carried on for many years, and in 1883, de Laval in Sweden designed a practical machine operating on the impulse principle, the wheel being struck by high-velocity steam from a nozzle. Rotational speeds as high as 26,000 rpm were produced, but the power output was too limited by the gearing needed to reduce the speed to that suitable for the generators. However, by 1889, de Laval had a turbine that drove a 100-kW generator.

Above 40,000 volts, the line insulators posed a limit, being pin-mounted porcelain of considerable size and fragility. In 1907, H. W. Buck of the Niagara Falls Power Company and Edward M. Hewlett of General Electric solved the problem with suspension insulators composed of a string of porcelain or glass plates whose length could be increased as the voltage was raised. C. F. Scott and Ralph Mershon (AIEE President, 1912-1913) of Westinghouse investigated another significant problem. Scott had noted luminosity accompanied by a hiss or crackling sound on experimental lines at night when operating above 20,000 volts. The phenomenon was the cause of considerable energy loss, enough to justify further study, which these men undertook on the Telluride line in 1894. They concluded that this "corona loss" was due to ionization of the air at the high field intensities created around small-diameter wires. The power loss appeared to increase rapidly above 50,000 volts, posing a potential limit to high-voltage AC transmission. Scott's 1899 report of the Telluride work interested Harris J. Ryan, who showed that the conductor diameter and spacing might be increased to reduce the field intensity and hence the corona loss. After this work, conductor diameters increased and line voltages reached 220 kV by 1923.

The invention of the rotary converter—a machine with a DC commutator on one end and AC slip rings on the other—helped to moderate the AC/DC battle, and allowed for an orderly transition of the industry from the era of electric light to the era of electric light and power. Westinghouse used this machine in its universal electrical supply system, based on polyphase AC, which it displayed at the Chicago Exposition of 1893. The general use of AC equipment was also aided in 1896 by a patent exchange agreement between General Electric and Westinghouse by which rational technical exchange became possible.

Some utility executives foresaw the need to bring order to the supply of electricity by consolidating small stations and their service areas into larger ones. In Chicago in 1892, Samuel Insull had left the dual position of vice president of the new General Electric Company and manager of its Schenectady works to become president of the Chicago Edison Company. Believing in the economy of large units, Insull's first step in Chicago was to enlarge the system by building the Harrison Street Station in 1894, with 2,400 kW of generator capacity. By 1904, the plant had been expanded to 16,500 kW, using 3,500-kW units. With so much generating capacity available, a large market had to be found, so Chicago Edison acquired twenty other companies in the Chicago area, becoming the Commonwealth Edison Company in 1907.

Insull and his chief engineer, Louis Ferguson (AIEE President, 1908-1909), saw that the space requirements and weight of the reciprocating steam engines at Harrison Street limited the maximum rating for that station. Travelling in Europe, Ferguson saw many advances in steam turbines. Insull worked out a risk-sharing arrangement with GE to build a 5,000-kW steam turbine, which went into service in October 1903. The unit, vertical in design, was one-tenth the size and one-third the cost of the reciprocating engine-generator initially scheduled for the plant. Although not as efficient as expected, the lower cost and improvements made later units satisfactory. In 1909, the original 5,000-kW units at Fisk Street were supplanted by 12,000-kW sets that required virtually no increase in floor space.

Insull, on an 1894 European trip, met Arthur Wright, then the manager of the Brighton municipal station. Wright had been influenced by Dr. John Hopkinson, a world-renowned electrical authority. Hopkinson had communicated ideas to Wright concerning the optimum loading of a system and the scheduling of customer tariffs to cover not only the cost of energy delivered, but also the cost of the capital needed to maintain the system. Wright had developed a metering system measuring not only the use of energy, but also the extent to which each customer used his installed capacity or his maximum demand on the system. Specifically, Insull learned that the system had to recognize both energy costs and capital costs for the customer. Load diversity and load factor were additional management principles introduced by Insull before 1914.
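
The tariff idea described above can be illustrated with a short sketch. It is only a minimal model, not Wright's or Insull's actual rate schedule: the function names, the hourly readings, and the two rates are hypothetical values chosen for illustration. Load factor is taken in its usual sense of average load divided by peak load.

```python
def load_factor(hourly_kw):
    """Average load divided by peak load over the metered period."""
    peak = max(hourly_kw)
    average = sum(hourly_kw) / len(hourly_kw)
    return average / peak


def two_part_bill(hourly_kw, energy_rate, demand_rate):
    """Energy charge on kWh delivered plus a capital charge on maximum demand.

    hourly_kw holds one reading per hour, so each reading in kW
    also equals the energy used in that hour in kWh.
    Both rates are hypothetical illustration values.
    """
    energy_kwh = sum(hourly_kw)
    max_demand_kw = max(hourly_kw)
    return energy_kwh * energy_rate + max_demand_kw * demand_rate


# Two hypothetical customers who use the same energy in a day (240 kWh).
steady = [10.0] * 24                 # constant 10 kW all day
peaky = [0.0] * 20 + [60.0] * 4      # 60 kW for four hours only

if __name__ == "__main__":
    for name, profile in (("steady", steady), ("peaky", peaky)):
        print(name, load_factor(profile), two_part_bill(profile, 0.05, 1.0))
```

Under these assumed rates the two customers pay the same energy charge, but the customer with the short, high peak pays far more in total, because the station must hold six times the capacity in reserve for that customer.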

Wireless Telegraphy and the Birth of Radio

While advances were being made in electric power, telegraphy, and telephony, scientists and engineers wondered whether Hertzian waves could be used to transmit electromagnetic signals through the air without recourse to wires. In the 1890s, Tesla worked on the problem, as did Reginald Fessenden in Canada and Aleksandr Popov in Russia. The most commercially successful was Guglielmo Marconi, an Italian married to an Irish wife, who worked on wireless telegraphy first in Italy, then the U.K., then North America. Because of the discontinuity of electromagnetic waves produced by an electrostatic spark, Marconi transmitted dots and dashes based on Morse code. Receivers were likewise limited, originally coherers in which metal filings were moved by electromagnetic forces, and then natural semiconducting crystals of carborundum, silicon, and galena. After sending and receiving messages over increasing distances in the 1890s, in 1901 Marconi apparently succeeded in sending the letter “S” across the Atlantic Ocean. Despite these achievements, it would take more science and engineering to make radio a revolutionary means of communication, with all that implied for the world’s nations and societies.

INSERT Fig. 1-47. Marconi and his ship Electra (in MOH exhibit)

In 1897, J. J. Thomson announced a startling discovery. He had been trying to explain the nature of the cathode ray, a beam that occurs when an electric current is driven through a partially evacuated glass, or Crookes, tube. He found that the sub-atomic particles, now called electrons, which make up the beam, were more than 1,000 times lighter than the hydrogen atom. Thomson was awarded the Nobel Prize in 1906 “in recognition of the great merits of his theoretical and experimental investigations on the conduction of electricity by gases.”

The identification of the electron cleared up the puzzle of the Edison effect, which Thomas Edison had observed in 1880 while working on light bulbs with carbonized bamboo filaments. After glowing for a few hours, carbon from the filament would move through the vacuum and collect on the inside wall of the bulb. Edison found that the carbon had a charge, suggesting that electricity was moving through the vacuum. Because the electron had not yet been discovered, Edison could not explain the phenomenon. It later became the basis of the electron tube (often called the vacuum tube), which would soon have a tremendous effect on the world. Engineering based on these tubes came to be called electronics.

In 1904, John A. Fleming of England used the Edison effect to build a rectifier to detect radio waves. This two-element tube became known as a diode, and it produced an audible signal from a radio wave. The diode was stable but could not amplify the electromagnetic energy that a connected antenna received. In October 1906, Lee de Forest presented an AIEE paper on what he called an “Audion,” a three-element tube, or triode. When evacuated, the Audion enabled amplification of a modulated, continuous radio wave received through an antenna. Circuits using triodes became the key component of all radio, radar, television, and computer systems before the commercialization of the transistor in the 1950s.

In 1939, William Shockley at AT&T’s Bell Labs revived the idea of a semiconductor amplifier as a way to replace vacuum tubes. Under Shockley’s management, John Bardeen and Walter Brattain demonstrated in 1947 the first semiconductor amplifier: the point-contact transistor, with two metal points in contact with a sliver of germanium. In 1948, Shockley invented the more robust junction transistor, which was first built in 1951. The three shared the 1956 Nobel Prize in Physics for their inventions.

INSERT Fig. 1-48. Early radio inventors and entrepreneurs: Marconi, Fessenden, Armstrong, de Forest, and Sarnoff.

education

Engineering Education

The U.S. Congress authorized President George Washington to establish a school for engineers at West Point, New York, in 1794, which had a curriculum based on civil engineering. Rensselaer Institute at Troy, New York (now Rensselaer Polytechnic Institute), gave its first degrees in civil engineering in 1835 to a class of four. By 1862, it featured a four-year curriculum with parallel sequences of humanities, mathematics, physical science, and technical subjects. At that time, there were about a dozen other engineering schools, including some at big universities such as Harvard and Yale. The Morrill Land Grant Act of 1862, signed into law by President Lincoln, gave grants of federal land to the states, which helped them establish colleges of "agriculture and the mechanic arts, for the liberal and practical education of the industrial classes." Within ten years, seventy engineering schools had been established. C. F. Scott (Ohio State, 1885) and B. J. Arnold (Nebraska, 1897) were the first graduates of land-grant schools to reach the presidency of the AIEE, in 1902-1903 and 1903-1904, respectively.

After the U.S. Civil War, as steam became the prime mover, mechanical engineering diverged from civil engineering, with curricula at Yale, M.I.T., Worcester Polytechnic Institute, and Stevens Institute of Technology. Opinions differed on the relative importance of the practical and theoretical aspects of engineering education. Some schools such as M.I.T., Stevens, and Cornell emphasized mathematics and science, while others, including WPI, Rose Polytechnic, and Georgia Institute of Technology stressed shop work.

Electrical engineering usually began as optional courses in physics departments. The first such course was organized at the Massachusetts Institute of Technology (MIT) in 1882 by Charles R. Cross, head of the Department of Physics and one of AIEE’s founders. The next year, Professor William A. Anthony (AIEE President, 1890-1891) introduced an electrical course in the Department of Physics at Cornell University, in Ithaca, New York. By 1890, there were many such courses in physics departments. Professor Dugald Jackson (AIEE President, 1910-1911), the first professor of electrical engineering at the University of Wisconsin, wrote in 1934 that "our modes of thought, our units of measurement, even our processes of education sprang from the science of physics (fortified by mathematics) and from physicists." The University of Missouri started the first department of electrical engineering, in 1886. D. C. Jackson, of the Edison General Electric Company in Chicago, organized a department in 1891 at the University of Wisconsin, winning assurances that it would be on an equal footing with other academic departments.

The AIEE, as an organization both of the industry men and the experimenters, was extremely interested in how the next generation would be educated. The AIEE heard its first papers on education in 1892. Professor R. B. Owens of the University of Nebraska thought that the electrical curriculum should include "a good course in modern geometry, differential and integral calculus… But to attempt to analyze the action of alternating current apparatus without the use of differential equations is no very easy task... and when reading Maxwell, it becomes convenient to have quaternions or spherical harmonics, they can be studied in connection with such readings."

In 1903, the AIEE held a joint summer meeting with the newly formed Society for the Promotion of Engineering Education. The papers given by industrialists suggested that engineering students should learn "engineering fundamentals," but no mention was made of what those fundamentals were. There was disagreement on the value of design courses. One speaker urged that the senior year curriculum should include design courses that covered the materials of electrical engineering as well as study and calculation of dynamo-electric machinery and transformers, with minimum work at the drawing board. Steinmetz, in his AIEE presidential address in 1902, expressed the opposite view: “The considerations on which designs are based in the engineering departments of the manufacturing companies, and especially the very great extent to which judgement enters into the work of the designing engineer, makes the successful teaching of designing impossible to the college.” He urged more analysis of existing designs. Despite Steinmetz's recommendation, the teaching of design courses continued into the 1930s.

ge/bell labs/radio/rca/WWI

Research Laboratories at GE and Bell

The GE Research Laboratory was already working on improvements to the incandescent lamp. Tantalum superseded carbon in lamp filaments in 1906. William D. Coolidge tackled the problems posed by the next step. These were associated with the use of the metal tungsten. Tungsten was suited for lamp filaments because of its high melting point, but it resisted all efforts to shape it. Coolidge appears to have been more of an engineer than a scientist, a trait very useful in solving the problem of making tungsten ductile. Coolidge's ductile tungsten filaments made a further dramatic improvement in lamp output and efficiency when introduced in 1910. Because the filaments operated at a higher temperature, they produced more light. At the annual meeting of the AIEE in Boston in 1912, the GE Research Laboratory put its science team on show, with successive papers by Whitney, Coolidge, and Langmuir. The year 1913 brought an engineering first with a joint effort by Steinmetz of General Electric and B. G. Lamme of Westinghouse, the top engineers of their competing companies. This was a paper titled "Temperature and Electrical Insulation," which dealt with the allowable temperature rise in electrical machines.

The concept of team research that had been put forward by Edison at Menlo Park, and then expanded by him at West Orange, was now well established at Schenectady and supported by many accomplishments. This new management approach was valuable because it showed how the scientific advantages and freedom of the university could be combined with industrial needs and directions.

The engineering problems of the Bell telephone system in the United States were legion, but the most fundamental was the transmission of signals. Bell researcher William H. Doherty wrote in 1975, “The energy generated by a telephone transmitter was infinitesimal compared with what could be generated by pounding a telegraph key…But more than this, the voice spectrum extended into the hundreds, even thousands, of cycles per second. As the early practitioners, known as electricians, struggled to coax telegraph wires to carry the voice over larger distances, they were increasingly frustrated and baffled."

In 1900, George Campbell of Bell and Michael Pupin, professor of mathematical physics at Columbia University, independently developed the theory of the inductively loaded telephone line. Pupin was given the priority on patent rights. Oliver Heaviside had first pointed out that, after the line resistance, the line capacitance most limited long-distance telephone transmission. Acting between the wires, this capacitance shunted the higher voice frequencies, causing distortion as well as attenuation of the signal. He left his results in mathematical form, but, in 1887, there were few electrical engineers who understood mathematics well enough to translate Heaviside’s results into practical form; nor was there need to do so at that time.

Pupin drew his understanding of the physical problem from a mathematical analogy to the vibration of a plucked string loaded with spaced weights. He thereby determined both the amount of inductance needed to compensate for the capacitance and the proper spacing for the inductors. When, in 1899, the telephone system needed inductive loading to extend its long-distance lines, Pupin’s patents were purchased by the Bell system.

Using loading coils properly spaced in the line, the transmission distance reached from New York to Denver by 1911. This was the practical economic limit without a "repeater" or some device for regenerating the weak received signal.

Campbell's objective was always to extend the distance limits on telephone communication, but to do that he found it necessary to explore the mathematical fundamentals. His ability to do so increased the Bell Company's appreciation of in-house research, which had been only sporadically promoted.

Theodore N. Vail, who was one of the AIEE founders and had served as AT&T President in 1885-1887, but had left AT&T after financial disagreements, returned to AT&T in 1911. Vail's return signaled more support for basic research. This support was badly needed, as the system was about to start building a transcontinental line with the intent of initial operation at the opening of the Panama-Pacific Exposition in San Francisco to be held in 1915.

AT&T undertook a concerted study of the repeater problem. After its engineers made refinements to de Forest’s Audion, a transcontinental line with three spaced repeater amplifiers was used by Vail in July 1914, on schedule. The next January, President Woodrow Wilson and Alexander Graham Bell both spoke over the transcontinental line from Washington to San Francisco for the opening ceremonies of the Panama-Pacific Exposition in the latter city.

The Bell system initially had problems with the switching of customers' lines, solved with the so-called multiple board, which gave an array of operators access to all the lines of the exchange. Men were initially used as operators, since men dominated all industries outside the home, especially technical ones. However, AT&T found that a woman’s voice was reassuring to users, and so women began to dominate this occupation.

When automatic switching equipment was invented in 1889 by Almon B. Strowger, an undertaker from Kansas City, the Bell Company reacted as a monopoly sometimes does—it discounted the innovation. It took thirty years for Bell to drop its opposition. The automatic switching equipment, manufactured by the Automatic Electric Company of Chicago, operated by means of pulses transmitted by the dial of the calling telephone. Rotary relay mechanisms moved a selector to the correct tier of contacts, thus choosing the subscriber line desired by the calling party. The first installation was at LaPorte, Indiana, in 1892, with an improved design installed in 1900 at New Bedford, Massachusetts, for a 10,000-line exchange. This was a completely automated telephone exchange.

Bell's support of basic research in the field of communications was furthered in January 1925, by the organization of the Bell Telephone Laboratories from the Western Electric engineering department in New York, then numbering more than 3,600 employees. Frank B. Jewett became the first president of the Laboratories, which became a source of major contributions to the communications field.

In 1916, Bell engineers used a large array of small triodes in parallel to transmit voice by radio from Washington, D.C., to Paris and Honolulu, Hawai’i. There was a clear need for more powerful vacuum tubes to open long-distance radiotelephone communication. Although Alexanderson's high-frequency alternators had been used in point-to-point radiotelegraph service for many years, the future of radio lay with the vacuum tube.

An unexpected research bonus came from Karl Jansky of the Bell Labs in 1928, when he began a study of the static noises plaguing AT&T’s new transatlantic radiotelephone service. He found that most noises were caused by local and distant thunderstorms, and also there was an underlying steady hiss. By 1933, he had tracked the source of the hiss to the center of the Milky Way. His results were soon confirmed by Grote Reber, a radio amateur with a backyard antenna, and after the war the new science of radio astronomy rapidly developed with giant space-scanning dish antennas. This gave vast new dimensions to the astronomer's work, adding radio frequencies to the limited visual spectrum for obtaining knowledge from space.

Edwin Armstrong

In 1912, Edwin Armstrong was an undergraduate student at Columbia University, studying under Pupin. He built an amplifier circuit that fed the output signal back to the input, greatly boosting amplification. Armstrong patented the invention, the regenerative circuit, in 1914. It vastly increased the sensitivity of radio receivers, and was used widely in radio equipment until the 1930s.

At about the same time, Fessenden, Alexander Meissner in Germany, and H.J. Round in England all built circuits that produced similar results, as did de Forest himself a year or so later. De Forest started a patent action that was later taken over by AT&T. The ensuing long legal battle exhausted Armstrong's finances. The Supreme Court in 1934 decided against Armstrong. The Board of Directors of the Institute of Radio Engineers (IRE) took notice and publicly reaffirmed its 1918 award to Armstrong of its Medal of Honor for his "achievements in relation to regeneration and the generation of oscillations by vacuum tubes." Because of the patent litigation, many companies had used the regenerative circuit without paying Armstrong his royalties.

Meanwhile, Armstrong made a further discovery about this circuit: just when maximum amplification was obtained, the signal changed suddenly to a hissing or a whistling. He realized this meant that the circuit was generating its own oscillations. The triode, he realized, could thus be used as a frequency generator—an oscillator.
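
The link between regeneration and oscillation can be sketched with the textbook model of an amplifier with positive feedback. This is an idealized linear model offered only as an illustration, not Armstrong's own analysis; the function name and the numeric values are hypothetical. With open-loop gain A and a fraction b of the output fed back in phase, the overall gain is A / (1 - A*b), which grows without bound as the loop gain A*b approaches 1.

```python
def regenerative_gain(open_loop_gain, feedback_fraction):
    """Overall gain of an idealized amplifier with positive feedback.

    Returns infinity once the loop gain reaches 1, the point at which
    the circuit sustains its own oscillations instead of amplifying.
    """
    loop_gain = open_loop_gain * feedback_fraction
    if loop_gain >= 1.0:
        return float("inf")
    return open_loop_gain / (1.0 - loop_gain)


if __name__ == "__main__":
    # Hypothetical tube stage with a gain of 10 and increasing feedback.
    for fraction in (0.0, 0.05, 0.09, 0.099, 0.2):
        print(fraction, regenerative_gain(10.0, fraction))
```

In this model a small increase in feedback near the threshold produces a very large increase in gain, and any feedback beyond the threshold turns the amplifier into an oscillator.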

Armstrong’s second invention would not have been possible without his first. Fessenden had, in 1901, introduced the heterodyne principle: if two tones of frequencies A and B are combined, one may hear a tone with frequency A minus B. Armstrong used this principle in devising what came to be called the superheterodyne receiver. This device converted the high-frequency received signal to one of intermediate frequency by combining it with a signal from an oscillator in the receiver, then amplifying that intermediate-frequency signal before subjecting it to the detection and amplification usual in receivers. The circuit greatly improved the ability to tune radio channels, simplified that tuning for consumers, and lowered the cost of electronic components to process signals. Armstrong developed the circuit while attached to the U.S. Signal Corps in Paris in 1918. RCA marketed the superheterodyne beginning in 1924, and soon licensed the invention to other manufacturers. It became, and remains today in software-defined radio receivers, the standard type of radio receiver.
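
The heterodyne principle can be checked numerically. The sketch below assumes an ideal mixer that multiplies the two signals, which is a simplification of any real receiver stage; the function names and the 455 kHz intermediate frequency are illustrative assumptions, not values from the text. The product of two cosines at frequencies A and B equals half the sum of a cosine at A minus B and a cosine at A plus B, so the difference tone is always present in the mixer output.

```python
import math


def mix(f_a, f_b, t):
    """Output of an ideal multiplying mixer at time t (seconds)."""
    return math.cos(2 * math.pi * f_a * t) * math.cos(2 * math.pi * f_b * t)


def sum_and_difference(f_a, f_b, t):
    """The same signal written as difference and sum frequency components."""
    difference = math.cos(2 * math.pi * (f_a - f_b) * t)
    total = math.cos(2 * math.pi * (f_a + f_b) * t)
    return 0.5 * (difference + total)


def local_oscillator_hz(station_hz, intermediate_hz):
    """Oscillator setting that shifts a station down to the intermediate frequency."""
    return station_hz + intermediate_hz


if __name__ == "__main__":
    # Hypothetical tones of 1000 Hz and 900 Hz: the difference tone is 100 Hz.
    for step in range(5):
        t = step * 1e-4
        print(t, mix(1000.0, 900.0, t), sum_and_difference(1000.0, 900.0, t))
    print(local_oscillator_hz(1_000_000, 455_000))
```

Because the oscillator tracks the tuning dial, every station is shifted to the same intermediate frequency, so the amplifier stages that follow need to be designed for only one frequency.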

Fessenden had demonstrated radio’s use as a broadcast medium all the way back in 1906, and now transmission and receiver technology meant that it could be more than a novelty or hobby. Despite the threat of interception, radio was used extensively in World War I for point-to-point communication, but after the war inventors and entrepreneurs again began to explore the commercial application of broadcasting. U.S. military officials argued that radio should be a government-owned monopoly, as it was in most countries, rather than be reopened to private ownership and development. The IRE joined this argument on the side of private development, reminding Congress and the public that the radio field still faced important technical challenges. Government interference would impede technical creativity, its leaders argued in testimony before Congress.

A crisis occurred in 1919 when the British-owned American Marconi Company proposed to buy the rights to the Alexanderson alternator from the General Electric Company, intending to maintain its monopoly position in the radio field. In Washington at that time, a monopoly might have been considered allowable, but certainly not if that monopoly was to be held by a foreign government. Seeking quick action, President Wilson turned to the General Electric Company to maintain American control of the radio industry. General Electric purchased the American Marconi Company for about $3 million, and proceeded to organize a new entity, the Radio Corporation of America. A few months later, AT&T joined RCA by purchase of stock, giving the new corporation control of the de Forest triode patents as well as those of Langmuir and Arnold.

Beginning around 1919, entrepreneurs in the United States and elsewhere began to experiment with what was to become commercial radio. Westinghouse Radio Station KDKA was a world pioneer of commercial radio broadcasting. Transmitting with a power of 100 watts on a wavelength of 360 meters, KDKA began scheduled programming with the Harding-Cox Presidential election returns on 2 November 1920. The broadcast began at 6 p.m. and ended at noon the next day. From a wooden shed atop the K building of the Westinghouse Company's East Pittsburgh works, five men informed and entertained an unseen audience for eighteen hours. Conceived by C.P. Davis, broadcasting as a public service evolved from Frank Conrad’s weekly experimental broadcasts over his amateur radio station 8XK, which attracted many regular listeners who had wireless receiving sets. In 1994, IEEE recognized the pioneering KDKA broadcast with an IEEE Milestone.[8]

INSERT Fig. 1-52. Photo, IEEE Milestone dedication on 1 June 1994.

By 1922, there were more than 500 American broadcast stations jammed into a narrow frequency band, and a search was on for a method to narrow the frequency band used by each station. In the usual technique, known as amplitude modulation (AM), the amplitude of the carrier wave is regulated by the amplitude of the audio signal. With frequency modulation (FM), the audio signal instead alters the frequency of the carrier, shifting it down or up to mirror the changes in amplitude of the audio wave. In 1922, John R. Carson of the Bell engineering group wrote an IRE paper that discussed modulation mathematically. He showed that FM could not reduce the station bandwidth to less than twice the frequency range of the audio signal. Because FM could not be used to narrow the transmitted band, it was dismissed as not useful.

In the late 1920s, Armstrong turned his attention to what seemed to him and many other radio engineers to be the greatest problem, the elimination of static. "This is a terrific problem,” he wrote. “It is the only one I ever encountered that, approached from any direction, always seems to be a stone wall." Armstrong eventually found a solution in frequency modulation. He soon found it necessary to use a much broader bandwidth than AM stations used (today an FM radio channel occupies 200 kHz, twenty times the bandwidth of an AM channel), but doing so gave not only relative freedom from static but also much higher sound fidelity than AM radio offered.
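
The bandwidth figures above can be related with a rough calculation. The sketch uses the approximation now commonly called Carson's rule, in which an FM signal occupies about twice the sum of the peak frequency deviation and the highest audio frequency. The specific numbers are assumptions for illustration: a 75 kHz peak deviation and 15 kHz audio for FM, and 5 kHz audio for AM; none of them comes from the text.

```python
def am_bandwidth_hz(max_audio_hz):
    """AM occupies a band twice the highest audio frequency (two sidebands)."""
    return 2 * max_audio_hz


def fm_bandwidth_hz(peak_deviation_hz, max_audio_hz):
    """Approximate FM bandwidth by Carson's rule: 2 * (deviation + audio)."""
    return 2 * (peak_deviation_hz + max_audio_hz)


if __name__ == "__main__":
    # Assumed illustration values, not figures from the article.
    print(am_bandwidth_hz(5_000))
    print(fm_bandwidth_hz(75_000, 15_000))
    # Even with no deviation at all, FM needs twice the audio range.
    print(fm_bandwidth_hz(0, 15_000))
```

With these assumed values the FM signal fits inside a 200 kHz channel, and the formula also shows why FM can never occupy less than twice the audio range: the deviation term only adds to the bandwidth.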

With the four patents for his FM techniques that he obtained in 1933, Armstrong set about gaining the support of RCA for his new system. RCA engineers were impressed, but the sales and legal departments saw FM as a threat to RCA's corporate position. David Sarnoff, the head of RCA, had already decided to promote television vigorously and believed the company did not have the resources to develop a new radio medium at the same time. Moreover, in the economically distressed 1930s, better sound quality was regarded as a luxury, so there was not thought to be a large market for products offering it.

Armstrong gained some support from General Electric and Zenith, but he carried out the development and field-testing of a practical broadcasting system largely on his own. He gradually gained the interest of engineers, broadcasters, and radio listeners, and, in 1939, FM broadcasts were coming from twenty or so experimental stations. These stations could not, according to FCC rules, sell advertising or derive income in any other way from broadcasting. Finally, in 1940, the FCC decided to authorize commercial FM broadcasting, allocating the region of the spectrum from 42 MHz to 50 MHz to forty FM channels. In October 1940, it granted permits for fifteen stations. Zenith and other manufacturers marketed FM receivers, and by the end of 1941, nearly 400,000 sets had been sold.

Armstrong received many honors, including the Edison Medal from the American Institute of Electrical Engineers and the Medal of Honor from the Institute of Radio Engineers. Thus, he received the highest awards from both of IEEE’s predecessor organizations.

On 4 March 1921, the first attempt to transmit a presidential inaugural address by radio from the Capitol, that of Warren G. Harding, was unsuccessful. On 4 March 1925, the first national radio broadcast of an inauguration occurred when President Calvin Coolidge took the oath of office on the East Front of the Capitol. More than 22,000,000 households reportedly tuned in for the broadcast.

On 22 February 1927, Congress passed H.R. 9971, the Radio Act of 1927, providing government control over airwaves, licensing, and standards. The act also created the Federal Radio Commission (the precursor to the Federal Communications Commission). President Calvin Coolidge signed the Radio Act on 23 February 1927. A year later, Congress enhanced the Radio Act with passage of the Davis Amendment, S. 2317, which expanded radio technology to rural communities.

The Growth of RCA

In 1920, Westinghouse bought Armstrong's regenerative-circuit and superheterodyne patents for $335,000. Having entered the radio field late, it now had a bargaining position. It entered the RCA group in 1921. Essentially a patent-holding corporation, RCA freed the industry of internecine patent fights. It also placed tremendous power in one corporation. Entrepreneurs, however, could enter the radio-manufacturing business with only a small investment and infringe major patents, gambling that technical advances would dilute a patent's value well before legal action could be taken.

Among the three major stockholders of RCA in 1921, the licensing agreement seemed to parcel out the opportunities neatly. AT&T was assigned the manufacture of transmitting and telephone equipment. General Electric and Westinghouse, based on RCA holdings of Armstrong's patents, divided receiver manufacturing by sixty and forty percent, respectively. RCA, which was forbidden to have manufacturing facilities, operated the transatlantic radio service but otherwise was a sales organization.

RCA had another major asset in David Sarnoff, its commercial manager. Brought to the United States from Russia at the age of nine, Sarnoff started as an office boy with Marconi, learned radio operation in night school, and served as a radio operator on a ship. He had advocated broadcasting entertainment and information since 1915 to promote sales of home radios, but his superiors continued to focus on point-to-point communications. At RCA, in July 1921 he helped promote a boxing match as the first broadcast sports event. Up to 300,000 people listened, helping drive sales of home radios, and RCA became a much larger company than anyone else foresaw. Sarnoff was promoted to general manager of RCA in 1921.

Radio had a big cultural impact. It exposed listeners to a wide range of events to which they would not otherwise have had access: sporting events, political conventions, speeches, and entertainment. The National Broadcasting Company, established in 1926 by AT&T, General Electric, Westinghouse, and RCA, initially reached up to fifty-four percent of the American population.

Sarnoff became president of RCA in 1930, the year the RCA agreement was revised and the ten-year prohibition on manufacturing ended. RCA took over manufacturing plants in Camden and Harrison, NJ. AT&T retained rights to the use of tubes in the communication services and sold its radio station, WEAF, to RCA.

During the Great Depression, Sarnoff allocated $10 million to develop an electronic television system, based largely on the work of Vladimir Zworykin, who invented a practical electronic camera tube. After World War II, Sarnoff championed electronic color television in an eight-year battle with rival company CBS, which advocated an electromechanical system. His support of innovation at the RCA Laboratories in Princeton, New Jersey (later renamed the David Sarnoff Research Center), led to the establishment of a U.S. color TV standard in 1953.

INSERT Fig. 1-53. Photo, RCA Lab/David Sarnoff Research Center.

Tools of War

Technology has always played a role in wartime, often a decisive one. World War I has often been called the first technoscientific war, but although it followed on the heels of early advances in internal combustion engines, aeronautics, electric power, and radio, it was largely fought in nineteenth-century style. Edison, however, realized that technological advances would change the way wars are fought. Even before the United States' entry into the war, he advocated that the country take pains to preserve its technological advantages. He was in favor of stockpiling munitions and equipment and building up soldier reserves. The government came around to the notion that technology would be the key to winning future wars, and that concept was borne out in World War II.

Interestingly, although governments were finally going to move into electrical engineering research and development in anticipation of the next war, engineering education in the years from 1918 to 1935 was almost static. Professors continued primarily to hold bachelor's degrees, and most had some years of practical experience, which was viewed as more important than theoretical or mathematical proficiency. Few teachers held master's degrees, and fewer had doctorates. Many teachers assumed that a student would need no knowledge beyond what the teacher had used in his own career. There was little thought of preparing the students for change in the field. For instance, complex algebra, which Charles P. Steinmetz advocated in circuit analysis before the AIEE in 1893, had not been fully adopted on the campus by 1925.

Frederick E. Terman (IRE President, 1941) reported that electrical research productivity from 1920 to 1925 averaged nine technical papers per year from university authors published in the AIEE Transactions, but three-quarters of those papers came from only five institutions. In the Proceedings of the IRE, more than half of the thirty papers from educational sources published in those five years were submitted by authors in physics departments.

The better students, disenchanted with boring work on the drafting table and in the shop, and by heavy class schedules, transferred to engineering physics, which included more science and mathematics in preparation for work in the industrial research field that began to flourish in 1925. During this period, electrical engineering educators failed to teach advances in physics, such as Max Planck's radiation law, Bohr’s quantum theory, wave mechanics, and Einstein’s work. Even radio, well advanced by 1930, was overlooked by most educators.

The complexities of radio and electronics attracted some students to a fifth year of study for a Master's degree. The large manufacturers began to recognize the value of a Master's degree in 1929, increasing the starting salary for engineers from $100 per month to $150, with ten percent added for a Master's degree. The value of graduate work and on-campus research programs was finally demonstrated only just before World War II, when new electronics-oriented corporations, financed with local venture capital, began to appear around M. I. T. and west of Boston. These were owned or managed by doctorate graduates from M. I. T. or Harvard who had developed ideas in thesis work and were hoping for a market for their products. Another very significant electronics nursery, later known as Silicon Valley, appeared just south of Palo Alto, California, a development that resulted in large part from Frederick Terman's encouragement of electronics research at Stanford University. The Hewlett-Packard Company began with a $500 fellowship to David Packard in 1937. He and William Hewlett developed the resistance-capacity (RC) oscillator as their first product, and manufacture started in a garage and Flora Hewlett's kitchen. Many other companies in the instrumentation and computer fields have similar backgrounds and histories. Even with these advances, electrical engineers were ill equipped to serve as researchers in the laboratories exploring the new fields opened by the technologies of war, technologies that would be a decisive factor in World War II.

One important observation in the years leading up to World War II was that radio waves are reflected by objects whose electrical characteristics differ from those of their surroundings, and that the reflected waves can be detected. In 1887, when Heinrich Hertz proved that radio waves exist, he also observed that they could be reflected.

A secret development to use radio waves for detection and ranging began in 1922, when A. Hoyt Taylor and L. C. Young of the U.S. Naval Research Laboratory reported that the transmission of 5-m radio waves was affected by the movements of naval vessels on the nearby Anacostia River. They also suggested that the movements of ships could be detected by such variations in the signals. (The word "radar" was invented in 1941 by the U.S. Navy as an abbreviation of "radio detection and ranging.") Returning to this work in 1930, Taylor succeeded in designing equipment to detect moving aircraft with reflected 5-m waves.

In 1932, the Secretary of the Navy communicated Taylor's findings to the Secretary of War to allow application to the Army's antiaircraft weapons; the secret work continued at the Signal Corps Laboratories. In July of 1934, Director of the Signal Corps Laboratories Major William R. Blair proposed "a scheme of projecting an interrupted sequence of trains of oscillations against the target and attempting to detect the echoes during the interstices between the projections." This was the first proposal in the United States for a pulsed-wave radar.
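The ranging principle behind such a pulsed scheme can be sketched in a few lines. The example below is a minimal illustration, not a description of the Signal Corps equipment: it assumes free-space propagation at the speed of light, and the function names and sample timings are hypothetical. An echo that arrives a time t after the pulse was sent has traveled out and back, so the target range is c·t/2; the gap between pulses limits the farthest range that can be measured without ambiguity.

```python
C = 299_792_458.0  # speed of light in a vacuum, m/s


def echo_range_m(delay_s: float) -> float:
    """Target range from the round-trip delay of an echo (out and back)."""
    return C * delay_s / 2.0


def max_unambiguous_range_m(pulse_interval_s: float) -> float:
    """Farthest range whose echo returns before the next pulse is sent."""
    return C * pulse_interval_s / 2.0


# Hypothetical timings chosen only for illustration.
print(f"Echo after 1 ms: target at about {echo_range_m(1e-3) / 1000:.1f} km")
print(f"Pulses every 4 ms: ranges out to about "
      f"{max_unambiguous_range_m(4e-3) / 1000:.1f} km are unambiguous")
```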

By 1936, the Signal Corps had begun work on the first Army antiaircraft radar, the SCR 268, and a prototype was demonstrated to the Secretary of War in May 1937. It operated at a wavelength of 3 m, later reduced to 1.5 m. Navy designs for shipboard use operated at 50 cm and later 20 cm, the shorter wavelengths improving the accuracy of the direction-finding operation.

Earlier, Sir Robert Watson-Watt of the National Physical Laboratory in England had independently worked along the same lines, and he successfully tracked aircraft in 1935. The urgency felt in Britain was such that the British military also started work on "early warning" radar using 12-m pulsed waves. The first of these "Chain Home" stations was put in place along the Thames estuary in 1937, and when Germany occupied Czechoslovakia in November 1938, these stations and others were put on continuous watch and remained so for the duration of the ensuing war. In 1940, British ground-based radars were used to control 700 British fighter aircraft defending against 2,000 invading German planes. This radar control of fighter planes has been credited with the decisive role in the German defeat in the Battle of Britain.

INSERT Fig. 1-54 and 1-55. Photos, Military Technology - Sonar and Radar.

Antenna dimensions must be proportional to the wavelength (ideally one-half of it). The wavelengths of the early radars required antennas too large for mounting in aircraft, yet the need was apparent. If a fighter pilot could detect an enemy aircraft before it came into view, he could engage the enemy with advantages of timing and maneuver not otherwise obtainable. On bombing missions, airborne radar could assist in navigating to and locating targets.
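To make the size problem concrete, the sketch below computes the half-wavelength dimension, and the corresponding frequency, for wavelengths mentioned in this section. It assumes a simple half-wave element in free space; real antenna arrays are more elaborate, and the function names are illustrative only.

```python
C = 299_792_458.0  # speed of light in a vacuum, m/s


def half_wave_length_m(wavelength_m: float) -> float:
    """Length of an ideal half-wave antenna element, in meters."""
    return wavelength_m / 2.0


def frequency_mhz(wavelength_m: float) -> float:
    """Frequency in MHz corresponding to a free-space wavelength."""
    return C / wavelength_m / 1e6


# Wavelengths cited in the text: Chain Home (12 m), SCR 268 (3 m, 1.5 m),
# and the microwave radars (10 cm, 3 cm).
for wavelength in (12.0, 3.0, 1.5, 0.10, 0.03):
    print(f"wavelength {wavelength:6.2f} m -> "
          f"half-wave element {half_wave_length_m(wavelength):6.3f} m, "
          f"about {frequency_mhz(wavelength):8.1f} MHz")
```

A 6-m element is impractical on a fighter aircraft, whereas a 5-cm element is not, which is why the push toward centimeter wavelengths mattered.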

The prime need was for a high-power transmitting tube, capable of pulse outputs of 100,000 watts or more at wavelengths of 10 cm or less. Such powers were needed because the weak signal reflected from a distant target back to the radar receiver was otherwise undetectable.
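The reason for such high powers can be illustrated with the standard monostatic radar range equation, in which the echo power falls off with the fourth power of range. The sketch below is a simplified free-space model with no losses; the antenna gain, target cross section, and range are assumed values chosen for illustration and do not describe any particular wartime set.

```python
import math


def received_power_w(p_transmit_w: float, gain: float, wavelength_m: float,
                     cross_section_m2: float, range_m: float) -> float:
    """Echo power from the monostatic radar range equation (free space, no losses):

        P_r = P_t * G^2 * lambda^2 * sigma / ((4*pi)^3 * R^4)
    """
    numerator = p_transmit_w * gain ** 2 * wavelength_m ** 2 * cross_section_m2
    denominator = (4.0 * math.pi) ** 3 * range_m ** 4
    return numerator / denominator


# Assumed, illustrative values: 100 kW pulse, 10-cm wavelength,
# antenna gain of 1000 (30 dB), 10 m^2 target.
for range_km in (25.0, 50.0, 100.0):
    p_r = received_power_w(100e3, 1000.0, 0.10, 10.0, range_km * 1000.0)
    print(f"range {range_km:5.1f} km -> received power {p_r:.2e} W")
```

Each doubling of range cuts the echo power by a factor of sixteen, so even a 100-kW pulse returns only a minute fraction of a watt from a distant aircraft.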

An answer to the problem came with the advent of a new form of vacuum tube, the cavity magnetron. In it, dense clouds of electrons swirl in a magnetic field past cavities cut in a copper ring. The electrical effect is much like the acoustic effect of blowing across the mouth of a bottle: electrical oscillations build up across the cavities and produce powerful waves of very short wavelength. The device was invented at the University of Birmingham, and the British General Electric Company produced a design in July 1940.

The scientific advisers to Prime Minister Winston Churchill faced a problem: active development of microwave radar would create shortages of men and material then being devoted to extending the long-range radar defenses. They suggested to Churchill that the Americans, not yet at war, be shown the new magnetron and asked to use it to develop microwave radar systems. Vannevar Bush set up the National Defense Research Committee, which in August 1940 met with British representatives to see a sample device.

The American response was enthusiastic. In September, the U.S. military set up a new center for microwave radar research and development, the M. I. T. Radiation Laboratory. Lee DuBridge, then head of the Department of Physics of the University of Rochester, was appointed its director. Concurrently, the British magnetron was copied at the Bell Laboratories and judged ready for production.

At that time, the technology of microwaves was in its infancy. Little had been translated from basic equations to hardware, and few engineers were engaged in the field. Those who were available were asked to join the M. I. T. "Rad Lab," but the main source of talent was the community of research-oriented academic physicists. Among those recruited to the Laboratory were three men who later were awarded Nobel Prizes in physics: I. I. Rabi, E. M. Purcell, and L. W. Alvarez.

By 1942, a cavity magnetron was capable of producing a peak pulse power of two million watts. The model SCR 584 10-cm ground radar, designed for tracking enemy aircraft and leading friendly aircraft home, was an early success. Smaller and lighter radars operating at 3 cm and 1 cm were quickly adopted by bomber missions for location of ground targets. Ground-based radars for early warning of impending attack were developed, too.