Oral-History:Mischa Schwartz

About Mischa Schwartz

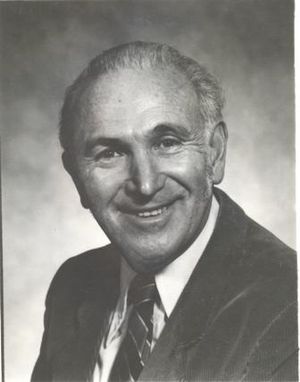

Mischa Schwartz received his bachelor’s degree from Cooper Union, his master’s from Brooklyn Polytechnic, and his PhD (1951) from Harvard, the last thanks to a Sperry Graduate Fellowship. He worked at Sperry from 1947 to 1952, largely on issues of signal detection theory (also the subject of his dissertation). He was a professor at Brooklyn Poly from 1952 to 1973 (head of the EE department 1961-66), where he established a telecommunications group, and since then has been a professor at Columbia, where he helped found the Center for Telecommunications Research (CTR) in 1985 and served as its director until 1988. His research included coincidence detection and sequential detection through the mid-1960s; then, with the development of SABRE, SAGE, and ARPANet, he switched focus to computer networks, particularly performance analysis and queuing theory. He worked on setting standards for networks with the CCITT, CCIR, ISO, and NRC. He has been involved with the IEEE and its Information Theory Group and Communication Society for much of his career, including stints as president of the Communication Society. He has published at least three textbooks: Information Transmission, Modulation, and Noise (1959), Computer Communication Network Design (1977), and Telecommunications Networks: Protocols, Modeling, and Analysis (1987). In the interview he mentions several of his doctoral students, discusses the achievements of the field in general and of institutions with which he is affiliated, such as the CTR, in particular, and identifies central topics in the field.

About the Interview

MISCHA SCHWARTZ: An Interview Conducted by David Hochfelder, IEEE History Center, 17 September 1999

Interview # 360 for the IEEE History Center, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

Mischa Schwartz, an oral history conducted in 1999 by David Hochfelder, IEEE History Center, Piscataway, NJ, USA.

Interview

Interview: Mischa Schwartz

Interviewer: David Hochfelder

Date: 17 September 1999

Place: Columbia University, New York

World War II influences on radar, communication theory

Schwartz:

As my first job, fresh out of school in 1947, I was lucky to get a radar systems job at Sperry Gyroscope Company, which had pioneered in radar during the war. I had a wonderful group to work with, and in the process of doing that, I got heavily involved in communication theory, so I come at the field of telecommunications from communication theory. Before World War II, communications was broadly considered to be the two areas of radio and telephony. Much of it was “seat-of-the-pants” engineering. For example, one of the textbooks we used in those days was the book Radio Engineering by Frederick Terman, who was one of the real pioneers going back to the ’30s. That’s strictly circuit after circuit, with very little analysis or overall systems orientation. I think World War II changed that. At least from my perspective it did.

In our work, many of us learned from the work at the Radiation Laboratory at MIT during World War II, which put out a whole series of books on radar. While working in radar and communication theory, post-1947, I used a lot of that material. At the same time there were people at Bell Labs also working in that area. Claude Shannon, for example, the founder of, and a giant in, information theory, did a lot of work during World War II on problems related to communication and control for the military. Norbert Wiener at MIT was also doing work of that type. So, this all came out of problems during World War II, much of it having to do with trying to improve radar, communications, and system control technology. There were many physicists and mathematicians working on radar at the MIT Rad Lab.

That’s my own view. They developed a systems approach that had been missing before. You had very good physicists working, good mathematicians, and very good engineers. The systems concepts had been slowly developing, but they really came together there. For example, problems such as detecting signals in noise, a critical issue in communications, came out of work on radar.

Education; Sperry Gyroscope Company employment

Schwartz:

I worked in this group at Sperry for two years, 1947-1949, coming in as a young kid, fresh out of school. I had just received a degree from Cooper Union. I started Cooper Union early on during the war and got drafted. I came back after the war, and luckily I had a good advisor who pushed me out in a hurry. He said I didn’t have to take certain courses, and so I got out in ’47. I started a master’s degree at night at Brooklyn Poly and I was teaching at Cooper Union, too. When you’re young you have a lot of energy to do all kinds of things simultaneously.

At any rate, I got fascinated. Now, here I am fresh out of Cooper Union, I get involved with the radar systems group at Sperry, and the first thing I had to learn was probability. I never took a course in probability theory, but here I’m being asked to study signals in noise. What is noise? What are signals? How do you represent these things? What do you mean by detecting signals in noise? Because this was a critical issue in radar. So I did a quick learning process, and I must say in all honesty that sometimes I feel that I’m not as good in probability as I should be! All my work has been in statistical communications technology. But I never had a formal course in the subject, so it’s all “seat-of-the-pants” self-learning. I have taught numerous courses in statistical concepts, but somehow or other I still feel I missed the rigorous approach needed to truly learn probabilistic concepts. Anyway, it turned out one of the key areas that we had to learn quickly was noise representation. That was a very hot topic.

First of all, in radar, how do you pull up signals from noise? I used two primers at that time. One book of the Radiation Laboratory’s series was on the detection of signals in noise, so I went through that. The other was a classic series of papers written by Steve Rice, S. O. Rice, at Bell Laboratories in 1944 on mathematical noise representation. I found it very difficult as a young kid without enough background in probability when these guys are tossing this stuff at me. For instance, spectral analysis—I didn’t know what that was. The problem is in school it was all seat-of-the-pants kinds of things. Design electronic circuits—there’s no system orientation of any kind. Now suddenly you have to learn about spectrum analysis. Some of this had appeared earlier in the literature but really hadn’t been done in the schools. The whole field has changed now. This came out of World War II where people were dealing with noise representation, representation of signals, spectral analysis.

At the same time, in 1948 (the year after I started at Sperry) hot in the air was Shannon’s work on information theory that came out at that time. That was an eye-opener. I didn’t quite understand all of it either, and that took a lot of learning. So everything was really very exciting in those days, I must say. The whole idea of communication theory: how to improve transmission of signals in the presence of noise; how you cope with this in radio, how you cope with this in radar-related ventures. But starting with radar and moving into radio, now, how do you handle that? How do you carry out telephone transmission in the presence of noise? All of these ideas began to gel and come together based on work during the war by Shannon, Wiener, Steve Rice at Bell Labs, and the physicists who worked at Rad Lab. There was a lot of work being done. Some of this work was still being done in the classified area. There was a very famous report being done by a man named Marcum, who was one of the first to do some basic work on detection of signals in noise for radar problems. This was declassified a long time ago and it has been published in a classic set of papers. Our work at Sperry was sort of similar. We used some of his work, and we went beyond his to some extent. So this was “hot in the air” in those days, and that was the most exciting thing.

Nowadays, we look back and we say that’s only part of the communications process, what you call the physical layer. We didn’t call it that in those days. But nobody thought about going up higher or anything like that; just get those signals across and noise is your big problem. So, how do you handle it? How do you characterize the noise? How do you characterize the signals? How do you handle problems of this type? So that’s where things were gelling in those days.

Ph.D. studies, Sperry Graduate Fellowship

Schwartz:

Early in 1949, after I had been there almost two years, Sperry Gyroscope introduced a new doctoral program for people working at Sperry. I applied and was lucky enough to get the award. The most amazing thing is they say to pick any school I want in the country. “So, how much will I get?” “Well, you figure out what it’s going to cost.” I mean, nobody had a price, believe it or not. I could have gone anywhere, assuming I had been accepted. Luckily, I’m an honest guy. I had good grades in my evening Master’s program at Brooklyn Polytech, and as a young kid I had always heard Harvard was the best university in the country. Maybe not the best place for electrical engineering, but I decided to go to Harvard, and I’m glad I did.

So I went to Harvard in ’49. Harvard had had an engineering school, but they closed it down just as I arrived there, so I went into the Applied Physics program. I was really doing communications work as well as applied physics, but you could do anything you pleased. I submitted a proposal to Sperry to cover my costs there and I thought I was limited in the amount of money, so I really didn’t ask for much. But as the “richest” graduate student on campus, I bought an old car; nobody else could do that. I wasn’t making much money, but it was still better than most students were getting in those days. I was very thankful to Sperry for the Sperry Graduate Fellowship. It was very nice. Summers I came back to work at Sperry.

I went to Harvard and looked around for a thesis topic. I got an advisor, a man named Pierre LeCorbeiller, who was a physicist. He said to me, “I’ve got a nice problem on the double pendulum that I’d like you to solve. Solve it, you get the degree.” I said, “I’m not really interested.” I took some reading courses with him on nonlinear mechanics, stuff like that. So I went scouting around for a thesis topic. I even went over to MIT to talk to some well-known electrical engineers there. One guy was a doctoral student, Bill Huggins, from Johns Hopkins whose work I’d known about earlier. I couldn’t get a topic, so I decided to extend the work that I’d done at Sperry. I’m happy that I did because it turned out to be wonderful: it expedited my getting the doctorate. My first year at Harvard, I took courses and I took the doctoral qualifying exam. The second year, I spent a few months on the thesis and I finished up in two years, very quickly. They tell me the thesis was very good. Somebody once said it’s the most dog-eared thesis at Harvard in that field, because it was a hot topic. It was on the detection of signals in noise as applied to radar, but extending the work that Marcum had done and the work we had done at Sperry. So it really was detection theory, if you wish.

Signal detection in noise; thesis and publications

Schwartz:

I tell my students I believe in serendipity. I like browsing, and I suddenly find something that can be useful. This was the case with my doctoral work. You realize the problem, the detection of signals in noise, is statistical, so you look through the statistics literature. It turns out statisticians had done work similar to this. They may not have used the term noise, they may not have used the term signal, but instead talked of detecting something in the presence of interference. Something like that. In particular, both Marcum and I came across this. Two statisticians named Neyman and Pearson had done work on the theory of statistical detection that I applied to the problem of radar. It enables you to find optimization techniques. For example, if you have a signal in the presence of Gaussian noise, the model everybody uses, the theory says that the optimum thing to do (which is what people were doing all along) is to take these signals, one after the other, and just add them up. After a certain period of time, you stop and say that if the sum of those signals exceeds a certain threshold based on the noise, then you call it a signal present. Otherwise, you call it noise present.

See, they came up with the nice idea that you have what’s called the probability of success and the probability of making a mistake; you have to incorporate both. A lot of books have been written on this. One of my books talks about that too. What you do is to say, “I want the probability of a mistake to be less than a specified amount.” In our case, the probability of mistaking noise for signal is to be less than a certain value. That sets a threshold. Then you maximize the probability of success in detecting a signal when it does appear. The procedure tells you the way to handle this. If it turns out noise is Gaussian, just add up the signal samples and set a level, depending on the probability of false alarm, the probability of mistake. Some of this work had already been done at the Rad Lab, but not in this clear form. They had done similar things, because, if you think about it a while, it’s almost an obvious thing to do.
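The summing detector and its Neyman-Pearson threshold can be sketched in a few lines of Python. This is a minimal illustration, not Schwartz's own formulation: it assumes a constant (non-fluctuating) signal of known amplitude in independent, zero-mean Gaussian noise of known standard deviation, and the function names are hypothetical. The threshold is fixed by the allowed false-alarm probability, and the detection probability then follows from the Gaussian distribution of the sum.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # zero-mean, unit-variance Gaussian


def np_threshold(n: int, sigma: float, p_fa: float) -> float:
    """Threshold on the sum of n noise-only samples giving false-alarm probability p_fa.

    With noise alone, the sum of n i.i.d. N(0, sigma^2) samples is N(0, n * sigma^2).
    """
    return sqrt(n) * sigma * STD_NORMAL.inv_cdf(1.0 - p_fa)


def detection_probability(n: int, amplitude: float, sigma: float, p_fa: float) -> float:
    """Probability that the sum crosses the threshold when the signal is present."""
    threshold = np_threshold(n, sigma, p_fa)
    # With the signal present, the sum is N(n * amplitude, n * sigma^2).
    z = (threshold - n * amplitude) / (sqrt(n) * sigma)
    return 1.0 - STD_NORMAL.cdf(z)


def decide(samples: list[float], sigma: float, p_fa: float) -> bool:
    """Call 'signal present' if the sum of the samples exceeds the threshold."""
    return sum(samples) > np_threshold(len(samples), sigma, p_fa)


if __name__ == "__main__":
    # Adding more samples raises the detection probability at a fixed false-alarm rate.
    for n in (1, 4, 16):
        pd = detection_probability(n, amplitude=1.0, sigma=1.0, p_fa=1e-3)
        print(f"N={n:2d}  P_D={pd:.3f}")
```

Holding the false-alarm probability fixed and letting the number of summed samples grow shows why integrating many returns pays off: the signal part of the sum grows like N while the noise spread grows only like the square root of N.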

So I pursued that for my doctoral thesis. I extended this work in my thesis to handle a signal that is fading, or fluctuating in amplitude. I also came up with another detection technique when I said, “But, gee, I might try other techniques instead of adding the signals up. Maybe there are simpler techniques.” These apply to communications obviously, because it’s the same problem of detecting a signal in the presence of noise. So I developed a technique called coincidence detection, taking a number of these radar signals coming in to see if each signal separately exceeds a certain level. If it does, you count it; then, after a certain interval of time, you total the number of instances above that level. So it’s a counter rather than an adder. I thought it might be easier to implement, and I compared that with the optimum Gaussian adding procedure and showed that it does very well.

You have a bunch of signal-plus-noise samples coming in; they may be fluctuating because the signal is fluctuating. Any sample that comes in could be noise, so you set a threshold. As each one comes in, you ask whether it is above the threshold. You set a counter, so every time a sample crosses the threshold, the counter advances. The radar can only handle a certain number, so you set a certain fixed number to come in, in a certain period of time. You say, “I’ll call it a signal rather than noise,” if K of N samples exceed that level, and the value of K depends on the noise level and on the signal level. Not only that, I found by just playing around that when you study a lot of these in large numbers, there is an optimum value of K, in terms of maximizing the probability of success, for example. So that was a chapter of my thesis, and it was later published as a paper. The radar people picked up on this paper, and it was later cited as a classic paper in the field.

Not only that. I found to my amazement later on people saying this was one of the first papers on non-parametric detection, because the coincidence detection technique was non-parametric. It didn’t count on the optimum Gaussian statistics; it simply said count the number above a level. That’s all I did. It was very nice.
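The K-of-N counter can be sketched the same way. For simplicity this version assumes Gaussian samples with unit noise variance and a constant signal; Schwartz's thesis also treated fluctuating signals, which this sketch does not. For each K it chooses the per-sample level that holds the overall false-alarm probability fixed, then searches over K for the highest detection probability. All function names are hypothetical.

```python
from math import comb
from statistics import NormalDist

STD_NORMAL = NormalDist()  # noise samples assumed N(0, 1)


def binomial_tail(n: int, k: int, p: float) -> float:
    """Probability that at least k of n independent samples cross the level."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def per_sample_rate(n: int, k: int, p_fa: float) -> float:
    """Per-sample exceedance probability p that makes the overall false-alarm rate p_fa.

    binomial_tail(n, k, p) increases with p, so bisection on [0, 1] converges.
    """
    lo, hi = 0.0, 1.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if binomial_tail(n, k, mid) < p_fa:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def k_of_n_detection(n: int, k: int, amplitude: float, p_fa: float) -> float:
    """Detection probability of the K-of-N counter for a constant signal in N(0, 1) noise."""
    p = per_sample_rate(n, k, p_fa)
    level = STD_NORMAL.inv_cdf(1.0 - p)          # per-sample threshold
    q = 1.0 - STD_NORMAL.cdf(level - amplitude)  # per-sample hit probability with signal
    return binomial_tail(n, k, q)


def best_k(n: int, amplitude: float, p_fa: float) -> int:
    """Try every K from 1 to n and keep the one with the highest detection probability."""
    return max(range(1, n + 1), key=lambda k: k_of_n_detection(n, k, amplitude, p_fa))


def coincidence_decide(samples: list[float], level: float, k: int) -> bool:
    """Count samples above the level; call 'signal present' if the count reaches k."""
    hits = sum(1 for x in samples if x > level)
    return hits >= k


if __name__ == "__main__":
    n, amplitude, p_fa = 16, 1.0, 1e-3
    for k in (1, 4, 8, 12, 16):
        print(f"K={k:2d}  P_D={k_of_n_detection(n, k, amplitude, p_fa):.3f}")
    print("best K:", best_k(n, amplitude, p_fa))
```

Once the level is set for a given per-sample exceedance probability, the overall false-alarm probability is just the binomial tail, whatever the shape of the noise distribution; the Gaussian assumption enters only when converting that probability into a level and when computing the detection probability. This is one way to see the non-parametric character of the counter.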

So I published these papers. I came back to Sperry after two years. I had nobody to guide me; my advisor didn’t really guide me in this. Somebody said, “Why don’t you publish this?” I said, “Where should I publish it? I don’t know anything about publishing.” “The Journal of Applied Physics.” I sent the whole thesis (180 pages long) to the Journal of Applied Physics. It goes over there. Back comes a letter from the editor: “It sounds interesting, but maybe you ought to send in separate articles.” It took me a while. I ended up finishing my thesis in ’51. In ’54, two papers were finally published.

Another example of serendipity was the chapter in my thesis on sequential detection applied to radar. While at Harvard, I was browsing through the statistical literature on signal detection. I came across a book by a man named Abraham Wald, who was a world-famous statistician at Columbia, called Sequential Decision Theory. He had the concept that one could speed up the process of determining whether a product that you were looking for is ok. All of this is small sample theory. Maybe I can do better with fewer samples, if instead of setting a criterion and seeing if my samples exceed that criterion, I do it sequentially. I start looking at each sample as it comes along. I have a decision region that changes depending on what the sample was. For example, if the first few samples I get are above a certain level, maybe that means it is a signal coming in. Let me reduce the threshold or do something. Adapting Wald’s work to radar, I developed a double threshold scheme. If something fell below a lower threshold it was noise; above a second threshold it was counted as signal; between the two, you continue the process, and start narrowing that double threshold region, the one between the two thresholds.
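The double-threshold idea is the core of Wald's sequential probability ratio test (SPRT). The sketch below is the textbook fixed-threshold SPRT for a known constant signal in Gaussian noise, using Wald's approximate thresholds; it is not Schwartz's narrowing-threshold variant, and the function and parameter names are illustrative.

```python
import random
from math import log


def sprt(samples, amplitude: float, sigma: float, alpha: float, beta: float):
    """Wald's SPRT: H0 = noise N(0, sigma^2) versus H1 = signal plus noise N(amplitude, sigma^2).

    alpha is the target false-alarm probability, beta the target miss probability.
    Returns (decision, number_of_samples_examined).
    """
    upper = log((1 - beta) / alpha)  # at or above this: declare "signal"
    lower = log(beta / (1 - alpha))  # at or below this: declare "noise"
    llr = 0.0
    for i, x in enumerate(samples, start=1):
        # Log-likelihood-ratio increment for one Gaussian sample.
        llr += (amplitude / sigma**2) * (x - amplitude / 2)
        if llr >= upper:
            return "signal", i
        if llr <= lower:
            return "noise", i
        # Between the two thresholds: keep observing.
    return "undecided", len(samples)


if __name__ == "__main__":
    rng = random.Random(0)
    for label, mean in (("noise only", 0.0), ("signal present", 1.0)):
        stream = [rng.gauss(mean, 1.0) for _ in range(200)]
        print(label, "->", sprt(stream, amplitude=1.0, sigma=1.0, alpha=1e-3, beta=1e-2))
```

A narrowing scheme like the one described would move `upper` and `lower` toward each other as `i` grows, forcing a decision within a bounded number of samples; how fast to narrow them is a design choice the interview does not specify.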

Hochfelder:

So it’s an adaptive measure?

Schwartz:

It’s an adaptive technique. I said, “My God. I can use that for radar!” I have a chapter in my thesis on sequential decision theory, which I never published, unfortunately. I wish I had, because other people published and got the credit for it. I tell my students this all the time now, “Publish as quickly as you can. Otherwise, you’re going to find that everything is in the air at that time. Everybody’s working on these problems. Things don’t come out of a vacuum.” If I’m working on this problem, other people are working on this problem. Don’t be afraid if somebody has similar ideas. You are going to have your own ideas, always different enough from somebody else’s ideas that it will be all right.

Reminiscing a little bit, the day of my doctoral exam at Harvard, I got a call from my advisor to come into his office. He says, “When you went over to MIT, who did you speak to?” I said, “Why?” Well, it turned out that I had a fright, but it worked out okay. My advisor had invited Jerome Wiesner, a very well-known professor from MIT, whose work was in this area, to serve on the committee. (He became the president of MIT later on.) He accepted because there was nobody at Harvard, or really very few people, working in the field. My advisor was a physicist. He didn’t know anything initially about this field, but he took me on as a student, which was nice. So I worked on my own, based on my experience at Sperry. At any rate, my advisor says, “Well, he [Wiesner] looked at your thesis, and said, ‘A thesis at MIT was finished last year, exactly in that area.’” I said, “I never saw this before.” He gives me a copy of the other thesis. I look through it. The first chapter was very similar to mine. Qualitatively he was doing the same kind of thing, but happily, mine was theoretical: he had built a radar system and did studies, which took me off the hook. I’d never met the guy. When I went over to MIT, it had nothing to do with that; I was looking for other ideas in other fields.

Generally, when you’re working on something, other people are working on the same thing. That’s the way life is. I mean, I worked on radar because I was at Sperry and Sperry was a radar company, among other things. Work had gone on at Rad Lab. The Rad Lab books had been published. The Bell Labs people were doing work in this area. It was all over the place. Marcum had done this work at Rand Corporation out in California. So everything was going on at that time. Then you have your own ideas. So, at any rate, both sequential detection and Neyman-Pearson detection theory as applied to radar were in my thesis. But by the time I got around to wanting to publish this work, somebody had already published papers on them. I got two nice papers when I could have had four nice papers. But, okay. How could I complain?

Teaching; information theory

Schwartz:

Anyway, I went back to Sperry for a year and did more work. Then I decided I wanted to go into the academic world. I started teaching at night. I taught at Adelphi on Long Island and I gave a course at City College in statistical communication theory, which was just beginning to gel. Now, while I was at Harvard as a graduate student, one of the pioneers in information theory was a man named Peter Elias, who had done his thesis on this at Harvard.

Hochfelder:

He was associated with Norbert Wiener for some reason?

Schwartz:

Yes. He became a professor at MIT. He had one of these special fellowships at Harvard, I think, to do anything you please, really. He may have gotten his Ph.D. at MIT. I don’t remember where it was, but his thesis was in information theory, coding, those things. I listened to some talks that he gave, and it was very exciting. So I got very interested in information theory as well. I never really worked in information theory. Coding was not my field of interest. I was much more fascinated by noise. Signals in noise, detection theory—that excited me.

I spent another year at Sperry, and then decided to develop some graduate courses at Adelphi University on Long Island and a graduate course at City College, trying to pull together some of these ideas on statistical communication theory. I enjoyed those courses. I enjoyed pulling them together and I loved teaching. When I was doing my master’s degree at Brooklyn Poly at night and working at Sperry, Friday nights I was also handling a physics laboratory at Cooper Union. (When you’re a kid, you can do anything, obviously!) At Cooper Union as a senior, I enjoyed helping students with their course in applied mathematics, stuff like that. I enjoyed teaching very much. That’s how I decided to become a teacher.

How I finally made the switch from Sperry to teaching is very interesting. On my plane coming back from the National Electronics Conference in Chicago, the year after I got my doctorate, was Ernst Weber, one of my teachers at Brooklyn Poly, who was a pioneer in the field of electrical engineering. He was very well known, and had published books, including one much later with the IEEE Press. He has now passed away, but he was an outstanding giant in the field. He was later the president of Brooklyn Poly and was head of the department when I was there. One of my teachers, and a wonderful teacher. So, I see him on the plane. I go over to him and say, “Dr. Weber, do you happen to have any openings?” It turned out he had, so he offered me a job at Brooklyn Poly in September of 1952 as assistant professor. Nowadays people complain about having to teach one course; I had 18 contact hours. I had two three-hour lectures and twelve hours of laboratory. I said to myself, “Gee, what do I do with all the free time that I’ve got?” Because I was used to working a forty-hour week at Sperry and maybe overtime as well.

One of my colleagues who joined at the same time as I did, Athanasios Papoulis, went on to become very well-known in the field. He’s published many books. We even shared a desk. They had no room for us when we first joined. Dr. Weber was also president of the Polytechnic Research and Development Corporation, which was marketing some of the stuff done at the Microwave Research Institute at Poly, of which he was also the director at that time. Poly had done pioneering work in microwaves under his direction during World War II. He was never in his office, so he gave us this desk to share the first half a year or year, or something like that.

Papoulis had five courses to teach, three graduate and two undergraduate courses. Maybe he had ten to twelve contact hours. Young faculty only teach three contact hours now, but there’s more pressure on them now. We didn’t have that much pressure.

Brooklyn Poly had pioneered in microwaves, electromagnetic theory applied to radar, and applied to a lot of other problems. Weber had built up this large group, and they had set up a separate department called the ElectroPhysics Department.

I joined the Electrical Engineering Department. It turned out, for whatever the reason, the Electrical Engineering Department was more of an undergraduate department at the time. We taught graduate courses, but there wasn’t that much research going on. The ElectroPhysics Department was strictly a research department teaching graduate courses. So my first courses that first year were standard electrical engineering undergraduate courses.

This is 1952, and I had this interest in communication theory. In 1952, one of the first books published in the area was by Davenport and Root called Random Signals based on pioneering work at MIT and elsewhere, again, during World War II. It was on the detection of signals in noise and written at the graduate level. Davenport was from MIT, and I guess Root was also from MIT. Root went on to be a professor at Michigan. I, of course, looked through the book and liked it. I think I may have even taught a course at that time using that book. I don’t recall anymore; my mind is not clear about this.

Communication systems text

Schwartz:

I decided that it might be interesting to develop a modern undergraduate course in communications systems, because the only books available were books like Terman’s book on radio engineering, which was strictly radio. Maybe a little of telephony, but very little on this. When I joined Brooklyn Poly, I decided that I’d start focusing on developing that, and I started teaching a course in that area. I developed some notes for that and handed notes out to students. It took a number of years. I don’t remember the exact timing, but it was probably about the mid-’50s (’55, ’56, something like that), during which I put the book together.

The head of the EE department at Brooklyn Poly at the time was an outstanding man named John Truxal, who was a pioneer in the control area. He had published an outstanding book called Automatic Feedback Control System Synthesis. He came out of MIT. He joined Brooklyn Poly as head of the department in ’54, ’55, something like that. He began to build a strong control group, which became one of the world’s outstanding groups on controls.

I put these notes together, and Truxal encouraged me to have it published as a book. I remember saying to him, “What title should I use?” He said to me, “Take a long title. Long titles sell well.” (Note that his best-selling book was called Automatic Feedback Control System Synthesis!) There were other books available related to my proposed book, but not quite the same. In particular, there was a book that had been written by Goldman of Syracuse University called Frequency Analysis, Modulation and Noise. A classic book at that time, very nice, but it really didn’t focus on statistical communication theory and the information theory aspects. It was more frequency analysis. Guillemin at MIT had published some nice books as well on frequency analysis, which was beginning to pervade the curriculum. However, there was very little on noise, very little on signals and noise and very little on information theory, especially on the undergraduate level. Graduate courses were developed using the Davenport and Root book.

My notes covered these topics on the undergraduate level, so I decided to proceed with book publication. I borrowed part of my title from Goldman’s book from Syracuse on frequency analysis and called my book Information Transmission, Modulation, and Noise. You can see the first edition here on my bookcase shelf. This whole period in the ’50s had communication theory pervading the field of communication, at least in my mind. I come at it through radio, much less through telephony. At that time we had salesmen (they called them travelers) coming around from various publishing companies. A guy from McGraw Hill came in and said to me, “How about writing a book?” So, I said, “I have notes for a book on statistical communication theory for undergraduates.” He said, “No, no. You don’t want to do that. Davenport and Root’s book has just come out. Why don’t you try another area?” He tried to dissuade me. Luckily, he didn’t persuade me, because the book turned out to be a bestseller.

In 1959 this book was published. Independently, John Truxal, together with McGraw Hill, had set up a series called the Brooklyn Polytechnic Series, and this was published as part of the Brooklyn Polytechnic Series. It might have been the first or second in the series.

It was the first undergraduate textbook to cover modern communication systems from a statistical point of view. It talked about AM, FM and digital communications from a unified view, and brought in some of the statistical stuff that had appeared in other books, as well as spectrum analysis. It starts with frequency analysis, after an introductory chapter on information theory, presented in a very qualitative way. What do you mean by information? This was Shannon’s great idea, which he actually put into mathematical form. If I’m sending a signal, which is on continuously, it carries no information. So why send it? When you send something it should be unknown. So he quantified this.
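Shannon's point that a signal known in advance carries no information can be made concrete with his measure of self-information: an outcome of probability p conveys log2(1/p) bits. A short Python sketch follows; the function names are illustrative.

```python
from math import log2


def self_information(p: float) -> float:
    """Information, in bits, carried by an outcome that occurs with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    return log2(1.0 / p)


def entropy(probs: list[float]) -> float:
    """Average information per symbol, in bits, of a source with these symbol probabilities."""
    return sum(p * self_information(p) for p in probs if p > 0)


if __name__ == "__main__":
    print(self_information(1.0))  # a signal that is always on is certain, so it conveys nothing
    print(self_information(0.5))  # one of two equally likely messages
    print(entropy([0.9, 0.1]))    # a predictable binary source carries under 1 bit per symbol
```

An outcome with probability 1, like a carrier that is simply left on, yields 0 bits; a choice between two equally likely messages yields 1 bit, and the less predictable a source is, the more information each symbol carries.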

Then I went on, in the book, to write about AM and FM signals. Then I went on to discuss digital communication systems, PCM, starting with pulse amplitude modulation. Then I said, “Okay. Now we can try to understand how these systems all function from a systems point of view. Because they all have to function in the presence of noise.” FM, AM: all of these get swamped by noise. Why is PCM better? Why is FM better than AM as far as noise is concerned? We all knew this. In fact, Armstrong pointed this out many years before and he did this in a very nice graphical way. Actually, in the ’30s, people began to quantify this, so I tried to put all of this together in the book: the analog stuff that came out of pre-World War II, the digital stuff that came out of statistical communication theory during World War II, and information theory during World War II. Students weren’t expected to know anything about probability. I hadn’t had it as a student, so I put in a chapter on statistical analysis. I applied that to FM, AM, and PCM. First, I study them without noise. I talk about statistics. Then I introduce simple analysis of noise on the undergraduate level using the work of Rice in 1944 that I’d learned a few years before. By now, fifteen years after Rice’s work had appeared, it was classic stuff, and I put it together for student use.

The book turned out to be very successful; many schools picked up on it. I was very pleased. A number of years back, the University of Wisconsin Department of Electrical Engineering had their hundredth anniversary celebration and they invited me as one of the guest lecturers there. I was very pleased to do this. They had published a book summarizing activities in their department for the last hundred years. They gave copies to me and to other people. When they talk, in their book, about the ’50s, they discuss introducing courses in communications. No suitable books were available, so in ’59-’60, they began using my book, which is very nice to read. It was the first book in the field. It held the field for about six or seven years. Then other people began publishing and it lost sales. The first five or six years it was the only book out in the field. I’m very pleased that it was a pioneering book; this made me feel very good.

I left Poly in ‘73. Len Shaw, one of my former colleagues there, was rummaging through the files a number of years back and found a mimeographed copy of my original notes. He was at Brooklyn Poly for many years and was one of the Deans there (he’s just retired). So he sent them to me with a comment saying, “Some of your ideas still hold up.” It was very nice. Very pleasant.

Brooklyn Poly Teaching and Research

Schwartz:

I must say in all honesty that when I joined Brooklyn Poly in ‘52, I focused on the undergraduate level. Then I began to teach graduate courses also. I taught courses in a variety of areas, not just communications, because we were encouraged to do that kind of thing. I developed this book, and then I began thinking about going back to research. Young faculty don’t have time for that sort of thing now; life was a lot easier in those days. Nowadays, the minute a young man or woman joins the university, he or she has got to start running. I guess I’ll take back what I said before: it’s much harder for them now. They can’t afford to teach eighteen contact hours and do research at the same time. But I managed somehow to begin to do this.

I remember going to Bell Laboratories, thinking maybe I could work with them or get some ideas from them. I was introduced to David Slepian, who retired from Bell Labs a long time ago. He was a pioneer in this whole field of mathematical representation of communication signals. At this time, the Army was doing work in RF and was encountering a lot of problems with fading channels, fading signals. I thought that might be an interesting area, so I began doing work on fading channels.

I had a number of very fine doctoral students at the time: Don Schilling, Ray Pickholtz, Bob Boorstyn, Ken Clarke, Don Hess, and a number of others, and they were doing their theses with me. Actually, Ken Clarke was the first one to do his thesis with me. There was a lot of interest in FM as well, so we began a research program studying FM and noise, and at the same time fading signals and noise, because the Army was pushing things like that. It was in the air: papers were being published. I put some students to work on that.

Rice at Bell Labs had continued to do his work. He was the pioneer in noise. He tried to apply some of his noise ideas to FM, and he developed the concept of click analysis. Of course, everybody knew why FM provided an improvement over AM in the presence of noise, above a threshold. Armstrong, as well as Crosby at RCA, had pointed this out in the early ‘30s. But then, suddenly, below a threshold, FM goes to pot. Why? Why does the noise suddenly get larger? Rice, through his click analysis, was able to put this on a firm footing, and so FM studies were in the air too.

My first doctoral student was Ken Clarke. He finished up in 1959. He had been an instructor. All these guys were appointed instructors on the staff, which was one way of getting teaching out of them and getting them a little extra money. Don Schilling and Don Hess, and, a number of years later, Pickholtz and Boorstyn, were instructors. In 1961, Truxal moved on to become Dean and I became the EE Department Head at Brooklyn Poly. I really started pushing telecommunications. These guys were there; they were very good guys and we had them all appointed assistant professors. We set up a group that eventually had seven of us working in telecommunications. I must have begun the group in 1959, I guess, but when I became the department head I began to push it.

At that time, Jack Wolf, an outstanding graduate of Princeton, joined us. I had heard about him. He had been in the Air Force and then joined NYU. I appointed him to the staff also, and with him we had seven faculty members in the telecommunications area. Poly had a big engineering school, and the EE department had about forty-five people in it at that time; about seven of us, including myself, were working in the field. It was a glorious time. Ken Clarke and Don Hess were experimentalists; they built and tested things. When we were talking about fading channels and fading signals, they developed an underwater fading channel simulator. If you send signals through water, the water has some of the same fading effects on them, so they built a water tank for this purpose. Sid Deutsch, another faculty member in the EE department, had done work on his own in television. He was doing work on low bit-rate television, and he worked closely with our group as well. We began to publish on FM, fading signals, and noise. Jack Wolf was a specialist in information theory and coding, and was doing work in coding. We covered the gamut from information theory and coding to statistical communication theory to communication systems like FM, both in teaching and in the research we were carrying on at the time. I think it was probably one of the largest groups in the country, seven faculty members at that time, plus a sizable graduate enrollment. It was a wonderful time.

As I said, the department started off as an undergraduate department when I joined it. When Truxal joined the department, he brought in graduate research and teaching in the control area, and we followed up on that by building a large group in communications as well. So it was a really wonderful experience.

That was the tenor of the times at Brooklyn Poly when I was there. Unfortunately, Brooklyn Poly fell into financial problems and went through a difficult time. I was the Department Head until 1965. I left on sabbatical to go to France, came back a year later, and continued my work in this area. That’s when, in 1966-68, Ray Pickholtz and Bob Boorstyn and two other graduate students finished up, followed by a flock of others. I had a lot of students from Bell Laboratories come to work with me in those days as well. I put them to work on problems in digital technology, communication theory, and things of that type. In fact, Poly set up a special program jointly with Bell Labs to have them come spend a year with us full time working on their doctoral theses and then go back. It was a wonderful time.

Communication networks and computers

Schwartz:

This took us to about the late ‘60s, I guess. About 1970 or so, I began to sense that there was a change taking place. We had focused on what we now call the physical layer, and people were now beginning to talk about communication networks: machines talking to one another.

I’ll go back a little bit historically, because this combination of computers and communications has now emerged as the Information Age. Back in the mid-’60s, the way I look at it, GE and MIT and other places began to experiment with time-sharing computers. Before that time, everybody who worked in a large establishment or university had access to a large machine. You would bring your cards in and have them loaded, and a couple of hours later you would go pick up your cards and maybe some printed-out papers. It was exciting, but young people nowadays have no idea how difficult this was. They began experimenting with the idea of time-sharing computers because some studies had indicated that the machine was idle most of the time, and that you could do multiple operations simultaneously. MIT experimented with this, and GE joined them on this, and other companies too. Once you start time-sharing, you start being concerned about communicating with that machine. A few years ago, I wrote a paper which discusses the history of some of this. Would you like to have a copy of that?

Hochfelder:

Sure. That would be great.

Schwartz:

I’ll tell you how to access it. You can get it at the Journalism School. I wrote the paper for our Journalism School. It’s called "Telecommunications, Past, Present, and Future," written specifically for the non-engineer. It has an introductory chapter which talks about the history of computing and communications in that period. It is very simple. IBM and other companies, for example, had, in the ‘50s, developed communication systems for airlines: airline agent terminals connected to a central computer. IBM was selling the concentrators and the terminals.

Hochfelder:

Is that SABRE?

Schwartz:

Yes. SABRE came out of that. SABRE was the first system. IBM pioneered in that. I forget the details, but I have them in this paper of mine. I went back and checked through the old literature on that. There had been work in that era on terminals communicating with a central computer. For the military, Bell Labs had done some work on some systems, even radar. You had terminals away from the central system that needed to communicate with it, back and forth. The military and the commercial world had already begun to develop the concept of terminals communicating with a computer somewhere over lines.

Hochfelder:

So, for the military that would be the SAGE system.

Schwartz:

SAGE, yes. I mention SAGE in my paper too. So, all of these things were in the air.

Then in the late ‘60s, it became apparent to IBM and other organizations that they were asking these large computers to do a lot of communication tasks. The more of this you do, the more you’re tasking the computers with it, and in a sense you’re undoing what you started doing: you want them to do more computational work, and now they’re doing this other work. So they decided to off-load the communication tasks to special-purpose communication systems they built called communication concentrators, computers which just do communications work. The concept was to have terminals connected to these; the concentrators concentrate the activity and send it on to the central computer. In a way, this is the same thing the SABRE system does. The SABRE system already operates under that premise: connect terminals through a concentrator. The concentrator then interrogates the central system and sends messages back. So that idea was already there many years before, but now they decided to do it more generally. Once you start doing this, you have to develop what are called protocols: ways of having two machines that are a distance from one another interrogate one another, send messages back and forth, and understand one another. This has been done for a long time now. Time-sharing, as well as airline reservation systems and military communication systems, among others, helped develop this.

ARPANET; commercial computer utilities

Schwartz:

At the same time (the mid-’60s now), there was pioneering work going on at the then Advanced Research Projects Agency (ARPA) of the Department of Defense. Work has been published on this very recently. In fact, in this paper I mentioned, I explore the ARPA work and the IBM work, as well as their predecessors, the airline reservation systems. The primary work at ARPA at that time, in the mid-’60s, looked to see whether people could communicate with computers in some better way.

A man named Larry Roberts joined ARPA in the late ‘60s. He had the concept of developing what he called a computer utility. Since people were beginning to do all kinds of time-sharing activity at the time, why should every organization, every university, every commercial organization, have replicas of the software? Why not develop something special? For example, the University of Utah had a specialty in graphics capability. UCLA was doing its own work. Why couldn’t people all over the country access those universities for their software, rather than having to duplicate it in their own place? It made sense. So he had the idea of building a computer utility, like an electric utility, distributed, and ARPA began to fund the ARPA Network project. I think the first one went online in 1969 with four nodes or computers interconnected. So you can see all these things coming together.

Now, in order for the ARPA network to operate, they had to have communication protocols to handle the messages back and forth. They had the concept of a router: a message processor that handles messages and routes them appropriately in some way. ARPA began to develop the routing algorithms that came out of this.

IBM in the same time period, 1969, had begun work on something they called Systems Network Architecture, SNA, which is probably the first commercial network architecture designed to handle messages between computer systems. The ARPA network had a distributed topology because you could be anywhere: their interface message processors (IMPs) were scattered all over the country, you fed into them, and they connected with one another in a distributed fashion. IBM wanted people to access their main hosts, their large machines, so the concept was to have terminals connected to concentrators connected to the main host, passing messages back and forth, and you wanted an architecture for this.

In 1969, we also saw the first commercial computer utility come out. A company called Tymshare was set up, its name coming from the word time-sharing. Their idea was that if you don’t have access to your own computer, you have a terminal, which you use to access Tymshare’s computers. Tymshare had a bank of computers scattered all over the country, large computer centers, where they would process your information. You would pay for this, so your company didn’t have to own a computer of its own. They developed a network called Tymnet, which also began to operate in the late ‘60s, early ‘70s. Everything was coming together now: ARPA’s pioneering work on the computer utility, mostly for universities communicating in a distributed fashion, Tymnet, IBM’s SNA. GE set up a network called GE Information Services Network, which did the same thing that Tymnet did. It offered services. They began to go abroad also and offered links to Europe and other places. Tymshare had their computers scattered all over the country because they felt that it was more reliable that way. GE had its computers all in one center in Rockville, Maryland, because it felt the system was more reliable that way. In fact, I attended a conference later on where two guys, one from each company, were debating which one was more reliable. Tymshare’s is distributed, so if one system fails, you still have others. GE said that if the computers are all together, they can make them more secure. So who knows? But anyway, very interesting.

Networks research, teaching, and publication

Schwartz:

All of this started to happen now, and I began to feel that networking was an exciting area to work in. I began to develop some activity at Brooklyn Poly in this area. The Poly Microwave Research Institute (MRI) annually had a large workshop, covering various topics, not just microwaves. We ran one on computer communications and the integration of computers with communications, which is part of the same thing.

I remember talking to Paul Green, at the time at IBM, who had come to IBM from Lincoln Laboratory. He was a real pioneer in this field. He was at IBM Research and had done some pioneering research work in SNA. He said to me, “If you really want to learn about the field, why don’t you go out and find out what some of these companies are doing?” I think he might have suggested this for a journal. I said sure, and got together two of my colleagues at Brooklyn Poly, Bob Boorstyn and Ray Pickholtz, and the three of us picked four networks that were ongoing in this country. We went and talked to the people and learned what they were doing. It was a new field. We wrote a nice paper. That started us going. Once you learn what companies are doing, it gives you some exciting problems to work on.

Hochfelder:

It would be a good paper to have.

Schwartz:

Yes. I have it in my files here. I’ve got a copy of it. One of the IEEE journals, I forget which one, published it. So that was a tutorial paper. Nothing new, but we three interviewed people at different companies. One was the NASDAQ system, for example, that they had set up in those days. One might have been Tymnet too; I don’t remember anymore.

I began to develop a program on this subject and began to teach a course at Brooklyn Poly in the subject of networking. I had some notes. I left Brooklyn Poly in 1973, just when all of this was coming to a head. Poly had been having financial problems, as I pointed out, and it was sort of sad in a way because some of the leading people had left. Jack Wolf had left by that time. Ray Pickholtz decided to leave and go to George Washington, and two of the other key people decided to go into industry by themselves.

Hochfelder:

Is that when Don Schilling left Poly?

Schwartz:

No. Don Schilling had gone to City College. He may have gone before this time. But Don Hess and Ken Clarke organized a company of their own. They were experimentalists. They still have their company functioning. I think Bob Boorstyn and I might have been the only ones left at this time.

Columbia asked me to spend a year with them as a visiting professor, which I did. I gave a couple of courses in computer networking, because that was the thing I was really pushing at this time. They asked me to stay on, and I stayed on at Columbia. I came to Columbia officially full time in 1974 and, again, continued developing a program in networks. Out of this came some notes and the first textbook in the field, called Computer Communication Network Design and Analysis, published in 1977. A very nice book was published much earlier by Len Kleinrock, who was one of the pioneers in the field. It was based on the doctoral thesis he did in 1961, I guess, at MIT. Even years later, it is a classic book with wonderful stuff in it. He had it reprinted, maybe by Dover; I forget who did it, and I’ve got a copy somewhere. That was really the first book in the field. There are other books that have been published too, but mine was a textbook with problems and exercises, stuff like that, for students to use, based on the course we developed first at Poly and then at Columbia. I have continued in that field ever since. I’ve done work personally with students at Bell Labs, at Brooklyn Poly, at Columbia, and at other places; first at Poly and then at Columbia, I worked in what is called congestion control.

Performance analysis

Schwartz:

Now, preferring work in analytical areas, I gravitated more towards performance analysis. How do you see if these systems are performing properly? By doing analysis. It turns out you have to learn queuing theory and things of that type. I tend to be oriented more in that direction, toward more quantitative approaches. Bob Kahn, who was one of the pioneers of ARPAnet, one of the giants in the field, had published a paper on flow control for the ARPAnet. He was an electrical engineer by training, out of Princeton. He had worked in communication theory originally too, and had moved into this field. He has his own organization now. He published his paper on congestion and flow control for the ARPAnet, and I read it and I said, “Gee, maybe we could quantify these; maybe come up with some numbers.” I put a student named Mike Pennotti to work on this, a guy from Bell Labs who knew nothing about the field. This guy was sharp, so he picked it up and finished his thesis in a year’s time. He had been working in, I think, underwater acoustics at Bell Labs and Navy work, or something like that, and switched fields completely, and boom. Sharp guy and good work. So we worked together on this, and he came up with what I call a pioneering paper on a virtual connection: a connection from point to point across a network, consisting of a source terminal connected through routing nodes or routing switches to a destination node, with buffering and queuing. That’s the way that networks operate. You store and forward information in packets. We were able to model this. We came up with some concepts. We compared two different strategies. Do you want control over the virtual connection end-to-end, or do you want control at each node separately? We found that, by proper tweaking, both gave us the same performance.

But then, which one is easier to implement? It was the first such quantitative study, and it has since become a paper that has been cited a lot of times, because it gave rise to a lot of other work in the field of congestion control and performance analysis. Again, the way engineering always works, somebody invents an idea, you develop the software (in the old days, it wasn’t the software, but nowadays it is), you develop the hardware for it, and then somebody comes along and thinks maybe I can study and analyze it and get improvements on it. That’s the way it usually works.

So, this paper was published in ‘75, and it was very good early work in the area. Pennotti’s work was from Poly, but I had moved to Columbia, so he worked with me there. He got his doctoral degree at Poly, but he came to see me at Columbia at that time. There were other students whom I had had at Poly and carried with me. They got their degrees at Poly, but they worked with me at Columbia.
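The kind of store-and-forward virtual connection described above can be put into numbers with a very simple queuing model. The Python below is not the Pennotti and Schwartz model (which compared end-to-end and node-by-node control); it is a minimal sketch assuming each hop behaves as an independent M/M/1 queue (Kleinrock's independence approximation). The function name `path_delay` and the example rates are hypothetical.

```python
from typing import Sequence


def path_delay(arrival_rate: float, service_rates: Sequence[float]) -> float:
    """Mean end-to-end delay (seconds) for packets crossing a chain of hops.

    Hypothetical model: every hop is an independent M/M/1 queue with Poisson
    arrivals at `arrival_rate` packets/s and exponential service at
    `service_rates[i]` packets/s.
    """
    total = 0.0
    for mu in service_rates:
        if arrival_rate >= mu:
            raise ValueError("hop is saturated: arrival rate must be below service rate")
        # M/M/1 mean time in system (queuing plus transmission): 1 / (mu - lambda)
        total += 1.0 / (mu - arrival_rate)
    return total


# Three hops, 50 packets/s offered, links serving 100, 80 and 150 packets/s.
print(round(path_delay(50.0, [100.0, 80.0, 150.0]), 4))  # 0.0633
```

With these assumed numbers the slowest hop (80 packets/s) contributes about half of the total, and each per-hop term grows without bound as the offered load approaches that hop's service rate, which is the behavior congestion and flow control are meant to keep in check.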

Routing protocols; communication links

Schwartz:

I began to work at that time with a colleague at Columbia here, Tom Stern, on routing protocols. Bob Gallager at MIT was doing some very nice work on routing protocols. Again, the ARPAnet had focused on that work. They had a routing procedure as part of the ARPAnet, which was pioneering. They had a lot of problems with it, because they tried to have it react too quickly and, as it turned out, it was unstable. They began to change their routing protocol. The question arose, what are good routing protocols? Bob Gallager worked on this problem. Can you distribute the routing algorithms in some way? There were many routing protocols developed years before for work in transportation networks. How do you route trucks and things like that? Some of those ideas were picked up in this case. So Gallager did some pioneering work in distributed routing control. Tom Stern did some fine, related work, which also stimulated work in the area. A lot of work was going on in this area. Harry Rudin, an American who went to Switzerland and is still living there, had been active in this field for years at the IBM Zurich Research Laboratory. He’s now retired, I understand. He also did some pioneering work in routing.

We now left the physical layer behind and were moving into what is now called the network layer. IBM had done work on Synchronous Data Link Control (SDLC). When the world’s standards bodies in the ‘70s began to pick up on this, they changed it a little bit and called it High-Level Data Link Control (HDLC), but it is based on IBM’s SDLC. So that’s the second layer, data link control. People began to work on this, and papers began to be published on that layer. Now we are moving up to what we now call the network, or third, layer. The network layer involves congestion control and routing. If you go higher, to the fourth layer, now called the transport layer, that also involves congestion control. TCP came along about that time. Later on, people began to develop a sophisticated control, called flow control, at that layer. We do congestion control at the network layer; we do flow control at the transport layer. But they are all very related. How do you keep receiving systems from being overrun by arriving packets? You can do it hop-by-hop at the network layer, or you can do it end-to-end in the transport protocol, but the ideas are very similar. Sometimes the layers get mixed up.
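The question of keeping a receiver from being overrun is classically answered with a window: the sender may have only a limited number of unacknowledged packets outstanding. The Python below is a minimal sketch of end-to-end window flow control assuming cumulative acknowledgements; `WindowSender` and its methods are hypothetical names, and this is a simplification, not TCP's actual algorithm. Running one such window per link instead of per connection would give hop-by-hop control.

```python
class WindowSender:
    """Hypothetical end-to-end window flow control: at most `window`
    unacknowledged packets may be outstanding at any time."""

    def __init__(self, window: int) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.window = window
        self.next_seq = 0  # sequence number of the next new packet
        self.base = 0      # oldest unacknowledged sequence number

    def outstanding(self) -> int:
        return self.next_seq - self.base

    def can_send(self) -> bool:
        return self.outstanding() < self.window

    def send(self) -> int:
        if not self.can_send():
            raise RuntimeError("window closed: wait for an acknowledgement")
        seq = self.next_seq
        self.next_seq += 1
        return seq

    def ack(self, seq: int) -> None:
        """Cumulative acknowledgement: all packets up to and including seq arrived."""
        if seq >= self.next_seq:
            raise ValueError("cannot acknowledge a packet that was never sent")
        self.base = max(self.base, seq + 1)


sender = WindowSender(window=3)
sent = []
while sender.can_send():
    sent.append(sender.send())
print(sent)                  # [0, 1, 2]: the window is now closed
sender.ack(1)                # receiver has absorbed packets 0 and 1
print(sender.outstanding())  # 1
```

The window size caps how much data can pile up at the receiver (or, per hop, in a node's buffers); the sender is throttled automatically whenever acknowledgements slow down.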

By this time ARPAnet was developing into a full-fledged network and giving rise to a lot of work all over the country in these areas, so we were no longer among the few working on networking. Everyone was beginning to work in this area. I just mentioned that Gallager did pioneering work. Kleinrock, from the beginning, at UCLA, did pioneering work on the ARPAnet. People from other universities did the same thing as well.

I personally focused on performance analysis. Kleinrock did too, by the way. He’s a broad guy. He does systems and software work, and he’s published classic books on queuing theory and gives courses in it regularly. So he does work in everything.

We’re now in a really hot period in the network area. The work that I’m doing has become fully focused on networks. My book came out in 1977 as the first textbook in the field, although other books have been published on this as well. What I do in this book is based on the literature, as well as some work that we had been doing. The examples of routing and flow control in networks that I give in this book are based on those of GE Information Services Network, Tymnet, and the ARPA Network, from a qualitative point of view, to try to understand what everything is all about. A big topic in those days (as now) had to do with how nodes are connected together with links of various kinds. So I also have in the book work on how you assign capacities to communication links, things like that. A lot of it was based on work that Kleinrock had done in his original thesis studying end-to-end delay. Since you now have queuing delay, this is very different from the telephone network. This is a packet-switched network with routers; you have buffering, so one of the performance objectives is to reduce the queuing delay as much as possible. How do you route according to queuing delay? He had done work on that, and so I discuss how you assign capacity to reduce delay. I have a chapter there on queuing theory, because people hadn’t done this before, and a chapter here applying it to store-and-forward buffering. I have a chapter on routing and flow control. All these things were in the air in those days. Other books have been published since, of course.
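The capacity-assignment question has a classic closed-form answer in Kleinrock's work, the square-root assignment: with a total capacity C to split among links, link i gets its load λ_i/μ (1/μ being the mean packet length in bits) plus a share of the excess capacity C_e = C − Σ_j λ_j/μ proportional to √λ_i. The Python below is a minimal sketch assuming M/M/1 links, the independence approximation, and the same cost for every unit of capacity; the function name and the example numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def sqrt_capacity_assignment(
    link_rates: Sequence[float],  # lambda_i: packets/s offered to each link
    mean_bits: float,             # 1/mu: mean packet length in bits
    total_capacity: float,        # C: bits/s to split among the links
    network_rate: float,          # gamma: total packets/s entering the network
) -> Tuple[List[float], float]:
    """Split a fixed capacity budget over links to minimize mean packet delay.

    Assumes each link is an M/M/1 queue (independence approximation) and that
    capacity costs the same on every link. Returns (capacities, mean_delay).
    """
    if any(lam <= 0 for lam in link_rates):
        raise ValueError("link rates must be positive")
    required = [lam * mean_bits for lam in link_rates]  # lambda_i / mu, in bits/s
    excess = total_capacity - sum(required)              # C_e
    if excess <= 0:
        raise ValueError("total capacity cannot carry the offered load")
    roots = [math.sqrt(lam) for lam in link_rates]
    root_sum = sum(roots)
    # Each link gets its load plus a share of the excess proportional to sqrt(lambda_i).
    capacities = [req + excess * r / root_sum for req, r in zip(required, roots)]
    # Mean network delay T = (1/gamma) * sum(lambda_i / (mu * C_i - lambda_i)).
    mu = 1.0 / mean_bits
    delay = sum(lam / (mu * c - lam) for lam, c in zip(link_rates, capacities)) / network_rate
    return capacities, delay


caps, t = sqrt_capacity_assignment([1.0, 4.0], mean_bits=1.0, total_capacity=10.0, network_rate=5.0)
print([round(c, 4) for c in caps], round(t, 4))  # [2.6667, 7.3333] 0.36
```

For comparison, splitting the same 10 bits/s in proportion to load (2 and 8) gives a mean delay of 0.4 under the same model; the square-root rule does better by giving the lightly loaded link relatively more headroom.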

Modems and data networks

Schwartz:

I have a much bigger textbook covering much more material that came out in 1987, called Telecommunications Networks: Protocols, Modeling, and Analysis, published by Addison-Wesley. That also uses a quantitative approach, but I do treat protocols and things like that. Now, what was happening in the world in those days? Well, networking was becoming really significant to the world. It is known to everybody now. We had telephone networks that carried voice messages only. Suddenly, you find data becoming important. People were shipping data over modems. Modems were being developed in those days because the telephone people realized early on that you want to ship data.

Hochfelder:

Bob Lucky’s work?

Schwartz:

Yes. Bob Lucky had a group at Bell Laboratories. Actually, his group came out of an earlier group, started by Bill Bennett, who passed away a long time ago. He had some of the best people working on modems. But other people were doing this work too. A guy named Dave Forney set up a company called Codex, and they developed a data modem. So other people were doing work too. Bob Lucky’s group did pioneering work. Steve Weinstein, as well as others, worked for him. They began to develop modems early on for handling data over telephone networks. As a matter of fact, all of these networks we talk about use telephone facilities. How does someone get into those telephone networks? This was for terminals: low-bit-rate modems, things of that type. So that was the modem work that was going on. The CCITT in the early ‘70s was aware, not only of the modem work going on…

Standards

Hochfelder:

CCITT?

Schwartz:

Yes. There is something called the International Telecommunication Union, ITU, housed in Geneva. That’s a standards-making body for telecommunications administrations all over the world. Only administrations can belong to it. In the United States, it’s the State Department and the FCC that jointly work together on this. This has now changed. The ITU had, at the time, two separate standards bodies, one called CCITT, the other called CCIR. They are French acronyms.

Hochfelder:

One is for telegraphy and telephone, and the other one is for?

Schwartz:

Comité Consultatif International Téléphonique et Télégraphique is the CCITT international standards organization, and the other one is called Comité Consultatif International pour la Radio. One is for radio standards and one is for telephone and telegraph standards. The CCITT has standardized a lot of modem work, things like that. But let’s focus now on the networking area that I am more familiar with.

They were well aware that data networks were now becoming significant. We already had IBM; we had Tymnet; we had GE Information Services Network. The French had a network set up called the Cyclades network. ARPAnet was here. Data networks were developing worldwide. The telephone industry was very aware of this, and they were very aware of networking. So the idea developed to try to standardize data networks in some way. They set groups to work. I wasn’t involved, so I don’t know the details of it. But in 1976 they came out with a different kind of standard, an interface standard, called X.25.

DEC had developed DECnet, Burroughs had developed its Burroughs network architecture; all of these architectures in the United States were proprietary (they were developed for their own equipment only), although the companies weren’t specifically in the data networking area. The CCITT was talking about developing some kind of open networking, but across interfaces. Another organization, the International Standards Organization (ISO), was beginning to develop activities as well, so the two were going on simultaneously now.

Let’s focus first on CCITT. They came out with a standard called X.25. Now remember, they have working with them mostly telephone administrations, so they are catering mostly to the telephone companies worldwide, which, except for the United States, were all government organizations in those days. AT&T was quasi-governmental; it was a monopolistic organization in those days. They had the standard called X.25, which enabled users to interconnect to any network. Networks would have their own protocols, but by using this interface you could get into them. On your side, you see this interface protocol; the network on its side sees the same protocol, so you can get into the network, the network handles your traffic any way it wishes, and on the other side the traffic gets back out again. It is strictly an interface protocol; it’s not an end-to-end protocol. (It has subsequently also been adopted in some places as an end-to-end protocol.) There was an interface defined between a terminal on the user’s side and the network side, and the same on the other side of the network, between the network side and the user side. What happens inside the network in between they weren’t concerned with. They weren’t going to tell people how to handle their networks. X.25 is a three-layered protocol. People knew about layers now: a physical layer; a data link layer, which is HDLC; and a third layer, normally a network layer, but in the X.25 case an interface layer, handling X.25 packets going across it. That came out of the CCITT activity. There was a lot of work published.

One of the first administrations to latch onto this was that of Canada, with Bell Northern Research developing products. In fact, I attended a communications meeting in 1976 and they presented some of their work at that time too. So they developed some of their products. The Canadians were one of the first in this area. Contemporaneous with this, the computer manufacturers and the public using computers began to feel the need for interconnecting computers in a non-proprietary sense. I just mentioned before IBM, DEC, and Burroughs in the United States. The same for Japanese companies. Each developed their own protocols for their own equipment.

The International Standards Organization, ISO, is also housed in Geneva. It is made up of companies rather than administrations. They felt the need to do this, so they set up some bodies and began pioneering activity in standardizing protocol architectures. They developed the idea of a seven-layered computer communications architecture: a physical layer, data link layer, network layer, transport layer, session layer, all the way up to the application layer. Why seven? Well, you don’t want too many, and you don’t want too few, so they came up with that. They began a massive effort in each layer to really standardize this. Independently, in the United States, the ARPAnet community had developed protocols, so they had their own.

Hochfelder:

The TCP?

Schwartz:

Yes. They had developed a network layer called IP, the Internet Protocol, for interconnecting different networks. It told you how to route packets using the ARPA routing algorithms. TCP, the Transmission Control Protocol, was the layer above, a transport protocol. They had a data link layer too, et cetera. And they had, of course, layers on top of that for handling various kinds of transfers: file transfers, e-mail, things like that.

Kleinrock, in one of his early papers summarizing traffic usage early on for the ARPAnet, showed the most amazing result. Despite the initial ARPAnet idea of a computer utility, with the network used for accessing other hosts’ software, it turned out that people were using the network to talk to their own friends! The study showed the first elementary use of email, where you’re talking to the guys in the office next to you. So most of the activity was local activity. People never know what things are going to be used for. That was what happened with the ARPAnet, of course. It never became a computer utility. It developed for other communications purposes.

ISO began to develop these standards and came up with a seven-layered model, and the ARPAnet community developed its own layered architecture. I innocently got involved in this. A standardization conflict had developed in the United States between the National Bureau of Standards, which is now NIST, and the Department of Defense. NBS, as part of the Department of Commerce, was concerned with helping the commercial sector in all of its scientific and technological activity in the United States. They had a Computer Laboratory Division, and I happened to be a member of its Visiting Committee, which looked at the Division’s activities once a year. They had a large computer communications activity and they were studying how to determine whether commercial implementations of the ISO protocols were “correct” in their operation or not. They were heavily involved in this. They were concerned that American companies not lose out worldwide if the world were to adopt the international computer communication standards being developed by ISO. The Europeans were moving in the direction of ISO. In the United States, there was a push by the Department of Defense to standardize on the TCP suite, the ARPAnet suite, because they already had it going. Why bring in new protocols? So there was a conflict between the Department of Defense and the Commerce Department’s National Bureau of Standards. They went to the National Academy of Sciences/National Academy of Engineering, which runs the National Research Council, NRC, and asked them to adjudicate. NRC set up an expert panel made up of people from industry and universities to try to determine which was the better way to go. Unfortunately (in retrospect!), I was asked to join that panel. On the panel were people from other universities: Dave Farber, a well-known guy in communications, and Larry Landweber from Wisconsin; and experts from IBM, from DEC, from Burroughs, and others. It was a broad-sweeping panel.

We met for a long period of time in Washington, with long meetings where we had people come in. We had Vint Cerf, who was with ARPA, speaking on behalf of TCP and that suite. We had people speaking on the other side. Interestingly enough, Dave Farber and Larry Landweber kept pushing for TCP. The rest of us, myself included, said no, wait a while. TCP is just the United States; we have to go worldwide. The ISO suite, the seven-layer suite, is much newer; it has the new features in it. It is based on TCP to some extent. It doesn’t have the segmentation TCP has, and in some areas it is much better. The guys from industry were pushing for it. So why not go for that? We finally overrode their objections and they reluctantly agreed. We pushed for the ISO suite.

We issued this report and the Department of Defense said okay, they were going to ask all of their contractors to move to the International Organization for Standardization’s suite and move away from TCP as soon as practical. Well, the rest is history. Despite this, TCP took over and the ISO suite never came in, and I regret my decision to this day. I tell my students any time I give a talk: don’t ask me to predict what is going to happen in this world anymore. That was a real goof on my part. I was wrong. You never know.

Hochfelder:

The computer keyboard, the typewriter keyboard, is almost the same sort of thing.

Schwartz:

Is that right?

IEEE, Communications Society

Hochfelder:

We can talk about that off tape. Please talk about your involvement with the IEEE and with the Communications Society, and also your involvement here at Columbia at the Center for Telecommunications Research.

Schwartz:

Yes. The IEEE one I’ll make brief. As a young fellow in the early ‘50s, I got very involved in information theory and communication theory. I became active in what was then the Information Theory Group, before it became a Society of the IEEE, and attended a lot of meetings. It is hard to recollect the details. I don’t follow the Information Theory Society’s activities anymore. But somebody told me a year or two ago that he had read the newsletter, and someone had mentioned my name in it after finding it while studying the old society records of the ‘50s. I was active in the Information Theory Group then and served as Chairman in about 1964 or 1965.

I was simultaneously active in the Communication Technology Group, or whatever it was called before it became the IEEE Communications Society. That was when I was head of the department at Brooklyn Poly. I was head of the department from 1961 to 1965 and living on Long Island. I became chair of the Long Island section of the Communication Technology Group. Then I got active in the overall Communications Group itself. I was one of the original people involved with the change from Group to Society. There was a fellow named Dick Kirby, Richard Kirby, who, I guess, was the first president of the new Communications Society, which came out of the old Communication Technology Group. He invited me, among others, to join the committee to come up with the first constitution. So I was on that committee.

I became active. I was on the Board of Governors for years. I don’t remember the dates anymore. I got elected Vice President. Then in 1978 I was elected Director of the IEEE representing the Division of which the Communication Society was then a part. I was there for two years as part of that.

I am very proud of one incident while I was IEEE Director. People have forgotten this by now, but I’m the guy who proposed the idea of a President-Elect. It’s not mentioned anywhere, and I’m not sure that anybody would recognize it. But when I first became a Director, it became apparent to me that it was difficult to be President for just one year. You come and go and that’s it. You need some training. I knew other organizations had that, like the AAAS, of which I was a member. So I proposed it at one of our meetings, and the idea of a President-Elect was accepted. So now a person comes in, is trained for a year as President-Elect, and then is able to go on as President the year after. I think I might have even proposed having a President for two years.

Hochfelder:

Isn’t there also like a Past President?

Schwartz:

Yes. You stay on as President, then as Past President. So really they are committed for three years, which is very difficult at that level. Anyway, I was proud of that.

I kept up, obviously, my interest in the Communications Society. First I was active in the Communication Theory Committee for a long time; then I helped organize a new Technical Committee on Computer Communications, which has now grown considerably. That was the committee devoted to networking and things like that. Some of my students came in. Ray Pickholtz, my former student, later became active in that committee as well.

In 1984-’85, I was elected president of ComSoc. I might have been vice president before that. I was president for two years. One of our meetings was held abroad, in Amsterdam. I think it might have been one of the first meetings we held abroad, and it worked out very nicely. We had a couple of anecdotes. In Amsterdam, they rolled out the red carpet; they opened up the city for us. We had a dinner engagement at the municipal hall, whatever they call it. The Queen came down to greet us. My wife tells a funny story: the Queen came into the room where the ComSoc governing group was gathered to meet with her, and somebody had said to us, “Number one, be careful how you greet her; she is a Queen, remember. Number two, just these people here, nobody else.” So I’m very different, I guess. She walked in, and I shook her hand rather than bowing or something like that. In America, we don’t do things like that, right? She’s a Queen, so what? Secondly, I beckoned to my wife, “Come on. Come meet the Queen.” I wasn’t supposed to do that by protocol either. So what! Why can’t my wife meet the Queen? Anyway, it was very nice.

The nicest thing was that the Chair of our Awards Committee at that time was a man named Ralph Schwarz, who was also at Columbia. He has since retired. He’s older than I am and a very nice guy. He was to give awards at the awards luncheon that we run annually. He and his wife were both refugees from Hitler’s Germany. They fled to Holland, and he spent a year or two there. They didn’t meet there; they met back in the United States, by coincidence. Ralph still retained some of his Dutch, so when he got up to give the awards, he started speaking Dutch. The Dutch hosts were amazed. Imagine that: ComSoc comes to Holland, and this guy is actually speaking Dutch, which was wonderful. I think people respect you for that. So thank God for Ralph. It was very nice.

It was a good two years. Then I stayed on, of course, as past president, as you normally do, to run the Nominations Committee. The last couple of years I have cut back and I haven’t been involved as much because I feel it is time for young people to take over. But I was active during that period of time in these committees.

In other IEEE matters, I’ve always been involved in various IEEE award groups. I won the IEEE Education Medal in 1983. So, as always happens, I was invited to become a member of the Education Medal Award Committee to select new candidates. I did that for a number of years. The same for the Kobayashi award. We also have an award named for one of our own past presidents and a good friend, Eric Sumner, who had been an executive at Bell Labs and passed away some years ago. I’m on the award committee in his name. So I’ve been on a number of IEEE awards committees.

Hochfelder:

What impact do you think the IEEE Communications Society has had on advancing the state of the art?

Schwartz:

It’s hard to judge. Obviously, like every IEEE society, it enhances the education of its members. It has its publications. It has its conferences. So I think it is through the conferences and the publications that we mostly advance the state of the art. We could argue that it is the engineers at companies like Bell Labs and IBM who advance the state of the art. But they are also the guys who attend meetings and conferences and publish papers. Companies learn from one another that way. I think the educational mission of the IEEE is reflected in ComSoc.

Unfortunately, the whole business of competition coming in has made things a lot more difficult now. I used to love to go to the ComSoc meetings, like the International Conference on Communications, ICC, and Globecom, the Global Communications Conference, and listen to papers from people in industry. They would talk about new systems, whether it was ITT talking about a new switching system or Bell Labs engineers talking about a new system. I would learn a lot from that. As an academic, that is important to me; otherwise, academics just talk to one another. Unfortunately, with competition you now get very little of this. They give you very little information. I can’t blame them, but it is very hard now. You find more academic papers now. I used to like the papers from industry, where you would learn from these guys. It’s important. I think ComSoc, as the leading communications society in the world, has contributed to that in a broader sense.

I see more and more the need for bringing societies together. The communications field now spans many organizations. Steve Weinstein, who was president of ComSoc a couple of years ago, has done a lot of this. He tried to bring different societies together, and was very successful. When I was president of ComSoc, I tried bringing the Communications and Computer Societies together in the area of computer communications. It was difficult because once you are part of an organization, you don’t want anybody intruding on your turf. You want the right to go ahead on your own. The computer communications area belongs to both fields, computers and communications. I remember trying to get the Computer Society to join with us, meeting with their president. It was a difficult situation, because we were pushing ahead in computer communications and it might have been better for the two Societies to work together. It is difficult. Steve and other people have managed to do that; I did not. Maybe I laid the groundwork; I really don’t know. But I couldn’t accomplish that much. We just went ahead with our own journals.