Oral-History:Alan Oppenheim

About Alan Oppenheim

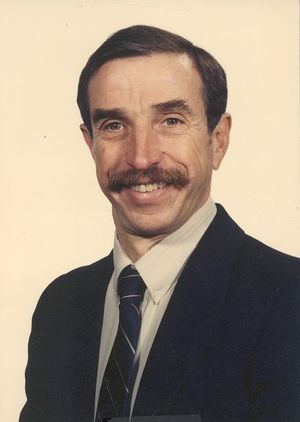

Alan V. Oppenheim was born in 1937 in New York, N.Y. He received simultaneous bachelor's and master's degrees in electrical engineering from MIT in 1961, and a Sc.D. in EE in 1964, also from MIT. He took a position in MIT's electrical engineering department in 1964, and in 1967 also took an appointment at MIT's Lincoln Laboratory. He has held various positions at both institutions since that time, and is currently Ford Professor of Engineering at MIT. His principal research interests are in digital signal processing, where his work has focused on nonlinear dynamics and chaotic signals; speech, image, and acoustic signal processing; and knowledge-based signal processing. He is a Fellow of the IEEE (1977) [fellow award for "contributions to digital signal processing and speech communications"], and the recipient of the ASSP Technical Achievement Award (1977), the ASSP Society Award (1980), the Centennial Medal, and the Education Medal (1988). Oppenheim holds five patents, is author or co-author of over 50 journal articles, and is author or co-author of six major engineering texts including Teacher's Guide to Introductory Network Theory (with R. Alter, 1965); Digital Signal Processing (with R. W. Schafer, 1975); Signals and Systems (with A. S. Willsky, 1983, 2nd ed. 1997); Discrete-Time Signal Processing (with R. W. Schafer, 1989); and Computer-Based Exercises for Signal Processing (with C. S. Burrus, et al., 1994).

The interview began with Alan V. Oppenheim discussing his early educational experiences and his doctoral work at the Massachusetts Institute of Technology. Oppenheim related that he began doctoral research in the field of homomorphic systems and worked with Manny Cerrillo, Tom Stockham and Amar Bose. He discussed the first uses of the computer in the signal processing field and how the FFT was pivotal in gaining acceptance for computer signal processing. Oppenheim recalled that among the first to display interest in computer signal processing were those who worked in the control field, such as Jim Kaiser. He related that the work done at Bell Labs and Lincoln Laboratory was integral to the signal processing field and that the work of Larry Rabiner, Ben Gold, Tom Stockham, Charlie Rader, Jim Kaiser and Jim Flanagan was of great influence. Oppenheim was awarded his Ph.D. in 1964 and began working with Ken Stevens in the speech processing field. He then discovered that speech processing could greatly benefit from homomorphic techniques and related the example of Tom Stockham's restoration of Caruso's recordings. Oppenheim discussed the importance of nonlinear systems in deconvolution and speech processing, and his invention of the homomorphic vocoder. Oppenheim also published several books, including a textbook on nonlinear applications which he co-authored with Ron Schafer. He considers that speech processing technology, including the homomorphic variety, has been used most in communications, especially in compact discs, HDTV and cellular telephony. Oppenheim closed the interview with a brief history of his involvement with the IEEE Signal Processing Society and expressed his belief that the current organization is not selective enough in its promotion of technologies or workshops.

Other interviews covering the emergence of the signal processing field include Maurice Bellanger Oral History, C. Sidney Burrus Oral History, James W. Cooley Oral History, Ben Gold Oral History, Alfred Fettweis Oral History, James L. Flanagan Oral History, Fumitada Itakura Oral History, James Kaiser Oral History, William Lang Oral History, Wolfgang Mecklenbräuker Oral History, Russell Mersereau Oral History, Lawrence Rabiner Oral History, Charles Rader Oral History, Ron Schafer Oral History, Hans Wilhelm Schuessler Oral History, and Tom Parks Oral History.

About the Interview

ALAN OPPENHEIM: An Interview Conducted by Andrew Goldstein for the IEEE History Center, 28 February 1997

Interview # 325 for the IEEE History Center, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user. It is recommended that this oral history be cited as follows: Alan Oppenheim, an oral history conducted in 1997 by Andrew Goldstein, IEEE History Center, Piscataway, NJ, USA.

Interview

Interview: Alan Oppenheim

Interviewer: Andrew Goldstein

Date: 28 February 1997

Place: Cambridge, Massachusetts

Early career and education

Goldstein:

Dr. Oppenheim, to begin can we start with your early career, maybe with your undergraduate education?

Oppenheim:

I came to MIT as an undergraduate in 1955 and then was here for all my degrees, bachelor's, master's, Ph.D., and then got on the faculty, and so this is basically where I've spent most of my life.

Goldstein:

Your undergraduate degree is in electrical engineering?

Oppenheim:

Electrical engineering, yes.

Goldstein:

What was your Ph.D. thesis on?

Oppenheim:

First of all, I came to MIT almost by accident. I didn't know about MIT. I got into electrical engineering not knowing what it was. I feel like I've gone through my whole career walking backwards, but having tremendous good fortune. My master's thesis wasn't in anything related to signal processing. It had to do with ferrite cores, magnetics.

Goldstein:

Was that with Jay Forrester?

Oppenheim:

Well, it wasn't with Jay Forrester. I was actually working with somebody who was at Lincoln Laboratory, trying to understand certain magnetic properties of ferrites. Then when I got into the doctoral program, I got hooked up with somebody named Manny Cerrillo. There were three graduate students at the time: myself, a fellow named Tom Kincaid and another named John McDonald. What we were trying to do was understand what Cerrillo was doing. He was a mathematician. Then around that time a guy named Tom Stockham had come back on the faculty from the Air Force and we started working a little with him. There was some very oddball signal processing stuff going on with Cerrillo. Cerrillo inspired me to go in a direction with my doctoral thesis which had to do with nonlinear ideas for signal processing. Then that became my doctoral thesis. It was called "Homomorphic Systems." Tom Stockham was really inspirational to me, and he saw value in this. Actually, when I graduated and got on the faculty, he got me together with Ron Schafer, who was a graduate student looking for a doctoral thesis. Ron, Tom and I did some stuff building on my doctoral thesis in signal processing. That was how I got into these things. My path into signal processing was my doctoral thesis on nonlinear systems and then applying this to some classes of signal processing having to do with deconvolution and demultiplication. Then, right after I got on the faculty, I connected with Ben Gold through Tom. Ron Schafer was my first doctoral student and then I ended up involved with Lincoln Laboratory. In those early years, there was Tom Stockham, Ben Gold, Charlie Rader, me, Larry Rabiner and Ron Schafer (who were graduate students at the time). That was in the mid-'60s.

Analog signal processing, computers in signal processing

Goldstein:

Can you paint a picture of what was going on in signal processing when you started working on homomorphic filtering?

Oppenheim:

Yes. There were some interesting things. One of them was that signal processing at that time was essentially analog. People built signal processing systems with analog components: resistors, inductors, capacitors, op amps, and things like that. My thesis advisor was actually Amar Bose, and when Tom Stockham came back from the Air Force, he hooked up with Amar. The thing that Bose wanted to do was very sophisticated acoustic modeling for room acoustics. What Tom was suggesting was to do this on a computer. Basically people didn't use computers for signal processing other than for applications like oil exploration. In those instances, you would go off-line, collect a lot of data, take it back to the lab, and spend thousands of hours and millions of dollars processing it. The data is very expensive to collect and you're not trying to do things in real time; you are willing to do them off-line. Actually, I remember when Tom was doing this stuff with Amar and he was in what is now called the Laboratory of Computer Science. Generally the computer and signal processing people said that computers are not for signal processing; they are for inverting matrices or things like that. They said, "You don't do signal processing on a computer." The computer at that point was not viewed as a realistic way of processing signals. The other thing that was going on around that time was that Ben Gold and Charlie Rader at Lincoln were working on vocoders. Vocoders were analog devices that were built for speech compression. But there was the notion that you could simulate these filters on a computer before you actually built them, so that you knew what parameters you wanted. So there was that aspect where techniques for processing signals digitally were starting to emerge. But even there, the initial thought was more simulation than actual implementation.

Goldstein:

The funny thing is that the impression I got from Charlie Rader and other people was that the computer generated a lot of excitement for signal processing. But maybe that's only from the perspective of the people who were working on speech problems. Maybe people working with computers didn't see it.

Oppenheim:

Well, I would say a couple of things about that. One is that computer people could not see the connection between computers and signal processing. I remember speaking to people in the computer field in the early to mid-'60s about computers and signal processing, and the reaction you would get is, "Well, computer people have to know some signal processing because you might need to put a scope on the line to see if you're getting pulses that are disturbing the computer." But the notion that you actually wanted to process signals with computers just didn't connect. I remember very specifically when Tom Stockham was on Project MAC, and he was experiencing tremendous frustration. People in the computer area had no appreciation for what he was doing with the computers for signal processing. Did computers generate a lot of excitement in the signal processing community? I guess what I would say is that there was a very small group of people in the early '60s who felt that something significant was happening here. You could implement signal processing algorithms with computers that were impossible or too hard to do with analog hardware. An example of that was this whole notion of homomorphic signal processing. It was nonlinear and you couldn't realistically do it with analog stuff. In fact, that's why Tom Stockham got excited, because he saw that the stuff I had done my thesis on could be done on a computer. The excitement really got generated when the Cooley-Tukey paper came out. When the FFT hit, then there was a big explosion, because then you could see that by using a computer you could do some things incredibly efficiently. You could start thinking about doing things in real time. You could think of it as the difference between BC and AD. The birth of the FFT was a very significant event.

Jim Kaiser recognized the value of using computers for signal processing even earlier than what we're talking about, although his focus was on digital filters. Jim's field was feedback control. In fact, I took a course on feedback systems from him when he was an instructor here. What happened in the control community is that it gravitated to something called sampled-data control, where essentially what you do is you sample things and then in the feedback path you've got digital filters. Jim got into signal processing through that route and worked for quite a bit of time on digital filter design issues. That was kind of another thread into it. Then the community started to evolve. There was Tom Stockham, Charlie Rader, and Ben Gold at Lincoln Laboratory. I got involved in Lincoln Laboratory shortly after I graduated, through Tom and Ben. There was Larry Rabiner, who was finishing his thesis with Ben Gold and who then went to Bell Labs. When Ron Schafer graduated, he went to Bell Labs and there was this kernel there. Some people would disagree with this view, but I would say that a lot grew out of the activity at Lincoln through Ben, Charlie, Tom, and myself. The activity at Bell Labs came through Ron, Larry, Jim Flanagan, and Jim Kaiser. Then there was activity at IBM after the Cooley-Tukey algorithm exploded.

Doctoral thesis

Goldstein:

Can we go back and talk about your thesis? When did you start working on it and when did you finish?

Oppenheim:

I started my doctoral program in '61, and handed in my thesis in '64. I got the key idea for my doctoral thesis riding a chair lift at Stowe, Vermont and I can remember exactly when it hit. I believe that it's really important to be involved in things besides your research, such as sports, because your subconscious creativity bubbles to the surface at the most amazing times. That's what happened with my doctoral thesis.

Goldstein:

Well tell me what problem you were trying to solve. Is it possible to describe what technique you were trying to achieve?

Oppenheim:

It actually wasn't a problem I was trying to solve. I would say it was more like what came out of it was a solution in search of a problem, which has been characteristic of my research ever since. The exact sequence of events was that Cerrillo kept telling me that I should learn about group theory, because there was a lot of new stuff there. So I took a course in group theory. One of the things that's in traditional signal processing is the whole concept of linearity and something called superposition. Superposition applies to linear systems. When I took this group theory course, the guy who was teaching it was very careful to talk about group operations in an abstract way. He would call it "group addition" but it would be an abstract operation, because these are not numbers, these are just things in a group. There is the concept of homomorphisms and isomorphisms between groups, and by definition a homomorphism satisfies superposition.

I honestly remember being in that chair lift and the idea hitting me. Addition does not have to mean algebraic addition; all it has to satisfy is the algebraic postulates of addition, which multiplication and convolution do. There are lots of operations that satisfy that. So then you can think of a class of nonlinear systems that satisfy superposition not in the additive sense but in some other algebraic operation. So I was thinking about how you could end up with tools for nonlinear systems that are as rich as the tools for linear systems. I guess that's the way to phrase it. The key was this notion of representing mappings from input signal space to output signal space where the addition operation is not algebraic addition. The title of the thesis is "Superposition in a Class of Nonlinear Systems," which sounds like an oxymoron, and which was done on purpose. What happened was that in the thesis this was all done fairly abstractly. Then the question is "What are some operations?" Some examples of operations that have this same algebraic property are multiplication and convolution. Problems of separating signals that have been multiplied and separating signals that have been convolved are very important classes of problems in signal processing. So there is the idea of using these homomorphic systems to do the signal separation, and these are nonlinear systems. They involve operations that are not easy to do in analog hardware, and so it just naturally gravitated to using a computer to at least try some of these things out.
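
To make the idea concrete, here is a minimal Python sketch of a homomorphic system for multiplication, assuming the simplest construction: take the logarithm (which maps products to sums), apply an ordinary linear system, then exponentiate. The three-point moving average and the function names `linear_system` and `homomorphic_system` are illustrative choices, not anything taken from the thesis. The checks show superposition holding with multiplication playing the role of "addition" and raising to a power playing the role of scalar multiplication, while ordinary additive superposition fails.

```python
import math

def linear_system(x):
    """Illustrative linear system: 3-point moving average, zero-padded at the edges."""
    n = len(x)
    return [sum(x[j] for j in (i - 1, i, i + 1) if 0 <= j < n) / 3.0 for i in range(n)]

def homomorphic_system(x):
    """Hypothetical homomorphic system for multiplication: exp(L(log x)).

    log maps products to sums, L is linear, and exp maps sums back to
    products. Inputs must be strictly positive for the real logarithm.
    """
    return [math.exp(v) for v in linear_system([math.log(v) for v in x])]

x1 = [1.0, 2.0, 4.0, 8.0]
x2 = [3.0, 0.5, 2.0, 1.5]

# Generalized superposition: H(x1 * x2) == H(x1) * H(x2)
lhs = homomorphic_system([a * b for a, b in zip(x1, x2)])
rhs = [a * b for a, b in zip(homomorphic_system(x1), homomorphic_system(x2))]

# Generalized scaling: H(x1 ** c) == H(x1) ** c
c = 2.5
scaled_lhs = homomorphic_system([a ** c for a in x1])
scaled_rhs = [a ** c for a in homomorphic_system(x1)]

# Ordinary additive superposition does not hold for this system
add_lhs = homomorphic_system([a + b for a, b in zip(x1, x2)])
add_rhs = [a + b for a, b in zip(homomorphic_system(x1), homomorphic_system(x2))]

print(all(math.isclose(a, b) for a, b in zip(lhs, rhs)))                # True
print(all(math.isclose(a, b) for a, b in zip(scaled_lhs, scaled_rhs)))  # True
print(all(math.isclose(a, b) for a, b in zip(add_lhs, add_rhs)))        # False
```

The same pattern, with a Fourier transform and a complex logarithm in front and the inverse operations behind, gives a system in which convolution plays the role of addition.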

Goldstein:

Can you remember any of the early implementations in some of the homomorphic techniques?

Oppenheim:

There were a couple of things. One was that Tom Stockham and I got a patent on an automatic gain control system which would essentially be like the Dolby system. Dolby had just emerged at the time. This was an idea for doing automatic gain control where you model the signal as the product of a slowly varying gain function and the underlying signal. Then what you do is separate out those two pieces and modify them separately. That's how you achieve gain control.
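
A rough sketch of the demultiplication idea, assuming the model s[n] = g[n] * v[n] with a positive, slowly varying gain g: the logarithm turns the product into a sum, a lowpass filter (here a plain centered moving average, an arbitrary choice) keeps mostly the gain term, and exponentiating and dividing removes it. The helper names `moving_average` and `homomorphic_agc`, the window length and the test signal are all hypothetical; this is not a description of the patented system.

```python
import math

def moving_average(x, half_width):
    """Centered moving average used as a simple lowpass filter (windows truncated at edges)."""
    n = len(x)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out

def homomorphic_agc(s, half_width=8, floor=1e-12):
    """Sketch of gain control by demultiplication.

    Assumes s[n] = g[n] * v[n] with g[n] > 0 slowly varying. Taking
    log|s| turns the product into a sum, log g + log|v|; lowpass
    filtering keeps mostly the slowly varying log g, which is then
    exponentiated and divided out. `floor` avoids log(0).
    """
    log_mag = [math.log(max(abs(v), floor)) for v in s]
    gain = [math.exp(v) for v in moving_average(log_mag, half_width)]
    return [v / g for v, g in zip(s, gain)], gain

# Illustrative input: an exponential gain ramp times a unit-magnitude carrier.
N = 64
g = [math.exp(0.05 * n) for n in range(N)]
v = [1.0 if n % 3 else -1.0 for n in range(N)]
s = [a * b for a, b in zip(g, v)]

out, gain_est = homomorphic_agc(s)
# Since log|v| = 0 and log g is linear in n, the centered average is exact
# away from the edges, so the output there recovers the carrier.
print(max(abs(out[n] - v[n]) for n in range(8, N - 8)))
```

With a general carrier the estimated gain also absorbs the local average of log|v| as a multiplicative factor; the unit-magnitude carrier is chosen here only so that the recovery is exact in the interior.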

Goldstein:

So you can separate them or you can deconvolve them?

Oppenheim:

Well, you would demultiply. Because you are thinking of them as multiplying.

Goldstein:

All right.

Speech processing and recording restoration

Oppenheim:

Now Tom had also taken the idea of demultiplication and used it to develop a model of vision. He wrote some very classic papers on using this homomorphic model for images and doing some image processing based on this notion of homomorphic filtering. It reminded me of something else which was interesting. When I finished the thesis I decided I was sick of it, and so I thought what I would do is change fields. I would do something different. So what I decided to do was get involved with Ken Stevens and his group on speech stuff. He was very well known in the speech area, and I thought I was going to be taking off in a totally different direction. But within a couple of weeks I realized that speech was the perfect application for deconvolution using homomorphic filtering, because in speech processing deconvolution is a really key aspect. You think of speech in an engineering sense as being modeled as the convolution of the air flow through the vocal cords and the impulse response of the vocal cavity.

Goldstein:

Right.

Oppenheim:

Then all speech compression systems and many speech recognition systems are oriented toward doing this deconvolution, then processing things separately, and then going on from there. So speech really became a significant focus for me for applying homomorphic signal processing and it has become one of the important tools for that. Let me just mention one other application, because if you haven't heard about it you should. A very different application of homomorphic deconvolution was something that Tom Stockham did. He started it at Lincoln and continued it at the University of Utah. It has become very famous, actually. It involves using homomorphic deconvolution to restore old Caruso recordings.

Goldstein:

I have heard about that.

Oppenheim:

Yes. So you know that's become one of the well-known applications of deconvolution for speech.

Goldstein:

There's the original signal which is then degraded by some distortion, and so you can try to pick out the original signal?

Oppenheim:

You mean for Tom's stuff?

Goldstein:

Yes.

Oppenheim:

Well, there were two things. What happens in a recording like Caruso's is that he was singing into a horn to make the recording. The recording horn has an impulse response, and that distorts his voice, the way my voice changes when I talk like this. [cupping his hands around his mouth]

Goldstein:

Okay.

Oppenheim:

So there is a reverberant quality to it. Now what you want to do is deconvolve that out, because what you hear when I do this [cupping his hands around his mouth] is the convolution of what I'm saying and the impulse response of this horn. Now you could say, "Well, why don't you go off and measure it? Just get one of those old horns, measure its impulse response, and then you can do the deconvolution." The problem is that the characteristics of those horns changed with temperature, and they changed with the way they were turned up each time. So you've got to estimate that from the music itself. That led to a whole notion which I believe Tom launched, which is the concept of blind deconvolution: being able to estimate, from the signal that you've got, the convolutional piece that you want to get rid of. Tom did that using some of the techniques of homomorphic filtering. Tom and a student of his at Utah named Neil Miller did some further work. After the deconvolution, what happens is that you apply some high-pass filtering to the recording. That's what it ends up doing. What that does is amplify some of the noise that's on the recording. Tom and Neil knew Caruso's singing. You can use the homomorphic vocoder that I developed to analyze the singing and then resynthesize it. When you resynthesize it you can do so without the noise. They did that, and of course what happens is not only do you get rid of the noise but you get rid of the orchestra. That's actually become a very fun demo which I still play in my class. This was done twenty years ago, but it's still pretty dramatic. You hear Caruso singing with the orchestra, then you can hear the enhanced version after the blind deconvolution, and then you can also hear the result after you get rid of the orchestra. Getting rid of the orchestra is something you can't do with linear filtering. It has to be a nonlinear technique. Now RCA actually made a recording of Caruso after removing the orchestra. They didn't market it, but there is an RCA Red Seal recording of the enhanced Caruso which RCA marketed for a while.

Goldstein:

That's what I wanted to ask you about, whether Tom's work was released in general, or whether it was a special case?

Oppenheim:

Actually, following that he started a company called Soundstream, and they got involved in enhancement. They did a lot of digital recording and mastering. Unfortunately the company wasn't successful. Tom was really ahead of his time. He was the first person in digital audio. There was an event for Tom when he retired that a lot of us attended, including myself, Ron Schafer, Charlie Rader, and a lot of other people. Several people gave "five-minute" presentations, which tended to get longer. They were tidbits and roasting and that kind of thing. There's a lot of history that comes out in the process, and there's a videotape from that which I have someplace. I'm sure I could find it, if you feel it would be useful to have.

Linear and nonlinear systems

Goldstein:

In the real world how many systems were successfully modeled linearly, and how many were just being done that way because that was the only technique? Was there really immediate demand for a nonlinear approach?

Oppenheim:

Well, first of all there is the issue of modeling, which is kind of like an analysis, and there are certainly a lot of linear things and a lot of nonlinear things. Then there's the issue of what you would call synthesis. I have signals and I want to do some processing on them. Do I do the processing linearly or nonlinearly? There is this old joke about the drunk who was groping around under the streetlight and the cop says to him, "What are you doing?" and he says, "I've dropped my keys," and the cop says, "Where did you lose them?" and he said, "I lost them in the middle of the block." "Well why are you looking here?" "Because the light's better." People use linear systems because you have analytical tools to understand what you are doing.

Goldstein:

Right.

Oppenheim:

To the extent that you can develop tools for nonlinear systems, often there are significant gains. Nonlinear by definition is not linear, which means you are defining it by something it doesn't have, and how do you exploit that? So there are lots of nonlinear systems that people have always used. Often they are developed in some empirical or ad hoc way. Nonlinear techniques in signal processing are really important, and we're still trying to discover classes of nonlinear techniques. If you ask me where homomorphic signal processing fits in importance in the toolbox of all signal processing techniques, I would say it has become a useful tool. There are particular places where it has a heavy role, and that's largely in some deconvolution problems and speech processing. If you ask me to rank it relative to the FFT, the importance of the FFT exceeds it by many orders of magnitude. So it's a class of techniques that's useful, and belongs in the toolbox, but not on the level of tools like the FFT.

Goldstein:

As you were finishing your work, and starting to promote it, what were the options for nonlinear systems at that time?

Oppenheim:

Not much, actually. In terms of formalisms for nonlinear systems, there were some things that people had already done. There was some work using what were called Volterra series. This is work that Norbert Wiener had initiated with Y. W. Lee. Also, a guy at MIT named Marty Schetzen was working on it. But Volterra series, back in those days, never really turned out to be useful for a number of technical reasons. But it's interesting that Volterra series have been rediscovered in the last ten years or so. Again, it's hard to see how to use them. But that's one of those techniques or formalisms for nonlinear systems. Amar Bose did his doctoral thesis on nonlinear systems. As far as I'm aware, that work didn't really lead to any practical tools for designing nonlinear systems. So in the signal processing community there were linear techniques and then nonlinear things that were done that tended to be somewhat more empirical or ad hoc. I might, as I think about it, end up wanting to modify that somewhat, because I am imagining somebody reading it and saying, "Well, how in the world can you say that when you used bang-bang servos all the time?" Many applications are highly nonlinear, such as the kind of nonlinear work that people had done in various detection problems. But I guess I would still stick with it: linear processing followed by some nonlinearity that got attached to it.

Publications and influence of homomorphic filtering

Goldstein:

Do you remember how you circulated your ideas just as you were finishing up? Was it through publications?

Oppenheim:

Well, actually that's an interesting question too, because it reminds me of a couple of things. One is that the first paper that I submitted on homomorphic systems was rejected, and I found that very depressing.

Goldstein:

Do you remember where you submitted it?

Oppenheim:

I don't. I remember saying to Y. W. Lee at one point, "What's really depressing me is that nobody seems to be paying attention to it." He said, "It's beautiful stuff. Just keep trying, and eventually people are going to recognize it." It's interesting that he was right. I've heard stories like that many times before where if there is an idea that's a paradigm shift, it's oddball enough that it doesn't just extrapolate what people have done before. It's hard to recognize its value early on, and it's quite likely that it will be controversial. I remember Sven Treitel telling me that the first paper that he and Enders Robinson wrote on linear prediction was rejected. I've heard lots of stories since then. That's one of the things I tell my graduate students, actually. The thing they should hope for is that the first paper they submit gets rejected.

Goldstein:

Either it is a sign of importance--or not.

Oppenheim:

Right. I have said to sponsors, not necessarily joking, that when they take a proposal of mine and send it out for review, my comment is, "I hope that it gets at least one negative review." Because then that means that there is something off the beaten path in there.

So when I had just finished my thesis and I was starting my career, it was kind of depressing that people didn't seem to appreciate what I thought was intriguing. I believed so much in how neat the idea was that I remember saying to my new wife, "This is going to make me famous some day." She and I talked about it. Another thing I remember is when I was doing my thesis, I got really depressed at one point about it because I couldn't seem to get anybody interested in it. I remember Amar Bose saying to me, "Do you believe in it?" and I said, "Yes, this is really neat stuff." He said, "Well look, Al, this is probably the last time in your career that you are going to have the opportunity to spend all your time pursuing some ideas that you really believe in no matter what anybody else thinks." So he said, "You ought to stick with it." I remember that. I also remember that around the time I graduated I was talking to Tom Stockham, and I said, "It's kind of depressing that nobody is picking up on this stuff," and Tom said, "It's not depressing."

He said, "Actually what's great is that we have this all to ourselves for a while until other people really discover it." It's like finding a field full of diamonds: you can just walk around and pick up all the diamonds you want until somebody else discovers it. So he was saying, "It's not depressing. It's exciting."

Goldstein:

Can you remember any milestones with regard to homomorphic filtering catching on?

Oppenheim:

I think one of the things that spurred things on came from a totally different direction. Some people at Bell Labs had come up with an idea that was somewhat similar from a different direction.

Goldstein:

Is this Cepstral Analysis?

Oppenheim:

Yes. They had developed the notion of looking at the logarithm of the power spectrum to detect echoes. They weren't focused at all on deconvolution; they were focused on detecting echoes. This process of the logarithm of the power spectrum loses a lot of information, but it gets it into the same domain as where I was mapping things, which is taking the Fourier transform of the logarithm of the Fourier transform. Now I wanted to do this to separate things, pull out just a piece of what's left and end up doing deconvolution. So the Cepstrum was generating some excitement. Then when it was recognized that there was some connection between these, then people who had been looking at detection, in particular some people in the seismic community, got excited about the possibility of using this for deconvolution of seismic data. Later, when I was working at Lincoln Laboratory I developed what was called a homomorphic vocoder, and that got published in papers and people got excited about it. Tom's work on the homomorphic processing of images also got published. If I was going to point back to an exciting event at that time, it was what was happening with the audio and electro-acoustics group. It was a Group, not a Society, and it was almost dead. Bill Lang at IBM was looking for some way to revive it, and the FFT had just gotten exposed, so this community got pulled together from people who were looking for exciting ways of using the FFT and also for ways of reinvigorating this audio and electro-acoustics group. There was a mindset among these people to look at everything optimistically rather than pessimistically, you know, there's a pony in there someplace.
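
The Bogert-Healy idea described here can be sketched in a few lines: an echo of amplitude a at delay d multiplies the spectrum by (1 + a e^{-jwd}), so the log power spectrum picks up a ripple whose inverse transform shows up as a peak at "quefrency" d. This is a minimal Python sketch under stated assumptions: a white-noise source, a circular echo (so the DFT relation is exact), and a hand-written radix-2 FFT; the delay of 20 samples, the amplitude 0.6 and the function names are arbitrary illustrative choices.

```python
import cmath
import math
import random

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def ifft(X):
    """Inverse FFT via the conjugation identity."""
    n = len(X)
    return [v.conjugate() / n for v in fft([complex(v).conjugate() for v in X])]

def power_cepstrum(x):
    """Inverse transform of the log power spectrum (the cepstrum used for echo detection)."""
    log_power = [math.log(abs(v) ** 2) for v in fft(x)]
    return [v.real for v in ifft(log_power)]

# Illustrative test signal: white noise plus a circular echo at delay 20.
N, delay, alpha = 512, 20, 0.6
rng = random.Random(1)
w = [rng.gauss(0.0, 1.0) for _ in range(N)]
s = [w[n] + alpha * w[(n - delay) % N] for n in range(N)]

c = power_cepstrum(s)
# Skip quefrency 0, which only holds the overall log level.
peak = max(range(1, N // 2), key=lambda n: c[n])
print(peak, round(c[peak], 2))
```

Because the log power spectrum discards phase, this version can locate the echo but cannot by itself undo it; that is the gap the complex logarithm fills when the goal is deconvolution.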

Then there was the Arden House conference, which was the first of its kind. I presented a bunch of my stuff, and the view that people took toward it was, "This is really fantastic because it's innovative, it uses the FFT, and it has to do with speech which is like audio. This is just the kind of thing that we hope will see the light of day." So I would say that kind of helped to launch it.

Goldstein:

Your original work didn't use the FFT, so you must have adapted it in '65.

Oppenheim:

Yes. I knew about the FFT. The Cooley-Tukey paper came out in '65, but I think I knew about it in '64 or so. I finished my thesis, I started working with Ken Stevens, and then I saw this issue of deconvolution for speech that I could use. But what it requires is taking the Fourier transform of the log of the Fourier transform, and figuring out how to compute the Fourier transform. I'm pretty sure it was Tom Stockham who was working with Charlie Rader on trying to understand this algorithm. He introduced me to it and made the point that you can do it very efficiently. Actually, I remember saying to Tom in a conversation, "Well, if this FFT algorithm is so efficient, then why don't you use it for filtering? You can take the data and the filter impulse response, transform them, multiply them, and do an inverse transform. It's that efficient." Tom took that notion and ended up writing a classic paper. He gives me credit for suggesting this stuff. He developed it into another classic paper on what's called high-speed convolution, because he was interested in doing convolution.
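
The filtering idea described here (transform the data and the impulse response, multiply, inverse-transform) can be sketched in a few lines of Python with NumPy. The one subtlety is zero-padding: the DFT implements circular convolution, so both sequences are padded to at least len(x) + len(h) - 1 points (rounded up to a power of two in this sketch, as radix-2 FFTs prefer) to get ordinary linear convolution. This is a generic illustration with an assumed function name, not a reconstruction of Stockham's paper.

```python
import numpy as np

def fft_convolve(x, h):
    """Linear convolution of x and h via FFT: transform, multiply, invert."""
    n_out = len(x) + len(h) - 1
    # The DFT computes circular convolution; padding to at least n_out points
    # makes it equal the linear convolution. Round up to a power of two.
    n_fft = 1 << (n_out - 1).bit_length()
    X = np.fft.rfft(x, n_fft)
    H = np.fft.rfft(h, n_fft)
    y = np.fft.irfft(X * H, n_fft)
    return y[:n_out]

y = fft_convolve([1.0, 2.0, 3.0], [0.0, 1.0, 0.5])
# y ≈ [0.0, 1.0, 2.5, 4.0, 1.5], matching np.convolve up to rounding
```

For long inputs the cost is dominated by the transforms, roughly N log N operations, instead of the product of the two lengths for direct convolution.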

This all occurred before Arden House, so essentially I had implemented some of these ideas and was able to present them and show stuff. Then there was a paper that Tom, Ron Schafer, and I wrote called "Nonlinear Filtering of Multiplied and Convolved Signals" that was first published in the Proceedings of the IEEE and was then republished in the IEEE Transactions on Audio and Electroacoustics.

Goldstein:

What about homomorphic filtering made it difficult to implement with analog systems? What did the computer offer?

Oppenheim:

Well, it depended on what operation was being implemented. If it was demultiplication, you needed a logarithmic operation. For deconvolution, you need the Fourier transform, and beyond that you need a way to get the complex logarithm of a complex-valued function. The Bogert-Healy cepstrum didn't have that issue. It had the spectrum issue, since you have to get the power spectrum, but it didn't deal with a complex logarithm. It didn't involve phase. So being able to get a Fourier transform with very high resolution and get its complex logarithm is something that you can't do with analog.
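
The difference between the power cepstrum and what homomorphic deconvolution needs can be seen in a short NumPy sketch of the complex cepstrum. The complex logarithm log X = log|X| + j·arg X requires a continuous ("unwrapped") phase rather than the principal value returned by np.angle, and the linear-phase term contributed by a pure delay is removed before inverse-transforming. This follows the common textbook recipe; the function name, the even FFT size, and reliance on np.unwrap (which assumes the DFT is sampled finely enough that the true phase never changes by more than π between bins) are assumptions of this sketch.

```python
import numpy as np

def complex_cepstrum(x, n_fft):
    """Complex cepstrum: inverse FFT of log|X| + j * (unwrapped phase of X).

    Returns (cepstrum, delay), where delay is the integer number of samples
    of pure delay removed from the phase. n_fft is assumed even and large
    enough that the cepstrum does not alias noticeably.
    """
    X = np.fft.fft(x, n_fft)
    log_magnitude = np.log(np.abs(X))
    # np.angle gives only the principal value in (-pi, pi]; the complex log
    # needs a continuous phase, so unwrap it along the frequency axis.
    phase = np.unwrap(np.angle(X))
    # For real x, X at omega = pi is real, so the unwrapped phase there is a
    # multiple of pi; treat that multiple as a linear-phase (delay) term.
    half = n_fft // 2
    delay = -int(round(phase[half] / np.pi))
    k = np.arange(n_fft)
    phase = phase + np.pi * delay * k / half
    return np.fft.ifft(log_magnitude + 1j * phase).real, delay

c, d = complex_cepstrum([1.0, -0.5], 256)
# Series check: log(1 - 0.5 z^-1) = -sum 0.5**n / n * z^-n, so c[n] ≈ -(0.5**n)/n for n >= 1
```

Delaying the input, for example to [0, 0, 1, -0.5], should change only the reported delay (2) and leave the cepstrum itself the same, which is what makes the phase handling usable for deconvolution.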

IEEE, Arden House, and professional communities

Goldstein:

To jump back now, you were talking about Arden House, and I wonder if you can try to recreate for me the spirit at Arden House. Were people looking at the future or thinking in terms of finished systems? Just what was going on?

Oppenheim:

Arden House was a happening, you know. There was tremendous excitement. The people who were there really sensed that there was something magic happening, and that it was an opportunity. In some sense, we had a tiger by the tail. There's another piece to it. You know how often there is the feeling that, with the good ideas you have, you've got to be really careful about what you say, because other people may run with them? You have to be very protective. The spirit at Arden House was: "There is a gold mine here. There is more than enough for everybody! If I give away my five good ideas this morning, I'll have another five good ideas tonight, so I'm not worried about that." The excitement, the synergy, the spirit of collaboration: that was very strong at Arden House. And of course that kind of thing builds on itself. So there was definitely the feeling that something was really going on. It was also clear with the audio and electroacoustics group and the Digital Signal Processing Committee that there was a tremendous amount of work to be done and that we all had to roll up our sleeves and put our shoulders to the wheel. The reason I'm bringing this up is that when I talk to my younger colleagues about this, and they talk about IEEE committees, like those in the Signal Processing Society, they describe them as being super political. There is a lot of in-fighting, there is a lot of back-stabbing, and there are people who volunteer for these committees not because they want to put their shoulder to the wheel but because it's important for their resume, because they want to be president of the society someday or they want to get into the National Academy or something like this. That's typical of something that has gotten too big and has matured somewhat. Back in those early days the whole spirit was different. It was, "We've got a lot of work to do," and it was like the pioneer days.

Goldstein:

Were there people participating at all phases of their own career? You are describing almost a young, enthusiastic spirit, and I wonder if that's located in the technology that is being discussed or the career stage of the people involved.

Oppenheim:

This was shortly after I'd gotten my degree, so I was about thirty. Ben Gold is fifteen years older than me, so he was about forty-five. Hans Schuessler probably was about Ben's age. I think maybe Manfred Schroeder was at that first Arden House; I can't quite remember. So no, I would not say that it was just the young guys. Bill Lang was kind of focused on rebuilding the audio and electroacoustics group, and that's what he saw as a mission. Sometimes the young guys come in and push the old guys out of the way, saying "This is now our generation and we play our own music." I would tend to describe Arden House as having a different spirit. I would say that what happened is that the more senior people recognized the potential for rejuvenation by encouraging what they saw as the creativity coming from some of the other people. So in that sense there are two family models. One family model is that the teenagers finally grow up, kick their parents out of the way, and say "All right, now this is our house and don't get in our way." Then there is another model, which you can think of as the much more stable multi-generational family, where there is the wisdom of the older generation, there is the enthusiasm and energy of the younger generation, and the synergy from that has the magic. I would describe Arden House, that first Arden House, much more in that spirit. But as you try to paint a historical picture, I think that contrast in a general sense is important. I remember very specifically in the early '60s that the place that should have picked up digital signal processing was the Circuit Theory Transactions. They didn't. There was an arrogance there. They were into synthesis of RLC networks and were not open. That group had matured, and they had their club and way of looking at things, and they missed an opportunity. I would say our society probably is missing some opportunities now. When you think about the history of these things, I would say this pattern that we are talking about is very common.

Goldstein:

Well, I think one important thing to get right with respect to that issue is Bill Lang's role.

Oppenheim:

Yes.

Goldstein:

I wonder if you have any insight into what motivated him and what he was after.

Oppenheim:

Bill was at IBM, and my sense is that Bill saw two things and he saw a way to marry them. One was the FFT coming out of the lab where he was involved. I forget what his managerial role was relative to Jim Cooley. So one is that you want a platform for this. The other is that Bill was heavily involved in the audio and electroacoustics group and the Transactions was dying. How do you rejuvenate it? What he saw was an opportunity.

Goldstein:

Like a paternalistic feeling for the society, that he wanted to see it thrive.

Oppenheim:

That's right. But you can do that kind of thing in ways that are counterproductive. What he saw was a win-win opportunity. There was a platform for some work, signal processing, that was having trouble. It wasn't getting picked up by other IEEE groups. There was no real home for it. If some other group had come forward with enthusiasm, the audio and electroacoustics group would have died. What Bill saw was a win-win thing.

Goldstein:

You spoke before of the wealth of ideas in those early days, maybe at Arden House, among the participants there. Do you think most of those ideas have developed into something valuable, or do you recall any fads or dead ends?

Oppenheim:

Well, first of all, if you have an environment where a lot of ideas are being poured out and they all work out, then clearly people have not been uninhibited enough, because they shouldn't all work out. That's too conservative. It would be like a high-risk, guaranteed-payoff situation. There are some things that come to mind that people were talking about relative to particular filter structures, and eventually, when you look at them, they don't make sense. Because of the way the technology evolved, they turned out to be much less interesting or important than they might have been had the technology gone off in some very different direction.

Digital signal processing textbook and teaching

Goldstein:

One other major topic I wanted to talk about was the textbook you wrote with Schafer. Tell me something about how that came about and what you are trying to accomplish with it.

Oppenheim:

I took a leave of absence from MIT in 1967 to spend two years at Lincoln Laboratory, and then I came back to the faculty in '69. That's when I decided that I wanted to start a digital signal processing course. But there weren't any textbooks. There was the book that Charlie and Ben wrote that Tom and I had a chapter in, but that wasn't comprehensive enough to use as a textbook in a course. So when I came back I started a course, which is still going. I started writing a lot of notes for it, and in the process of writing the notes I felt that I wasn't going to be able to finish without a co-author. I couldn't think of anybody better to invite into the project than Ron. He was down at Bell Laboratories, and when I asked him about it he said that he and Larry were thinking of a book. What ended up happening then is that Ron joined me on the textbook, and Larry and Ben wrote a companion book that was more oriented toward industry than toward the classroom. So that's how it started. Early on, I sent a prospectus and sample chapters to McGraw-Hill, Wiley, and Prentice-Hall. Prentice-Hall had, even before then, visited me a bunch of times encouraging me to write a textbook for this course, so they were always very enthusiastic. Of the responses I got back from Wiley and McGraw-Hill, one of them rejected it, and the other said, "We think this is a very limited market, but we'd be happy to look at future versions of it." Essentially no enthusiasm. Prentice-Hall was saying, "When can we publish it? When can we publish it?" So we ended up publishing it with Prentice-Hall. Twenty-twenty hindsight is always great, but, again, I think it was an indication that it was enough of a paradigm shift that it was easy to have missed it.

Goldstein:

Was there any particular point of view you were trying to promote in the book? If you write a seminal book it's an opportunity to do a lot of shaping, and I wonder whether you were concerned about those issues or in retrospect you notice some effect like that.

Oppenheim:

Well, the thing that I recognized at the time was that there was no book like it. There was no course like it, either, and so this would be the first real textbook in this field. When we wrote it, we imagined that if it were successful, it would be the basis for courses in lots of other schools. If I were going to identify a viewpoint, I would say the following: a traditional way that a lot of people viewed digital signal processing was as an approximation to analog signal processing. In analog signal processing, the math involves derivatives and integrals. You can't really do that on the computer, so you have to approximate it. How do you approximate the integral? How do you approximate the derivative? The viewpoint that I took in the course was that you start from the beginning, recognizing that you're talking about processing discrete-time signals. Where they come from is a separate issue, and the mathematics for them is not an approximation to anything. Once you have things that are discrete in time, then there are things that you do with them. There are examples that I used in the course right from the beginning that clearly showed that if you took the other view it cost you a lot: you would do silly things by taking analog systems, trying to approximate them digitally, and using that as your digital signal processing. I would say that was a strong component of it. There's a new undergraduate course that I'm involved in here with a fellow named George Verghese. It will probably end up as a textbook in four or five years. When you asked the question about what vision I had back then, it made me think of the vision I have now in this new course. There's no course like it in the country, there's no textbook for that course, and what I believe is that when we end up writing that book, it will launch this course in lots of other schools.

Goldstein:

Was there some external issue that caused you to de-emphasize something which you wish you hadn't, like time pressure, anything even as mundane as that? When you were done with the book, did you think it was a fair representation of the field of digital signal processing? Were you satisfied with how it covered it?

Oppenheim:

Well, when the book came out we were happy with it. After a period of time we were less happy with it, and that's why we essentially rewrote it. Your question triggers two things in my mind. One is that, of course, it was written shortly after the FFT came out, and the book devotes a lot of energy to the FFT and all the versions of it and the twists and cute things. As I teach the course now, I de-emphasize a lot of that, because the FFT is just an algorithm, and if you're not going to program it there are a lot of these things you don't need to know. It's interesting to note some aspects of how it evolves and what makes it so efficient, but you don't need all the little quirks and things like that. So I would say we were overly enamored with that at the time. It was big news.

Goldstein:

Right.

Oppenheim:

The other thing that crosses my mind about that book is something that Ron and I agonized over a lot, which was the chapter on spectral estimation. It was a topic that we didn't understand well when we were writing that chapter, and we spent a lot of time trying to explain it in a way that was easy to follow. I remember that up until the last minute we thought about taking that chapter out, because we were worried that people who knew spectral estimation would read it and see our description as wrong or naive. It turns out to have been one of the best chapters in the book, because it's a topic that's very hard to understand in general, and a lot of people told us that it's the best description of it they've ever seen. So it's one of those things where we thought about taking it out, and we keep saying we're really glad we didn't.

Goldstein:

So you had the book now, and then you started teaching the class, and how did that go? Did it attract a lot of interest?

Oppenheim:

Yes. I remember the first semester that it was offered here. I was figuring it would have about twenty students, and I remember that it was wall-to-wall people out in the corridor.

Goldstein:

Would this be 1970?

Oppenheim:

It was the fall semester of '70, yes. I also remember the first teaching assistant in that course was Don Johnson, who also was taking the course at the same time. He was a very smart guy who is now a dean at Rice University. The course always had significant enrollment. It was a popular course.

Goldstein:

I still think of 1970 as being fairly early. The community was fairly small.

Oppenheim:

Yes.

Goldstein:

How do you explain the fervent interest among the undergraduates?

Oppenheim:

I think because by that time people had heard about the FFT. So I think that was probably the draw. It wasn't digital filters, it wasn't speech. There's an interesting thing about students. If you want to know where the future lies, put your ear to the ground and listen to what the students are sensing. Because they are thinking this way all the time. They are thinking not what's hot now, but what's going to be hot in ten years. They're not sitting around thinking hard about it, but if you think about the fact that there are thousands of students with their view projected toward the future, and then you try to integrate over that and get a sense of what they are sensing, it's usually pretty perceptive. It's an interesting aspect of being on a faculty of a place like this. If your stuff is becoming irrelevant, you see it in the attendance at your classes or the number of students who come by to talk to you about thesis topics or something like that. They know where the action is. So I think that's what it was. Now, did they see that there would be DSP microprocessors and a compact disk and a Speak & Spell some way or another? Of course not. They had no clue that that's where the future was going.

Goldstein:

How about your own research after that period, in the '70s and beyond?

Oppenheim:

I got involved in a lot of work on filter design, speech processing of various kinds. The nature of my research has always been solutions in search of problems, in the same spirit as what happened with my doctoral thesis. So I tend not to end up looking for problems to solve. I tend to look for intriguing threads to tug on. I like looking for paradigm shifts.

Goldstein:

Well, in general do the problems follow the solutions, or is that a hit or miss proposition?

Oppenheim:

It's hit and miss. I would say something that's characteristic of my research is that it tends to be highly creative. I know that's immodest to say. It tends to be solutions in search of problems. Probably the stuff that I do does not by itself solve the problem, but it adds something to the thinking about the problems it ends up attaching itself to. I would say that's what it is. Recent work that I've done in collaboration with various students is on fractals and a notion called fractal modulation. An intriguing idea. Would I invest in a company that does fractal modulation? No. There are a number of papers that I've written and doctoral theses on using chaotic signals in interesting ways, and it's creative, it's interesting. Do I think it's going to make a big splash? Maybe.

Research applications

Goldstein:

Going as far back as the '70s, have any of your research lines made an impact?

Oppenheim:

I'm hesitating, because I'm trying to remember what some of those were, and it's hard to answer questions like that without worrying about sounding immodest. I would certainly say that the work that I did on quantization was more like a problem that needed solving.

Goldstein:

What is the problem?

Oppenheim:

The problem is that when you implement signal processing algorithms, filters, or Fourier transforms, you have to do it with finite register length.

Goldstein:

Right.

Oppenheim:

That introduces quantization. There's a lot of work that I did with other people, including Cliff Weinstein at Lincoln and Don Johnson, that basically analyzes what the noise effects are with floating-point and fixed-point arithmetic. Then there was an idea that I came up with called block floating point. As I look back on it, it was not very exciting. There's also work on noise cancellation and speech enhancement that I've done over the years with a fellow named Udi Weinstein from Tel Aviv University, and with someone who was a former student of his and also a former student of mine here. That work has had an impact. Those things I would describe as trying to solve some particular problems. Speech enhancement is a problem, you want to solve it, and we came up with some pretty innovative ideas there.
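
Block floating point, in its general form, lets a block of samples share one exponent chosen from the largest magnitude in the block, with each sample stored as a short fixed-point mantissa. The pure-Python sketch below illustrates only that general idea; the 8-bit mantissa width, the round-to-nearest rule, and the function names are assumptions, and it is not a reconstruction of the specific scheme from Oppenheim's work.

```python
import math

def block_float_quantize(block, mantissa_bits=8):
    """Quantize real samples with one shared exponent for the whole block.

    Returns (exponent, mantissas); each mantissa is a signed integer in
    [-2**(mantissa_bits-1), 2**(mantissa_bits-1) - 1] and represents
    mantissa * 2**(exponent - (mantissa_bits - 1)).
    """
    peak = max(abs(v) for v in block)
    if peak == 0:
        return 0, [0] * len(block)
    # frexp gives peak = m * 2**e with 0.5 <= m < 1, so every |v| < 2**e
    exponent = math.frexp(peak)[1]
    scale = 2.0 ** (mantissa_bits - 1 - exponent)
    hi = 2 ** (mantissa_bits - 1) - 1
    lo = -(2 ** (mantissa_bits - 1))
    # Round to nearest, clipping the rare value that rounds up past the top
    mantissas = [max(lo, min(hi, round(v * scale))) for v in block]
    return exponent, mantissas

def block_float_restore(exponent, mantissas, mantissa_bits=8):
    """Map shared-exponent mantissas back to real values."""
    step = 2.0 ** (exponent - (mantissa_bits - 1))
    return [m * step for m in mantissas]
```

For the block [0.3, -1.7, 0.02, 1.25] with 8-bit mantissas, the shared exponent is 1 and the mantissas are [19, -109, 1, 80]. Because every sample uses the same step size, the absolute rounding error is about half a step, 2**(exponent - mantissa_bits), across the whole block (slightly more for a value clipped at the top of the range), so the small sample 0.02 comes back as 0.015625: a large relative error. That loss of precision for small samples next to a large peak is the tradeoff against giving every sample its own exponent.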

Goldstein:

When you say an impact, you mean in terms of research or actual equipment?

Oppenheim:

In terms of some patents on enhancement algorithms. I wouldn't say in terms of equipment. I wouldn't say there is a piece of equipment out there that came out of my theory.

Goldstein:

Is there any general pattern to how your research disseminates? Do you publish and then it's just out there in the world, or do you channel it somewhere more or less deliberately?

Oppenheim:

I'm going to try an immodest answer. The pattern tends to be guided by a bunch of things. One is that when I take on a doctoral student, it's often a very close collaboration. It's not that I'm just attaching my name to their work; a lot of the ideas come directly from me. I try to see that by the time the students graduate, they have an international reputation, and also that at about that time other people are starting to become aware of this exciting set of ideas, but that while they're working on the projects they don't have a lot of competition. I'm looking for paradigm shifts. The way I describe it is threads to tug on that will lead to interesting places that I can't anticipate. One of the things that I've found over the years is that there is generally a lot of interest in what topics my students are working on. In terms of disseminating what's going on, the typical situation is that we keep things at a low profile for a while. We use ICASSP as the first platform to get ideas put on the table, and then we publish in appropriate places. The issue of where to publish has become a real problem, because so many of the journals, like the Signal Processing Transactions, take so long to get something published that they're not that good a mechanism for disseminating information. I would say ICASSP is probably, for this group, the typical way of starting to disseminate information.

Goldstein:

But then Gold had a history of vocoder research at Lincoln Labs published in 1990, which is interesting. Your article here says something about the origin of signal processing at RLE.

Oppenheim:

Right.

Goldstein:

Can you say something about how your work gets absorbed in different applications, speech or seismic or radar? Are there different characteristics to the application in those different areas?

Oppenheim:

When you say "my work," you mean the stuff that we do in this group. I can think of two threads to the answer. The thing about the '60s was that if you were processing signals on a computer, you couldn't do anything in real time because it was too slow. You needed low-bandwidth problems. Speech at 4 kilohertz bandwidth has an 8 kilohertz sample rate, so you could do processing at rates that were tolerable. The notion of doing that processing on radar data was inconceivable at that time; the frequencies are so much higher. There was a natural gravitation toward low-bandwidth signals and toward signals where you were doing off-line processing. Seismic data, both because it's low bandwidth and because it's off-line, was one thing we could work with. Speech was also good, because it is low bandwidth. Images were good because they are off-line: you spent time processing an image and then seeing what the result was, as opposed to real-time video. As time has passed, that's changed dramatically. I said in my first class this term, "In my course I always try to speculate about the future. One of my standard speculations for many years was to say that someday your TV set is going to be a computer." I said, "I can't speculate about that anymore since it's happened." The notion that now the A-to-D converter is moving right to the antenna in lots of systems is amazing.

Goldstein:

How applicable was your work to these different major application sectors? Were there other applications of your work?

Oppenheim:

I would say where signal processing and a lot of what we do relates now is in communications. Modems have an enormous amount of signal processing in them. They have some coding, but a lot of what goes on is signal processing, or at least it's the same as signal processing. I would say a distinction or a boundary to think about is real time versus non-real time. Some applications are very clearly real-time applications, like communications. HDTV is another such application, as opposed to non-real-time applications, where you are willing to do some things very differently than the way you are constrained to do them in real-time applications. When one thinks about the research in this group and the kinds of algorithms that we generate, the notion of whether they relate more to communications or to speech or to video is common. But they tend to be somewhat universal. Along those lines, a point that I try to emphasize when I give talks about signal processing is that signal processing is not an application. Signal processing is a technology, just like integrated circuits are a technology. When you think of speech and radar and communications and things like that, signal processing cuts horizontally, not vertically.

Goldstein:

That makes telling the story a bit hard because it fans out.

Oppenheim:

Another thing that makes it hard to tell the story is this: when you say to somebody such as a venture capitalist that VLSI is a technology, you can hold up a chip and say, "Here's what it is." When you say signal processing is a technology and you start writing gobbledygook on the board, he can't quite understand what you mean when you say that it's a technology.

Evolution of technology in the 1960s and 1970s

Goldstein:

All right. The picture I have of the evolution of technology is this: in the mid-1970s it starts to mushroom, and you have a variety of the new techniques. Towards the end of the '60s it had stabilized. There was this toolbox of techniques, but then by the mid-'70s that had changed. Is that your own recollection?

Oppenheim:

So you're saying that by the end of the '60s it stabilized, and then it changed again?

Goldstein:

Yes, that new things had brought about an explosion of new research areas, or that the basic theoretical work was all opened up again.

Oppenheim:

After the FFT in the mid-'60s, things plateaued. Then in the '70s things opened up again, but then they plateaued again, and now they've opened up again. The latest buzzword is "wavelets." Ron Crochiere and Larry Rabiner wrote a book on the whole area of multirate signal processing in '83. I like to tell this story in my first class.

The Speak & Spell product

Oppenheim:

A significant event, believe it or not, was the invention of the Speak & Spell.

Goldstein:

I'd like to hear more about it.

Oppenheim:

The reason why it was so significant was that, prior to the Speak & Spell, you could basically think of digital signal processing as high-end. It was funded largely by the military or by high-end industry like the seismic industry, because it was expensive to do. There were algorithms like linear predictive coding, which came out of Bell Labs, done by Bishnu Atal and Manfred Schroeder, which were making a splash in the speech area. The National Security Agency was in the process of developing chips to do linear predictive coding for what's called the STU-3 or STU-2. It's their encrypted telephone.

Goldstein:

What is STU?

Oppenheim:

Speech Terminal Unit. Texas Instruments saw the possibility of building a speech synthesis chip using LPC synthesis, and in a period of six months they designed a chip and fabricated it. What drove them to do it is that they realized that while it was an expensive thing to do, if they could sell a lot of these they would amortize the cost over millions of chips. They saw an application in the Speak & Spell. This was done by Richard Wiggins at Texas Instruments. Also, there was a speaking calculator that a company out in California had just come out with that cost 500 dollars. I think the name was Telesensory Systems. The Speak & Spell came out just shortly after that talking calculator and completely killed it, because Texas Instruments could do the speech synthesis so much more cheaply. Anyway, that launched digital signal processing into the commercial arena and into public awareness. If you then take compact disks and the digital signal processing that goes on in that technology, that's what has gotten the field to where it is. Another factor was that the first DSP microprocessor that was designed was very successful. Texas Instruments decided to design a signal processing microprocessor, but they very specifically decided they were going to target an application and design the specs for the chip so that it was compatible with that application. They chose modems. That's when they came out with the Texas Instruments TMS digital signal processing chip.
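
LPC synthesis of the kind put on a chip here is, at its core, an all-pole filter driven by an excitation: typically a periodic pulse train for voiced sounds or noise for unvoiced ones. The pure-Python sketch below shows only that core recursion. The coefficient sign convention, the gain parameter, and the helper names are assumptions; the actual Speak & Spell chip's filter structure, parameter coding, and frame rates are not described here.

```python
def pulse_train(length, period):
    """Hypothetical voiced excitation: one unit pulse every `period` samples."""
    return [1.0 if n % period == 0 else 0.0 for n in range(length)]

def lpc_synthesize(coeffs, excitation, gain=1.0):
    """All-pole synthesis: y[n] = gain*e[n] + sum_k coeffs[k-1] * y[n-k].

    coeffs are predictor coefficients a_1..a_p (sign convention assumed).
    """
    out = []
    for n, e in enumerate(excitation):
        acc = gain * e
        # Feed back past outputs through the predictor coefficients
        for k, a_k in enumerate(coeffs, start=1):
            if n - k >= 0:
                acc += a_k * out[n - k]
        out.append(acc)
    return out

# Example: a one-pole "vocal tract" driven by a pulse every 4 samples
y = lpc_synthesize([0.5], pulse_train(8, 4))
print(y)  # [1.0, 0.5, 0.25, 0.125, 1.0625, 0.53125, 0.265625, 0.1328125]
```

Because only a handful of coefficients, a gain, and a pitch period need to be stored per frame, this kind of model makes speech cheap to store and synthesize, which is what made a low-cost synthesis chip plausible.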

Goldstein:

Do you know when this was?

Oppenheim:

My first guess is late '70s, early '80s, but that's just a guess. That also was dramatic, because that was of course a successful digital signal processing chip, and now you see DSP all over the place.

Commercial applications of signal processing

Goldstein:

With respect to those commercial ventures, I wonder if the gap between the high-end, cutting-edge stuff and the more common stuff is fixed. Was it only a matter of time before the common applications crossed the threshold of feasibility and started to emerge? Or do you see a closing of the gap, with the readily available applications coming closer to the high end?

Oppenheim:

My instinct is the latter. If you look at the signal processing that's going to go into HDTV, or the signal processing that's in cable modems and 56-kilobit modems, we're talking about stuff that's in the commercial arena. People are talking about what are called software radios: you put the A-to-D converter at the front end, and you can configure the radio to be anything you want it to be. It can match any standard, because it's just downloading software. Those are pretty high-end digital signal processing algorithms; the technology is now allowing you to do these things, and the marketplace is now getting broad enough.

Goldstein:

When you spoke before about signal processing as being a technology like VLSI, one thing about it is that it's influenced by the availability of those other technologies.

Oppenheim:

Absolutely.

Goldstein:

Examples I can think of include charge-coupled devices in the early '70s. Can you give me some sense of that process? Are there other examples of outside technologies that enable signal processing techniques?

Oppenheim:

I'll give you one that probably is not quite what you're expecting; the whole network issue. There is something that we're working on here which has to do with doing signal processing over networks. Imagine the following scenario. You want to do some signal processing at home for some reason. You do signal processing at home all the time. There's a lot of very sophisticated signal processing in the home. Rather than having a signal processing device sitting in your house, you take the signal, you tag it with what you want to have done to it, you send it out on the network, and the processed result comes back. Now you can imagine doing that from your home or network where some part of your signal gets processed in Belgium and some part gets processed someplace else. Or you could be talking about that happening locally, or just in a box, where the box is a network. How the processing gets done depends on what else the box is doing besides your signal. That changes the whole way in which you have to think about structuring signal processing algorithms. This is the doctoral thesis that one of my students is working on. She's working on it and I'm guiding it. My goal is that about the time she's done people will start to realize that it's an interesting idea.

Goldstein:

That's looking ahead.

Oppenheim:

Oh, you're looking back at CCDs.

Goldstein:

Yes.

Oppenheim:

What are the technologies? There is another class of devices in the category of CCDs called charge transport devices, which are like a cross between CCDs and surface acoustic wave devices. There are things like focal plane arrays that go in antennas, where in effect you're doing a lot of the front-end signal processing locally, in the focal plane, right at the antenna. Then there are the switched-capacitor devices. Bob Brodersen at Berkeley had done a bunch of stuff on those. Again, those are what they are: basically discrete-time analog technologies. CCDs, SAWs, charge transport devices, and switched-capacitor devices are all in that category.

Goldstein:

I wonder if there have ever been examples of innovation bottlenecks, where certain external technologies are needed to enable techniques that have been developed in principle in signal processing. Has progress in these two arenas proceeded at the same pace, or hand-in-hand?

Oppenheim:

It was not. I'll tell you what this triggers. For example, your VCR was technology in search of something to be done with it. That's why when you get the manual for your VCR it has got a billion functions, none of which you want, because they have this chip and they don't know how or what to do with it. The issue drives things the other way, in that people making these processors sometimes expand their capacity without knowing what that capacity could be used for. Then quite typically what will happen is that capacity gets expanded in the wrong direction. That is the reason why it's important that as new generations of DSP chips get made, they are made in collaboration with applications or algorithms so that all of that is matched together. Otherwise you end up making design decisions on word length or amount of memory or speed that are a mismatch to what you want to do. So these things don't move along in concert, and often that's the reason why some chips are more successful or less successful than others. The design tradeoffs that they've chosen turn out to be the wrong ones once the application comes to the surface.

Applications of signal processing and technological progress

Goldstein:

In terms of charting the direction of the growth of signal processing, what is the role of specific applications in that growth? In the '60s were applications responsible for the direction of the growth more or less than you see in the '70s or '80s?

Oppenheim:

I would say that one of the strengths of this field has been this interplay between the technology, the algorithms and the applications. In the '60s speech and seismic data were the key applications, and to a large extent that's continued. In the '80s the compact disk and digital audio area was a big one. Image processing has been a big one. More recently the whole HDTV or enhanced television area has been a big one. Probably one of the biggest ones now is the whole communications area, both cellular telephones and high-speed modems. I would say that is what is driving signal processing now. What has made the field so robust, I would say, is that there has been good coupling between the applications, the technology and the algorithms.

Goldstein:

Do you see a shift in the employment of the people who are doing the interesting work?

Oppenheim:

Oh, yes.

Comparison of industrial and academic research

Goldstein:

What are the bounds between industry and academic research? Does that shift?

Oppenheim:

Yes. I would say it certainly shifted. It shifted a long time ago. I would say that in academia, to a large extent and in a lot of places, they are non-solutions in search of non-problems. That is pejorative to say, but I'll say it. People are doing things that are mathematically curious, but they don't understand the potential applications well enough. But if you are going to do signal processing, you need signals to process. Even if you are not trying to solve the fundamental problem of your signal, you need to try out your ideas in the context of some signals. In academia a lot of people don't do that. It's just math. I would say that the most sophisticated signal processing that I see going on is going on in industry.

Goldstein:

Those industries you mentioned before.

Oppenheim:

Yes, communications particularly, although perhaps not in terms of coming up with new algorithms. But using things in very sophisticated ways, absolutely. I'm involved with Bose Corporation and a bunch of people there out of this group and they do very, very sophisticated signal processing. I'm involved with Lockheed Sanders, which is a Defense contractor. There again you have very sophisticated signal processing.

Goldstein:

If you describe this as a change, can you put it in a time frame?

Oppenheim:

This may be wrong, but I would tie it to the early 1980s, about the time that the Speak & Spell came out. It was also around that time that the compact disk came out. There was money to be made, and I think that motivated some of this transition. With defense contractors, there's always been interest in sophisticated signal processing. But even there, there is a difference between the research lab and the people building avionics equipment for example. There you need to be fairly conservative, because stuff has to work; it can't be necessarily right at the cutting edge. It often takes ten years to go from a defense contractor laboratory to a piece of avionics gear. I would say probably the beginning of the '80s is when a lot of this shift happened. Also the communications interest was important. When did we start getting $200 modems? Modems have taken off now because of the Internet, also. But stuff like cellular telephone and wireless communications have been cooking for the last eight to ten years.

IEEE and Signal Processing Society involvement

Goldstein:

Maybe the last thing I want to know about is, have you been involved with the IEEE Signal Processing Society very much?

Oppenheim:

What's the history of my involvement?

Goldstein:

Yes.

Oppenheim:

Going back to Arden House and the audio and electroacoustics group, I was very, very involved. I was on the digital signal processing committee for quite a few years, and I was chairman of it. There were many of us all pitching in to do the heavy lifting to get this thing going. For a period of time I was very heavily involved. Over the last ten years, in terms of the activities of the society, I have been much less involved. I think that it's better for the younger people to be on those committees to bring in fresh ideas. It's a large organization now and the publications process has become very, very cumbersome. They recognize that, but it's not clear what to do about it. I think the stuff that's published has become sterile. It needs some rejuvenation. I certainly talk to my younger colleagues about that, people who are fairly heavily involved in the Signal Processing Society, and they are interested in changing things.

Goldstein:

If you want to make a student understand how an organization can help promote the field, are there examples that you use from the earlier glory days of the Signal Processing Society?

Oppenheim:

I would say Arden House is an example. The committees themselves are an example, because when a committee brings together a bunch of people who are excited, there is a lot of cross-fertilization that happens just from the people interacting. I used to say that a lot about the DSP committee I was on. I looked forward to going to meetings not because of the business of the meetings but because of the chance to go to New York and interact with all these other people. The society, particularly in the old days, had these targeted workshops that launched things. Now there are just too many workshops, and it's hard to differentiate among them.

Goldstein:

Is the organization not being selective enough to keep things manageable?

Oppenheim:

Yes, I think some of it comes from the fact that it has gotten very big. It has been around long enough so that the novelty of it is gone. Then there is this issue that I firmly believe is what always happens to these organizations, which is that they initially get driven by people with vision who are creative and who are often stars. At that stage it's a high-risk venture. After a period of time, when it has gotten a lot of notoriety and publicity, it's a magnet for mediocre people who want to jump on the bandwagon, and that's their way of getting some visibility.

Goldstein:

Thanks very much.