Oral-History:Ben Gold

About Ben Gold

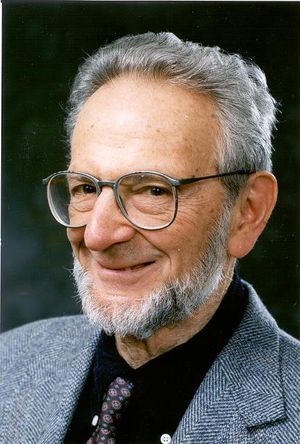

Ben Gold was born in New York in 1923. He received an electrical engineering degree in 1944 from the City College of the City University of New York, and a Ph.D. from the Brooklyn Polytechnic Institute in 1948. His first position in industry was at the Avion Instrument Corp. in New York, where he worked on the theory of radar range and angle tracking. From 1950 to 1953, he was with Hughes Aircraft Company in Culver City, California, working on statistical problems of missile guidance. He joined the staff at Lincoln Labs in 1953, working there through 1981 on the application of probability theory to communications. He also designed and implemented a device to recognize hand-sent Morse code signals and a device to measure the pitch of speech for use in voice-coding systems. He contributed to the theory and application of voice coding systems for digital data rate reduction, the development of the theory of digital signal processing, and the design and development of high-speed signal processing computers and parallel computers. His work in the 1950s on pattern recognition led to the first device that could automatically recognize hand-sent Morse code. In the late 1950s and early 1960s he developed a pitch detector for use with vocoders that became a standard algorithm. Many later pitch detectors were compared with the Gold algorithm. In the early 1960s, Dr. Gold, together with Charles Rader and Dr. Joe Levin, pioneered the emerging field of digital signal processing (DSP). They developed the concepts of digital filtering as it applied to audio problems; these concepts proved applicable to diverse fields such as radar, communications, sonar, seismology and biology. Of particular significance were developments of digital filter design methods in both the time and frequency domains, and analysis of quantization effects. He spent 1954 in Rome, Italy on a Fulbright Fellowship and served on the MIT faculty as a Visiting Professor during the 1966-67 academic year.
He is a fellow of the IEEE (1972) [Fellow award for "contributions to speech communication and digital signal processing"] and the Acoustical Society of America. His IEEE awards include the Achievement Award (1972), the Society Award (1986), and the Kilby Medal (1997). He and Charles Rader wrote one of the first textbooks in the field of Digital Signal Processing.

The interview focuses throughout on the evolution of digital signal processing as a field and on the impact of the fast Fourier transform and of Linear Predictive Coding. Gold also describes his development of a pitch detector and his contribution to the construction of the Fast Digital Processor at MIT. A major theme is the manner in which the FFT allowed theorists within the DSP community to harness computing power to test their own theories, rather than turning their conclusions over to analog hardware specialists who would then build the appropriate test machinery. He also describes the social relationships among the researchers based at MIT and the dynamics of funding and managerial oversight at Lincoln Labs.

About the Interview

BEN GOLD: An interview conducted by Andrew Goldstein, 15 March 1997

Interview #330 for the Center for the History of Electrical Engineering, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

Ben Gold, an oral history conducted in 1997 by Andrew Goldstein, IEEE History Center, Piscataway, NJ, USA.

Interview

Interview: Ben Gold

Interviewer: Andrew Goldstein

Place: Berkeley, California

Date: 15 March, 1997

Education, career overview

Goldstein:

Could you tell me something about your education and early career?

Gold:

I graduated from Brooklyn Polytechnic in 1948 with a Ph.D. in Electrical Engineering, went to work for some small company in Manhattan for two years, then moved over to Hughes Aircraft Company in Culver City, California for three years. Since 1953 I have been an MIT Lincoln Lab employee, although now I am retired. I probably have always been interested in something to do with signal processing, although I didn't always call it by that name.

The important early work in digital signal processing came in a heavy flurry of activity in the early 1960s by Charlie Rader, myself, and several other people. I worked closely with Charlie, so I'm thinking in those terms. Before that I had been doing work that probably falls under the label of artificial intelligence. In those days we called it "pattern recognition." I designed a Morse code translator, which involved some signal processing but not very much. Then I got interested in the speech area. One day in late 1959 or early 1960 I found myself at Bell Labs talking to a gentleman named John Kelly, who is now gone, and he was describing something called the Pitch Detector to me. He also talked about vocoders. I had heard of vocoders, but I hadn't been very aware of what it was all about. Kelly inspired me to look very carefully at the problem of finding the fundamental frequency of human speech.

I was at Lincoln at that time, had already built the Morse Code Translator, and I came back and started working on this pitch problem. I did it just out of curiosity. In those days at Lincoln Lab you could almost pick and choose what you wanted to work on, up to a certain point. Working on pitch detection was considered okay, but my boss wanted to turn it into something "useful."

Goldstein:

Who was that?

Gold:

That was a fellow named Paul Rosen. He said, "Well, if you have such a good pitch detector, shouldn't we build a vocoder that includes that pitch detector?" So that put me onto vocoders. Now, a vocoder, among other things, has many filters in it, and once you get into filters you are into signal processing. It was frustrating at the beginning because although the computer was capable of doing a good program for pitch detection, it really wasn't capable of simulating an entire vocoder. It was just too complicated. Nobody knew how to do filters on computers. So we were poised when we realized that, "Hey, maybe there's a way to do this." We got very excited, and we started doing a lot of work very quickly over a period of two or three years. That's where most of the work came from.

Emergence of digital signal processing; digital filtering and speech at Lincoln Labs

Goldstein:

I've been trying to track down the origins of digital filtering. I heard from someone that they had digital filters available to them in the early '50s. I'm not sure how to reconcile that statement with what you're telling me now.

Gold:

Even earlier, people used the computer to process signals in a way that could be called digital signal processing. I think the difference was that people like Kaiser, Rader, myself, and maybe a few other people saw the beginnings of what you might call a formal field of study. We produced an awful lot of stuff very quickly. We were vaguely aware of some of the seismic work, but it wasn't the same thing. Sure, they could program certain things, but they didn't have, for example, the notion of a finite impulse response filter, or an infinite impulse response filter. They certainly didn't have any notion at the time of the FFT (Fast Fourier Transform), which was really kind of a bombshell. That was what created digital signal processing as a real field, because it has so many possible applications. I still feel that the period starting with some of Kaiser's work, some of our stuff, and working its way up to the divulgence of the FFT, from '63 to '67, was when the whole thing exploded and became a real field.

Goldstein:

You said you started to drift into speech. Can you tell me about how that happened?

Gold:

Yes. I was interested in problems of this sort. I was 16 when I went to see the New York World's Fair. At the fair they had an exhibit of the Voder. It was fascinating. Here was a machine that kind of spoke. Not very well, but it spoke. I was more interested in some of the other things. There was an exhibit where if you won a lottery you could make a long distance call to anybody in the country through the Bell System, and everyone could listen in. I got a real kick out of that, but that didn't lead to anything later. The Voder, on the other hand, I always remembered.

I think what really happened was more complicated, and let me try to trace it out. As I mentioned, I came to Lincoln in 1953 and was in a communications theory group. I worked there for a year, and then I got a Fulbright Fellowship and went to Rome, Italy for a year. I came back in '55 to the same group that now had a different name. It was called pattern recognition, and was one of the first groups in artificial intelligence. The group leader was a fellow named Oliver Selfridge. He changed the subject matter of the group drastically, and that's why I worked on this Morse Translator, which was a form of pattern recognition. The group did well for a few years, but then the division heads at Lincoln Labs started to be unhappy with the group. Four people from the group were just arbitrarily transferred to Paul Rosen's group. A year later, the pattern recognition group was disassembled completely and it disappeared. We four engineers were left to work in another group. One of them left right away, but I stayed.

Goldstein:

You were one of the four who were at first transferred before the rest of it was dissolved, then?

Gold:

My interpretation is that they took the four best engineers and decided, "We don't want the other people at all." When you got transferred to another group in those days at the laboratory, the boss didn't just come over to you and say, "Well, do this." He let you drift around and maybe pick up stuff from what other people are doing in the group, and he gave you carte blanche for a year or so. I had gotten interested in work that a fellow named Jim Forgie had been doing on speech recognition in a different group. I think probably my Morse Code work made me feel that this would be an interesting area to work on.

When I came to work at Lincoln, they had big IBM computers, but they didn't have the kind of computers we're used to now or that we got used to a few years later. In other words, as an engineer for quite a while I never felt that I needed a computer. If you needed to solve a mathematical equation, the programmer would do it for you. So until about 1958, I had nothing to do with computers. The Morse Code work I did in pen and paper, and engineers built the device. I had nothing to do with the hardware.

Around 1958 I began to feel that I had to learn something about these computers. I actually went to a school run by IBM for three weeks, and I discovered it was easy. I got interested. Then I discovered that at Lincoln Labs there was a Computer Group that was pretty pioneering in the field of computer design. In particular there was a guy named Wes Clark, who was a great computer designer. Eventually I learned that they had built an enormous computer which really could do work in signal processing like speech recognition and pitch detection. Eventually I got to work with the TX-2. That really was the start of it.

Goldstein:

I still don't understand the difference between the kind of digital filtering that you started to work on, and the techniques that were already in the toolbox of engineers. You said that the seismic people were doing different things, but what was it that you needed to do that wasn't available in terms of digital filtering?

Gold:

Let's go back to the vocoder, because this is a good example. Here was one way that the work that I did operated in terms of practical use. First I started off with the notion that just because it's a nice thing to do I'd like to build a better pitch detector. From talking to Kelly, I felt that I could.

Anyway, I programmed something on the TX-2 computer that turned out to be a good pitch detector. But how to prove it was tricky, because in order to prove that you have a good pitch detector you need a vocoder. You need to excite the vocoder with the result of the pitch detector. We didn't have a vocoder, but Bell Labs had a vocoder. So we actually took a 2-track tape down to Bell Labs. One track had speech on it; the other track had the results of my pitch work recorded as pulses, which were sort of in synchronicity with the speech. That 2-track tape was fed into the Bell Labs vocoder and we could hear and record the output.

We brought the recording back and played it to our boss, Paul Rosen, and he said, "It sounds great. Let's build a vocoder." So that got us into what you might call analog signal processing. We didn't know how to build digital vocoders, so we actually built an analog vocoder and didn't do anything with the pitch detector except run programs. After a while, we were able to test our own vocoder with our program pitch detector. It was slow: to analyze two seconds of speech took the computer about two minutes, 60 to 1 real time. So here is what we had to do. We had to take the speech down to the computer, run the speech in through the computer, run the pitch detector program, record, make our 2-track recording, and bring it upstairs to where the vocoder was. It was pretty slow. So we kept saying, "Wouldn't it be nice if we could program the vocoder on the computer?" So we went back to Bell Labs and visited Jim Kaiser. There may have been other people there, but he's the one I remember. He said he knew how to build certain digital filters. That was just what we needed. We said, "My God, this is fantastic." We could actually build digital vocoders. So we started furiously looking around for literature, and I found there was a book. Have you ever heard of the Radiation Laboratory Series?

Goldstein:

Sure.

Gold:

It's in a volume published in 1947 called Theory of Servomechanisms by James, Nichols and Phillips. I had looked at that book many times, but there was one chapter that I had totally ignored. It was a chapter on sampled-data control systems. That chapter was written not by the main authors but by a gentleman named Hurewicz, who was a mathematician. He wasn't interested in our kind of digital filters at all, but he spelled out the theory in such a way that it could be used directly. It was a revelation.

Here we were in 1963, and that chapter hit me like a bombshell. I practically memorized every word. Maybe a year or two earlier, Charlie Rader had come into the group. I had shown him how to use the TX-2. He learned very fast. He and I both got very excited about it. It turned out that we were still pretty raw and didn't really know much. People brought up with doing analog work, analog filtering, found it very difficult to change their mindset to start thinking in terms of digital filters. At the time it didn't seem right. How could a filter be digital if it's analog? It didn't make any sense. But little by little we brainwashed ourselves.

There was another person I wanted to mention, a fellow who worked at Lincoln. This is kind of a sad story. This is a guy named Joe Levin, who was working on seismic detection. Lincoln at that time had a large program to monitor the Kennedy-Khrushchev Test Ban Treaty in '63. The tests were underground, and so there was a big effort in distinguishing underground explosions from earthquakes. Joe Levin was a staff member working on that subject. He was a really smart guy who knew a lot of things. Fortuitously, we told him about our visit to Bell Labs and he said, "Listen, I am teaching a course in control theory, so I know all about these things, and I'd be happy to give you guys a few lectures." So he gave us four or five lectures, and it helped a tremendous amount.

We got to the point where the three of us felt that we knew enough to write a really good paper on the subject. But then he got killed while he was driving on the Massachusetts Turnpike. So Charlie and I wrote the paper, and it was a good paper. It was one of the early papers. At that point we were in the field, and despite the complaints of our bosses, who really didn't see the big picture, we kept working on it without getting fired.

Goldstein:

Was the pressure from above ever serious?

Gold:

Well, at Lincoln things were really pretty hands-off, but we had been doing all this vocoder work, we had published it, and we had been making an impact. My boss saw that we had kind of been neglecting it. One day he met me in the hall and he said, "Why the hell aren't you guys working on vocoders?!" I mean, he yelled at me. My response was, "We will, we will." But we kept working on digital signal processing. We did a little more work on vocoders, and the two fields eventually came together.

Publications, defining the DSP field

Goldstein:

It sounds like the field was emerging, and you needed to coalesce.

Gold:

Yes. By the way, other people were involved. Jim Kaiser was definitely involved. There was a gentleman named Hank McDonald, although I don't even know if he's still living. He was Jim's boss, and he actually didn't do much research, but he was very interested in pursuing the area and encouraged Jim to work on it.

Goldstein:

At the time, did you feel any need to define the field that you were working in? It was sort of in between a few areas, it was not very well defined. Did you feel any personal need to have it defined, and if so, how did that process work?

Gold:

It worked in a strange way. Like all processes. Let me mention a few other characters. In 1966, there was a gentleman named Ken Stevens who was a speech guy on the faculty at MIT. He had gotten to know me through my vocoder work, and called me up one day to ask me if I would like to spend a year at MIT as a visiting professor. After a little bit of discussion with my bosses at Lincoln, it worked out. Charlie and I thought at the time that we had enough material to write a book on vocoders. But this DSP stuff kept coming along, and by the time I got to MIT and started teaching a course, it was basically a DSP course. So the emphasis had shifted slowly.

Another thing happened. While I was at MIT, a guy who used to be my first boss at Lincoln, Bill Davenport, asked me if I would make contact with Al Oppenheim. Al at that time was a tenure track assistant professor. He had graduated several years before. He was doing work on something that he called homomorphic filtering. Al looked me up, and we exchanged information. He told me what he was doing, I told him what I was doing.

We became friends, and at one point we went down to Bell Labs. They deserve a lot of credit, there's no doubt. We spoke to a fellow named Bruce Bogert. Now Bruce was not a DSP (digital signal processing) guy, particularly. He was interested in a lot of different things, and one of his interests again was earthquakes. He had come up with the idea of cepstrum. Now it turns out that Oppenheim's homomorphic filtering was also the idea of cepstrum. They are almost synonymous. The two of them went at it and really had a great discussion, and they both saw good stuff in what the other person had been doing. So that encouraged Oppenheim to continue work in that.

The other thing that happened was Oppenheim got very interested in what [Rader] and I were doing. And just around that time the FFT hit. And it was actually an interesting story about how it hit. I was teaching this course, and it was mainly a course on digital filtering: the Z-transform, different kinds of filters. There was a lot of stuff along the lines of applications to vocoders. I had a TA, a teaching assistant, named Tom Crystal, who was still a graduate student. Well, a lot of MIT students spend time at Bell Labs. One day, during the 1966-67 academic year, when the course was nearly finished, he brought a little document to me after class. It was by Cooley and Tukey. At that time it hadn't been published as a paper, as a journal article, but simply as an internal memo.

I can tell you my reaction. After the first few paragraphs the hair stood up on my head. I said, "This is unbelievable, and I know that this is very, very important." The rest of the paper, I couldn't understand at all. It was all mathematics, and I was just not good at that. It was quadruple sums, really complicated stuff. It was just algebra, but it was very hairy. So I asked Charlie, who is better at that than me, and Tom Stockham, to translate, because I knew it was important. They came up with some wonderful, wonderful ways of looking at it, which I think actually sparked the whole field. At that point, given Oppenheim's work, given the FFT, and given the stuff on digital filters, we said, "There's a book here," and Charlie and I sat down. I had written a fair amount of stuff already for my class, and we just sat down, we said "we're going to write a book, we're going to get Oppenheim to write a chapter, going to get Stockham to write another chapter." Charlie wrote the chapter on the FFT, and that was our book.

Goldstein:

What was Stockham's particular area of expertise?

Gold:

Stockham was an assistant professor and a good friend of Oppenheim on the faculty at MIT. His advisor was Amar Bose, and he was interested in the kind of things that made Bose rich, like the impulse response of a room. He was doing that kind of work, but he was paying attention at that time to what was going on in DSP. He conceived the idea of high-speed convolution, which was a way of filtering using FFTs. It was a breakthrough paper, and that was the main topic of his chapter in our book. By that time Charlie and I had written four or five papers on the subject, and we were deeply, deeply into it. Of course by that time the world was deeply into it. There was a lot going on.

Goldstein:

In the early 1960s or late 1950s was there any desire or interest in defining this field?

Gold:

I'd say that we were interested in defining the field. From my point of view, once I understood Hurewicz's chapter in The Theory of Servomechanisms, I felt that this was already a field. Just a few years later I actually offered to teach a DSP course. We had done quite a lot of work on quantization effects, on different kinds of filtering. Here was a whole field that we just called digital filters. The reason it became more than that was because of the FFT. We knew that there was such a thing as a discrete Fourier transform, but it seemed much too "clugy," because you need n² operations. But if you do it with n log n, it makes a whole world of difference.
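To make the operation counts in this remark concrete, the following minimal Python sketch computes the same discrete Fourier transform two ways and counts complex multiplications: a direct double loop (n² multiplications) and a recursive radix-2 FFT (on the order of n log n). The function names `dft_direct` and `fft_radix2` and the test signal are assumptions made for illustration; the interview does not describe any particular implementation, and this is not claimed to be the form in which Cooley and Tukey, or Rader and Stockham, presented the algorithm.

```python
import cmath

def dft_direct(x):
    """Direct DFT: every output bin sums over every input sample."""
    n = len(x)
    mults = 0
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(-2j * cmath.pi * k * t / n)
            mults += 1  # one complex multiplication per (k, t) pair
        out.append(acc)
    return out, mults

def fft_radix2(x):
    """Recursive radix-2 decimation-in-time FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x), 0
    even, m_even = fft_radix2(x[0::2])
    odd, m_odd = fft_radix2(x[1::2])
    out = [0j] * n
    mults = m_even + m_odd
    for k in range(n // 2):
        tw = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        mults += 1  # one twiddle multiplication per butterfly
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out, mults

# Hypothetical 64-point test signal (any power-of-two length works).
signal = [complex(i % 5, 0) for i in range(64)]
slow, slow_mults = dft_direct(signal)
fast, fast_mults = fft_radix2(signal)
max_err = max(abs(a - b) for a, b in zip(slow, fast))
print(slow_mults, fast_mults, max_err)
```

For 64 points the direct method uses 64 × 64 multiplications, while this radix-2 version uses (64/2) × log2(64); the gap widens rapidly as n grows, which is the "whole world of difference" described above.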

Fast Fourier Transform; computation and theory

Goldstein:

How did the FFT open the field up beyond digital filtering? What things became possible?

Gold:

If you look at the DFT (Discrete Fourier Transform), the FFT is simply a way of doing the DFT, but it makes looking at the DFT very interesting. With the DFT you can, for example, define a filter bank, or you can define individual filters. There are enormous connections between different kinds of digital filters and different kinds of ways of dealing with the DFT. So the whole thing becomes a unified field. Things that really weren't possible to compute were now computable. I think that was probably the most significant point. You can compute things like Hilbert transforms, filters with complex parameters rather than real parameters, and things that you just couldn't do in the old system.

For example, a fellow named Bob Lerner at Lincoln had spent an enormous amount of time and money just building an analog delay line for audio frequencies. Well, that's completely trivial on the computer. The field now not only had a theoretical basis, it had a computational basis. I think that's why it really prospered, because you could do anything. As computers got faster, it turned out that things that took an IBM computer the size of a room could be done on a chip.

Goldstein:

Did that computational capacity change the focus from theoretical work to application work?

Gold:

No, I would say that computation and theory became very strongly integrated so that you could do both at one time, and that had a tremendous effect on how the field grew. Because you could try something out and you could actually see what happened on the computer very quickly, and that gives you insights that you couldn't get just with paper and pencil.

Goldstein:

That's similar to digital filters in the beginning of the '60s where you were able to try different vocoders without having to actually build them.

Gold:

That's right, and we did. It was pretty slow compared to what we can do now, but it was fast enough that we felt it was really worth doing.

Pitch detector machine

Goldstein:

Let me step back for a second, because we moved past your pitch detector machine. Can you put the development of the pitch detector in the context of the technology that was available at the time? What did you want to do that was different than earlier pitch detectors and what tools did you have available?

Gold:

This short-lived pattern recognition group still had some very interesting notions. One of the notions that Oliver Selfridge advanced he called Pandemonium. He always had tricky names for things. He thought this was some sort of paradigm for how the brain operates. His idea was there are many, many independent modules in the brain, and they all go their own way, doing what they like to do, but somehow, in solving a pattern recognition problem, they get together and produce a good answer. What this says, in terms of engineering, is perhaps a single algorithm isn't sufficient to get a particular result. Maybe you need several algorithms which are quasi-independent.

This inspired me when I worked on pitch detection. I put together six little elementary pitch detectors. I had a method which was really nothing more than a histogram, a probability estimate of what these different pitch detectors told me about what they thought pitch was. The combination of these six elementary detectors led to a single detector which was better than any of them. It was quite good. So that was an important background for my work on pitch detection, but not particularly for the DSP. It was really for the pitch detector.
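The general idea of combining several quasi-independent estimators through a histogram can be sketched as follows. This is only an illustration of the voting principle described above, not a reconstruction of the actual pitch detector: the function name `combine_pitch_estimates`, the bin width, the tie-breaking rule, and the six sample estimates are all hypothetical.

```python
from collections import Counter

def combine_pitch_estimates(estimates_ms, bin_width_ms=0.5):
    """Histogram candidate pitch periods and return the centre of the fullest bin.

    estimates_ms: pitch-period guesses (milliseconds) from independent detectors.
    Returns (winning period in ms, number of detectors that voted for it).
    """
    bins = Counter(round(e / bin_width_ms) for e in estimates_ms)
    # Most votes wins; on a tie, prefer the shorter period (an arbitrary choice).
    best_bin, votes = max(bins.items(), key=lambda kv: (kv[1], -kv[0]))
    return best_bin * bin_width_ms, votes

# Six hypothetical elementary detectors: four roughly agree, one reports a
# doubled period and one a halved period.
estimates = [8.0, 8.1, 7.9, 16.0, 8.0, 4.1]
period, votes = combine_pitch_estimates(estimates)
print(period, votes)
```

The outliers at roughly double and half the period are outvoted, which is the sense in which the combination can be better than any single detector.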

DSP instruction

Goldstein:

Could you tell me about the class that you were teaching in DSP? Was this the first instruction they had at MIT in this area?

Gold:

This was probably the first time anywhere. It was 1966-67, and they just made an announcement. I had a fairly large class, maybe 20 people. One of them was Larry Rabiner. He was a grad student at the time, and that year while I was there Larry and I shared an office. We got to be friends. He was a very smart guy. By the time he graduated in 1967 he already knew a lot of stuff on speech and on DSP. He was the one guy who I really remember well. There are other people who took my class who have done okay, but he became a star. Al Oppenheim did not take the class; he just came and spoke with me, about the time the class was finishing. Tom Crystal sat in on it. He was a TA, and he has done fairly well. I forget where Tom is now, but I know that he was a committee chairman for IEEE, probably for the Signal Processing Committee.

Goldstein:

So the class was intended for graduate students?

Gold:

The class was definitely intended for graduate students, and I think there were only graduate students in it. This was very new stuff. It wasn't that it was terribly difficult. I think what was hard about it was the fact that your mindset had to change. The idea that you could, with a computer, do a filter, which had always been a coil and a capacitor, seemed very strange. It was very strange to me, and I think to many people.

Digital filters quantization, design, accuracy

Goldstein:

When you started working with digital filters, you had to be conscious of things like quantization effects. Were there design considerations from the analog world that you could forget about?

Gold:

Well, the basics about digital filters can be summed up as sampling and quantization. Sampling is a very basic thing, and what it tells you is that whatever filter you build, its frequency response is periodic in frequency. That's very different than an analog filter. The question becomes, "What do I do about that? How do I handle it?" The way you handle it, most of the time, is that at the very end, you build an analog filter to get rid of all those periods and save only the main period.
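The periodicity mentioned here can be checked numerically. The sketch below evaluates the frequency response of a small FIR filter at a frequency ω and again at ω + 2π (in radians per sample) and confirms the two values coincide. The three-tap coefficients and the name `freq_response` are assumptions chosen for illustration only.

```python
import cmath
import math

def freq_response(coeffs, omega):
    """Frequency response H(e^{j*omega}) = sum over k of h[k] * e^{-j*omega*k}."""
    return sum(h * cmath.exp(-1j * omega * k) for k, h in enumerate(coeffs))

h = [0.25, 0.5, 0.25]            # hypothetical three-tap low-pass filter
omega = 0.3 * math.pi            # radians per sample
base = freq_response(h, omega)
shifted = freq_response(h, omega + 2 * math.pi)
diff = abs(base - shifted)       # zero up to floating-point rounding
print(abs(base), abs(shifted), diff)
```

Because every term depends on ω only through e^{-jωk} with integer k, shifting ω by 2π changes nothing; the repeated copies of the response are what the final analog filter is there to remove.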

Accuracy is a very key question in both analog and digital filters. In the analog filter world, what people used to do first was what's called the approximation problem. That is, you find a mathematical function that does the kind of filtering you expect. That's the least of your worries in the analog domain. What you really worry about is how to build it. There have been a whole slew of volumes on techniques for building better analog filters that are less sensitive to perturbations in the parameters, because you can't build a coil that's exact. You can come pretty close digitally. But in the analog world what you try to do is develop a structure that is less sensitive to variations. So that's a whole big field, and occupied many people at Bell Labs and other places through the '20s and '30s and '40s.

Now, when you come to build a digital filter, first of all you have a sampling problem, which we mentioned, and that is not terribly difficult to get around. The quantization problem is now becoming less difficult, but it was quite a problem. You could think of quantization as just noise, and you don't want a noisy filter. The question is how much noise for how much word length. Charlie and I at the very beginning actually worked out some theoretical results. We said, "You've got to do it this way, and these are the answers, here are some numbers." But even that isn't enough, because depending on the structure of your digital filter you have more or less sensitivity to the parameters in the digital filter just as you do in the analog domain. So we had to figure out better structures. A lot of people did a lot of work on that, and that went on for maybe 10-15 years.
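The trade-off between noise and word length can be illustrated with the standard textbook model, in which rounding to a step size Δ behaves like additive noise of power Δ²/12. The sketch below is an assumption-laden illustration, not the theoretical results Gold and Rader worked out: it uses a uniformly distributed test input in [-1, 1), ignores overflow, and the names `quantize` and `measured_noise_power` are hypothetical.

```python
import random

def quantize(x, bits):
    """Round x to the nearest multiple of step = 2 / 2**bits (range [-1, 1))."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step, step

def measured_noise_power(bits, n_samples=20000, seed=0):
    """Return (measured mean squared rounding error, predicted step**2 / 12)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = 0.0
    step = 2.0 / (2 ** bits)
    for _ in range(n_samples):
        x = rng.uniform(-1.0, 1.0)
        q, step = quantize(x, bits)
        total += (q - x) ** 2
    return total / n_samples, step * step / 12.0

for bits in (8, 12, 16):
    measured, predicted = measured_noise_power(bits)
    print(bits, measured, predicted)
```

Under this model each extra bit halves the step and so quarters the noise power, roughly 6 dB per bit. As the passage notes, this is only part of the picture, since the filter structure also determines how sensitive the result is to coefficient rounding.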

Goldstein:

Can you relate the work you did on quantization issues to that done by Widrow? Were you after the same issues?

Gold:

It's my impression that Widrow did a lot of work on what later came to be called neural networks a long time before anyone else. But that had nothing to do with digital filters at all.

Goldstein:

That's true, but he did get involved with neural networks after working on adaptive filters. His Ph.D. had been on analyzing the noise from quantization.

Gold:

All I can say is that I wasn't aware of what Bernie did, and we had no connection in that sense. I may have done the very same thing that he did and I didn't know.

Research networks, collaborations

Goldstein:

You mentioned a few names that I've heard before, people who were in this community at MIT. Could you lay out for me the social relations in that community? Was everybody on the same plane as colleagues, or were there senior people?

Gold:

The people I've mentioned are Rabiner, who was a grad student, Oppenheim who was tenure track faculty, and Stockham, who was also tenure track faculty. These are the three people who were associated with MIT except for Charlie and me. We were also associated with MIT working at Lincoln Lab.

Goldstein:

I've heard Tom Stockham's name come up as an inspiration. He was described as being important intellectually and also socially.

Gold:

Tom was a good friend of Al Oppenheim. Larry Rabiner was not as close. I'm not sure if he even knew Al Oppenheim at the time, but he knew me because of the class. Charlie and I worked together, and so Charlie knew everybody that I knew. I know that Al and Tom were really good friends, because Al bought Tom's house in Lexington. So Tom, Al, and I became quite good friends. Charlie and I didn't become great friends, but we worked together for a lot of time. After a while many other people got interested. I have a very good friend, Joe Tierney, who two or three years later suddenly realized, "Hey, look what these guys did. I want to learn it too." He started really doing stuff.

Charlie, Larry and I were definitely interested in audio processing. I think Al was more of a mathematical type, and I think Tom was also interested in audio processing. But at a certain point the radar people got interested. You're talking two orders of magnitude more speed from radar compared to audio, maybe three orders, and yet the possibilities were looking so good that even radar people started fooling around with this. Eventually, a lot of DSP came out of radar.

Goldstein:

When you say the radar people, do you have anyone specific in mind?

Gold:

The only name that comes to mind is a fellow named Ed Muehe, and it was peripheral with him. I mean, he was interested in it. There was also a fellow named Bob Purdy. Purdy was a good radar guy.

Fast Digital Processor, software

Gold:

What actually happened was that in the late 1960s, I came in one day to my boss and said, "Isn't this DSP stuff great? Why don't we build a computer based on it?" It was quite a provocative statement, to build a whole computer. Anyway, it turned out we did, and it cost a lot of money. It was a big computer. From end to end, it probably covered this entire room.

Goldstein:

So it was about 25 feet square?

Gold:

It was big, yes. It was called the Fast Digital Processor, the FDP, and it was built with in-house funds. Somehow my boss felt strongly enough about it that he was able to find the money. For many years they had what they called line-item money, money that just came in through the Air Force or some agency like that, which was pretty automatic. Then there was other money that you had to apply for. So if something came along that the managers felt was good but not sponsorable, they'd use in-house money. The Fast Digital Processor was built with in-house money. It cost a lot of money, and the directors got very antsy about it towards the end. So they said, "We've got to use this for something useful. Let's use it for radar." So we all became radar people. For a few years we worked on radar, and Muehe and Purdy were people that I worked with, but it was really the same people, like Charlie and me, who were pushing radar DSP.

Goldstein:

Was there similar work going on elsewhere?

Gold:

Oh, I'm sure there was. By that time the whole world was working on it. I'm just talking about what I know at Lincoln. I know that, for example, at Bell Labs they had built a very nice piece of software that they called BLODI, which stands for Block Diagram Compiler. It was basically a macro program where you could specify DSP blocks and have the computer assemble it and give you an algorithm. Charlie actually liked that program so much that he built something called PATSY for our computer. I don't remember what it stands for anymore. It was a nice algorithm. There was that kind of work going on with us and elsewhere.

In the late 1960s, Al came to Lincoln for two years and worked on what he called the homomorphic vocoder. It was a way of using his mathematics to build really a new type of vocoder algorithm, which is now a standard. Oppenheim is one of the great guys. After those two years he went back to MIT and organized the first really intensive graduate course on DSP. From the course he wrote his book, which became as close to a best seller as a DSP book can be. But that was already into the 1970s.

Goldstein:

When you said that you suggested to your boss that you build a computer based on DSP, what do you mean by that? Based on DSP or to do DSP functions?

Gold:

So it could do very fast FFTs and very fast digital filters. The thought was about parallelism. These days of course you can build huge parallel processors, but in those days it wasn't that easy. So what I proposed was four individual processors, each running in parallel, and if you structure the computer correctly it can do digital filtering and FFTs four times as fast as if you only had one processor.

Now of course nearly anything you build, as you probably know, becomes obsolete by the time you finish building it. By the time we finished building the FDP, technology had advanced to the point where we now could do the same thing with raw speed. We actually built a succession of computers that were a lot simpler, but very fast for those days, to do signal processing. We no longer used the rather awkward structure of the FDP. It was good in its time, for a few years. We wrote a paper on it, and it was nice, but it became obsolete very quickly.

Goldstein:

Who was the boss who was interested in seeing it applied to radar?

Gold:

I would single out Jerry Dineen, who was director of Lincoln in the '70s. Paul Rosen, who had been my group leader, was an associate division head by this time, so he was my boss's boss. His boss was Walter Morrow, who is now the Lincoln Lab Director. The names I would pick were Dineen, Rosen, Morrow, and Irwin Lebow, who was sort of my direct boss. These were the people who pushed radar. One of Lincoln's big things of course is radar, computers and communication. That's what it was founded on. DSP was sort of an orphan for a while. The directors didn't see that this was anything wonderful. But later on they did. It took a few years.

Scholarly reception of digital signal processing

Goldstein:

You made a comment that people needed to change their mindset to see the digital filtering world. Was that a very serious issue with some people?

Gold:

I think it was pretty serious.

Goldstein:

Were there some people who weren't able to readjust?

Gold:

I think some people felt that, and maybe still do to some extent. At the time that Oppenheim was teaching his course, Louie Smullin, who was a microwave person, was the chairman of the EE department. Louie didn't think there was anything interesting in DSP. It was just signal processing: "We know signal processing, why get so involved?" He just didn't see it at all. Another person is Bill Siebert, who eventually integrated it into his classes. He is another professor at MIT. But at first Bill didn't think that it was that important. So, you know, things take time.

Goldstein:

I'm interested in following the progress of research of this kind into functioning systems. A lot of the people never pursued that, or never really followed it. It sounds like you were a little more involved with actual systems.

Gold:

We could build interesting systems using these ideas, and also could write interesting computer programs. Eventually the two things merged. When we first started working on this, we would write a program, it would be non-real time, and we would get results which would indicate how good or bad our algorithm was. We would polish it up, and then we would turn it over to a hardware man who could build it and make it run much faster than we could. Eventually the technology sort of merged so that the designer would simply write a program or make a chip. You know, the two became one in a sense.

Goldstein:

When did that happen?

Gold:

I'd say by the 1980s it was clear that was happening. Maybe other people more visionary could see it sooner, but I think the whole thing was really sparked by the integrated circuit revolution. Things were obviously getting faster, smaller, better, and the DSP people knew that. I think anybody could see that something that you build on a board today would be on a chip in five years. That was sort of common knowledge.

Commercial applications of DSP

Goldstein:

Were you aware that any of the work that you were doing or the work that you saw going on around you was showing up in commercial systems of any kind or in functioning hardware?

Gold:

Yes. The person who comes to mind first is a fellow named Lee Jackson. Lee worked in Bell Labs and he knew Jim Kaiser. He was a younger person, and within two or three years after we started doing our stuff he got very involved and got to be very good at it. In the late '60s he left Bell Labs and he either formed or joined a company to build digital filters. I don't know how successful it was, but he's the one who comes to mind. There may be many other examples.

Goldstein:

When you sell a digital filter, is it just software that runs on a computer that your client already owns?

Gold:

No, probably in those days it was real hardware. It was a special purpose piece of hardware that did only that, that you couldn't program or anything. It was faster and smaller.

Goldstein:

Who was using these?

Gold:

Good question. I don't know if the company went out of business or is still in existence. My guess is they didn't really sell a lot. I think chips came along and the whole thing became a different game. I never paid much attention to what happened commercially and how much money people made. My guess is that the integrated circuit revolution (it wasn't really a revolution, it was an evolution) kept getting better and better. All sorts of digital devices were being fabricated and sold in many different ways by people like Intel and other places. I think that DSP devices were just part of that game. When people had to do something, and it needed some digital filters, they put in some digital filters. There was no big deal anymore. It was just another component. You could probably program it in most cases. It fell into the whole area of integrated circuit technology.

Gold's renewed focus on speech; linear predictive coding

Gold:

Part of it was still theoretical. In fact, at a certain point I more or less crawled out of the field and just got back into speech. There were all these professors with their graduate students, and they were doing stuff that on the one hand was just too advanced for me because it had lots of math, and on the other hand was sort of a waste of time. It was just being done to get a thesis out.

Goldstein:

That's interesting. When did that start? When did you start to have that feeling?

Gold:

I'd say that by the mid-1970s I was entirely involved in speech work, building vocoders, analyzing them, getting more involved in perception, which is something I am still doing now, but not really doing theoretical work in DSP. That was really just a few years of stuff for me.

Goldstein:

I see.

Gold:

It was a few years of theoretical work, then a few years of work in which I was pretty heavily involved in computer design for DSP, and after that just drifting back into speech.

Goldstein:

Were you involved when linear predictive coding became an important issue?

Gold:

We got involved very quickly. What happened there was interesting. Remember I mentioned that we were doing radar work? One of the reasons that we were doing radar work was that the funding for speech work had dried up, and that was one of the reasons directors ordered us to do radar work, "because we can give you money for that." All of a sudden LPC came along, just another bombshell.

Goldstein:

Tell me when.

Gold:

I'd say very late 1960s, early 1970s, probably going up into the mid-seventies. In any case, we jumped into that pretty quickly. We had a fellow named Ed Hofstetter who was actually an old microwave person. He wasn't that old, but he came from microwave. He got interested in speech, and got interested in programming, something he had never done, and he got very good at it. He was also a good mathematician. When LPC came along, he was one of the first to really pick up on it and understand it. He said, "I could write a real-time program using the FDP." At that time nobody could do real-time computation on a general purpose computer to do LPC.

Goldstein:

The FDP?

Gold:

The Fast Digital Processor. It was a fast computer. He actually programmed the first real-time LPC on the FDP. So that got us back into the speech area, and we actually did quite a bit of work on LPC.

Goldstein:

Why did that get you back into the speech area?

Gold:

Well, because LPC caused the funding switch to open again, and we got money, and were able to work on stuff. Just before that there had been a flurry of work on just DSP theory, and new filter structures had been invented, and Hofstetter, among others, also programmed those on the FDP. It was very useful to have a fast computer, because the ability to do things in real time turns out to be an important issue.

Goldstein:

Can you tell me how you became aware of LPC and just re-create that sequence?

Gold:

Either Schroeder or Atal came down to MIT and gave a lecture. We attended, and it was something that seemed very odd to us, because it was a whole different way of looking at how you build a speech processor. But after a little bit of fussing and thinking about it, we realized this was very powerful, and we got into it. I'm trying to remember whether people in our group did any theoretical work on it. I know that building the first real time LPC was a nice innovative thing, but in terms of any theoretical work, I don't think the people at Lincoln contributed. I learned about LPC, but I didn't contribute to any of the theory.

Goldstein:

You said it struck you as a strange way to build a speech processor, but LPC wasn't necessarily limited to applications in speech, was it?

Gold:

No, it came out of auto-regressive analysis, which was used in the statistical domain a lot.

Goldstein:

Right.

Gold:

But it had never occurred to me that it could be used for speech. That was the nugget, the fact that somebody, either Schroeder or Atal at Bell Labs thought, "Hey, this might be a new speech processor."

Goldstein:

I see. So in their lecture they said that?

Gold:

Yes. They probably spent a year or two working quietly at Bell Labs building something, programming something, and then they were ready to announce it. When they did, everybody in the world picked it up. And they are still picking it up.

Significance of digital filtering in digital signal processing

Goldstein:

The way you've been talking up to now, it makes it sound like digital signal processing was synonymous with digital filtering. I don't want to say something like that unless I've checked it. Is that the way it was used back then?

Gold:

That's the way I thought of it. Until the FFT came along, I didn't see any particular use for these Z transforms and all the theoretical stuff aside from programming, building, and analyzing filters. Now, it's true that when you build something like a vocoder the major part of the computation is definitely filtering, but you also do other things. You do rectification, and you do low pass filtering in addition to band pass filtering. You have to do down sampling. There are several other functions that take place, but they all seem very, very trivial. We could have done those functions ten years ago on computers. They were easy, but filters were hard. So I'd say filters were the heart and soul of DSP.
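
The chain of operations Gold lists for a vocoder (band-pass filtering, rectification, low-pass filtering, down-sampling) can be sketched for a single analysis channel. This is an illustrative sketch only, not the design used at Lincoln: the two-pole resonator, the one-pole smoother, the function name `channel_envelope`, and all parameter values are assumptions chosen for brevity.

```python
import math

def channel_envelope(x, fs, f_center, r=0.98, smooth=0.99, decim=100):
    """One vocoder analysis channel: band-pass, rectify, low-pass, down-sample.

    x        : list of input samples
    fs       : sampling rate in Hz
    f_center : centre frequency of the channel in Hz
    r        : pole radius of the resonator (closer to 1 means a narrower band)
    smooth   : coefficient of the one-pole low-pass smoother
    decim    : keep one envelope sample out of every `decim`
    """
    theta = 2 * math.pi * f_center / fs
    a1, a2 = 2 * r * math.cos(theta), -r * r
    y1 = y2 = 0.0      # previous two band-pass outputs
    env = 0.0          # running envelope estimate
    out = []
    for n, xn in enumerate(x):
        y = xn + a1 * y1 + a2 * y2                  # band-pass (two-pole resonator)
        y2, y1 = y1, y
        env = smooth * env + (1 - smooth) * abs(y)  # rectify, then low-pass
        if n % decim == decim - 1:                  # down-sample the slow envelope
            out.append(env)
    return out

if __name__ == "__main__":
    fs = 8000
    tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(2000)]
    print(channel_envelope(tone, fs, 1000)[-1])   # tone inside the channel
    print(channel_envelope(tone, fs, 3000)[-1])   # same tone, distant channel
```

Almost all of the arithmetic sits in the band-pass recursion, which runs at the full sampling rate for every channel. The rectifier, smoother, and down-sampler are a few operations each, which matches Gold's remark that the filters were the hard part.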

Significance of digital signal processing to speech research

Goldstein:

You said that by the mid-seventies you had gotten out of digital signal processing and were concentrating on speech.

Gold:

Well, I got out of it in the sense that I didn't sit down and try to do new theoretical things. I paid attention to the field. I used these things all the time as a matter of course.

Goldstein:

That's what I am wondering: how somebody could be doing speech without these tools.

Gold:

Oh, no. I use them to this very day. I'm always using DSP things, I'm always thinking in terms of cepstra or Hilbert transforms, things like that, and thinking of them in a digital way. But this is all textbook stuff now.

Goldstein:

Could you tell me highlights from say the mid-seventies up to the present day of new techniques that arrived that one could use in applications such as the ones you are interested in?

Gold:

Well, LPC was new.

Goldstein:

But LPC was in the late 1960s.

Gold:

And LPC really can't even be done analog. It's by definition a digital process. What you're doing is saying, "I predict this sample on the basis of n previous samples." So it's automatically digital. LPC is certainly a big thing. There have been some nice things. I'm thinking of the different ways you could look at auto-regression, like maximum entropy ideas. There have been many things along those lines that have to do with the mathematics of linear prediction, but none of them really are in the same category as just the invention of LPC itself, or the FFT, or the fact that you could build digital filters.
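
The idea of predicting each sample from the n previous samples can be made concrete with a short sketch. The code below uses the autocorrelation method with the Levinson-Durbin recursion, which is one common textbook way to compute the predictor coefficients; it is not claimed to be what was programmed on the FDP, and the function names `autocorr` and `lpc` are hypothetical.

```python
def autocorr(x, max_lag):
    """Autocorrelation r[0..max_lag] of the sample list x."""
    return [sum(x[i] * x[i + k] for i in range(len(x) - k))
            for k in range(max_lag + 1)]

def lpc(x, order):
    """Return coefficients a[1..order] so that x[n] is approximated by
    a[1]*x[n-1] + ... + a[order]*x[n-order] (Levinson-Durbin recursion)."""
    r = autocorr(x, order)
    a = [0.0] * (order + 1)   # a[0] is unused
    err = r[0]                # prediction error energy for order 0
    for i in range(1, order + 1):
        # Reflection coefficient for stepping from order i-1 to order i.
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1 - k * k)    # error energy shrinks at each order
    return a[1:]

if __name__ == "__main__":
    # Synthetic signal obeying x[n] = 1.3*x[n-1] - 0.4*x[n-2], started by an impulse.
    x = [1.0, 1.3]
    for n in range(2, 200):
        x.append(1.3 * x[-1] - 0.4 * x[-2])
    print(lpc(x, 2))
```

Every step operates on stored past samples, which is why Gold describes the process as digital by definition.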

Goldstein:

It sounds to me like you're saying that applications have been absorbing these major pillars since the late '60s.

Gold:

What I'm saying is the reason that it's such an important area is because of integrated circuits and the ability to combine theory and computation. You can realize things and do it all, and connect it with theory very strongly.

Goldstein:

Yes, I've heard you clearly.

Gold:

That just couldn't be done until integrated circuits and things like the FFT came along.

Goldstein:

Thank you very much.