Oral-History:James W. Cooley

About James W. Cooley

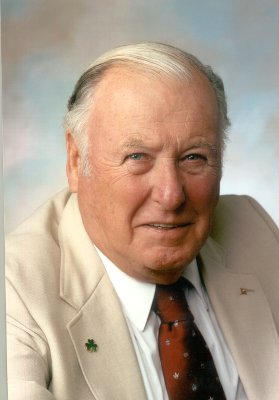

James W. Cooley was born in 1926 and grew up in New York City. After serving in the Army Air Corps, Cooley attended Manhattan College, graduating with a degree in math in 1949. He then went to Columbia University, earning his Masters in 1951 and doctorate in Applied Math in 1961. Cooley worked at the Institute for Advanced Study on the IAS Machine from 1953 to 1956, and began his career at IBM Research in 1961. He retired from IBM in 1991, and then taught at the University of Rhode University for two-and-a-half years. Cooley is a pioneer in the digital signal processing field, having developed the fast Fourier transform (FFT), which has been used in atmospheric studies and to analyze signals sent to the earth from outer space. He developed the FFT through mathematical theory and applications, and has helped make it more widely available by devising algorithms for scientific and engineering applications. Cooley was a member of IEEE's Digital Signal Processing Committee from 1965 to 1979, and helped plan the Arden House conferences on digital signal processing. He has been actively involved in other IEEE Acoustics Speech and Signals Processing Committee (ASSP), and from 1992 to 1993 he was Associate Editor for the IEEE Transactions on ASSP. His professional awards include the ASSP's Meritorious Service Award in 1980, the ASSP Society Award in 1984, the IEEE Centennial Medal in 1984, and the Jack S. Kilby Signal Processing Medal in 2002. Cooley became an IEEE Fellow in 1981 for the development of the FFT.

The interview focuses almost wholly upon Cooley's career, particularly his development of the fast Fourier transform (FFT). Cooley talks about his interest in theoretical work and how IBM Research allowed him a great amount of freedom. He explains his early interest in digital signal processing and covariance problems, and how this led to his work with FFT algorithms and his writing of the famed 1965 paper. Cooley also notes the important work of colleagues such as John Tukey and Dick Garwin in the development of his work and ideas. He discusses his work on the IEEE Digital Signal Processing Committee as well as various uses for the FFT. Cooley recalls his work to make the FFT available to increasing numbers of scientists through developing appropriate computer software. The interview closes with Cooley's thoughts on the changing interests of the Digital Signals Processing Committee and its predecessors.

Other interviews detailing the emergence of the digital signal processing field include C. Sidney Burrus Oral History, Ben Gold Oral History, Wolfgang Mecklenbräuker Oral History, Russel Mersereau Oral History, Alan Oppenheim Oral History, Lawrence Rabiner Oral History, Charles Rader Oral History, Ron Schafer Oral History, Hans Wilhelm Schuessler Oral History, and Tom Parks Oral History.

About the Interview

JAMES W. COOLEY: An Interview Conducted by Andrew Goldstein, Center for the History of Electrical Engineering, 11 March 1997

Interview #327 for the Center for the History of Electrical Engineering, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

James W. Cooley, an oral history conducted in 1997 by Andrew Goldstein, IEEE History Center, Piscataway, NJ, USA.

Interview

INTERVIEW: James W. Cooley

INTERVIEWER: Andrew Goldstein

DATE: 11 March 1997

PLACE: Yorktown Heights, New York

Background and Education

Goldstein:

Can we start with basic biographical information, like when you were born and where, and get right up to your education?

Cooley:

Sure. I was born in 1926

Goldstein:

On what day?

Cooley:

September 18, 1926. Grew up in New York City, Grover Cleveland High School, was in the Army Air Corps and then went to Manhattan College, in Riverdale, the Bronx, and Columbia University, where I got a Masters in 1951, a PhD in 1961.

Goldstein:

In math?

Cooley:

Applied math. Then I got a job. Well I worked at Columbia University as an electrician for a while, but I got a job at the Institute for Advanced Study in Princeton, New Jersey, on John von Neumann’s computer from ’53 to ’56.

Goldstein:

That was still the IAS Machine.

Cooley:

The IAS Machine, right. I was a programmer on a meteorology project, programming American weather forecasting. Then in1956 I went to New York University, the Atomic Energy Commission, what was the - Department of Electro - I forget the name of the department. It was part of the math department. In 1961 I came to research, IBM Research in Yorktown Heights. Retired from that in 1991, took a job at the University of Rhode Island. And I taught there for 2 ½ years.

Goldstein:

Let’s go back to your education now. When did you graduate from Manhattan College?

Cooley:

1949.

Goldstein:

And then you immediately started up at Columbia?

Cooley:

Columbia University, right.

Goldstein:

And what were you working on? What was your thesis?

Cooley:

Well, my Master’s thesis was Functions of Matrices, I was working in mathematics then, but then I went towards Applied Math and got the degree in Applied Math, PhD in Applied Math. I used to work with desk calculators, jobs on the side.

Goldstein:

As a computer?

Cooley:

For computing, yes, with desk calculators.

Goldstein:

What was your PhD thesis on?

Cooley:

Oh, some computational methods for studying the investigation of diatomic molecules. It was in quantum chemistry, actually, done as mathematical problems in numerical analysis.

Goldstein:

What was the work at IAS like? Was it a departure, something different?

Cooley:

Well, that was programming and solving the equations for the flow of the atmosphere. The program itself was a matter of solving a Poisson equation on each time step and then doing an extrapolation in time. Anyway, you do it with a map of the heights of pressure surfaces, distributed over a grid. Well, that was extremely laborious, took a lot of time, so there wasn’t much time for any theoretical work. It was a matter of juggling numbers in the machine. We had only 8,000 words of memory And we had to do a forecast over the whole Northern Hemisphere on three levels. So guess how we could do that? With 8,000 words of memory.

Goldstein:

The thing I am curious about from this part of your career is how you reacted to discrete math, whether you had much experience with the kind of numerical work that you do on a computer.

Cooley:

Before that, oh yes, I worked with desk calculators. Calculating various things, integrals, and I worked on the order/disorder problem. I had several jobs in the physics laboratory at Columbia University and in the math department.

Theoretical Work and IBM Research

Goldstein:

I’ve heard from several people who have done work in signal processing that the availability of computers opened up whole new opportunities for them for things like digital filtering, different techniques. Did you have any experience like that?

Cooley:

That came later. You’re talking about the Institute for Advanced Study. You spent most of your time doing silly clerical work like punching programs in binary on IBM cards. So there wasn’t much time for theoretical work. The problem with programming is it is so laborious, you took an awful lot of time in doing trivial things. You don’t think about that anymore. You program at a higher language and you don’t have to think of all those problems.

Goldstein:

So tell me how you returned back to more theoretical work.

Cooley:

By coming to research here at IBM. They had far better computational facilities here and I had the opportunity of doing anything I wanted. When they started the research division, the idea was to staff it with lots of scientists, and just let them do whatever they wanted to do, and then the company would find among all these things something that could be used in the business.

Goldstein:

Is that really the way it was? When you came here, were you able to just define the research project you wanted to work on?

Cooley:

Yes, I could do anything I wanted.

Goldstein:

What did you start working on?

Cooley:

I worked on problems of quantum chemistry, so following up on my thesis.

Goldstein:

Can you tell me how your department was organized when you first came here?

Cooley:

Well, first I was in the computing department, and then I moved up to the math department. And there I could continue what I was doing, I got into several other areas, do you want me to go on to that? We can talk about it.

Goldstein:

Sure.

Cooley:

Well, one of them, I got into neurophysiology when the department chairman, Hersch Cohen, decided that mathematical biology might be of interest. So we did simulations of nerve conduction, the Hodgkin-Huxley model, and several other models of ion diffusion. I must say though, I did get involved in quite a few different things and lots of topics that had absolutely nothing to do with IBMs business. But, as they say, this is the purpose of the research division.

Goldstein:

So that work was mathematical? You were developing the mathematical model?

Cooley:

Yes. Developing the mathematical model and numerical methods for solving the equations.

Goldstein:

Did any general purpose mathematical techniques come out of that work?

Cooley:

Well, for the nerve conduction work I was using fairly standard techniques, known methods. While we worked on some novel ways of programming, however. We published a few papers on that.

Goldstein:

What machines were you working on?

Cooley:

Well, from the beginning or here in research?

Goldstein:

I guess here in research -

Cooley:

We had a 704 at first, and then it was a succession of machines, t360 series came, and then later the 370 series, I worked on all of them. In American weather prediction I worked on the 701 and later on the 704. IBM gave time to the projects, I did that down at [the] World Trade Center, so I knew IBM, they came as a customer then, using the machines.

Tukey, Garwin and FFT

Goldstein:

Can you tell me what led you to the work on the FFT?

Cooley:

Well, that was kind of forced on me in a way. Dick Garwin was here, and I had known him before. I did do some work in the Watson Laboratory at Columbia University. He was on the staff there, but he came here with some ideas from John Tukey. They were both on President Kennedy’s Scientific Advisory Council. Dick Garwin said John Tukey was doodling while at one of these meetings, and he asked him what he was doing, and he said well he was working on an idea for doing Fourier transform much faster than people do it now, and it was essentially the idea of taking N, the large number of points, for your transform, writing it as two factors, equal of A x B, and then decomposing the large Fourier transform into sets of small Fourier transform of size A and B, A and B being the factors of N. And he said to Dick Garwin, if you continue the factorization, you can get a Fourier transform, which does N equal to a power of two, in N-log N operations, which was very, very important to Dick Garwin, because at the time they were discussing ways of limiting atomic bomb testing, and Dick Garwin had the idea of setting seismometers all around the Soviet Union, since the Soviet Union wouldn’t allow on site testing. So he thought he could do it from outside, but to do it, you would have to process all the seismic signals, and a large part of the processing was, could be done, by Fourier transforms. But the computing power at the time was not enough to process all of the signals you’d need to do this. Now, this was his incentive, but he didn’t discuss that, he came to me and he made up another problem. He didn’t want to tell me about that.

Goldstein:

So he was thinking this around the early 60s.

Cooley:

It was when I first came here, I guess that was 1963. So he described another problem, looking for periodicities in the spin orientations of Helium-3 in a crystal. I didn’t think it was very important, and I kind of put it on the back burner when I was doing something else, but he came and kept prodding. Finally they got a program done to do a three-dimensional Fourier transform, where N was the power of two in each dimension.

Goldstein:

So he presented you with a different application.

Cooley:

Yes, a completely different application.

Goldstein:

How did he lay out the idea that he had heard from Tukey? Did that come as a surprise to you? Was that totally unknown?

Cooley:

Well, it wasn’t surprising, because I had worked with John Tukey before. He was the liaison between Princeton University and the computer project and we used to have meetings in which he was consulted on meteorology. One of the problems he had that he brought over and had me program, was to do spectral analysis of wind velocities, and to do it by Fourier transforms. He was developing what became known as the Blackman-Tukey methods, and the Blackman-Tukey methods were designed to do large spectra, avoiding large Fourier transforms, deliberately. So first you do the correlation function, then you can do short Fourier transforms. So it is somewhat ironic that the first project was one which was designed to avoid large Fourier transforms.

Goldstein:

Right.

Cooley:

My second contact with John Tukey was to do a large Fourier transform. I also knew him from square dancing. He was an ardent square dancer.

Goldstein:

What remained, what work needed to be done? So Tukey has this idea about factorization.

Cooley:

Right.

Goldstein:

And so what was the problem? What still needed to be done in order to have a general method?

Cooley:

Well, it had to be programmed. And the indexing of this was not quite straightforward as simply summing up a Fourier series. One had to work out an indexing scheme. And at the time of course memory was not awfully large and we talked about large Fourier transforms, so I had to work out an indexing scheme which would allow you to overwrite the data with results. And to do that, I developed this thing which became known as the bit reversal. If you just go ahead and do it, I can overwrite data with results, but either the starting data or the final data had to be in a permuted order, a permutation defined by the bit reversal.

Goldstein:

Let’s talk more about things like that. What were some of the tricks that you had to develop in order to realize this BBFFT on the equipment that you had?

Cooley:

Well, the trick was this bid reversal, first the indexing scheme and this bit reversal. That was the novel idea to this.

Goldstein:

Yes.

Cooley:

Of course the first novel idea was to do the factorization, which you do on pencil and paper, put together a program. To get an efficient program you have to have some way of indexing.

Goldstein:

Do you know how long Tukey had had this factorization idea before anyone was able to program it or make it work?

Cooley:

Well, he must have had it at least a few years, because he gave it in his graduate courses, he gave it to the students. In fact, one very bright student, Gordon Sande, picked up the idea and programmed it almost simultaneously as I did, and he wrote a paper on it about the same time I wrote the paper which I published with John Tukey, but when he brought it to John Tukey to look it over, and asked if it should be published, they told him that my paper was already on his desk, so he withheld it. But his paper was actually a far better paper because he described how to use the idea for doing covariance calculations, which was a very important application to this. I hadn’t thought of that at all.

Goldstein:

Tell me what that adds, I’m not familiar with that work.

Cooley:

Well, there’s a basic theorem that if you have to do a covariance calculation or correlation function which is another name for it, and say the time domain, over in the frequency domain, if you transform to frequencies, this operation transforms into simple element-by-element multiplication. Now, covariance calculation takes a number of operations proportional to N-square, but in the frequency domain it takes a number of operations proportional to N, a number of data points, which is of course far smaller. Now, if you can do the Fourier transform and end log N operations, and then in the transform domain do N operations, then transform back again, you get a covariance function in a number of operations proportional to N log N instead of N-square, which makes a big difference if you have a very large N, which became important when computers became big. It wasn’t important when you had the small sequences that one handled by hand and by desk calculator.

Goldstein:

He wasn’t able to get that work in with your paper, but was he able to publish it quickly?

Cooley:

When they got involved with the IEEE DSP committee we organized a conference on FFT and invited Gordon Sande. He gave his paper there. So he did later publish his idea, he published some better versions of it, too. He added a lot more to it. He added a lot more about general factorizations of N.

Goldstein:

It seems strange to me that the factorization idea lay fallow for a few years. Had Tukey been working on programming it and wasn’t able to get it done, or had nobody really taken up the challenge of getting it?

Cooley:

As far as I know, no one programmed it except first Gordon Sande and then myself.

Writing the 1965 Paper, Patents

Goldstein:

When did you start working on the FFT paper and can you tell me something about writing that paper, the 1965 paper?

Cooley:

After I wrote the program and gave it to Dick Garwin, he went about publicizing it and they still didn’t think it was very important. We had a seminar here in the math department, as we often did. We were talking about computing and so on, and a couple of the people who were developing APL, Ken Arborson. Are you familiar with APL?

Goldstein:

Sure.

Cooley:

Well, they were giving some lectures on APL and then they said, “are there any other algorithms that we can illustrate with this?” Of course it wasn’t the programming language then, they were talking about it as a way of describing algorithms. Nobody had implemented it on a computer. So then I volunteered to give a talk on this algorithm, which I did. Then it gained some interest, they programmed in APL and did a very good job and I think one of their later programs could do the whole thing in one line of APL.

But in any case, there was a lawyer in the back of the room there named Thomas, but I can’t remember his first name. But he heard my talk and said afterward, “This has patent possibilities.” So they called a meeting with some attorneys, the patent attorneys, and they decided yes, it has patent possibilities. There are several considerations, however. One is that John Tukey isn’t an IBMer, the other is that we better get the thing - instead of patenting, put it in the public domain so we protect the right of IBM to use the idea, before someone else patents it.

Cooley:

So they suggest that I write a paper on it.

Goldstein:

Yea.

Cooley:

So I just wrote a paper, if you have seen it, it is rather short, simply describing the basics of the method and of course you could have N, any number of factors and then show you can get the smallest number of operations of N, as a power of 2 or 4, but any set of factors will do, which John pointed out right from the beginning. And the paper went back and forth from me to John Tukey several times. Finally he agreed on it and we got it published. As I said, it was only because the lawyers told us to do so. Now the method for protecting this as a patent was this. The lawyer suggested that somebody design a device, because at the time you could not publish or patent an algorithm. You have to describe a process on a device, so they suggested that Ray Miller and Wienegraad in our department design a circuit which would do the Fourier transform by this algorithm, and they told me to put a footnote in the paper saying they did it. That would put the whole thing in the public domain and prevent anyone from patenting it.

Goldstein:

So they did an analog circuit that realized the FFT?

Cooley:

It was digital because it is a digital method. Well, it turned out to be a very good idea, because many years later, someone did get a patent, a fellow named Ron Bracewell, when the Hartley Transform came along, which has a lot in common with the FFT, a basic idea of doing the factoring is there, and he described his algorithm and of course most of the description was simply the FFT. Would you like me to go on with another part of the story about the patent?

Goldstein:

Sure, sure.

Cooley:

Well, this was much later, it must have been around ’87, I worked on a software package [for] an engineering and scientific subroutine library, for the new vector machine which was coming out. The day before the machine was to be announced, the lawyers called up and said, “Hey, you might be infringing on somebody’s patent.” The subroutine package, which was a very important part of the vector machine to have this library available. We had a very quick meeting and we went over there and they asked us what we had done before and what this has to do with the patent. Now, Ramesh Agarwal and I who had - we worked on this and in fact Ramesh did all of the programming for the FFT. We described what the original FFT and what we had done and how we had put it in the public domain and in fact one of the lawyers, Frank Tezerdian, was the lawyer who actually suggested doing the publication of the FFT idea.

Goldstein:

You mean originally or - ?

Cooley:

Originally, yes.

Goldstein:

Oh, I thought you said that was someone named Thomas.

Cooley:

No, he was the fellow who said that was patentable and called this meeting. He had since passed away.

Goldstein:

I see.

Cooley:

But Frank was on that committee too, and Frank was the one who suggested the strategy for putting it into the public domain. Ramesh and I had already read the patent, somebody had sent it to us, and we were well-prepared and we were able to show the lawyers and of course since Frank Tezerdian was familiar with it, we were able to respond to the lawyers who were setting up and issuing the software package. So this saved the day. We just probably would have held up the release of the vector machine, which was a pretty strategic thing for IBM.

Goldstein:

Let’s go back into the 60s. What was the gap between when you wrote your program and when you published the paper?

Cooley:

That went very fast, actually. I sent it to Math of Computation, and actually I knew the editor, because he was in the New York University. I forgot the name of the department. In any case, for some reason, he got the paper thing published very fast. It was published in April of ’65. I think I only sent it to him about the beginning of the year of ’65.

Goldstein:

I see.

Cooley:

I worked on the program, that was started in late ’63 or so. I am just guessing now, I may be off. There was a delay before doing this, because as I said, I wasn’t going to publish it at first.

Goldstein:

I’m interested in that, when you said that you didn’t think it was very important. In the end it turned out to be very important, for all sorts of applications, and I am wondering about what it was at that time that made that destiny hard to see.

Cooley:

Well, I guess most of my career was with machines that had very small capacity and which you wouldn’t have a very big N, and this is the thing which became important when N became big. The other is I wasn’t in digital signal processing, I wasn’t familiar with the problems that people were doing with these things.

Dick Garwin

Goldstein:

Was there any liaison between those two worlds, people who knew what you were doing, who could see how it was applicable to signal processing problems?

Cooley:

The one very strong liaison was Dick Garwin, so he understood both worlds and he did a lot to publicize the algorithm.

Goldstein:

I actually don’t know much about him, can you tell me what his position was here and, what his responsibilities were?

Cooley:

Well, first he was in the IBM Watson Laboratory at Columbia University that was set up by IBM and was part of the astronomy department. They sent a bunch of scientists to work finding out how to use modern computers, that is, punch card machines for doing scientific problems. He is a brilliant theoretical physicist, and numerical analyst, a lot of the field. Then they set up this laboratory. When they built this they brought him up here. So he worked here and there.

Goldstein:

Do you know what his position was here?

Cooley:

Well, he was a follow of IBM and he was on his own, he could do anything he wanted to do.

Goldstein:

So he wasn’t really built into the management hierarchy?

Cooley:

No, [he] wasn’t, but he was very important.

Goldstein:

And so he, because he had this exposure to Columbia and a variety of other things -

Cooley:

Yes, he had exposure to the whole world of scientific calculations, he was kind of on the leading edge. As I said, he was on President Kennedy’s Scientific Advisory Council.

Goldstein:

So did you and he talk about how the FFT might be useful in other applications?

Cooley:

Later we did. He didn’t tell me in the beginning. We just wanted to get the program done.

Goldstein:

When did you start to talk about that?

Cooley:

Well, after he publicized it and I started getting requests for copies of the program. And then of course talking to these lawyers and they are saying, “Well, we should patent it, it was a patentable idea,” and then I started getting calls and invitations from people.

Rader and Stockham, Oil Exploration

I guess the people who really convinced me that it was important were the ones up at MIT, namely Charlie Rader and Tom Stockham. They were doing some very early work on the digital signal processing of voice and music. Tom was doing processing of - one example he gave was old Caruso records. Are you familiar with this?

Goldstein:

Yes, I heard about that.

Cooley:

So he called me and told me that this was an extremely important breakthrough, he had been doing this for quite a time on big computers, but it was of no practical use, because computers weren’t big enough to handle the computational task, but this cut the job down an enormous amount. It was getting to where it might be feasible. So he was very, very excited about it. Then I invited him down here and Charlie Rader too, and he gave a talk on how he was using this, and then he demonstrated with his Caruso records. Then Dick Garwin made an effort to publicize it. One of the things he suggested was that we run a seminar in IBM and teach other IBMers the idea, bringing people from the field who were in digital signal processing. Many of those were in the oil exploration or they dealt with the oil exploration industries.

Goldstein:

So IBM was a contractor to oil exploration companies?

Cooley:

Well, they sold the machines to them and helped them with the software for doing oil exploration and they do a lot of convolution calculations and [Unintelligible] spectrum calculations.

First Arden House

So they formed a little group here, with Pete Lewis, Pete Welsh, and myself, and had us put together a set of notes for this course, which we did very fast. Now, they were statisticians, so they knew a lot more about the applications than I did, how to do this, and so this little booklet we put out as a research report for the seminar was quite successful and then later led to a set of papers that we published.

Goldstein:

So the booklet is sort of like an internal technical memorandum?

Cooley:

Yes, it was internal and the seminar was internal to give IBM an advantage. Then the next thing that Dick Garwin did was to suggest that we have a workshop, too. Which we arranged after I got on the DSP Committee, I was invited to that because of the FFT. Rich Cannell was the chairman of the committee at the time, and Charlie Rader was on it. I don’t know if Tom Stockham was on it at the time, I think he came on it. Manny Parson was there, they were doing some work on standard score. [Unintelligible] burst estimations. So this committee then decided to run a seminar or workshop and invite everybody who is interested in the FFT. So to do that, Bill Lang suggested getting the Arden House, which was the Harriman mansion over in Harriman Park, which was then owned, and it still owned, by Columbia University. They thought it would be just about the right size, they could accommodate 106 people and that would be all the people who were interested in FFTs, and we had it and it was a very successful meeting. Now, that was one of the dates I said I can’t quite remember. I think it was ’67.

Goldstein:

I think Arden House was maybe ’66.

Cooley:

Oh, ’66 perhaps. So we had the meeting and Dick Garwin came to it, too, and then he described the seismic problems and his reasons for being interested in this. So all the prominent people who were interested in this came. One other significant thing about it is that the people were from many, many different disciplines. Two of them were heart surgeons. Let’s see, a couple of others gave papers on all sorts of other applications. Some were numerical analysts and computer people who described other generalizations of the indexing schemes.

Goldstein:

Yes.

Cooley:

Marshall Pease for example, who gave some papers which led to a long series of variations on FFTs for various type architectures. Let’s see, Dick Singleton of the Stanford Research Institute put out one of the first papers and probably one of the very best for quite a while on the mixed rate FFT where he could take many different factors of N. He had the paper on the call of the killer whale. So that was a very interesting meeting and the notes and papers from this meeting were disseminated all over. They were very important in spreading the word about the FFT.

Writing the Program, IBM Publicizing

Goldstein:

The picture I am getting now is that you contributed programming expertise. The problem that you were working on was a small N problem, it didn’t have a vast amount of data.

Cooley:

What one? You mean the problem Dick Garwin gave me before this?

Goldstein:

Right.

Cooley:

I can’t remember the size of it, but it was an FFT on a cube. I think there was something like maybe eight or 16 points in each direction, so it wasn’t a very big problem.

Goldstein:

Is there anything to say about how you came up with those ideas?

Cooley:

No, it’s hard to say how I came up with that idea. I know I struggled for quite a while, trying to write the program so I could overwrite data. I guess one of the reasons I put a lot of effort into it, well, first of all, we were short of memory. I was thinking if N might be big, I was writing a general program. The other is I did think something like we did with the numerical weather forecasting, because of the severe limitation of memory, we always kept writing it so we could overwrite - keep overwriting the data with results. So of course the other method, the analogy didn’t go any further. But it was kind of a challenging problem. I got quite interested in what I saw, first to me it was simply just a programming job where I didn’t think it was very significant, but then when I got interested in this idea of the indexing, then I started putting more effort into it.

Goldstein:

Dick Garwin promoted it both externally, got a few other people interested, and then also internally.

Cooley:

He had lots of contacts outside and people doing seismic work and physics. Everywhere. He was a prolific writer and speaker.

Goldstein:

It sounded like on the one hand you are saying that they - that IBM was interested in keeping it proprietary but then on the other hand -

Cooley:

No, they wanted to publicize it, it was the opposite. They wanted to publicize it and protect the right of IBM to use it.

Goldstein:

I see.

Cooley:

Originally IBM did a lot of work on algorithms and programs and disseminated them because IBM felt that the whole future was in the machines, and the more you get people using the machines the more machines you’ll sell. They had no idea of selling software. That was out of the question. Software was free at that time. People wrote programs, if they thought anybody could use them, they gave them out free. This is the way IBM, well, most of us, thought the world would be. We’ve seen differently now

Other Interested Groups, Similar Ideas

Goldstein:

You said that Dick had mentioned it to the people at Lincoln Labs working on speech research, but then some other people, too. You started getting requests for papers. Do you know some of the other people who he had presented this to?

Cooley:

People he had presented it - yes, astronomers, he knew a lot of astronomers, they were using it in astronomy, one of the big applications they had there was before they had spectroscopy, they could use the FFT for calculating the spectrum of a diatomic molecule on another planet. Do you want to go into that? I don’t know if you do.

Goldstein:

Yes.

Cooley:

Well, not to digress, but for continuity I think the next question you should ask is was this done before?

Goldstein:

Okay.

Cooley:

That was an interesting story. I think you were leading to it. I think I could tell from your questions. The question now, when we are talking about patenting the idea. They asked, where the idea came from, was it ever done before, and I said, “As far as I know it wasn’t done before,” and I asked John Tukey and he said he saw ideas which were somewhat similar, and he referred me to some papers by [Frank] Yates, a statistician. If you remember, John’s field was statistics, and Yates had a factoring algorithm that was for doing end factorial experiments and the experiment was on the uses of fertilizer, if you have different fertilizers you are using and if you want to use them in all possible combinations to the power N, each one is either used or not, so it is a binary thing. He developed this indexing scheme, which was something like FFT except that in that one, this thing literally became an N-dimensional problem, and he didn’t have what we call this twiddle factor in-between iterations done, I think I am getting beyond the scope here with details.

But anyway, he referred me to a completely different class of algorithms, although algorithms by I.J. Good who had done a lot of work, very important work, but he did it in coding theory during the war, which therefore was all secret and it wasn’t known, but he did some work too, which was reminiscent of this algorithm or was suggestive of it. But it wasn’t the same. His was algorithms where you were actually doing an N-dimensional transform which was fairly simple. That was simply iterating on the different dimensions. Factoring N into the factors and making it look like an N-dimensional transform was somewhat similar except that in between you would have an extra complex multiplication called a twiddle factor multiplication. So he didn’t quite lead me to it, but then I was led to some work by [Cornelius] Lanczos in 1943.

He was a Hungarian and he was a refugee and went to live in Dublin. He was a numerical analyst and he did a lot of work on Fourier transforms. But he had the idea and published it in the Journal of the Franklin Institute in 1943. I’m trying to remember now if John told me that or who told me that. Oh no, excuse me, John Tukey did not tell me that. I got a letter shortly after the publication from Phil Rudnick of the Loyola Institute in California saying he used the idea and he got it from Lanczos’ paper. And I looked it up and there it was, Lanczos had the idea, doing the factorization. But Lanczos wrote several books on doing Fourier transforms and he devoted only hardly more than a footnote to this particular factoring idea. I met him. I was invited to Yale to give the talk on the FFT by the astronomers there who were very excited about it, and Lanczos was in the audience. I didn’t know that, so I felt funny afterwards telling about how I was describing this thing which he had already done. I met him and I asked him why he didn’t publicize it more, and give it more prominence, he said, “Well, at the time, N was not very big.” So using this algorithm had hardly any more advantage than using a lot of other very clever algorithms that he was doing, which I read later, and I saw his work and I saw that for the sizes of N he had many other tricks that he could use, which gave a lot more. So this was just one trick in his bag of tricks that he was using.

Big N

Goldstein:

Well, that’s the interesting thing about your problem, because you were working on a problem where N wasn’t very big, and so it is interesting to think that this procedure that you developed, which was very effective for big Ns, is something that you developed in this application where it is not necessarily so crucial.

Cooley:

Yes.

Goldstein:

I would imagine that in order to believe that the project was important, you’d have to be thinking in terms of big N.

Cooley:

Yes, and Dick Garwin did think of big N, he was thinking of this huge problem of large spectrum calculations of data from seismographs all around the Soviet Union and he was thinking of the problems in astronomy, for spectroscopy in all these other fields.

Goldstein:

But you were still focused on the hydrogen problem.

Cooley:

Yes. Well, I don’t know if I was focused on it, because as soon as I finished, I just went off doing other things, completely different things.

Goldstein:

Mm-hmm.

Rader and FFT

It is often said that FFT didn’t make a big impact in engineering until Charlie Rader gave an engineering interpretation of it.

Cooley:

Yes, yes.

Goldstein:

Can you tell me something about how that came to be how it was that Rader’s paper made it more accessible to engineers?

Cooley:

Well, he made it easier to understand. When I wrote the algorithms up, I wrote them algebraically. I just wrote lots of formulas down, and I understand later many engineers had difficulty understanding this. Now, mathematicians understood it right away, in fact many of them said, “Well, that idea has been used somewhere or could be used there.” It was a thing that people had been doing in group theory, factoring of groups. It was not to do computing, but just to understand the group theory. Well, Charlie and Tom Stockham and I don’t know which or who, or if they did it together, they talked together a lot, but they worked out ways of drawing system flow graphs.

When they drew system flow graphs, and described these flow graphs and flow of information, engineers understood it right away. It was very accessible. Of course you might understand and say, well, “Why did engineers have to understand everything about it?” You just nowadays you just call a sub-routine that you can download from the internet that does FFT and all you have to know is what goes in and what goes out, but at that time programs weren’t available. An engineer wanted to use it, he had to program it. So this enabled them to not only understand how to program it, they understood what it did, and of course it made them understand some of the differences between doing this digitally and doing it by analog, which Charlie, Stockham and these people were able to make clear and show how the aliasing ideas which people could understand in analog, how that goes over into the numerical thing, that caused a lot of confusion with engineers at the beginning, just what was aliasing in the numerical calculation. And they were able to make this pretty clear to them.

FFT and Numerical Analysis

Goldstein:

Were you interested in following this popularization? Had you passed off work on this at that point or were you still involved?

Cooley:

Well, no, I didn’t do anything after writing the program. I thought I was off the hook, but of course when I found out how important it was, and from the seminar working with Pete Lewis and Pete Welsh, I understood the applications and how to apply it and that there were lots of other numerical problems in the application and started working on other aspects of this and how to use it for doing convolution, and covariance calculations.

Goldstein:

Could you tell me about how you got interested in convolution and covariance problems?

Cooley:

Yes, it was about ways of applying the FFT to problems in numerical analysis.

Goldstein:

For example?

Cooley:

I wasn't working on any specific application project, but I worked on the algorithms and methods for using the fast Fourier transform (FFT) to do convolution calculations. I also worked on factorizations of the FFT and some refinements of the bit reversal and the mapping algorithms. Lots of people were working on FFTs back then, but I worked on getting better programs. But after a while, with so many people working on programs and the best ones getting wide circulation, I felt there was little point in going further. But the FFT work got me invited to nice places and a few awards—there's nothing like that to stimulate one's interest. I got invited to the DSP committee, got into IEEE work, conferences, and traveled and met a lot of people.

Digital Signal Processing Community, 1960s

Goldstein:

Were you very familiar with the applications of the math in signal processing? How well connected to engineers, such as in the DSP committee, were you?

Cooley:

I wasn't connected at all until I got involved in the fast Fourier transform. An important part of my education was that people always thought I was in digital signal processing and knew a lot about it, but I wasn't. So in the beginning, when programs weren't available, people would call asking for programs, and I got to talk to them. I learned from them while they thought that they were learning from me. It was a good way to get started in digital signal processing.

Goldstein:

So you came to signal processing from square one in the mid-'60s. How did you see the field? Where was it going?

Cooley:

I had very little appreciation of the field until I got involved with the DSP committee and saw things Tom Stockham was doing, and also Charlie Rader, and Larry Rabiner in Bell Labs—if I start naming names I know I'm going to slight some important people. I got to meet most of the important people in digital signal processing at the time. From the DSP committee and the conferences, I became aware of most of the good work going on. Now they are all way ahead of me, I haven't stayed in much contact with this in recent years.

FFT in Mathematics and Engineering

Goldstein:

It sounds almost like two separate worlds: mathematical and engineering. Did the FFT develop independently in these two worlds, or was there a lot of communication? For example, the engineer Charlie Rader translated a mathematical algorithm so that it was accessible to engineers. Did that algorithm take on a life and development of its own once it entered the engineering world, or did the innovation continue to come from the math side?

Cooley:

Well, there are several kinds of innovations. There were lots of innovations in engineering because engineers had great incentives: the computers were getting big and people were building special-purpose computers. In the mathematical world, Ephraim Feig, whom you just met, and his professor Louis Auslander did a lot of work in pure mathematics, i.e. group theory. They looked back into some theorems in group theory that had the FFT structure. From this they developed important new applications in new fields, such as crystallography. In crystallography, you have to describe crystals by their group properties—the groups of transformations which leave them invariant. One of the big problems in crystallography is the computation of the Fourier transform. It's a large three-dimensional Fourier transform of data from a crystal—a calculation which would be really huge. But you can use the symmetries, so they developed some very good Fourier transforms that used the symmetry of the data of crystals. So I took the group theory methods from the mathematical side and combined them with numerical methods, and developed new and better Fourier transform algorithms for data with symmetry.

Goldstein:

You say engineers had great incentive to work on it, what were some of the advances that came from that side?

Cooley:

- Audio File

- MP3 Audio

(327 - cooley - clip 1.mp3)

They are so numerous they could fill many books. One big field was Fourier spectrometry. I really got to appreciate that shortly after the FFT came out. I had visitors from France who flew all the way over here to find out about the FFT. One was Janine Connes, who was head of the computing lab for the University of Paris. Her husband was Pierre Connes, a very important astrophysicist who was studying the atmospheric composition of the planets. His method was to pass infrared through a spectrometer (like the old Michelson-Morley experiment). This splits the sample apart, brings the light beams together, and gives an interference pattern. As you remember from school, you can determine the frequency by counting the peaks in the reinforcement pattern.

The important thing here is that the pattern can get very complicated; it's more than just counting the peaks and their separation. So you do its Fourier transform. This is actually a correlation function, so you use the Fourier transform in the same way as for the co-variance in statistics. You do the Fourier transform of the interferogram to get the spectrum of the reflected light from the planet. That spectrum will have a set of peaks characteristic of each diatomic molecule—each corresponding to a certain vibrational frequency in the electromagnetic spectrum.

I gave Janine Connes some programs and described the methods. They went back and reprogrammed their calculations and produced a huge book with the spectra of all of the planets. It's now in a little display case upstairs. They invited me to a conference in Vienna on Fourier spectroscopy. They had a lot of talks about its applications and a big manufacturer's display of machines that analyzed emission spectra. With the machines, you took a specimen of something—the user could be a physicist, a policeman, textile processor, anything at all—put the specimen in the machine and it produced an emission spectrum. It calculated the spectrum, compared it with templates of spectra for various materials, and then determined the composition of the specimen.

For several days they had people from many different careers talking about the FFT and looking at these machines. The conference celebrated three things: the experiment of Michelson-Morley, the interferometer, and the discovery that you could use the FFT on the interference spectrum. All of these machines had an FFT built into them to analyze the interference pattern. There were machines bigger than an automobile, and some like a desktop copying machine—small enough to carry in a Volkswagen. I was really impressed—it was a big field covering many, many applications.

Gauss used the method, and also Herman Goldstine (although I didn't know it then); he was the first Chairman of the Department of Mathematical Sciences here, and he was also Director of the Mathematics project at the Institute for Advanced Study. Goldstine was the man who hired me for my first electronic computer job. Anyway, he was writing a book on the history, and he found a book by Gauss. It was written in classical Latin, so there weren't too many readers, but he told me about it. I couldn't read it myself, but I could see the formulas; he had this idea of factoring the Fourier series, and he was using it (but of course, N wasn’t very big there, either.) So we wrote another history paper, and finally Heidemann, a student of Don Johnson at Rice University, wrote a book in which he described Gauss and the early FFT. He managed to get a translation of Gauss' paper. The idea was there, but it wasn't that useful because since N wasn't big. However, he did manage to find ways of using it. I might mention Sidney Burrows too; he did a lot of very important work on FFT algorithms.

Digital Signal Processing Committee, Arden House Conferences

Goldstein:

I'm interested in your work on the Digital Signal Processing Committee. What actions did the committee take while you were a part of it, and what was your role in them?

Cooley:

The first big action was the Arden House conference I mentioned, which brought together a lot of people interested in the FFT and to explain what they were doing. They talked about the program, what was needed, and so on. It was a great stimulus to the field—a lot of good papers came out of it. There was another conference two years later, I think we called it FFT and Digital Filtering. Then there were a few more Arden House conferences on digital signal processing, and the people at these conferences decided to have an international conference, which became ICASSP. The first one was in 1976 in Philadelphia, and many of the papers were on digital signal processing, FFTs, digital filtering and so on.

Goldstein:

What was the goal of the workshop—to be sure that everybody understood FFT and what it could do for them?

Cooley:

No, the people who were invited had already been programming or using the FFT. It was so they could bring in their ideas and explain to each other how they were using it. We got the names of people to invite from the list of people who had been calling and asking for programs and article reprints and so on. I had quite a few names, and so did everybody on the committee. The important people were there—the MIT people, Tom Stockham, Charlie Rader, the Bell Labs people, Bridge Kaenel, Larry Rabiner, and of course Ron Schafer.

FFT Applications and Computers

Goldstein:

Was the interest more in the mathematics of FFT or the applications?

Cooley:

I think everyone there was involved in important applications. They were interested in seeing how they could get programs to do these various tasks. Charlie Rader was into both the algorithm programming and the applications.

Goldstein:

Did you personally get involved in any of the applications?

Cooley:

I can't say I did.

Goldstein:

That brings us back to the issue of whether development was stimulated by the math side or applications. It sounds like you're saying from both.

Cooley:

Yes. Well, it just came naturally because people needed the results. Of course, when computers became larger you could do more DSP applications. For example, when Tom Stockham worked on the Caruso records, he used a big machine. I think it was a DEC mainframe machine. I said to him, "Would it be practical in the future to do Fourier transforms of real-time speech, and music?" He said, no, it wasn't practical. It may not have been practical then, but later he went on and founded Soundstream, one of the first digital signal processing companies. Now they are doing FFTs, filtering, and transforming speech and music in real time.

Goldstein:

This issue of practicality is an important one. When people were working on these algorithms, were there different tiers of work—one for the most advanced super-computing facilities available and others for widely-available computers? I'm wondering if some people worked on algorithms for more common, lower-level computers, while others developed higher-level algorithms that took advantage of the capacity of big machines

Cooley:

Higher level meaning very large Fourier transforms?

Goldstein:

Yes.

Cooley:

The largest Fourier transforms I have heard of are in Stanford, California, which are used to study signals from outer space for signs of intelligent life. They sample a very, very wide spectrum, and if they want the details of a signal, they need very, very large FFTs. They have written some papers (I can't remember the names of them) on how they do factorizations of N. When N gets too large for the computer, they have to break the data into blocks.

The largest I had heard of before that was used by the astronomers in Paris who were doing infrared plotting. Again, they were looking at extremely wide spectra, yet had to have very fine detail to identify the positions of the peaks in the spectrum of the radiation from diatomic molecules.

Goldstein:

Here's the situation I had in mind—help me with it if this is not the best way to look at it: Say it's 1968 and you are working on FFT algorithms. You know that some computers have 40-bit words or so of memory, but there are very few of these machines and time on them is expensive. But there are some smaller computers, like D-8s, that are more available. Did you have different people working on different algorithms for these two levels of computing power?

Cooley:

Well, there are variations on the algorithm which use this idea of breaking a big Fourier transform into smaller blocks which could be processed separately on a computer. If you are able to compute Fourier transforms of only size A or size B, you break them into blocks. A blocks of size B and B blocks of size A.

Goldstein:

Were people at all levels of computing power able to process the signals they needed to, or was FFT really only available to the more powerful computers?

Cooley:

It was available to smaller computers too, by breaking the data into blocks. You could keep all your data on tapes. The smaller computer would process a block of data, put it on a tape, then bring in another block. There were some papers on that, including one in the Arden House conference. A man named Singleton designed it with two tapes containing all the data. The data would come in on one tape, then get processed by blocks and put on the other tape.

Some of these block-processing ideas were used later with cache memories. In fact, in our engineering scientific sub-routine library, a lot of the sub-routines we wrote had to make efficient use of the cache, again by breaking it into blocks that fit in the cache.

Goldstein:

Was breaking it into blocks a fairly straightforward problem, or was it tricky and did it require real insight?

Cooley:

It got very tricky. There were lots of papers written on all the tricks that could be used. Image processing has become important too, because images have a huge amount of data. The question was whether it could be done on a small computer, and it could. It would work very efficiently even on a desk calculator, if you could organize the algorithm. And Lanczos did it.

Goldstein:

So it sounds like an economics problem. If somebody's building a signal processing system, they decide how much computing power they can afford, and then how much pre-processing will be required.

Cooley:

Or you can choose hierarchical storage. You can have several levels by using high-speed memory and the disks for cache. You schedule the algorithm to use these several levels of storage, but operate at optimum speed in the high-speed memory.

Goldstein:

Over time, the availability of computing power has increased dramatically. Under Moore's Law, computers become cheaper and more powerful. So far as you are aware, has that hardware development influenced the direction of the FFT algorithms?

Cooley:

Definitely. It gave some incentive for designing these nice scheduling algorithms for breaking the FFT into blocks and scheduling the blocks of calculation. Whole papers have been written just on the scheduling of data through hierarchical storage.

Digital Signal Processing Committee, 1970s-1980s

Goldstein:

How long were you involved with the Digital Signal Processing Committee?

Cooley:

I tapered off after the New York ICASSP conference in 1988. I was registration chairman for that, and also for ICASSP 1977 at Hartford. I went to the Toronto meeting after that, and then I started working at the University of Rhode Island.

Goldstein:

That's all the way into the '80s. Had the interests of the committee changed during the '70s and during the '80s?

Cooley:

Oh, yes. Before I got on the committee, it was writing standards for the measurement of the spectra of bursts. Then after the FFT came along, the committee was devoted almost entirely to FFT algorithms and applications. Then came digital filtering, digital speech processing, and it broadened out into the whole field of digital signal processing. In fact, it wasn't even called the DSP committee in the beginning, it was Audio and Electroacoustics.

Goldstein:

And it became Audio, Speech and Signal Processing.

Cooley:

Yes, that shows the change.

Goldstein:

I have a picture of the rush of activities in the 1960s, but it is less clear for the '70s. It wasn't called the DSP then, but later on, do you know what they were promoting and what were the most exciting areas of research in the '70s?

Cooley:

I think it was broadening out into very general areas. Speech recognition was important—people were writing very nice filtering algorithms.

One committee project was to compile a set of the best programs in digital signal processing and put them through a certain set of standards. They were all in FORTRAN, and there was a program that tested to see if they conformed to a set of basic rules. They were put through the machine at Bell Labs and then all the copies were put onto a tape available to anybody. All the best programs were put in one thick book, including digital filtering, FFTs, and filter design programs.

Later on, they decided to do it again, but it was much harder to pick the best programs because there were so many more available. So the second one became very big and it became practically impossible to get everything in.

Goldstein:

What impact did the availability of these algorithm books have?

Cooley:

I haven't heard how many people ordered it, but I'm sure they sold lots. It was extremely valuable to have. Labs anywhere could have the best programs written by the top people in the field.

Goldstein:

Was there any feedback from the audience?

Cooley:

I'm sure there was lots of feedback, but the people who were doing the book were, with their co-workers, among the chief users. They worked for places where these things were used, so feedback could come directly from them.

After that, a lot of the committee work was running these ICASSP conferences every year. That was quite a lot of work, and it dominated.

Goldstein:

Did the committee try to give direction to the field through the selection of papers or the programming of the sessions at the ICASSP?

Cooley:

I don't think they tried to give direction; I think they tried to follow what was important and what people were interested in.

Career Highlights

Goldstein:

We've been talking mainly about the FFT because that's what you are best known for. Is there other work of yours that is important to talk about?

Cooley:

No, I don't think I really did anything else that was very important. Well, let's see, I did a lot of work on nerve simulation, and some on solving the equations of quantum chemistry, also the solving of Schroedinger's equations, and some articles. I did some work on diffusion calculations with people in the lab who had various research problems—diffusion of contaminants through insulators was one. I also did some quantum mechanical calculations for lasers. What else? Towards the end of my career at IBM, I got in the group which did the engineering scientific sub-routine library for the IBM vector machine, and then later for the RISC machine, the RS-6000. Then I left to take a teaching job and go into semi-retirement.

Goldstein:

All right, that wraps it up.

Cooley:

Good, I hope it's of interest.