Oral-History:Marvin White

About Marvin White

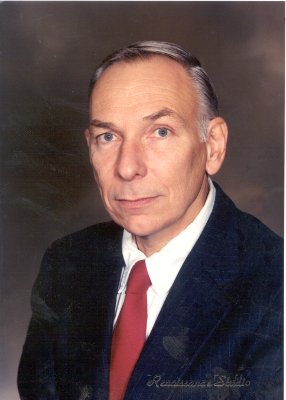

White was born in 1937 in the Bronx. He graduated from the University of Michigan with a BS in engineering physics, got a Master’s in Physics from the same institution, then went to work for Westinghouse. While there he did research into electron beam scanning, solid state imaging, complementary MOS (CMOS) transistors, and MNOS transistors, which can be used as nonvolatile semiconductor memory and which eventually evolved into SONOS, semiconductor flash memory and storage memory, and smart cards. He was also in charge of the newsletter of the Electron Devices Society for some years. In 1981 he went to Lehigh University as a professor and set up a microelectronics program there, specializing in VLSI.

About the Interview

MARVIN WHITE: An Interview Conducted by David Morton, Ph.D., IEEE History Center, 28 June 1999

Interview #400 for the IEEE History Center, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

Marvin White, an oral history conducted in 1999 by David Morton, IEEE History Center, Piscataway, NJ, USA.

Interview

Interview: Marvin White

Interviewer: David Morton, Ph.D.

Date: June 28, 1999

Place: Lehigh, Pennsylvania

Childhood, family, and educational background

Morton:

Please tell me a little about where and when you were born and if you had any childhood experiences that led you towards this career.

White:

I was born in the Bronx, New York, in September of 1937. I don’t remember much about my early years, but my mother, my father, and I stayed in the Bronx for a while. We moved around to different locations at that time. My mother, Margaret (Peg), was working, but my father, Frank, had part-time jobs. It was the time when the country was coming out of the Depression. My father was born in 1892 in Saint John, New Brunswick, so he was too old for the military, but he went into the military police. He had a job at the Ford plant in Chester, Pennsylvania, and looked after the Jeeps that were being shipped overseas at that point. We were living in a small town around the Chester area called Green Ridge. I was going to a small country school there that had every grade from first grade up to the sixth or seventh grade in one classroom, with a little stove in the middle of the room. We would all sit in rows according to our grade level. I have distinct memories of that time in my life.

Growing up, I was always interested in electronics and listening to the radio, fooling around with radios, trying to make small radios, and wrapping wire around toilet paper rollers to make coils. I had a lot of interest in electronics even as a boy. When World War II ended, my father’s job ended and we moved up to Michigan, to a city called Dearborn. I started going to school there around the fourth grade. The school I was going to at that time (Miller Elementary) was located in a building that I would later attend for junior college; we called it the Henry Ford Community College. Later I finished up at Dearborn High School. All through high school I was very much interested in science and mathematics. I had really decided that I wanted to go into engineering and a science career because I really enjoyed my high school classes, especially chemistry and physics. I loved mathematics, particularly geometry and algebra, and I was very much interested in pursuing a career that involved all of those. I was an outgoing young fellow, so I wasn’t closed off from other areas of study, but I just really liked to understand what was happening in the various sciences.

I had no role models. There was nobody in my family that I can remember who was a scientist or an engineer. My mother, who was born in New York City, always wanted me to be a dentist. She thought it was a good profession. I think it’s because I saw dentists so often, since I had braces when I was young, and so she thought it would be good for me to do that. Later she thought it would be good for me to be a chemical engineer; she heard that that was pretty good. I think that she was the driving force for me to go to college. I was very interested in engineering. She wanted me to go to college because she had worked for Sears Roebuck in New York and her boss had gone to college. She knew that it was very important to get a college education. My father kept telling me that one person in his family, his brother Marvin (whom I was named after), had gone to college (two years) and had done very well in life. I had a lot of push from my parents. Neither of my parents finished high school. My mother was an orphan and my father came from Canada. They met in New York. The push to go to college really came from my parents. I was very much interested, as I said, in science and math in high school.

I got involved in the Civil Air Patrol, which was something very new in high school. Later I went on to the Air Force ROTC program. I loved outdoor activities and played a lot of sports in high school; I pitched and played outfield in baseball. I was always a very active fellow, and I think being physically active actually helped me a lot to study. When I got done with sports, I would feel really good about sitting down and studying. I think that really helped a lot.

At that time we didn’t have a lot of money at all. In fact, I don’t know how we would classify ourselves. My father was a plant protection man at Ford, my mother was a secretary, and I stayed home, looked after my brother, and took my mother to work because she didn’t drive a car. As a New Yorker she took the subways and never learned to drive. She was the first to buy me a piano and the first to buy me a car, because she had made some money. She really was, I think, a driving force for me.

I got out of high school in 1955 and I didn’t have a great deal of money so I went to the Henry Ford Community College (HFCC), because I could live at home at that time. In the summer, I worked in a small company called Chicago Latrobe, a division of National Twist Drill and Tool. I had taken typing in high school so I could pass the entrance test, which was to type better than 40 words per minute with less than two errors, which I could do. So I got a job. When I told them I was leaving to go to college at HFCC they were of course disappointed, because they hoped I would stay.

So I went to junior college at HFCC. There, I think the thing that really set me on my path was that I had an outstanding set of mathematics teachers. The physics teacher was also really wonderful. He was a graduate of the University of Michigan. For the first time my eyes were opened to how wonderful experimental science was, because he would bring a television in, take it apart, and show us how it worked. We would go down to the basement and he would take a rifle out. I can’t imagine doing this at a university today. One of the students would hold a block up and he’d shoot a bullet into the block, and we tried to measure how far the block would swing as a result of the bullet. There was hay behind it to prevent the bullet from going too far into the walls. It was just really interesting. We would go out and crawl under a car and he’d show us the different parts of a car. I really got an impression of experimental work from this teacher. His name was Karl Place. He had a Master’s degree from the University of Michigan. We did laboratory experiments. Most of them didn’t work, but it was very interesting to explain why they didn’t work.

Looking back at high school, I think one of the things that really interested me was physics. Again, I had wonderful chemistry (Dick Welch) and physics (Allen Storr) teachers, who gave problems that were very challenging. The first thing Mr. Storr did was to ask us to measure the length of a desk. We were to take a yardstick, record what we found, and not mention our results to anyone. At the end we would all go to the board and mark down what we measured. There were 20 or 30 people in the class and I remember the board was filled with different numbers. There were a lot of gasps from people in the class because I think they expected the desk to have a definite length. I guess none of us really understood that the observer, the person doing the experiment together with the measuring apparatus, introduced error, and that there would be a mean and a variation in this measurement. It was very interesting to me to see how the experimenter interacted with the experiment itself. Later, as I went on into physics and into quantum mechanics, I learned there were uncertainties involved and that the observer does participate in this process.

University of Michigan engineering physics studies

White:

I knew at the end of two years in Junior College that I was going to pursue the combination of physics and engineering. I had received a scholarship from HFCC at that point to the University of Michigan. So in 1957 I went to the University of Michigan and I entered into the engineering physics program, which was a relatively new program there. It was an attempt to put engineering and physics courses together. I really enjoyed the program because I could take electives in math and I could take electrical engineering and I could combine all the areas that I really liked. I worked in the summertime. I had different jobs. I worked at television repair. I repaired air conditioners. I worked in a job shop using a lathe and shaper. I had all kinds of jobs going through college. As a boy growing up I had an amazing number of different jobs. I set pins in a bowling alley, worked as a soda jerk, sold garbage disposal units door to door, delivered papers, worked as a busboy in a restaurant, and worked as a stock boy in a dime store and grocery store. I had a lot of different jobs and I think the experience helped me a lot.

Morton:

That’s tough.

White:

It was an interesting time for all of us. I even did shoe shine work as a small boy. At about 10 years of age I made a shoeshine box. I went out and went around to the bars and shined shoes and started out charging a dime and worked up to a quarter a shine. I did men’s and women’s shoes. I think all of the experience really helped me a lot, helped me understand the work ethic and how difficult it was to earn money. I always felt that when you went to work you went to work, put a good day in, and you didn’t loaf around on the job. I felt strongly about working hard and so did my parents.

So when I went to Michigan I decided I’d live in a Co-op house. The Co-op houses at Michigan rose out of the depths of the Great Depression in the early 1930’s; a Co-op is a place where you cook, clean floors, and put in about four or five hours of work a week. At the time, would you believe it cost $125 a semester to go to school in 1957? In addition, room and board in the Co-op was about the same, $125. As a young person working in the summertime you could make enough money to put yourself through college. You can’t do that anymore. I don’t think a young person can make the kind of money today in the summer to go through college. But you could earn enough money when I was going to college. You could get a job and pay your way through school, so I did that. I didn’t have to take or borrow any money from my parents. So that was very good.

The Co-op was a wonderful experience. There were people coming from all parts of the world and you had to work with different types of people—Chinese, Greeks, Turks. I learned how to play soccer there. I’d never played soccer before. Coming from where I did you didn’t play soccer, but I learned to play soccer with the Greek team and we went around and had a lot of fun. So the co-op experience was very good. I did various jobs there. I was president of the house and I was steward - the person who puts the meals together. That’s a thankless job at times. But I learned a lot at the Co-op on how to deal with people and how to manage things and how to deal with budgets.

I also participated in intramural activities. I loved track. I still do a lot of running now. I liked touch football. We had a lot of touch football teams. And you wouldn’t think it was touch after a while. I enjoyed a lot of sports. We had a great softball team. So it was just a lot of fun living in the Co-op, and I really enjoyed my experience at the University of Michigan.

Graduate studies at the University of Michigan; semiconductors and transistors

White:

I decided to go to graduate school at the University of Michigan, but I wasn’t quite sure what I wanted to do. I had received degrees in engineering physics and engineering math, and I was about six hours short of getting one in electrical engineering. I lacked the AC and DC machinery courses, which had labs associated with them. In graduate school I got a Master’s in Physics, and I was doing some work on the bubble chamber at that time. Professor Donald Glaser, who was later to move to U.C. Berkeley, won the Nobel Prize for his work on the bubble chamber. Another graduate student and I were examining different decay tracks of high-energy particles (pion-muon) in bubble chamber experiments.

I knew, however, that this really was not the type of work I wanted to pursue in my career. I was really more interested in electronics. I had taken a course on semiconductors and transistors at the University from Professor Donald Mason in the Materials Department. I had heard about transistors, of course, but I didn’t know much about their physical electronics or how they operated. This course was really an eye opener. It showed me that you could use mathematics and physics to explain the way devices worked. Up to that time I was really taking courses mainly in vacuum tubes, and after seeing how semiconductor devices worked, I knew this was the area that I really wanted to pursue.

Morton:

This was around 1960?

White:

1960, right.

Morton:

Do you remember how long that course had been in place? Obviously the semiconductor courses were probably new in the curriculum.

White:

Yes, they were absolutely new. I would say a few years old. It’s surprising how long it took for courses on transistors to make it into the curriculum. But I’d heard about this course and I said that I wanted to learn more about these devices. This course was really very good. I always took notes in school. I took good notes, and I went home and rewrote them neatly in my book and added things to them. I still have those books. If I had any advice to give young people, I would tell them to take good notes. I had good teachers going through Michigan: good math teachers, renowned math teachers who wrote textbooks, and excellent physics teachers. I really think my undergraduate career at the university was very good because I was exposed to the very best teachers in the classroom. The one thing I can tell you, though, which is different today: as a student I never felt that I could really ask a question in a classroom. I felt the teachers were so far above me. I didn’t even go up after class to ask a question. I always tried to figure it out myself. But today’s students will just ask questions.

Morton:

Times have changed.

White:

You know, I’m like that now, too. I will pop up and ask questions at meetings. Times really change. Just as an aside, there was a professor here named Walter Dahlke, who has since passed away, and he was helpful in bringing me to Lehigh. He came from Germany, where the system was structured around the Herr Professor, and he was used to non-aggressive students. He told me that the first time a student came to visit him in his office at Lehigh, the student sat down, crossed his legs, and sort of put one shoe on his desk. He said he was really appalled at this behavior. However, after a while, he began to see that the American student was really liberated to a large degree and didn’t have this feeling of fright. I still see a little bit of that fright today in students coming from overseas, from China and so on; they’re still a little bit frightened initially of really interacting with the teachers. I was too. As a young student I felt that I really had to learn it on my own.

Employment at Westinghouse and continued graduate studies

White:

After I received my Master’s Degree in Physics in June 1961 at the University of Michigan, I really decided at the time that I didn’t want to pursue high energy physics and I really wanted to look more into solid state studies. I didn’t really know where to go at that point in time, so I decided to leave the University. While I was going through school I was in the Air Force ROTC program. But when it came time to make a decision to continue and go on and become an officer, I decided not to do so. I just decided that maybe I would just take my chances, and no one could guess that Vietnam would be starting up in the early ‘60s.

I took a job at Westinghouse in the summer of 1961. I had decided to join the Westinghouse Graduate Student Program and postpone any graduate studies in favor of working in industry. At the time, most of the electrical engineers joining the company would work in Westinghouse-Baltimore at the Space and Defense Center. All of the engineers who worked in the Defense Center were given deferments, as they were working on space and military programs.

Morton:

Not to put too fine a point on it, a lot of the people who went to work for the big companies came out of MIT and Stanford. Did you ever encounter snobbery or any kind of exclusion from the “club” because you came from the University of Michigan?

White:

No, not really. I’ll tell you though, if I had had a Ph.D., then I probably wouldn’t have gone to Baltimore to work. I probably would have landed in Pittsburgh, which is where the Westinghouse Research Laboratories were located. Perhaps at the Research Laboratories this would have been an issue. I know in various laboratories, even to this day, managers are closely associated with universities in the Northeast or on the West Coast, and they tend to hire people from their own schools. So, this does exist. In the Baltimore area most of the people had Bachelor’s degrees. They were engineers, right out of school, and a few had their Master’s and very, very few, had Ph.D. degrees.

So it was really a wonderful time, because you were not going into a place to bury yourself in research. You knew you were going to go in and work on a team. I joined the Westinghouse Graduate Student Program, which allowed you to go from one location to the next, take assignments from four to six weeks, and then see if you could locate a job. I thought when I joined the company that I had a job, but, in fact, I really didn’t have a job. I had a position in the graduate student program. I was expected to find a job and that wasn’t so easy. There were over 450 of us and jobs weren’t very easy to find. So there were a lot of people that would go through six or seven of these kinds of four to six week assignments, wouldn’t find a job, and they would be asked to leave the program. This was sort of an eye opener to all of us. We would go down and have a beer at a sort of rathskeller in Baltimore and we’d see if anyone had found a job. We were having a good time, and we were all single guys and gals and we were going through the program together. There weren’t too many gals; mostly guys. That’s a big change in the work force. When I was going through there were mostly guys working in the company. Today it’s much different.

Solid State Laboratory

White:

As I was moving through the graduate student program in 1961, I had various assignments in Pittsburgh, I had some in Baltimore, and one assignment was very interesting. I took an assignment in the Solid State Laboratory. I really enjoyed working there because the people were doing very interesting work. The area of work was called molecular electronics. Today the same term is being used again. However, at the time, molecular electronics, as defined by Professor von Hippel of MIT, was electronics composed of spins, fields, waves, and charges. Von Hippel envisioned these four entities as the basic building blocks for fabricating very unique devices and circuits, unlike traditional types of circuits involving resistors, capacitors, and transistors.

So we had a program at Westinghouse to work on molecular electronics. It was an Air Force sponsored program. I really enjoyed working on an assignment in the Solid State Laboratory. I was working on a different project, however, which involved putting semiconductor p-n junctions in vacuum tubes and scanning them with an electron beam. The U.S. Army sponsored this work. The Army was interested in research and advanced development for the far-infrared region and night vision. I was working with another person on this program and I thought I did a good job on my assignment.

At the end of my assignment I was told there just weren’t any more openings in the Solid State Laboratory, so I took another assignment in Pittsburgh. After the assignment in Pittsburgh, I was told there wouldn’t be anything available there and so I started looking again for assignments in the Baltimore area. I heard from a friend there was a group in Baltimore called the Applied Physics Group and they were working on Schroedinger’s equation and artificial potential wells.

Well, I had had quantum courses at Michigan, and I thought this would be a great assignment.

I wanted an assignment in this area, and I thought I was going to Baltimore to accept one. So I arrived at the Baltimore Defense Center and went into a room where I was supposed to meet my supervisor for the assignment. Well, lo and behold, in the same room was my supervisor from the first assignment.

Also, there was the manager of the Applied Physics Group, and he said, “Well Marvin, I know you want an assignment in our group. But the previous group you were with, the Solid State Laboratory, says you did a good job, and they’d like to have you come back and continue on for a second assignment.”

Actually, I was very interested to take a second assignment. However, the manager of the Solid State Laboratory said, “You know, I have to tell you there are no openings in this group. So you have to tell me what you would like to do.” I really was naive and I said, “Well I like the work. I think I’ll take a second assignment in your group.” Because you know, I always thought if you did a good job something would happen that was good, and so I went back and I did my assignment. Even near the end of the assignment I knew that there weren’t going to be any jobs.

However, two people who were working in the Solid State Laboratory decided to leave and go to the Raleigh-Durham area in North Carolina, because there was a rumor that a Research Triangle Institute would be established in that area. Actually, it didn’t take place until many years later. But because these two people left, a position opened up, and I was offered the position to stay in the Solid State Laboratory. This opening, in the early ’60s, really started my career. I started working in the laboratory and continued on with the Army-sponsored program that I had worked on during my two assignments.

It came time to obtain a renewal on the Army-sponsored program, and I made a presentation to the customer. The important thing in industry is to get your renewal, and I got a renewal. I was in charge of the program because the other people who had been involved had left for North Carolina. Years later, I spoke with the person who gave me my renewal. He had retired from the Army. We were at a meeting and he said laughingly, “You know, when I gave you that renewal, I really didn’t understand what you were working on. But you were so enthusiastic I figured I had to sponsor a person who had that kind of enthusiasm.” I always had enthusiasm about my work. I felt really strongly about doing a good job and presenting it to the customer or at a conference. Of course, we didn’t have the visual aids that we have today.

I also got involved in teaching very early in Baltimore, because I wanted to learn. One thing I tell young people when they join industry is to find a mentor. When you go someplace and get a job, make sure that you can learn something. But also find somebody you can work with and learn from, and I found this mentor, Vasil Uzunoglu. He was writing a textbook for McGraw Hill in the area of transistors, and I knew almost nothing about how to design transistor circuits. I knew a little about vacuum tube circuits. Vasil had experience from Bell Laboratories and had previously owned a company in Turkey. He was also Greek, and his written English needed a little correction. I could help and type the chapters, and I learned from him. I would ask questions about how a circuit worked and I would try to figure it out. In the process I really learned quite a bit helping him put his book together. We’ve been friends ever since, for over 40 years now. The association with a mentor has been an important part of my life.

Interactions of education and work; teaching at Westinghouse School

White:

When Vasil left the company, I took over and taught the courses he had been teaching in the Westinghouse School of Applied Science and Engineering. Teaching helped me a lot in my career; it helped me think about why things really work the way they do and how to answer questions. The students who took courses in the night school at Westinghouse were not interested in credit. They wanted to learn about a subject. They received a degree after taking so many courses, but they would ask questions that were very practical and very focused. So it made me concentrate on the practical aspects of circuit design and device operation. I also found out that the engineers who did astonishing things in terms of design, and who were highly regarded, didn’t necessarily have a Ph.D. degree. They were people who learned on their own. So continual learning is very important. I mean, you can have a Ph.D., and if you don’t continue to learn I don’t think you’re going to make progress or make a contribution to your field.

I observed engineers with Bachelor’s and Master’s degrees who would continually learn, going to meetings and conferences. I was also exposed to the team atmosphere of working. I really enjoyed being a part of a team: having an assignment, then coming back the next week and reporting on what you did. I think if you had a Ph.D. degree, then in those days you were expected to some extent to be more isolated and to work on individual efforts. Perhaps that’s why there weren’t many Ph.D.s in the Baltimore area. If there were, they were generally in management positions. The team people were really people with Bachelor’s and Master’s degrees. Eventually this changed, and today there is more of an influx of Ph.D.s.

Ph.D. studies at Ohio State University; semiconductors and vacuum tubes research

White:

Speaking of Ph.D.s, in 1965 I married Sophia Waring from Charleston, South Carolina, who was working in the Baltimore area at a small company called Hydronautics in Laurel, Maryland. I took a leave of absence from Westinghouse in 1966 and went to graduate school at The Ohio State University in Columbus, Ohio, to pursue a Ph.D. in Electrical Engineering. While we were in Columbus our son David was born in 1967. I returned to Westinghouse in 1968 and worked on my dissertation, on the characterization of microwave transistors, under Professor Marlin Thurston at The Ohio State University. I received my Ph.D. in 1969.

As you can see from my resume, I started working in the area of semiconductors and vacuum tubes.

Solid State Circuits Conference and International Electron Devices Meeting

White:

I started going to technical meetings, such as the International Solid State Circuits Conference, which in the early 1960’s was held in Philadelphia. I also went to the International Electron Devices Meeting (IEDM). The IEDM was held down at the Sheraton Hotel in Washington, D.C.

Morton:

Do you get any sense of how long that lasted, that there was this Electron Devices meeting at this point and particular hotel?

White:

Well, in the early ’60s it was at the Sheraton. One reason it changed (I’m not sure if this is the actual reason) was a failure to reserve the date for the meeting. The IEDM missed the booking for a particular set of dates, and Washington is a very popular area. So the IEDM moved from the Sheraton and relocated to the Hilton Hotel, probably in the ’70s. So the meeting has been going for several decades.

Morton:

So they never went back.

White:

No, the IEDM didn’t go back again, and the Hilton also offered larger rooms with easier movement between rooms. At the Sheraton you were really walking from one small room to another through narrow aisles. I mean, it was ornate. It was like an old Victorian hotel. It was very beautiful, but it wasn’t really set up for larger meetings. As we grew in size, I think the Hiltons, the Marriotts, these hotels started to offer more in terms of services.

Electron beam scanning research; p-n junctions, diodes, and MOS transistor

Morton:

I’d like to hear some more about the research, particularly the events leading to the Ebers Award. I know you mentioned that you had started work pretty early at Westinghouse on this research.

White:

Electron beam scanning, yes.

Morton:

Was there some other work that you know about going on in that field? It seems like I’ve heard of something similar to that.

White:

I’ll try to explain this a little to you, technically. Camera tubes were traditionally made by depositing a photoconductive film on a ‘face-plate’, and the electron beam would scan the photoconductive film. The beam has a certain area to it; the beam diameter is, for example, 10 microns. As the beam scans the surface of the film, it deposits charge on the surface. You can view the film under the beam as having a certain resistance, and in parallel with the resistance is a capacitance. The capacitance is the dielectric constant (permittivity) of the film divided by the thickness of the film, multiplied by the area of the beam. The resistance the beam encounters is the resistivity of the film times its thickness divided by the area of the beam. So, if you multiply the resistance times the capacitance, the area and the thickness cancel, and it turns out to be the product of the resistivity and the dielectric constant of the material, which are fundamental material parameters. You need this product, the RC time constant, to be at least on the order of the frame time of the scan. In other words, if the beam leaves a particular spot on the film and returns a thirtieth of a second later, you need to make sure the charge the beam put on the film will stay on the surface. This should be the situation provided the film is not exposed to incident radiation in the form of an image.
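The cancellation described above can be checked numerically. This is a sketch for illustration only, under assumptions not stated in the interview: the beam spot is taken to be circular with the 10-micron diameter mentioned above, “dielectric constant” is read as the permittivity ε = ε_r ε_0, and the film thickness, relative permittivity, and dark resistivity are hypothetical values. The helper `film_rc` (a name introduced here) computes R = ρd/A and C = εA/d for the patch under the beam, confirms that RC reduces to ρε whatever the spot size and thickness, and compares it with a 1/30-second frame time.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m


def film_rc(resistivity, eps_r, thickness, beam_diameter):
    """Return (R, C, tau) for the patch of film under the beam (SI units).

    Assumes a circular beam spot; the patch is modeled as a leaky
    parallel-plate capacitor whose plate separation is the film thickness.
    """
    area = math.pi * (beam_diameter / 2) ** 2   # beam spot area, m^2
    r = resistivity * thickness / area          # R = rho * d / A
    c = eps_r * EPS0 * area / thickness         # C = eps * A / d
    return r, c, r * c                          # tau = R * C = rho * eps


FRAME_TIME = 1 / 30  # seconds before the beam returns to the same spot

# Hypothetical dark-film parameters, for illustration only
RHO = 1e10      # dark resistivity, ohm*m
EPS_R = 10.0    # relative permittivity
r, c, tau = film_rc(RHO, EPS_R, thickness=5e-6, beam_diameter=10e-6)
print(f"R = {r:.3g} ohm, C = {c:.3g} F, RC = {tau:.3g} s")
print("charge survives one frame:", tau >= FRAME_TIME)

# Geometry cancels: a thicker film and a wider spot give the same RC
_, _, tau_other = film_rc(RHO, EPS_R, thickness=20e-6, beam_diameter=30e-6)
print("same RC:", math.isclose(tau, tau_other))
print("RC equals rho * eps:", math.isclose(tau, RHO * EPS_R * EPS0))
```

Read the other way, the same relation gives the minimum dark resistivity a film needs to hold its charge for one frame: ρ ≥ T_frame / ε.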

When the film is exposed to radiation, the film resistance is lowered because the radiation induces excess free carriers in the material. The resistance is altered and, thus, you reduce the RC time constant. In a sense, you discharge the film surface. So, when the beam returns to the same point again, it will supply the electrons necessary to recharge the film surface to the potential of the cathode. When you measure that recharging current in a given area under the beam, you are measuring the amount of irradiance (i.e. photons) that has fallen on that particular area during all the time the beam has been away. So, in the absence of the electron beam, this little area on the surface of the film has been collecting and integrating all of the photons of light coming in during that time, because these photons excite electrons within the photoconductive film. I was greatly impressed by this process of signal integration.

I was first impressed by integration in college, when I encountered it in calculus: the idea of summing up infinitesimal quantities. I thought it was an amazing breakthrough that Newton would see this and develop a calculus on the basis of infinitesimals. Later, I saw integration in terms of how it improved the signal-to-noise performance of electronic systems. The signal, represented by light in terms of photons, would create hole-electron pairs within the semiconductor film. The signal would continuously discharge the previously mentioned capacitance. The result would be storage or integration of the signal information while the electron beam was traversing the rest of the photoconductive film. When the beam returned, it would sense how much of the charge was removed by recharging the film surface to cathode potential. So you had signal integration. If we have various independent sources of noise, such as leakage current or so-called ‘dark current’ and fluctuations in the arriving signal photons, then the process of integration acts to sum the squares of the root-mean-square (RMS) values of the various components.
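
The rule that independent noise components add as the squares of their RMS values can be illustrated with a small Python sketch. The two RMS values are hypothetical, and Gaussian random draws stand in for the actual photon and dark-current fluctuations.

```python
import math
import random
import statistics

def total_rms(*components):
    """RMS of a sum of independent, zero-mean noise sources: add the squares, take the root."""
    return math.sqrt(sum(c * c for c in components))

# Hypothetical RMS values, in electrons, for photon fluctuations and dark-current noise
photon_rms, dark_rms = 100.0, 30.0
print(round(total_rms(photon_rms, dark_rms), 1))   # 104.4, not 100 + 30 = 130

# Monte Carlo check: draw the two sources independently and measure the spread of their sum
rng = random.Random(0)
sums = [rng.gauss(0.0, photon_rms) + rng.gauss(0.0, dark_rms) for _ in range(50_000)]
print(round(statistics.pstdev(sums), 1))
```

The simulated spread lands close to the quadrature sum, not the arithmetic sum, because uncorrelated fluctuations partly cancel.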

Morton:

What’s the source of noise?

White:

Well, the ultimate source of noise would be the fluctuations in the arriving photons from the image you are trying to observe. Suppose you had N photons arriving in a time interval of a thirtieth of a second. If they were distributed with Poisson (or Gaussian for large numbers) statistics, then the square root of N would be the number of ‘noise’ photons. Thus, the ‘mean’ number of photons, N, represents the signal photons, and the square root of the ‘variance’, which is also N for Poisson statistics, represents the noise. So let me give you an example: suppose there were N = 10,000 arriving signal photons. The square root of 10,000 would be 100, the number of noise photons. So if you take 10,000 and divide it by 100, we have a signal-to-noise ratio of 100 to 1. This would be the limiting signal-to-noise ratio, the best you could achieve. It would be limited by the statistical fluctuations in the incoming signal photons. If we assume the creation of hole-electron pairs within the semiconductor film is ‘correlated’ with the arriving photons, then our measured electronic signal would reflect the same limit.
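
The photon-limited signal-to-noise ratio, N divided by the square root of N, can be checked with a minimal Python sketch. The analytic line reproduces the 10,000-photon example; the simulation uses a smaller, assumed mean of 100 photons per thirtieth-of-a-second frame so it runs quickly, and it models arrivals as a Poisson process with exponential gaps between photons.

```python
import math
import random
import statistics

def shot_noise_snr(mean_photons):
    """Photon-noise-limited SNR: signal N divided by RMS noise sqrt(N)."""
    return mean_photons / math.sqrt(mean_photons)

print(shot_noise_snr(10_000))   # 100.0, the limit in the example above

def photons_in_frame(rng, rate, frame):
    """Count arrivals of a Poisson process (exponential gaps) within one frame time."""
    t, count = rng.expovariate(rate), 0
    while t < frame:
        count += 1
        t += rng.expovariate(rate)
    return count

# Assumed mean of 100 photons per 1/30 s frame
rng = random.Random(42)
frame = 1 / 30
counts = [photons_in_frame(rng, 100 / frame, frame) for _ in range(5000)]
print(statistics.fmean(counts) / statistics.pstdev(counts))   # close to sqrt(100) = 10
```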

The idea of integration is extremely important because it represents the process of adding or summing up all of the arriving signal photons. If you would simply measure the signal at the moment of time that the beam dwelled on a particular area of the film, then this would be what we call a point detector, one that doesn’t have any storage capability. This process of signal integration was quite interesting to me and formed the basis for my future work.

The idea occurred to me to place semiconductor devices, in particular a ‘mosaic’ or checkerboard pattern of p-n junctions, into a vacuum tube and scan these junctions with an electron beam. It would then be possible to achieve signal detection and integration in much the same manner as with a continuous photoconductive film. At the time, I assumed other researchers were working on this concept. A p-n junction has a very large reverse resistance. It also has a reverse-biased capacitance. So the p-n junction would have a very large RC time constant, similar to the photoconductive film, which could be used to store information. The result was a checkerboard of these p-n junctions, which were laterally isolated from each other by the large RC time constant. We called this configuration a ‘mosaic’. Since there were camera tubes in existence, called the Orthicon and Vidicon, we called this camera tube the ‘Mosaicon’. I worked on trying to create ‘mosaics’ of diodes in materials like Silicon, Germanium, Indium Antimonide, and Indium Arsenide, with the goal of trying to move into the far-infrared region, which was the desire of the U.S. Army.

I also recognized these p-n junctions were not only storing information, but they were sensing the information. So the sensing and storage operations were in the same physical region of the structure. I thought this put too much of a restriction on the operation of the image sensor. I thought, why don’t we just store the information in one region and sense it in another. Then I placed ‘mosaics’ of transistors into a tube and scanned the collectors of the transistors. In the transistor, the emitter would be the sensing region and the base-collector region would be the storage region. So I began to do research on these structures.

So, my early experience was on storage or integration for the improvement of the signal-to-noise ratio in image tubes. I actually verified a p-n junction could be used as a storage device. I remember taking a p-n junction photodiode that I fabricated and replacing the addressing or commutation of the diode by the electron beam with a mercury-wetted relay, which served as an excellent switch. The diode was placed across the input of my first MOS transistor, which I fabricated around 1963. I placed the output of the diode into the gate of the MOS transistor and I took a look at the signal that came out of the source of the MOS transistor. I synchronized the reset operation of the p-n junction photodiode with an incident ‘chopped’ light source. I could see the decay, caused by the incident light, of the voltage across the photodiode at the source of the MOS transistor. So, this little experiment showed that, indeed, I could store information on a p-n junction photodiode. I went ahead and derived some equations to explain the operation.
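
The storage behavior seen in this experiment can be sketched with a simple linear discharge model in Python. These are not the equations mentioned above, which the interview does not give; the sketch assumes a constant photocurrent and a fixed junction capacitance (a real junction capacitance varies with voltage), and all numbers are hypothetical.

```python
Q_E = 1.602176634e-19  # elementary charge, C

def photodiode_voltage(v_reset, photocurrent, capacitance, t):
    """Voltage on an isolated photodiode t seconds after reset.

    Simplified storage-mode model (an assumption): the photocharge I*t
    linearly discharges a fixed capacitance C. The result is clipped at 0 V,
    a crude stand-in for the junction no longer being reverse biased.
    """
    return max(v_reset - photocurrent * t / capacitance, 0.0)

# Hypothetical values: 5 V reset, 10 pA photocurrent, 1 pF junction capacitance
frame = 1 / 30
for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    t = fraction * frame
    print(f"t = {t * 1e3:5.1f} ms  V = {photodiode_voltage(5.0, 10e-12, 1e-12, t):.3f} V")

# Photocharge stored during one frame, in electrons
print(round(10e-12 * frame / Q_E))
```

The voltage drop at the end of the frame is the integrated photocharge divided by the capacitance, which is the stored signal that the next reset replaces.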

Morton:

You mentioned the storage capability of these arrays or mosaics. Was anybody at that time interested in using this as a memory device rather than an imaging device? Or not, really?

White:

Well, I think from a memory point of view the information was being put in optically, and there was a memory only in the sense of storage or integration over a frame time of scan. But no, not that I’m aware of at the time. We were not considering this. Eventually, when you went to solid-state commutation, in other words, when you went from an electron beam addressing these diodes to MOS transistors addressing them, the thinking was that you could use switches and capacitors to store information. Of course, one of the very famous inventions along these lines is IBM’s Bob Dennard’s invention of the one-transistor, one-capacitor DRAM cell. So, in a sense, you store information on a capacitor, open up the switch, and let the information sit for a period of time. In this operation, you don’t want anything to discharge the capacitor, unlike the case we discussed previously, where the incident photons discharged the storage capacitor of the photoconductive film or p-n junction photodiode. So although the operation is different, the storage principle still applies.

So, as I said, I was very interested in the idea of storage. Then, when the MOS transistor arrived on the scene, I worked with other people at my company, Westinghouse, to develop Complementary MOS (CMOS) transistors. I remember seeing a paper at the ISSCC meeting in 1963 by Frank Wanlass and C. T. Sah of Fairchild Semiconductor. They had taken n-channel and p-channel devices (I believe they were discrete devices), placed them inside a package, and made basic CMOS circuits, which was quite impressive. I went back to the laboratory and duplicated these CMOS circuits. I decided it would be pretty interesting to put them on the same chip. So a colleague, Ronald Cricchi, and I worked on these CMOS circuits and we came up with a way to integrate the CMOS devices and put them on the same chip, which we published in several papers.

We, my colleagues and I, were very interested in CMOS devices and circuits from an early stage. We were also interested in CMOS circuits because they offered very low power consumption. Would you believe, at that time, hardly a person thought CMOS technology would go anywhere? I had other thoughts, however, and wrote a chapter on MOS transistors for Academic Press in 1971 for the Semiconductors and Semimetals Series. I did this chapter while I was studying for my PhD at The Ohio State University in the 1966-68 time frame. In this chapter, I described the advantages of CMOS devices and circuits. However, there were many skeptics who claimed CMOS required too much chip area. The CMOS technology hadn’t developed at the time to permit transistors to be highly packed on a chip. Also, CMOS technology required one extra photomask operation. I can’t believe, looking back, that this was a consideration, but CMOS at the time was relegated to space applications, where low power dissipation was essential. It was also relegated to medical pacemakers and other battery-operated applications. Who would have predicted the emergence of consumer products with the demand for low power?

With the development of CMOS technology, I started to work on the integration of CMOS technology and photodiodes for self-scanned imaging arrays. So, instead of using an electron beam to scan the photodiodes, I began using CMOS devices to act as switches to commutate or to reset the photodiodes with subsequent readout.

Morton:

Could you explain how these are actually physically constructed, too? It’s a little unclear to me how you put these things in physical proximity, or are they sort of made together?

White:

Well, they’re made together on the same chip. The CMOS devices have very low power consumption. For example, in logic devices, you always have an ‘off’ transistor in series with the power supply. In a simple inverter logic circuit, you have an n-channel and a p-channel device, and they’re in series with one another and with the power supply. When the p-channel device is ‘on’, the n-channel device is ‘off’. Conversely, if the p-channel device is ‘off’, the n-channel device is ‘on’. So, in either logic state, a one or a zero, you’ll always have an ‘off’ device in series with the power supply. This means the standby current drawn from the power supply will be quite low, say picoamperes. The total power dissipation from a standby or ‘static’ point of view, which is the product of this standby current and the power supply voltage, is extremely small in either the one or the zero state of the inverter circuit. You dissipate power when you are switching between the two logic states. During this time, you’re charging and discharging the so-called ‘load capacitance’ that is connected across the output node of the CMOS circuit, in this case a simple CMOS inverter circuit. In this switching operation, the energy on a capacitor is $CV^2/2$, where $C$ is the ‘load capacitance’ and $V$ is the supply voltage. If you divide the energy by one-half the clock period, $T/2$, the time to discharge the capacitor, you have $CV^2/T$, or $CV^2 f$, where $f = 1/T$ is the clock frequency. So, the dynamic power dissipation is simply $P_D = CV^2 f$.
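
The contrast between static and dynamic dissipation can be put into numbers with a minimal Python sketch of $P_D = CV^2 f$ and of standby current times supply voltage. The load capacitance, supply voltage, clock frequency, and leakage current are hypothetical values chosen only for illustration.

```python
def dynamic_power(c_load, v_supply, f_clock):
    """Dynamic switching power of a CMOS node, P_D = C * V**2 * f, in watts."""
    return c_load * v_supply ** 2 * f_clock

def static_power(i_standby, v_supply):
    """Standby ('static') power: leakage through the 'off' device times the supply voltage."""
    return i_standby * v_supply

# Hypothetical inverter: 1 pF load, 5 V supply, 10 MHz clock, 1 pA standby leakage
p_dyn = dynamic_power(1e-12, 5.0, 10e6)
p_static = static_power(1e-12, 5.0)
print(f"dynamic {p_dyn * 1e3:.2f} mW, static {p_static * 1e12:.0f} pW")  # dynamic 0.25 mW, static 5 pW
```

With these assumed values the switching power exceeds the standby power by more than seven orders of magnitude, and doubling the clock frequency doubles it.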

If you increase the frequency, then the power dissipation goes up proportionally. If you’re going to try to keep the power dissipation under control, then you must either reduce the ‘load capacitance’, which is what people are trying to do today by making dielectrics with a lower dielectric constant, or reduce the power supply voltage. In the early days of MOS transistors, the voltages were as high as 30 volts for p-channel circuits. Eventually, as the technology improved with migration to n-channel and eventually CMOS devices, these power supply voltages decreased to 12 volts, then 5 volts, down to 3.3 volts, and now down to 1.8 volts. Thus, the dynamic power dissipation falls with the square of the power supply voltage.
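
The quadratic payoff of each supply-voltage step can be tabulated with a short Python sketch. It assumes the load capacitance and clock frequency are held fixed, which real technology generations do not do, so the ratios isolate only the voltage term of $P_D = CV^2 f$.

```python
def relative_dynamic_power(v_new, v_old):
    """Ratio of dynamic power after a supply change, with C and f held fixed (P ~ V**2)."""
    return (v_new / v_old) ** 2

# Supply voltages mentioned in the interview, from early p-channel MOS to 1999
supplies = [30.0, 12.0, 5.0, 3.3, 1.8]
for v_old, v_new in zip(supplies, supplies[1:]):
    ratio = relative_dynamic_power(v_new, v_old)
    print(f"{v_old:4.1f} V -> {v_new:3.1f} V: power x {ratio:.3f} ({1 / ratio:.1f}x lower)")

print(f"30 V -> 1.8 V overall: {1 / relative_dynamic_power(1.8, 30.0):.0f}x lower")
```

For example, the step from 5 volts to 1.8 volts alone cuts dynamic power to about 13 percent of its former value.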

CMOS devices can be used as logic devices, but they can also be used in linear devices or amplifiers. Both logic and linear devices are needed in imaging arrays. We need to address the photodiodes and apply a reset voltage, but we also need to be able to take and read out the stored information on the photodiode. In the process of working on self-scanned CMOS photodiode image arrays, I started to realize there was an intrinsic limitation to reading out the stored information in these arrays. This limitation came because of the inherent Nyquist noise present in the resistor. We know there are fluctuations of electrons in the resistor and these fluctuations give rise to noise. So, consider a resistor with an ideal series switch, a series capacitor and a series voltage source, VR, called the ‘reset’ voltage. If you proceed to close the switch for a sufficiently long period of time to ‘reset’ the capacitor to the ‘reset’ voltage, VR, then the fluctuations in the electrons within the resistor will result in a ‘noise’ charge stored across the capacitor. If you leave the switch closed long enough and reopen the switch, then, in addition to the ‘reset’ voltage, VR, and a possible ‘feedthrough’ voltage from the switch itself, there will be an uncertainty in the voltage across the capacitance arising from the electron fluctuation within the resistor. This uncertainty is called the ‘noise’ voltage. In essence, if you have a switch and you have a voltage connected to one terminal and you’re going to try to reset the capacitance to that voltage, then you’ll never obtain exactly this ‘reset’ voltage across the capacitor. The actual voltage will be a combination of the ‘reset’ voltage, feedthroughs and a ‘noise’ voltage. In fact, in this example we don’t even need the resistor because a practical switch and the connecting wires always have a finite resistance.

Now, how can we envision this? From the Equipartition Theorem of Physics and Thermodynamics, we know $kT/2$, where $k$ is Boltzmann’s constant and $T$ is absolute temperature, is the thermal energy associated with each degree of freedom in a system in thermal equilibrium. If we set this thermal energy equal to the energy stored on a capacitance, $kT/2 = C\overline{v_n^2}/2$, where $\overline{v_n^2}$ is the mean-square noise voltage, then we see the RMS noise voltage is simply $(\overline{v_n^2})^{1/2} = \sqrt{kT/C}$. If we rearrange the equation again and write it in terms of noise charge, $q_n = C v_n$, then we see the RMS noise charge is $(\overline{q_n^2})^{1/2} = \sqrt{kTC}$. If we put in some numbers at room temperature and we assume 0.25 pF of capacitance, then the uncertainty in setting this capacitance to a voltage would be around 200 noise electrons, which would set the so-called ‘noise floor’ for the system. Thus, we must remove this uncertainty if we are to improve the signal-to-noise ratio for this system.

I saw this as a basic limitation in all of the sampling circuits that we were using. At that time we were not able to reduce the noise, so we had a major limitation. This noise, if you translate it back to an incoming light signal, set the lowest level of light that we would be able to detect in an imaging system. So I started to think about this a little bit. I said, “This is a sampling system and each time we reset the capacitance we don’t know the noise because it’s going to be different from sample to sample.” When we open up the reset switch, that reset uncertainty is not going to change. It’s going to be stored or integrated on the capacitance. It may leak away a little bit if we left the switch open for a long period of time, but the times we’re talking about are milliseconds or maybe less. Today they’re microseconds. So in that period of time the reset uncertainty, although we don’t know what it is, remains on top of the capacitor.
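
The ‘kTC’ reset-noise relations can be evaluated numerically with a minimal Python sketch. It assumes T = 300 K for room temperature. Under that assumption, a capacitance of about 0.25 pF gives roughly 200 noise electrons, matching the figure quoted in the interview, whereas 0.25 fF would give only about 6.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
Q_E = 1.602176634e-19   # elementary charge, C

def ktc_noise(capacitance, temperature=300.0):
    """RMS reset noise on a capacitor: voltage sqrt(kT/C) and charge sqrt(kTC).

    Returns (volts RMS, electrons RMS); 300 K is an assumed room temperature.
    """
    v_rms = math.sqrt(K_B * temperature / capacitance)
    q_rms = math.sqrt(K_B * temperature * capacitance)
    return v_rms, q_rms / Q_E

v_rms, electrons = ktc_noise(0.25e-12)
print(f"{v_rms * 1e6:.0f} uV RMS, {electrons:.0f} electrons RMS")  # 129 uV RMS, 201 electrons RMS
```

Note the opposite trends: a smaller capacitance stores fewer noise electrons but shows a larger noise voltage.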

I got the idea, “Why don’t we just measure the sampled value of the reset level uncertainty, which is an instantaneous value of the noise? Why don’t we just take the output of the capacitor and put it through a set of amplifiers and measure it, with a ‘clamping operation’, and find out what it is during each sample time?” Then, while that particular reset level uncertainty is on the capacitor, why don’t we inject our desired signal on top of the capacitor during a separate time interval? Why don’t we just put the signal on the capacitor and assume that we can put it there in a relatively noise-free way? Now that’s a major assumption, but it turns out that that’s not too far from the truth. So, if we put our signal on top of the capacitor, it raises the reset uncertainty level by the signal level. In addition to the actual reset voltage level, as I have mentioned, there are also feedthroughs and other possible sources of noise, but we’ve measured all that during the ‘clamp’ operation. Next, we ‘sample’ the value of the combination of the signal and reset uncertainty level during a separate time interval called the ‘sample’ operation. Finally, we subtract the ‘clamp’ level from the ‘sample’ level to remove the reset uncertainty level. Therefore, in the case of imaging sensors, it takes two samples during each picture element time (called a pixel time) to remove the reset uncertainty and hence the so-called ‘reset’ noise. The first sample, as I mentioned, is called a ‘clamp’, while the second I call a ‘sample’. By differencing these two samples, you’re able to remove a good part of the noise due to the reset level uncertainty.

I called this process Correlated Double Sampling or CDS. It made a world of difference. It was remarkable. It brought the number of electrons down from 200, in the case of our previous example, let’s say, to 2 electrons. It opened up all kinds of possible applications. It opened up applications in medicine, in endoscopy, in astronomy to see M31 stars that produce a photon every 20 seconds. It opened up applications in extremely low light level imagery. At the time, it was aimed more at imaging arrays that were used in satellite applications, such as for NASA. It was useful for scientific, medical and military applications. So, I managed to be able to publish the CDS technique and received the necessary company approval. I was doing the research under the sponsorship of Independent Research and Development.
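
The clamp-sample-subtract sequence of correlated double sampling can be illustrated with a small Python Monte Carlo sketch. The 200-electron reset uncertainty follows the example above; the 2-electron per-sample amplifier noise and the 1000-electron signal are hypothetical. Levels are expressed in electrons relative to the nominal reset voltage, so the ‘single sample’ reading assumes that nominal value is simply subtracted.

```python
import random
import statistics

RESET_NOISE_E = 200.0  # reset ('kTC') uncertainty, electrons RMS, as in the example above
READ_NOISE_E = 2.0     # hypothetical amplifier noise added to each sample, electrons RMS
SIGNAL_E = 1000.0      # hypothetical signal charge, electrons

def read_pixel(rng):
    """Return (single_sample, cds_value) for one simulated pixel readout."""
    reset_level = rng.gauss(0.0, RESET_NOISE_E)                     # frozen when the reset switch opens
    clamp = reset_level + rng.gauss(0.0, READ_NOISE_E)              # first sample: reset level only
    sample = reset_level + SIGNAL_E + rng.gauss(0.0, READ_NOISE_E)  # second sample: reset level plus signal
    return sample, sample - clamp                                   # CDS: subtract the clamp from the sample

rng = random.Random(1)
readings = [read_pixel(rng) for _ in range(20_000)]
single = [s for s, _ in readings]
cds = [d for _, d in readings]
print(f"single sample: mean {statistics.fmean(single):7.1f} e, RMS noise {statistics.stdev(single):6.1f} e")
print(f"CDS:           mean {statistics.fmean(cds):7.1f} e, RMS noise {statistics.stdev(cds):6.1f} e")
```

Because the same reset level appears in both samples, it cancels in the difference. What remains is the noise of the two samples themselves, about 2.8 electrons here (the square root of 2 times 2 electrons), instead of roughly 200.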

After I did the work on CDS, which was the middle ‘70s, I worked on other subjects. I didn’t come back to CDS again until I went to the university. In the years ensuing, I noticed people started to refer back to the CDS work and began using this technique in their applications.

Transition to Lehigh University

White:

I left Westinghouse in 1981 to go to Lehigh University. However, prior to leaving Westinghouse, I spent a short period of time in Belgium. I received a Senior Fulbright Research Professorship to work in microelectronics at the Université catholique de Louvain in Louvain-la-Neuve, Belgium. When I was offered the chance to go on a Fulbright to Belgium, I told my manager (Gene Strull). He asked me what they were paying me, and I told him. He said, “Well, that’s not an awful lot of money and you have your house back here. I’ll take the difference in your salary and put it in the bank for you so you’ll have it when you come back.” It’s nice to work for a company where your management cares for you, because I think that young people like to know they’re working in such an environment. Now it turns out I did leave the company when I came back, but I left with a good feeling and I still work with people at the company.

After I left Westinghouse and joined Lehigh University, I consulted with the Kodak Research Laboratories during the 1980’s and helped them, in the early years, develop image sensors. I remained a consultant with Kodak for over 15 years. I have managed to stay active in the field of consulting. I have consulted with the Naval Research Laboratory as well.

Metal Nitride Oxide Semiconductor transistor; Nonvolatile Semiconductor Memories

In the late ‘60s, I started working on another device, which was called a Metal Nitride Oxide Semiconductor (MNOS) transistor, a Nonvolatile Semiconductor Memory device. Initially, my colleagues and I were searching for a way to make a MOS transistor without an oxide, and we thought we could replace the silicon dioxide with a dielectric called silicon nitride. We knew silicon nitride had properties that might be used to make a more reliable transistor in many ways. For example, we knew silicon nitride could stop sodium ions and water from permeating through the film to the semiconductor surface.

In the process of our studies, we examined some transistors made with a deposited silicon nitride gate dielectric. Our studies were performed with fairly low voltages (less than 10 volts) and these transistors were fairly normal. However, as it later turned out, if you were to apply a very high voltage on these transistors, which we did not do at the time, then these transistors would exhibit a so-called ‘hysteresis’ in their electrical characteristics. The people at our Westinghouse Research Laboratories looked at their MNOS capacitors at high voltages (greater than 25 volts). They observed a hysteresis in the C-V characteristics and described the results as a reliability issue. However, other researchers at Sperry Rand Corporation saw these devices as an opportunity to create solid-state, nonvolatile, semiconductor memories. So, we started working on these devices in the late 1960’s and I’ve been working continuously on the same type of structure for the past 30 some years.

These devices evolved into Nonvolatile Semiconductor Memories (NVSMs). Today, we call these SONOS (silicon-oxide-nitride-oxide-silicon) NVSMs. Over the years, these SONOS NVSMs have been used primarily in space and defense applications; however, today there are a number of companies looking to use this kind of memory because SONOS memories program and erase with relatively low voltages (i.e. less than 10 volts). SONOS memories find application in so-called ‘smart’ cards, EEPROMs, NVRAMs and application specific integrated circuits (ASICs).

My graduate students and I have been working on SONOS memory devices for about 20 years now since I’ve come here to Lehigh. I received my Fellow Award (1974) not only for Solid State Imaging, but also for nonvolatile semiconductor memory devices. I suppose you probably want to know about my start with the Electron Devices Society?

Morton:

Before you get into that, do you know a fellow named Richard Petritz?

White:

Yes, Dick Petritz?

Morton:

Isn’t he also involved in similar memory technology in his current company?

White:

He may be, but the Dick Petritz I knew was involved in infrared imaging many years ago at TI.

Morton:

Yes. I’m sure it’s the same guy.

White:

I’m sure it is, too.

Morton:

Well, I interviewed him a few years ago, but I think now he’s doing something like what you described.

Electron Devices Society

White:

As I told you, I started going to meetings like the International Solid State Circuits Conference (ISSCC), the International Electron Devices Meeting (IEDM), and the Device Research Conference (DRC). I found these were wonderful meetings to go to, especially for talking to people. That’s where you really learn a lot, where you communicate with people in the halls and go to lunch and dinner with them. That’s why it’s so important for young people to go to meetings, because they find out things through contacts with other workers in the field.

So I thought about getting involved in the Electron Devices Society (EDS) and I wasn’t quite sure how to go about it. An opportunity arose because of John Szedon at the Westinghouse Research Laboratories in Pittsburgh, who was doing the newsletter for the Electron Devices Society. John had done some of the early work on the MNOS devices and discovered the ‘hysteresis’ reliability problem. So, John said to me, “Marvin, I’ve had this newsletter for some time now. It’s a lot of work and even though you have editors you’ll find you’ll have to do a lot of the digging to get this thing going.” The EDS newsletter was a ‘paste-up’ at that time. He said, “How would you like to take it over? You have opportunities to get to meet people and interview them and have a really good view of the Society.” So, I took his suggestion and took over the EDS newsletter for three years. At first I continued producing the newsletter every month, but eventually I went to a bimonthly edition. I found the experience very good because I called people on the phone, I went and got pictures from them, I interviewed them, I wrote stories, and I really got to know all the people in the Society.

I would advise young people, if they want to work in the EDS or any technical Society, to just let somebody in the Society know their interests. There’s always something for an individual to do in any Society. You get to meet really wonderful people, people that are really doing great work in the field. I think it’s an excellent experience for a person wishing to contribute to the field. I’ve worked on the EDS Administrative Committee (AdCom) and held the EDS Membership Chair for more than 12 years. I’ve also done Chapters and Education. Over the years, I’ve been more of a worker than a manager, although I’ve had my share of management responsibilities. I like to do things that I see make an impact. I can organize, but I really like to take on specific challenges like the newsletter and membership areas.

So I got involved in the newsletter and I did it for three years. This opportunity was a real lead into the EDS. Over the years, I have worked with many EDS presidents. I worked with Frank Barnes from the University of Colorado, Harvey Nathanson from Westinghouse, Greg Stillman from the University of Illinois, Craig Casey from Duke University, Lew Terman from IBM, Lou Parrillo and Al MacRae from AT&T Bell Laboratories and later Motorola, and Bruce Griffin and Rick Dill from General Electric. The Society has really done a wonderful job over the years sponsoring meetings and seeing to it that information is available to workers in the field. They’ve done a good job in terms of opening up new journals like the Electron Device Letters. I remember when George Smith got it started, no one thought that it would really catch on with the membership. George really did a wonderful job, got the Letters moving, and made it self-sufficient. It’s really quite a nice forum now for putting information out to EDS members.

History of the field, 1960s-1970s

Morton:

During those years you were working on the newsletter, again, it sounds like you got a great perspective on all kinds of things that were going on outside of your own specialty. Concentrating on that period--

White:

What was happening? Well, the big thing that was happening in the ‘60s was the development of a practical Metal Oxide Semiconductor (MOS) Field Effect Transistor, or simply a MOSFET. Prior to the 60’s, Gerald Pearson and William Shockley of the Bell Telephone Laboratories, in the late 1940’s, set out to develop a MOSFET with a metal gate electrode, an ‘air-gap’ insulator, and a silicon semiconductor substrate; however, they had very little success in their experiments. The work of Pearson and Shockley was followed by Bardeen and Brattain, who continued to experiment with a metal electrode, a mica insulator, and a germanium semiconductor substrate; however, they had similar results. Bardeen explained the results of these experiments by suggesting the voltage applied to the metal gate electrode created flux lines, which terminated on so-called surface states at the interface between the semiconductor and the insulator. These surface states were so large in number that flux lines could not penetrate into the bulk of the semiconductor and modulate its conductivity. Only about 10% of the semiconductor conductivity could be modulated in these early experiments. So creating a device based upon the field effect principle was very difficult in the late 1940’s and most of the 1950’s. Near the end of the 1950’s, work was continuing on the MOSFET at the Bell Laboratories by Dawon Kahng and M. M. (John) Atalla. They presented a paper at the 1960 Device Research Conference in Pittsburgh on their experiments with MOSFETs fabricated with a silicon semiconductor, a silicon dioxide insulator, and an aluminum gate electrode.

The other competing Integrated Circuit (IC) technology in the 60’s and 70’s was the Bipolar Junction Transistor or BJT, which suffered from issues relating to power dissipation and packing density. With the arrival of the MOSFET, device engineers were able to make compact circuits and delay lines, and do it with very low power. Another point about a bipolar device is that it doesn’t make a very good low-level switch or ‘chopper’. There’s an inherent offset voltage in the bipolar transistor, whereas in a MOSFET, if you have zero current, then you have zero voltage across the output terminals of the device.

The MOSFET started taking over, but it was with P-channel MOSFET devices in the ‘60s. This was because device and circuit engineers could create designs in N-type substrates and not worry about ‘parasitic’ inversion layers. These inversion layers came as a result of positive charge in the overlying oxide. If you had an N-type semiconductor, then positive oxide charge would just make the surface more N-type. The problem came when you had a P-type semiconductor and you had positive oxide charge. Electrons would be attracted to the surface and you would have parasitic inversion layers coupling N-channel MOSFETs. Thus, the designers faced a loss of device to device isolation.

Rather than deal with the issue of ‘parasitic’ inversion layers, designers initially wanted to design circuits only with P-channel devices, which were fabricated in N-type substrates. After technology progressed to control the growth of high-quality gate oxides with low fixed positive charge, combined with oxide isolation, ion implantation, and self-aligned polysilicon gates, N-channel MOSFET technology became accepted in the design community. Silicon Integrated Circuits (ICs) were designed in the ‘70s with N-channel MOSFET technology.

In the late 1970’s there was an important turning point in integrated circuit technology. This occurred in the transition when industry moved from a 64K DRAM to a 256K DRAM. In this transition, the power dissipation of an N-channel technology design was prohibitively large, with several watts per chip. The reason related to the increase in the number of ‘sense’ amplifiers needed on the larger 256K chip. The power dissipation became high and chips started to heat up. U.S. companies were struggling to hold onto their N-channel MOSFET technology with multiple clocking schemes and flip-chip technology for cooling.

Meanwhile, a number of companies in the aerospace industry had low-power CMOS technology; however, the U.S. consumer industry had not been moving in that direction. Japanese companies, such as Toshiba, started to use CMOS technology in the design of memory arrays and gained a large percentage of the world market in memories and logic. Eventually, the U.S. consumer industry realized the importance of low-power CMOS technology. Power dissipation has played an important role in changing electronics and microelectronics technology. Power dissipation will continue to be an important consideration, especially in portable consumer products.

Charge-coupled device technology and standards committee

White:

In the early 1970s, one of the exciting technological advancements was the introduction of Charge-Coupled Device (CCD) technology. There is no question in my mind, from a personal point of view, that this was a very exciting time for device engineers. Let me explain. In CCD technology, signal charge, represented by minority carriers at a specific location on the semiconductor chip, is moved with overlapping electrodes excited by sequentially applied voltages. With this technique, a signal charge can be moved along the surface of the semiconductor until it reaches a point, let’s say a collection point, where you sense the charge or ‘read out’ the signal. As the voltage on these electrodes goes up and down, a displacement current is created, which is the flow of majority carriers in the electrodes and semiconductor substrate. The signal charge, as I mentioned, is carried by minority carriers. These two carrier species never interact until you reach the collection point where you detect the signal. This means you can move signal charge (minority carriers) without interacting with the majority carriers flowing in the substrate and clocking electrodes.

There was a problem in the operation of these ‘surface-channel’ CCDs. As you moved the charge along the surface of the semiconductor, the charge would become trapped at surface states and you would not be able to get the signal out. Eventually, the charge was moved away from the surface with the introduction of the so-called ‘buried-channel’ CCD. Actually, I believe these devices were discussed in the early 1970s at the Device Research Conference (DRC), which was held at the University of Michigan in Ann Arbor. George Smith and Bill Boyle of the former AT&T Bell Telephone Laboratories received an award this past year at the DRC for their contributions to the development of the buried-channel CCD.
I remember the presentation in Ann Arbor, because it was a hot summer without air conditioning in the dormitories where the attendees stayed.
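The charge-transfer process described above can be sketched numerically. The toy model below is an illustrative assumption, not a device model from the interview: it treats a linear CCD register as a row of wells, and on each clock cycle every packet moves one well toward the output with a charge transfer efficiency `cte`. The fraction `1 - cte` left behind stands in, very loosely, for charge held back by surface-state trapping and trails into the following packet. The function name `ccd_readout` is hypothetical.

```python
def ccd_readout(packets, cte):
    """Clock a linear CCD register once per packet and return the output samples.

    packets[0] sits in the well nearest the output node; cte is the
    fraction of charge that moves forward on each transfer.
    """
    wells = [float(q) for q in packets]
    n = len(wells)
    samples = []
    for _ in range(n):
        # The well next to the output transfers cte of its charge to the sense node.
        samples.append(cte * wells[0])
        # Each well keeps (1 - cte) of its own charge and receives cte of the
        # charge from the well behind it; the last well receives nothing.
        wells = [
            (1.0 - cte) * wells[i] + (cte * wells[i + 1] if i + 1 < n else 0.0)
            for i in range(n)
        ]
    return samples


if __name__ == "__main__":
    # A single packet in the third well from the output makes three
    # transfers before it is sensed.
    print(ccd_readout([0, 0, 1], cte=0.9))
```

With `cte=1.0` the packets come out unchanged and in order. Lowering `cte` reduces the on-time portion of a packet that starts at index k to `cte**(k + 1)` of its original charge and smears the remainder into later samples, which is why long serial registers were so sensitive to trapping.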

Charge-Coupled Devices (CCDs) were wonderful. They were wonderful because we could see they would be great for signal processing and imaging. However, we had to detect a very small signal charge, which could be corrupted by sources of noise. This is where the concept of correlated double sampling (CDS) was employed. Also, we had to develop techniques to electrically inject a signal charge and to transport it with suitably designed clock drivers and all of the associated circuitry. This was a technology where you needed to understand the physics, the materials, the circuits from analog to digital, and the system applications. If you studied and worked on these issues, you could learn a lot about the field of electronics.
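Correlated double sampling can be illustrated with a small simulation. In the sketch below, which is an illustrative model rather than a circuit from the interview, the output node is reset to a level that includes a random reset (kTC) noise offset and is sampled once; the signal charge is then dumped onto the node and it is sampled again. Because the same offset appears in both samples, subtracting them cancels it. The function name `cds_sample`, the 3.0 V reset level, and the noise value are hypothetical, and uncorrelated noise added after the reset is left out to keep the example simple.

```python
import random


def cds_sample(signal_volts, reset_level=3.0, reset_noise_rms=0.01, rng=random):
    """Return (raw, cds) estimates of one pixel's signal voltage.

    The output node is reset to reset_level plus a random offset; dumping
    the signal charge then lowers the node voltage by signal_volts.
    """
    offset = rng.gauss(0.0, reset_noise_rms)   # same offset in both samples
    reset_sample = reset_level + offset         # first sample: just after reset
    signal_sample = reset_level + offset - signal_volts  # second sample
    raw = reset_level - signal_sample           # single sample vs. nominal reset level
    cds = reset_sample - signal_sample          # correlated double sample
    return raw, cds


if __name__ == "__main__":
    rng = random.Random(1)
    pairs = [cds_sample(0.5, rng=rng) for _ in range(1000)]
    # Worst-case error of each estimate around the true 0.5 V signal.
    print(max(abs(r - 0.5) for r, _ in pairs), max(abs(c - 0.5) for _, c in pairs))
```

The raw estimate inherits the full reset noise, while the CDS estimate is off only by floating-point rounding, because in this idealized model the only noise present is the correlated reset offset.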

I got involved in giving talks on this, participating in panels, and just being very active in CCD technology. I was on an IEEE Standards Committee for CCDs headed by Gilbert Amelio of Fairchild Semiconductor; Gil had taken part in the early CCD experiments with Carlo Sequin and Mike Tompsett at the Bell Telephone Laboratories. Gil, Al Tasch, who is now at the University of Texas, myself, and others on the committee were trying to create a so-called ‘standard’ for Charge-Coupled Devices. I had a lot of fun getting to work with people in the field who are still very active today. I met a lot of people there at the time.

Also, to make these devices you had to understand the surfaces of the device and how to control them. That’s so important to improving the yield in semiconductors. It was a wonderful learning experience. I feel this was a major technology development in the early ’70s.

Morton:

Was there anything in that period that everybody thought was going to be really big that never really happened?

White:

Well, some workers believed CCDs could be used in memories, which did not happen.

Morton:

Why didn’t that happen? Did another technology come along that was just obviously better for memory?

White:

I think that to build a memory with CCDs, you needed a serial organization, which made it slower. You had to shift out the signal in a serial or sequential manner, which took considerable time, even at high clock speeds. This was one of the limitations. There was also the signal loss due to trapping. I feel the biggest issues centered on the need to organize CCDs into serially addressed memories and the resulting charge loss in sequential readout.
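The access-time cost of the serial organization described above can be estimated with a short sketch. It assumes a hypothetical recirculating CCD register of `n_bits` cells that shifts one position per clock cycle at `f_clock_hz`; neither parameter comes from the interview. A bit sitting k positions from the output needs k cycles to arrive, so the mean wait over all positions is (n_bits - 1) / 2 cycles, whereas in a random-access memory the access time does not depend on where the bit is stored.

```python
def mean_serial_latency(n_bits, f_clock_hz):
    """Average wait, in seconds, for a randomly chosen bit to reach the output
    of a serial shift-register memory that moves one position per clock cycle."""
    # A bit k positions from the output needs k clock cycles to get there.
    total_cycles = sum(range(n_bits))
    return total_cycles / n_bits / f_clock_hz


if __name__ == "__main__":
    # Hypothetical example: a 4096-bit loop clocked at 1 MHz.
    print(f"{mean_serial_latency(4096, 1e6) * 1e6:.1f} microseconds")
```

For that hypothetical 4096-bit loop at 1 MHz, the average wait is about 2 ms. Raising the clock rate helps, but the wait still grows linearly with the number of bits in the loop.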

Morton:

Was there anything else like that you can think of? I mean, I’m not thinking of anything in particular.

White:

That just didn’t go anywhere?

Morton:

Yes. Something that seemed like a big deal at the time that never really took off or hasn’t taken off yet.

Technological improvements that influenced research

White:

Well, of course, CMOS was in the wings. It wasn’t there yet. People didn’t recognize that it could have moved along more quickly. I think the right supporting technologies are sometimes needed to move a field along; CMOS was able to come along when you had the right set of technologies. I think LOCOS (local oxidation of silicon) technology, where you use oxide isolation, was extremely important for packing devices more efficiently on the wafer. Ion implantation was certainly another major technology. I think Stanford University and Bell Laboratories played a major role in this area. Ion implantation made a major impact on controlling the threshold voltages of MOSFET devices. This was an important step in making N- and P-channel MOSFET devices on the wafers. The threshold voltages had to be controlled, and this was accomplished quite well with ion-implantation technology.
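A common first-order estimate of that threshold control (an idealization added here for illustration, assuming a shallow threshold-adjust implant whose dose sits essentially at the silicon surface) relates the implanted dose $D_I$, in ions per unit area, to the threshold shift through the gate-oxide capacitance per unit area. Here $q$ is the electronic charge, $\varepsilon_{ox}$ the oxide permittivity, and $t_{ox}$ the oxide thickness:

$$\Delta V_T \approx \frac{q\,D_I}{C_{ox}}, \qquad C_{ox} = \frac{\varepsilon_{ox}}{t_{ox}}$$

An acceptor implant such as boron shifts $V_T$ positive, and a donor implant shifts it negative. Because the dose can be metered precisely, this gives the kind of threshold control described above.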

Another development that helped the reliability of MOSFET technology was the move from a metal gate to a polysilicon gate. A metal gate would puncture the oxide as you tried to make the oxide thinner. Beyond reliability, polysilicon gates made self-aligned technology possible: once you put the gate down, you could do the implantation around the edges of the gate and align the source and drain to it. As a result, you have very little overlap capacitance associated with the source and drain regions. This made the MOSFET devices go faster. It also opened the door for more utilization of CMOS technology.
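The overlap capacitance mentioned above can be written, for each side of the transistor, in terms of the gate width $W$, the gate-to-source or gate-to-drain overlap length $L_{ov}$, and the oxide capacitance per unit area $C_{ox} = \varepsilon_{ox}/t_{ox}$ (a standard first-order expression added here for illustration):

$$C_{ov} \approx C_{ox}\, W\, L_{ov} = \frac{\varepsilon_{ox}}{t_{ox}}\, W\, L_{ov}$$

With a separately masked metal gate, the gate had to overlap the source and drain by at least the mask-alignment tolerance; with a self-aligned polysilicon gate, $L_{ov}$ shrinks to roughly the lateral diffusion of the implanted junctions, so $C_{ov}$ drops accordingly.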

The introduction of Computer-Aided Design (CAD) tools helped the design of analog and digital circuits. Circuit design was helped immensely by a simulation tool called SPICE, and process simulation by a tool called SUPREM. Universities made major contributions to the early development of these simulation tools. What would we do without some of them? SUPREM, which came from Professor Bob Dutton’s group at Stanford, helped to simulate the process sequence for an integrated circuit. With SUPREM, you could basically lay out on a computer the steps you wanted to use to make an integrated circuit and simulate the effects of each process step. Eventually, the files that SUPREM generated would go right into SPICE to predict the operation of the circuit. SPICE came from Professor Don Pederson’s group at Berkeley and has proven invaluable for simulating integrated circuit operation. These were very important simulation programs for the development of integrated circuit technology.

Ion implantation, polysilicon gates, self-alignment, and LOCOS technology were all extremely important. Another major technological development was the introduction of silicide technology. It reduced the resistance associated with the source and drain regions as well as the polysilicon gate electrodes in an integrated circuit. It permitted better contacts to devices and improved the current drive available to charge load capacitance. Many other technologies have helped over the years, such as multilevel metallization and, more recently, the development of low-dielectric-constant inter-level dielectrics (ILDs).

In the ’70s (to answer your question), I think there was one logic family that received a lot of attention. It was called Integrated Injection Logic, or I²L. I believe the major problem facing the technology was to ‘steer’ and control currents around a chip. I²L required very precise control over current steering. It was predicted to be a very important technology, but it never made it.

I can say from experience that in areas that push technological advancement, like microwave technology, where you had to make high-speed devices, the microwave people were always ahead of the commercial microprocessor and logic people by several years, at least in pushing the technology. They had to obtain narrower line widths and spacings, and they had to achieve shallower junctions with lower contact resistance, for performance levels that far exceeded what was required in other application areas.

Today, we see microprocessor technology moving into the gigahertz range. We see the need to understand transmission lines with parasitic capacitance and finite line conductivity, and to really understand how to make good connections and contacts to devices.

The advantage of working in a systems development organization, like Westinghouse in Baltimore, was my exposure to many different technologies. I’m sure my colleagues in other companies that were systems-focused but had a device technology lab would agree. In a systems-oriented company you gain an understanding of teamwork to create advanced systems. I knew the role the device would play in the system. I knew what was important and needed in the device properties. I learned from many different disciplines and had the opportunity to work in so many different areas. I was not tied to just one area.

I think the concern for young people today, especially when they join a large company, is that they get into one small area and become very good at it, and then suddenly that area dies away and they haven’t had the chance to grow. Many of our students go to work at large companies, and I am concerned for their future growth. I saw this happen in larger companies, but the advantage I had was that I was able to work on a large number of different projects and also meet with customers and make presentations. I always liked to work on a lot of things because I knew that some of the projects would be successful.

Committees in the Electron Devices Society

Morton:

One other thing you may be able to help me with, although this is somewhat outside your area: there was an energy conversion committee within Electron Devices, but there’s not much information about it. During your years here, was there any activity in that committee?

White:

I don’t remember who was involved in energy conversion devices.

Morton:

It’s a very longstanding committee, but I can’t find out much about it.

White:

I remember that with Lou Parrillo, when I was on the Executive Committee (ExCom) of the EDS Administrative Committee a few years back, we had a Saturday meeting. We used to do that before the Sunday meetings. We’d go over subjects so that when we went into the Sunday meeting the attendees would have a well-thought-out agenda. Before we discussed a controversial issue, we planned ahead of time what we were going to do, because the meetings would go all day long on Sunday and you had to move things along. So we met on Saturday to refine the issues. We had a meeting one time to discuss the future of the EDS. I guess Lou brought up the issue that we had too many EDS technical committees and they were just hanging around for years, with no people involved and no activity. We found that some committees had people involved, but others just hadn’t had any activity in years.

Academic career; Lehigh microelectronics program and laboratory

Morton:

Let’s discuss your move from Westinghouse.

White:

Well, I also taught at the University of Maryland from ’74 to ’78. I enjoyed teaching in the Electrical Engineering department. As you know, I also taught at a night school at Westinghouse called the Westinghouse School of Applied Science and Engineering. Teaching interested me because I thought I could do a good job and it gave me an opportunity to think about some research issues. The Fulbright experience in Belgium made me feel I would like to work at a university. I started to look at possible university positions. I had offers from several schools, but Lehigh University looked interesting. Lehigh had an endowed chair called the Sherman Fairchild Chair. They had constructed a building, and it looked like I could come and put a research program in microelectronics together. It was a small enough school that I thought I could make an impact. My wife, Sophia, thought this would be a nice area to come to. It’s a nice place to live. It’s quiet.

I was able to put a microelectronics program together at Lehigh. I came in 1981 and introduced a course on Very Large Scale Integrated (VLSI) Circuits. The course consisted of a lecture and a laboratory, which used an Applicon Computer-Aided Design (CAD) System. I taught the lecture part of the course, and my wife, Sophia, taught the laboratory and introduced the students to the principles of an operating system. The students learned how to design both analog and digital integrated circuits. I introduced CMOS as well as NMOS circuits into the course curriculum. Of course, when the students left the course and interviewed for jobs in the early ’80s, they received nice offers because they had good experience. So I started to put a program in microelectronics together at Lehigh. I had industrial experience in writing proposals and bringing in funds. I had spoken with customers, written proposals, and made presentations. These were helpful in my new position as a university professor.