Oral-History:Paul Green

About Paul Green

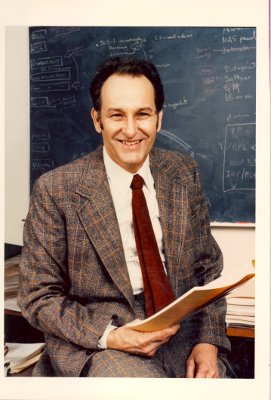

Paul Green received his B.S. from Chapel Hill, his Master's from North Carolina State University (1948), and his Ph.D. from MIT (1953). He worked at Lincoln Lab (1951-1969), IBM (1969-1997), and Tellabs, Inc. since. At Lincoln he helped develop the first operational spread spectrum system and RAKE, a form of which is still the basis for spread spectrum cellular telephones. Via some false starts, he figured out a way to do radar mapping in astronomy. In seismology, his idea of a Large Aperture Seismic Array proved less effective than theory had predicted. At IBM he worked on speech recognition, peer (decentralized) networking, and the all-optical IBM 9729 network, using fiber optic technology (1987-1996). Since then, at Tellabs he has been continuing optical network research, particularly on the optical cross-connect, an optical switch. He has had significant involvement in the IEEE Information Theory Society and the IEEE Communications Society throughout his career, particularly in the latter.

He is most proud of his work on range Doppler mapping, but hopes his optical network work will prove to be enduringly significant. In the field itself, he notes the importance of transistors, information theory, digital signaling, satellites, personal computers, and the complementary developments of fiber optics and wireless communications. He believes that future innovations in fiber optics and bandwidth demand will govern the field’s future development, and that there is a great challenge to inspire students to do real technological innovation, with visionary thinking, and not just hare off into product development.

About the Interview

DR. PAUL GREEN: An Interview Conducted by David Hochfelder, IEEE History Center, 15 October 1999

Interview # 373 for the IEEE History Center, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

Dr. Paul Green, an oral history conducted in 1999 by David Hochfelder, IEEE History Center, Piscataway, NJ, USA.

Interview

INTERVIEW: Dr. Paul Green

INTERVIEWER: David Hochfelder

DATE: 15 October 1999

PLACE: Hawthorne, New York

Overview of changes in communications engineering

Hochfelder:

Good afternoon, Dr. Green. Would you give a summary of the way the field of communications engineering has changed since you became active in it?

Green:

It has been completely transformed. I first got interested in it as an undergraduate student. At that time there were no such things as computers to compute simulations. Everything was closed form mathematics and simulations really hadn’t matured much in communications engineering. Also, the technology necessary to do telephone calls or military kinds of things absolutely dominated everything, and radar in particular. (I suppose one might call communicating with oneself a branch of communications).

Therefore, I watched as the technology became more and more enriched with sophisticated ways of analyzing things, while clever inventions were being made, like the Shannon Theory of communications, digital signaling, and fiber optics with its surprising and totally counterintuitive capability of something so small supporting 25,000 gigahertz of bandwidth. All these things came in a rush.

Looking back, there have been a number of transformations. The pace of technological change has been steadily accelerating and continues to accelerate. If you were to plot technological progress, there’s probably a curve that starts fairly slowly after World War II building almost entirely on the technologies unearthed during the war. Then it accelerates and gets faster and faster to the point where now all sorts of things are doubling in months rather than years, such as bandwidth consumption, individual bit rate and the amount of information presented to users on CRT displays.

Hochfelder:

As in Moore’s Law?

Green:

Yes. I think what’s behind it is both the push of technology and the pull of demand. Technology push is the kind of thing the IEEE does when presiding over a technical innovation, but the consumer doing the pulling is apparently insatiable and there is an unlimited demand for more and more bandwidth and new services.

Bandwidth demand and applications

Hochfelder:

Why is that? What are some of the applications that occupy bandwidth?

Green:

The applications don’t even need to be defined. Give people a little bit more bandwidth and they’ll find something for which that bandwidth is not nearly enough. Think of the material that we’re trying to present on web pages: full motion video, etc. These are real bandwidth pigs. Users are consuming enormous amounts of bandwidth and overloading a major portion of the communication resources of all telecommunication these days. This is true in the U.S. and is probably also the case in all developed countries. What are we getting for it? We are getting stationary pictures and an amount of type that is less than you would have on a printed page. We are certainly not rifling through pages doing fast browsing, and we’re not showing much motion video. If we did, it would be in just two dimensions, not 3-D visualization.

The calculations people made years ago about the limited acceptance bandwidth of the human brain are all wrong. John Pierce and others in the early days of information theory computed, to an order of magnitude, what they thought was the upper limit on how much information the human brain could assimilate.

Due to phenomena like rapid browsing and quick eye motion, and the human ability to process large information in chunks, provided it is presented in a fraction of a second, assimilating information quickly is not something that can be calculated in a cold-blooded way. There does not seem to be some crisp information theoretic upper limit.

What I’m trying to articulate is that, given the bandwidth, we greedy human beings will find applications for it that are probably going to go well beyond anything that can be provided. I don’t think one has to worry about the applications being there. People will think of them.

Major developments in communications engineering

Satellites

Hochfelder:

What do you think have been the major developments in communications engineering since World War II?

Green:

Satellites were an important development, though I would suggest that the importance of the satellite as a communication entity is overshadowed by its importance as a navigation and reconnaissance instrument. The Weather Channel is a good example of how high tech has been blended into the entertainment media to produce something remarkable. The transistor may be more significant than any other one development.

Fiber optics

Green:

Along about the 1970s came fiber optics. That is what I work on, and that has been an absolutely key development. I would also list the PC as a key development, as a window through which human beings look at information. I think of the Web as a major subplot of the PC story. The democratization of computing, having come out of the computer room and onto desktops and laptops while at the same time providing the resources of ARPANET, the Internet and the Web has certainly been a significant development. Have I forgotten anything really key?

Wireless

Hochfelder:

Wireless?

Green:

Of course. How could I forget wireless? It took me a while to realize exactly how to say this, but it looks to me as though wireless and fiber optics are the two big Cinderella technologies. Wireless goes everywhere but won’t do much when it gets there because of bandwidth limitations, whereas fibers can do almost anything you want when they get there because of their gigantic bandwidth and low attenuation, but because they have to be strung on poles or run underground or whatever they can’t go everywhere. These two mutually complementary technologies are making all the headlines and they’re defining where we’re going. Satellites are not making headlines much anymore. And who remembers intercity coaxial cable? Cell phones and pagers go anywhere but don’t do too much when they get there. However, when that is combined with the phenomenon of fiber optics, which handles the insatiable demand for bandwidth, they’re defining the future. Yes. How could I have overlooked wireless?

Educational background and military service

Hochfelder:

Would you talk about your career, starting with your educational background and military service?

Green:

I’m from Chapel Hill, North Carolina. I went to school and got my undergraduate degree there before being carried off by the Naval ROTC just as World War II was ending. When I returned in 1946, I started my Master’s at North Carolina State University, and was finished in 1948. Then I worked for a year and got married. Since I married a Boston girl, needless to say I had to go to MIT, which I entered in ’49. I got my Doctorate at MIT in 1953. In the meantime I had joined the recently formed Lincoln Lab, which had been a response to the North Korean invasion of the South. I worked at Lincoln Lab from its inception in 1951 until 1969.

By 1969 I was sick of working for the military. We were in the middle of the Vietnam War, and I would get up every morning and wonder why I was having my paycheck paid by people who were burning up little brown people in Asia. I couldn’t stand it. Therefore, when the offer to head a so-called communications science program at IBM Research came along I pulled up my family, which by then was a wife and five kids, and moved from Boston to Yorktown Heights in New York. I worked there from 1969 until 1997, about twenty-eight years. In 1997 I left IBM to come to Tellabs, Inc., which is where I work now on fiber optic networks. That’s the barest statistics. The technical content of all of this was endlessly fascinating to me.

I had a professor at MIT I looked upon as a role model named Robert Fano. His is a famous name in communication theory. One of the things I admired about him was the fun he got out of being right at the frontier of high-powered technology. Six or seven times in his career he moved on to completely new frontiers after having helped define a field. He worked very hard at what could be called cream skimming. I thought that was just a wonderful way to live. He would do the fun problems, and when they got the least bit mature or stale he would sense the next big problem in a very creative and visionary way. Then he would jump from that lily pad onto another. He was and still is my hero. He’s a retired MIT professor and is still around.

Lincoln Lab at MIT; Venus radar experiment

Green:

That’s kind of the way I tried to operate. When I came to MIT and Lincoln Lab was formed, I inherited a challenge which sounds dull now but which was not at all dull then: communicating between two points using pseudo-noise waveforms. This later came to be called spread spectrum. The idea had been invented by a number of people, and in fact Robert Price, a brilliant engineer with a dose of the historian, later discovered that one of the early inventors of this concept was a very gifted young female movie actress from Vienna named Hedy Lamarr.

Hochfelder:

Right.

Green:

We didn’t know anything about that at the time. We had gotten the idea from someone on a government Air Defense panel who independently conceived the idea that if you communicated using pseudo-noise waveforms instead of the more customary pulses or sinusoids, they would be hard to jam because they would be hard to duplicate, and would be very difficult to intercept because they sound just like noise. The challenge was to develop a way of having two of these pseudo-noises – one at the transmitting end to modulate a message onto, and another at the receiver end, where the key to unlocking this message was to have an identical copy of the same pseudo-noise. I undertook working on finding a way to do this as my Doctorate thesis.
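The shared pseudo-noise idea described above can be illustrated with a minimal Python sketch. It is not a reconstruction of the thesis work or of the F9C: a seeded software random generator stands in for the key generator, and the names `pn_chips`, `spread`, `despread`, the key value, and the noise level are all assumptions made for illustration. The point it shows is that a receiver holding an identical copy of the pseudo-noise can recover the message by correlation.

```python
import random


def pn_chips(key, length):
    """Return `length` pseudo-noise chips (+1/-1) from a generator seeded with `key`."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(length)]


def spread(bits, chips_per_bit, key):
    """Transmitter: multiply each data bit (as +1/-1) by its own stretch of PN chips."""
    pn = pn_chips(key, len(bits) * chips_per_bit)
    return [(1 if bits[i // chips_per_bit] else -1) * chip
            for i, chip in enumerate(pn)]


def despread(received, chips_per_bit, key):
    """Receiver: correlate each bit interval against a locally generated PN copy."""
    pn = pn_chips(key, len(received))
    bits = []
    for start in range(0, len(received), chips_per_bit):
        stop = start + chips_per_bit
        correlation = sum(r * c for r, c in zip(received[start:stop], pn[start:stop]))
        bits.append(1 if correlation > 0 else 0)
    return bits


def demo():
    """Send eight bits through additive Gaussian noise and recover them."""
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    transmitted = spread(message, chips_per_bit=128, key=1953)
    noise = random.Random(7)  # hypothetical channel noise source
    received = [x + noise.gauss(0.0, 1.0) for x in transmitted]
    return message, despread(received, chips_per_bit=128, key=1953)
```

Calling `demo()` returns the original message and the receiver's estimate of it. A receiver seeded with a different key would correlate against an unrelated sequence and see only noise-like output.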

I had had some Navy training duty in what later became the National Security Agency and knew about using cryptographic key generators. I generated two pseudo-noises at both ends using a method that in retrospect was very stupid. However it worked, and it got me my thesis. I studied key generators as a way of generating pseudo-noises at opposite ends of a communication link and synchronizing the two. I had been really proud of solving that problem and thought it was pretty heady stuff, though it’s embarrassing now to look back at how primitive and mistaken a lot of that was. Then the Armed Services immediately wanted to have the results in my thesis deployed into their system. Being an ambitious young guy, I got myself appointed manager of that little project, the Lincoln F9C system, the first operational spread spectrum system. After a while that got kind of frustrating because the ionospheric multipath was causing horrible difficulties. This needed fixing. There was a genius in our group who is another one of my heroes, this same Bob Price. He and I co-invented something called the Rake System as a fix for the F9C system. Here was this ionospheric multipath with many successive arriving replicas of the initial signal that had been sent. We worked on figuring out a way to use the repeated signal arrivals that are all smeared together and to take them apart and put them together again optimally. This was instead of the previous situation of using just one and having to consider the other replicas as noise.
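A minimal Python sketch of the combining idea follows. It is an illustration under stated assumptions, not the Price and Green design: the path delays and gains are taken as known (the real receiver had to measure them), one symbol is sent, and the names `multipath`, `finger`, and `rake` are hypothetical. Each "finger" correlates the received signal with the pseudo-noise at one path delay, and the finger outputs are weighted by path gain and added, which is the technique usually called maximal-ratio combining.

```python
import random


def pn_chips(key, length):
    """Pseudo-noise chips (+1.0/-1.0) from a seeded generator."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def multipath(signal, paths):
    """Sum delayed, scaled replicas of `signal`; `paths` is a list of (delay, gain)."""
    out = [0.0] * (len(signal) + max(delay for delay, _ in paths))
    for delay, gain in paths:
        for i, sample in enumerate(signal):
            out[i + delay] += gain * sample
    return out


def finger(received, pn, delay):
    """One finger: correlate the received samples with the PN copy at one delay."""
    return sum(received[i + delay] * chip for i, chip in enumerate(pn)) / len(pn)


def rake(received, pn, paths):
    """Weight each finger output by its (assumed known) path gain and add them."""
    return sum(gain * finger(received, pn, delay) for delay, gain in paths)


def demo():
    """Compare using one path alone with combining all three."""
    symbol = 1.0
    pn = pn_chips(42, 1024)
    paths = [(0, 0.6), (3, -0.5), (7, 0.4)]  # illustrative delays (chips) and gains
    echo = multipath([symbol * chip for chip in pn], paths)
    noise = random.Random(3)
    received = [x + noise.gauss(0.0, 0.5) for x in echo]
    single = paths[0][1] * finger(received, pn, paths[0][0])
    combined = rake(received, pn, paths)
    return single, combined
```

In `demo()`, the single-path statistic uses only the strongest arrival and treats the other replicas as interference, while the combined statistic collects the energy of all three arrivals and is correspondingly larger.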

We first worked out how to do it, particularly from Bob Price’s theoretical point of view. Then we actually built the Rake Receiver. A form of Rake is the basis of today’s spread spectrum cellular telephones. We did that in 1958, about six years before the invention by Bob Lucky and Dave Forney of the self-adaptive modem that optimizes a different parameter and adapts to whatever channel is in its way. Ours adapted to the ionosphere in its way. That was also pretty heady stuff, a receiver that adapted itself and that took variously arriving ionospheric multipaths and put them all back together again. That tool was built into military systems. And, well, that got stale for me too.

Then one day a bunch of us were sitting around the table in the cafeteria and an historic conversation took place. I recently visited up there and sat at the same table and the same place. Bob Price had returned from Australia where he had been studying radio astronomy and came back with a book on radio astronomy. The last chapter of the book talked about something previously nonexistent called radar astronomy. The Hungarians and Americans had already bounced echoes off the moon, and the conjecture was that one day radar systems would be powerful enough to bounce echoes off Venus. I had read this thing, and Bob and I were sitting at this lunch table and talking about it. There was a big radar set that was just about to be turned on, called Millstone Hill, having more power than any radar set to date. We were wondering if we could try that radar experiment on Venus with Millstone Hill.

Bob Kingston was there too in the cafeteria, and he had just been developing a maser (Microwave Amplification by Stimulated Emission of Radiation), a predecessor of the laser. One could get very low signal detectability with that, which is to say low noise temperature. We called Bob Kingston over to where we were sitting, and he said that yes, he could convert his maser to the frequency at which Millstone worked. Then we spotted Gordon Pettengill. He’d been studying planetary orbits and such. At that lunch Bob Price, myself, Gordon Pettengill and Bob Kingston began conversing together, and by the time we’d gone through three or four paper napkins we had a plan. I think it was from Gordon that we learned we had about eight months to get ready for the next close approach of Venus.

We dropped everything else and started spending the Government’s money without telling anybody. We later learned that we had been found out. It was brought up to the Lincoln Lab Steering Committee and they sort of shrugged and said, “Well, it will keep them out of trouble. Let them go ahead." If you had a good idea, they’d let you work on it. Those were heady days at Lincoln Lab.

We went to work and did this Venus radar experiment when Venus got its closest and didn’t see anything right away. However, Bob Price was one of the world’s most persistent guys and he didn’t give up. He patched together the tapes he’d gotten and finally came out with a convincing but marginal conclusion that he had detected an echo.

It wasn’t just an idle technology stunt. The Space Program was about to be launched, and no one knew the size of the solar system at all accurately. All of the measurements up to that point had been based on parallax measurements, which measured tiny differences of angles and which were notoriously imprecise as far as distances were concerned. Radar is imprecise in angle, but very precise in distance. Everyone needed to know what the Astronomical Unit was, the yardstick of the solar system. We supposedly measured this to about four or five orders of magnitude better accuracy than was known before, and it generated a huge amount of press. For a moment there we thought we were pretty hot stuff.

Retribution came soon enough. The next time Venus came around everyone had much stronger radar systems. The Jet Propulsion Lab people and others, including us, discovered that the Astronomical Unit was a considerably different number. We had just seen some noise wrinkles in the curve and didn’t have as much system margin as we thought. We shouldn’t have and in fact did not detect the target. That was humiliating. We thought we were fairly honest folk, but here we had rushed into print, even had our pictures in The New York Times. Now we had pie all over our faces, and professionally we felt just terrible about it. Price and I were the sort of principal villains in this, and reacted in the same way in that we both buried ourselves in trying to develop total theories of radar astronomy to “redeem our besmirched escutcheons.” I set out to work on how to use modern communication theory to extract the most information about measuring astronomical objects. Bob Price set out to work on how to sensitively detect astronomical objects no matter how screwball their behavior, and how to build mathematically optimum structures to perform the detections.

During my researches I stumbled on how to measure target properties using a very interesting concept. If one used the kind of pseudo-noise waveforms we used for the Venus experiment and did it just the right way, and made a picture of the echoes as a function of two variables – power as a function of tau, the range delay, and f, the Doppler frequency offset – lo and behold, this could be viewed as a map of the target’s reflectivity. It was a somewhat distorted map and had some ambiguities that people later found ways of getting around, but it was a map of the object.

That just blew out our minds - nothing left but a cinder. Humans have been looking at Venus since they developed organs of sight. People had worshipped it. They didn’t know what it was, didn’t understand it, and sure as hell didn’t know what was underneath all those clouds. Any radar that looked at it was not sufficiently narrow beamed (and probably will never be) to resolve features of the planet in angle. Yet it was rotating and it was rough, which meant that it sent back signals at different Doppler offsets and it sent back signals at different ranges. One night I had the insight that if you looked at it just the right way, and if you used a computer to break down the return signal on the basis of power as a function of range and Doppler, the cells of power as a function of these two variables map directly onto whatever is the reflectivity of the object. In this way one could get a picture of Venus looking at it from earth. Wow. At that point, in typical butterfly fashion, I went on to something else.
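The geometry behind this can be sketched in a few lines of Python. This is a simplified illustration, not the processing actually used: the planet is a unit sphere, the radar is far away along the z axis, the spin axis is taken along y, and the function names and grid sizes are assumptions. Under those assumptions a surface point's extra range is proportional to 1 - z and its Doppler offset is proportional to x, so summing echo power into (range, Doppler) cells gives a map of reflectivity.

```python
import math


def delay_doppler_cell(x, y, z, n_delay, n_doppler):
    """Map a point on a unit sphere to a (range, Doppler) cell.

    z points toward the radar and the spin axis is along y (both assumptions).
    """
    delay = 1.0 - z   # extra path in planet radii: 0 at the nearest point, 1 at the limb
    doppler = x       # line-of-sight velocity is proportional to x for spin about y
    i = min(int(delay * n_delay), n_delay - 1)
    j = min(int((doppler + 1.0) / 2.0 * n_doppler), n_doppler - 1)
    return i, j


def delay_doppler_map(reflectivity, n_delay=16, n_doppler=16, steps=200):
    """Accumulate echo power from the visible hemisphere into range-Doppler cells.

    `reflectivity(lat, lon)` is a caller-supplied function; angles are in radians.
    Scattering-law and antenna effects are deliberately ignored.
    """
    grid = [[0.0] * n_doppler for _ in range(n_delay)]
    for a in range(steps):
        lat = -math.pi / 2 + math.pi * (a + 0.5) / steps
        for b in range(steps):
            lon = -math.pi / 2 + math.pi * (b + 0.5) / steps
            x = math.cos(lat) * math.sin(lon)
            y = math.sin(lat)
            z = math.cos(lat) * math.cos(lon)
            i, j = delay_doppler_cell(x, y, z, n_delay, n_doppler)
            grid[i][j] += reflectivity(lat, lon) * math.cos(lat)  # cos(lat): patch area
    return grid
```

Note that `y` never enters the cell calculation, so a point in the northern hemisphere and its mirror image in the south land in the same cell. That is one form of the ambiguity mentioned above.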

Gordon Pettengill, however, picked up that idea and transformed astronomy with it. He was the mastermind behind something called the Magellan spacecraft that flew around Venus, made unbelievable maps and discovered all sorts of things. There are Magellan radar pictures of Venus available from NASA that look like aerial photographs of 1 km resolution. Absolutely unbelievable. It’s done either from the earth or from a spacecraft that gets a little bit closer, and it’s all done with this range Doppler mapping.

I jumped onto another lily pad after that. I was a peacenik and had always been uncomfortable working for the military, but working on something that would help the cause of international understanding, if there was such a thing in this mass of technology, was what I wanted to do. I got interested in seismology because of the Test Ban problem. There were desperate negotiations to arrive at a treaty, and the headlines from last week were just sickening to me. People like me with twenty years of work invested have been betrayed by Senator Helms and the crazy Republican Senate in Washington.

Hochfelder:

Are you referring to the Comprehensive Test Ban?

Green:

Yes, the Senate’s rejection of it. In 1965 we started working on building a large seismic array using what we knew about signal processing theory and the use of computers to exercise the theories. Our experience base was all the work we had done, ranging from the ionosphere all the way up through echoes from Venus.

Mathematically, you would think in some sort of high level sense that you could map backward from some received seismic signals and figure out what happened at the source. Whether it was a bomb or an earthquake, what you needed in order to distinguish between the two was a very large array of seismometers. To make a very long story short, under ARPA sponsorship we hatched the idea of something called a Large Aperture Seismic Array (LASA). Our group at Lincoln Lab spearheaded the thing. Two of them were built, and they were huge – about 200 km across. One was in a deserted area in eastern Montana and the other in northern Norway near the then Soviet Union. I think the one in Norway is still running. This was an effort that essentially never went anywhere for political as well as technical reasons. The arrays didn’t work as well as the theory said they should due to complexities of random and unmeasurable variations in the ground underneath the different seismometers. We gave it our best shot for four years, until 1969 when the Vietnam War was raging and I wanted out.

IBM

Management; Advanced Peer-to-Peer Networking (APPN)

Green:

I went to IBM under the illusion that I could be a big manager. However it didn’t take me or my superiors long to learn that I wasn’t worth much in that capacity. I went from one job to another at IBM. I presided over the initiation of a number of things at IBM, including the speech recognition program. I worked with that one during its genesis, though I wasn’t the one that cooked up the idea. I was manager of a number of programs like that. Two successful technical projects I did initiate myself were peer networking and the IBM 9729, part of the all-optical networking vision I’ve been preaching since 1987.

As to peer networking, IBM had the idea of very centralized networking architectures based on the notion that if you built a network it had to be presided over by a single control point in a large mainframe. However, one already had minicomputers and could already clearly predict the arrival of the personal computer. My group at IBM Research postulated the idea of having a networking architecture in which every machine that was a member of the network would take its own responsibility for entering, participating in, and leaving the network. After a tremendous amount of painful lobbying and internal battles, it became the accepted evolution of the IBM standard way of networking called Systems Network Architecture (SNA). Our scheme was called Advanced Peer-to-Peer Networking (APPN).

By 1987 the younger guys were running circles around me and there wasn’t really anything for me to do anymore in that whole network architecture area, so I got fed up with that.

Fiber optics group; wavelength division for networks

Green:

Then one day someone mentioned fiber optics. That led to my initiating this program at IBM in which I am still involved, although we and our program have moved on to another company. This program involves using optical wavelength division for building a whole new generation of networks that are of enormous bandwidth and are signal transparent. You can send any format you want through fiber at any bit rate. With WDM, each different wavelength looks like an individual fiber, so rather than having one fiber per communication transmission we were imagining systems in which a large number of such paths could be generated within the same fiber.

Our very small group was patterned after the kind of group I was in at Lincoln Lab as a young student. The group, though small, had really good people, every one of them, and they very carefully spanned all of the relevant disciplines that were required – all the way from greasy thumb engineers to hotshot mathematical types. This group of twelve or fourteen people at IBM developed the product, and in 1995 it was the first wavelength division link that was commercially successful. Initially it was called MuxMaster, but the IBM product people gave it an even more romantic name: IBM 9729.

Hochfelder:

That does have a ring to it.

Green:

It has a certain musical quality to it. This MuxMaster, or IBM 9729, although a historic first, was not much of a success, because, as became clear by 1996, hooking up data centers with optical wavelength division multiplexing was not where the action was. The action was in the center of the telephone system where the exhaust of the fibers available first hit. If you’ve run out of fibers, there is only one thing you can do other than dig up the ground and put in more, and that is to put in wavelength division multiplexing. Other groups were running with this, but we had been innovative in getting the thing started. We weren’t the only ones after 1995, but we were the only ones to have a significantly fruitful effort at the product level. We watched in frustration as this field began to burgeon. Now, of course, as anyone who looks at the telecommunication landscape knows, wavelength division multiplexing, the “all-optical network,” is one of the big buzzwords of communications today because of the great demand for bandwidth.

Tellabs; fiber optics and bandwidth

Green:

We struggled and soldiered on at IBM for a while, and then in ’96 some very creative managers instigated a period of downsizing in IBM. The guys we worked for were really super people. Some of them weren’t the warm cuddly type, but they were very bright. They realized that the guys in our group were working their hearts off in the wrong company and told us, “Don’t feel bad about looking around. In fact we’ll help you.” One thing led to another, and we spun out from IBM to Tellabs, one of the most successful and rapidly growing telecommunication suppliers in the business. I had never heard of them, but friends of mine who play the stock market had heard plenty. For years Tellabs stock has been splitting every year and a half to two years. Our whole group left IBM and came to Tellabs. Geographically we moved just two doors down the street from IBM Research right here in Hawthorne, New York.

What I work on now is my usual shtick of looking for which mountain we are going to climb after we climb the present one. Everyone else in the original group is developing a product that is a super-duper update of what we did at IBM, but this time for communication service providers, not data centers. I’m working on the key element for a completely flexible network made out of that wavelength division, which is a giant optical switch known as an optical cross-connect. I’ve been working on that for the last two years along with some other key people elsewhere in the company.

Well, that’s who I am and where I’ve been. I’m 75 years old now, and I’ve had fun every inch of the way. I don’t want to stop working on figuring out the next mountain to climb. I’ll tell you, this fiber optic world is the place to be. It’s the most astonishing thing imaginable. Here is this medium with a thousand times the bandwidth of the whole RF spectrum on the planet earth, where bandwidth is limited by oxygen absorption. Each fiber has a thousand times that much bandwidth, and it’s all ours, it’s already installed and it’s all over the place. By getting more and more clever technologies to divide up that spectrum into different wavelengths there seems to be no limit as to what can be created. It’s a tremendously hard path to crank up the number of wavelengths and flexibility of switching to route those wavelengths, but it’s absolutely the way the world is going. People have got to have more and more communications bandwidth. Even though fiber doesn’t go everywhere, as wireless does, it does so much when it gets there that it’s just inevitable that there will be more and more optical communications.

Planetary radar astronomy

Hochfelder:

I’d like to get more details about these technologies on which you’ve worked. Going back to planetary radar astronomy, I’m curious what sort of information you could get from the return signals besides getting a physical map of the terrain of an object.

Green:

Basically the only thing you can tell with radar is electrical reflectivity. A little bit might be done with polarization, sending one polarization and seeing what comes back, but basically you are looking at a given point on the surface on which that incident energy is striking. We have no idea what happens to the energy that gets scattered off at angles and is not received back at the receivers. Looking at the object from a single point, all we can ever know is what we get from the electromagnetic energy hitting that surface and bouncing back. Of course we try to see that same piece of the surface at different angles of incidence. Once we start doing that, if we are looking at a given place on Venus from a number of different angles on different passes of the radar imagery we can begin to make some assumptions about the degree of roughness and maybe the dielectric constant of the medium and so forth. Basically we simply start with a dumb measurement of what the reflectivity is from the energy that is echoed back. That is very restrictive because it’s only back along the line of sight. We can resolve different elemental areas of the planet, but about any one of these areas, that is all we can know. Still, a lot can be told from that.

Pettengill has a very interesting discovery, not yet validated or refuted, that there is an enormously high reflectivity place on Venus that can only be explained by a very rich deposit of some possible metallic chemical elements. This is ascertained by meticulously measuring how much energy went out and how much came back. I think he has also got some depolarization measurements and is able to make some big leaps and guesses as to what kind of material is there by a process of elimination. We can’t actually go there to see what is there, but the fact that we can get a picture through all that cloud cover is amazing – and the surface of Venus is hot enough to melt iron. Yet we are able to sit here on earth or from a spacecraft and make pictures of it. It’s amazing.

Hochfelder:

For what other planets has this technique been used?

Green:

Just about all of them by now, including a lot of asteroids. Talking about technological progress, the increase in sensitivity of the earth-based radars of today compared to Millstone Hill is phenomenal. I left the field decades ago so I have no idea as to the numbers, but I’m sure it’s many orders of magnitude better. Then of course there is the fact that we are now able to go close in a spacecraft. That changes everything because of the fourth power law. The returned echoes decrease in power as the fourth power of the range.
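
The fourth-power law mentioned here can be made concrete with a few lines of arithmetic. The sketch below assumes everything except range is held fixed (transmitter power, antenna, target), so only the ratio of ranges matters. The function names are illustrative and do not come from the interview.

```python
import math


def relative_echo_power(range_ratio: float) -> float:
    """Echo power relative to a reference case when the range is
    multiplied by range_ratio (echo power scales as 1 / R**4)."""
    return range_ratio ** -4


def echo_gain_db(range_ratio: float) -> float:
    """The same change expressed in decibels: -40 * log10(range_ratio)."""
    return -40.0 * math.log10(range_ratio)


if __name__ == "__main__":
    # Doubling the range costs a factor of 16; halving it gains a factor of 16.
    for ratio in (2.0, 0.5, 0.1, 0.001):
        print(f"range x{ratio:g}: echo power x{relative_echo_power(ratio):.3g} "
              f"({echo_gain_db(ratio):+.1f} dB)")
```

A radar that gets a thousand times closer to its target gains 120 dB in echo power under this law, which is why a spacecraft close to the planet "changes everything."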

Hochfelder:

How long do you have to illuminate an object to get a decent return?

Green:

Back then, to get a return from the whole planet we had to illuminate for the entire round trip and that took about 25 minutes. I have no idea how it is now.

Wavelength division multiplexing

Hochfelder:

Would you explain a little bit about how wavelength division multiplexing works?

Green:

First of all, in the beginning there is the laser. The signal has got to be transmitted, so a particular kind of laser that doesn’t spread energy all over the frequency or wavelength domain must be used. Standard commercial lasers that have been in use for years, distributed feedback lasers, are sufficiently narrow banded.

By the way, I forgot to say that one of the things I did in my optical communication career was to finally do something I had always wanted to do, which was to write a book. My book is a sort of textbook on fiber optic networks that was published in 1993. I probably learned more in researching that book than the readers.

Getting back to wavelength division multiplexing, the distributed feedback laser has been in use in optical communications at a single wavelength for years. It was found that a very narrow spectrum was necessary in order to avoid the effects of dispersion. The fiber transmits slightly different frequencies at slightly different velocities; in fact any glass will. Therefore if one is trying to send information, some of that spectrum arrives earlier and some later than the main part, and that’s going to smear every bit into adjacent bits. If one sends at fast enough bit rates one can rapidly run into this intersymbol interference. In order to minimize this dispersion, lasers were already in existence that operated at a single frequency.
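
The link between spectral width and bit smearing can be sketched with the usual first-order estimate, in which pulse spread equals the dispersion coefficient times the fiber length times the source linewidth. The numbers below are illustrative assumptions, not figures from the interview: 17 ps/(nm·km) is a typical value for standard single-mode fiber near 1550 nm, and the length and linewidths are chosen only for the example.

```python
def pulse_spread_ps(dispersion_ps_per_nm_km: float,
                    length_km: float,
                    linewidth_nm: float) -> float:
    """First-order chromatic-dispersion spread: D * L * (delta lambda)."""
    return dispersion_ps_per_nm_km * length_km * linewidth_nm


def bit_period_ps(bit_rate_bps: float) -> float:
    """Duration of one bit, in picoseconds."""
    return 1e12 / bit_rate_bps


def smear_fraction(spread_ps: float, bit_rate_bps: float) -> float:
    """Spread as a fraction of the bit period; values near or above 1
    mean a bit spills well into its neighbours."""
    return spread_ps / bit_period_ps(bit_rate_bps)


if __name__ == "__main__":
    D = 17.0          # ps/(nm*km), assumed typical fiber value
    LENGTH_KM = 100.0  # assumed span length
    for label, linewidth_nm in (("narrow source", 0.1), ("broad source", 2.0)):
        spread = pulse_spread_ps(D, LENGTH_KM, linewidth_nm)
        for rate in (2.5e9, 10e9):
            print(f"{label}, {rate / 1e9:g} Gb/s: spread {spread:.0f} ps, "
                  f"{smear_fraction(spread, rate):.2f} bit periods")
```

Under these assumptions the spread grows in direct proportion to linewidth, so a narrower source leaves more margin before adjacent bits overlap at a given bit rate.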

From the beginning we were able to specify to the manufacturer very carefully what frequency those had to be so that we could build as the transmitter for each wavelength a laser in a can about the size of a sugar cube. The laser itself is inside that and is about the size of a grain of salt. These lasers were like little radio transmitters that transmitted on just one frequency and didn’t have wider frequency spreads. Each one could be turned on and off by stopping and starting the current that was driving it. That was the transmitter, and that was the easy part.

The hard part was how to pick apart this composite of arriving signals at different colors of light and different frequencies, different wavelengths. There have been a variety of techniques that are all passive, which is to say that they are unpowered. They’re just pieces of glass of one fancy design or another for which you have a little box with one fiber coming in and many coming out. Many colors of light come in all on the single input fiber, and as long as the colors match what these individual outputs want to see, each output will carry the light just at that one color. In one form or another they are really all based on constructive and destructive interference. The devices we first started using were based on diffraction gratings. On its way to a given output, the light passes through something that has spatial variations that match one wavelength. If these variations don’t match the wavelength of the light coming in, then not much light comes out, but if they happen to coincide then a lot of light comes out. One channel is built to have wrinkles in the glass material corresponding to channel 1, the next one has material wrinkles corresponding to channel 2, and so forth. The light coming in at channels 1, 2, etc. comes out individually in different channels. Immediately thereafter, light from each output falls on a photo-detector and light becomes bits again the same way bits became light on the transmitting end.
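
One way to see how a grating separates colors is the textbook grating equation for normal incidence, d·sin(θ) = m·λ: each wavelength leaves at its own angle, so an output fiber placed at that angle collects only its channel. The sketch below is a simplified illustration, not the design of the devices described in the interview. The grating pitch (600 lines per millimetre) and the channel wavelengths are hypothetical values chosen for the example.

```python
import math


def diffraction_angle_deg(wavelength_nm: float,
                          pitch_nm: float,
                          order: int = 1) -> float:
    """Exit angle from the grating equation d * sin(theta) = m * lambda,
    assuming light arrives at normal incidence."""
    s = order * wavelength_nm / pitch_nm
    if not -1.0 <= s <= 1.0:
        raise ValueError("no propagating diffracted beam for this order")
    return math.degrees(math.asin(s))


# Hypothetical grating: 600 lines per millimetre, so the pitch is 1e6/600 nm.
PITCH_NM = 1e6 / 600
# Hypothetical channel plan, one wavelength per output port.
CHANNELS_NM = [1540.0, 1545.0, 1550.0, 1555.0]

angles = [diffraction_angle_deg(w, PITCH_NM) for w in CHANNELS_NM]

if __name__ == "__main__":
    for port, (wavelength, angle) in enumerate(zip(CHANNELS_NM, angles), start=1):
        print(f"channel {port}: {wavelength:.0f} nm exits at {angle:.2f} degrees")
```

Longer wavelengths leave at larger angles in this model, so the channels fan out in order across the output ports.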

The design of the lasers hasn’t changed much since we started work on this in 1987. Twelve years later there is still the DFB (distributed feedback) laser for transmitting. The price has come way down and the performance has gotten a lot better.

The receivers, on the other hand, have been a real fairyland of new innovations and sexy new techniques that come up every year or two to make the previous ones obsolete.

Career achievements; range Doppler mapping

Hochfelder:

Looking back over all the technologies you have worked on and all the contributions you have made, of which technical achievements are you most proud?

Green:

Though it was only a few minutes of inspiration, I am most proud of the range Doppler mapping – just because of its romance. Here were these cloud-covered objects that people had worshipped in the sky, nobody knew what was up there, and radar wasn’t going to tell us. Then along came range Doppler mapping and all of a sudden we could see and map this thing. Andrew Butrica wrote a book about radar astronomy and he picked a title that I thought was an absolute bullseye: To See the Unseen.

Hochfelder:

Right.

Green:

From the point of view of the sheer scope of it, I would say that’s the thing of which I am most proud. However, if asked that question a few years from now I hope I will give a different answer. There’s a kind of network humankind can build using optical wavelength division multiplexing that is totally transparent and dirt-cheap. It is future-proof because it will be able to handle any kind of data signal that can be thrown at it. I hope to stick around and work long enough to see that happen, and when it happens I want to be one of the guys that did it.

IEEE, Communications Society

Hochfelder:

Let’s talk about your involvement with the IEEE and with the Communications Society.

Green:

I’ve been a member of the IEEE since I was a student in 1946, I think continuously. When I was a graduate student at MIT it was in information theory. In a sense spread spectrum is a real life exploitation of some ideas of a famous 1949 Shannon paper about the use of noise waveforms as an alphabet for encoding data. You could see in that paper, if you looked at it the right way that it was really a spread spectrum system he was talking about. All the signals chosen are from different segments of random white Gaussian noise and distinction of one from another is detected by a process of cross-correlation. My thesis was on what might be called an application of information theory, and I fell in with the group of people that formed what was later named the IEEE Information Theory Society. My mentor, Professor Davenport, was one of the founders, as was my hero Robert Fano whom I mentioned earlier. I got dragged into that and I liked it. I liked meeting great men that were movers and shakers in the field, and I enjoyed participating and helping with the journals. Eventually I became effectively the President of the then professional group later known as the IEEE Information Theory Society.
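
The idea described above, using segments of Gaussian noise as an alphabet and telling them apart by cross-correlation, can be shown with a toy simulation. This is only a minimal sketch of the principle, not Shannon's construction and not the RAKE system. The alphabet size, waveform length, noise level, and all function names are assumptions made for the example.

```python
import random


def make_alphabet(num_symbols: int, length: int, rng: random.Random):
    """One independent white Gaussian noise waveform per symbol."""
    return [[rng.gauss(0.0, 1.0) for _ in range(length)]
            for _ in range(num_symbols)]


def correlate(a, b) -> float:
    """Zero-lag cross-correlation (inner product) of two waveforms."""
    return sum(x * y for x, y in zip(a, b))


def transmit(waveform, noise_std: float, rng: random.Random):
    """Send a waveform through a channel that adds Gaussian noise."""
    return [x + rng.gauss(0.0, noise_std) for x in waveform]


def detect(received, alphabet) -> int:
    """Pick the symbol whose stored waveform correlates best."""
    scores = [correlate(received, w) for w in alphabet]
    return max(range(len(alphabet)), key=scores.__getitem__)


rng = random.Random(0)                     # fixed seed for repeatability
alphabet = make_alphabet(4, 1024, rng)     # 4 symbols, 1024 samples each
message = [2, 0, 3, 1]
decoded = [detect(transmit(alphabet[s], 1.0, rng), alphabet) for s in message]

if __name__ == "__main__":
    print("sent:   ", message)
    print("decoded:", decoded)
```

Because each waveform is long and the waveforms are independent, the matching correlation stands far above the others even when the channel noise is as strong as the signal.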

My mathematical abilities aren’t much. I’ve got intuition, but when something needs to be explored mathematically I’m not the guy you want around, and this field was just running away from me. I didn’t understand what these guys were talking about after a while. The work of the Russian Vladimir Kotelnikov, who was a sort of Shannon look-alike, was nice because it was understandable to undergraduate engineers. One didn’t have to know much more than trigonometry and Fourier transforms. When I realized that information theory was no longer the place for me, I got interested in the IEEE Communications Society, which is much more down-to-earth.

In our Information Theory group we called ourselves the Administrative Committee and I was for a year the Chairman of that. There wasn’t any such thing as a President of the Information Theory Society back then, and there certainly wasn’t any great tree full of owls called the Board of Governors.

We did have a Board of Governors at the IEEE Communications Society, and I got involved with that. I had a falling out with some of the guys over splitting up the Transactions. I thought it was a bad deal, I was probably in the wrong, but I dropped out.

Then one day Mischa Schwartz, who either was the editor of all the magazines or the chairman of the Society – I’ve forgotten – called me and said, “Hey, we need somebody to preside over all the computer communication papers.” I jumped at the opportunity. I figured that to read and judge the merits of every paper that came in would be a great way to understand the field, and that did prove to be the case. I never became editor of any of the journals but was a sub-editor of the Transactions for about two years and really enjoyed it. I learned a lot by looking at those papers and trying to maintain quality control.

After that I became Director of Publications. Then there was something called Vice President for Technical Affairs, whose job was to try to make sense out of the twenty-odd partially overlapping and underlapping technical committees. It was underlapping in the sense that there were fields that were left out. I stood for election for that position, was elected and did that for a couple of years. I don’t remember all of the details, but I think the way it worked at the time was that somehow that position was an automatic path to becoming the Vice President of the Society, which in turn evolved toward becoming President of the Society. I did those jobs in sequence. First I was a partial editor, then Director of Publications, then ran the Technical Committees, then became Vice President and then President of the Society, which is a two-year job.

More than anything else, what I wanted to achieve as President of the Society was an improvement in technical quality. Meetings are one thing, but only a couple of thousand people come to each meeting and 40,000 or more read the publications. Therefore I focused more on the publications. I tried to make sure that we not only kept up with new things that came along, but that we had a very high rejection ratio in order to maintain a high level of quality.

One of the things about which I fought and scrapped with people more than anything else was the consideration of volunteers versus membership. I considered that the first obligation of the Communications Society was to the members and not the volunteers. Time and again I had intense arguments with people who would say things like, “Good ole Joe Blow. Okay, he’s got no sense of technical discrimination, but this is a volunteer society. You can’t say he’s got to step aside for somebody that can do it to take over. He’s not performing, but good ole Joe is a volunteer.” And I would say something to the effect of, “That’s idiotic nonsense. Our duty is to the readers; not the volunteers” (or, hopefully, some more diplomatic version of the same thought).

Another ongoing argument I’m sure I lost a lot of friends over was when I would say, “The journals exist for readers, not the authors.” I did a lot of that, and I’m not sure I did much good.

One of the things that happened when I was President, under the instigation of two people – Carol Lof, who ran the office, and Maurizio Decina, who succeeded me as President – is that I developed a real conviction about the need for globalization of the Society. Globecom and ICC, our two big annual meetings, were very rarely held outside of North America. I began to push going outside the U.S., and when Maurizio took over from me as President he really brought that to fruition.

When I was involved with the Society, I had three main objectives. One was to increase the globalization of the Society. Another was to increase the quality of our publications, which meant a high rejection ratio. It also required relevance to real rather than imagined problems. Incidentally, I had discovered how to make up word puzzles with computers, and in 1981 started doing crostic puzzles similar to those in the Sunday New York Times, but on technical subjects. So, my third objective was to have one of my puzzles published in the back of the Communication magazine every month. Last month the series reached puzzle #203.

Hochfelder:

That’s great.

Green:

You’re probably curious to know if people like me that were involved with the Communications Society in the past are still involved. I have dropped out of all activity with the Communications Society with the exception that I still submit this funny word puzzle on technical subjects every month.

Hochfelder:

Would you talk a little bit about the impact you think the IEEE Communications Society has had on fostering important new technologies?

Green:

I think the Communications Society has done a very creditable job as the arbiter of technical opinions and results for many years. In hindsight I think the journals have been excessively preoccupied with micro- and mini-problems, largely due to the fact that there are too many people at universities trying to make tenure who work simply to pile up publications in order to promote their careers. And I suppose I would act the same way. It’s not the Communications Society’s fault that only a small fraction of what is published has any real impact on the science, technology and industry of telecommunications. The same thing is true everywhere. If you take most other IEEE Societies, for example, there are an awful lot of papers that are read by few others than the authors.

The Communications Society is no worse or better than the others, and it has been the place to go in all branches of communication with two exceptions. One exception is the very theoretical domain, such as interpretation of Shannon’s Theory and whatever else it is that information theory people do for a living. We don’t handle that, and it’s just fine that we don’t, in my opinion. I think that field has long since become fairly baroque. The other exception, which is a bit more serious, is that we don’t handle much that relates directly to the computer industry. This is due to our traditional preoccupation with things the telephone industry is doing rather than the computer industry. For a long time I was probably the only Communications Society activist that worked for IBM, big as it is. The Society has been colored by who its participants and activists are – and they are not just from the universities, but in a large sense from the telephone companies.

Therefore we missed it as far as the Internet and the Web are concerned. It’s the Association for Computing Machinery (ACM) that seems to do that better than ComSoc does. One of the things that happened during my tenure as President was the inception of an archival journal on networking. I had argued that we should be doing this with the (sic). This was an attempt to drag the Internet type people who tend to flock toward the ACM – which is a computer technology oriented society – into a close relationship with us. This journal, called the Joint ACM-IEEE Journal of Networking, works closely with the ACM. It has some Internet/Web type coverage, and now and then the Communication Magazine has such coverage. As a result, we haven’t completely missed the boat. Basically my answer to your question is that we have been a force for the good and have helped the profession a lot. We’re the place to go in communications in the IEEE.

Communications technology predictions

Hochfelder:

Looking forward over the next twenty years, what do you think some of the innovations in communications technology will be?

Green:

Fiber optics is just getting started, and there is a lot of bandwidth out there to be mined. Basically the only thing holding it back is the need for better and better wavelength resolution, switching and amplification techniques.

Electronics continues to get faster almost in a vain attempt to keep up. Ten gigabits is a sort of a lingua franca of most of these high-speed networks these days, and people are sniffing away at 40 gigabits. Everything is getting faster and faster, and high capacity networks are keeping up with this insatiable demand. I think that will continue to play out. I don’t see it leveling out from either the point of view of demand or that of technology solution for the indefinite future.

Another thing that is a corollary to that is the famous “last mile bottleneck.” We haven’t seen anything yet as far as the growth of bandwidth demand is concerned. Think about it this way: Out in the middle of the network are large facilities sending at gigabit rates. All the telephone exchanges or routers are sending one another gigabit bit streams of 2½ to 10 gigabits. Thus there is a world in the backbone of gigabit facilities, usually on glass, but also there’s another gigabit world in laptops and desktops. Look at a 32-bit wide bus running at a 300 MHz clock rate. Multiply the two and that’s 10 gigabits. This gives the ability to look at the screen, show pictures, and reproduce sound – all sorts of things. One can IO in and out of the memory of that computer and do logical operations on the data at a rate of 10 gigabits per second.

These two multi-gigabit worlds are like two elephants trying to mate through a one-inch knothole, and the one-inch knothole is this last mile.

What’s the bit rate through which I look from my 10-gigabit world to the other 10-gigabit world? It’s something like 28 kilobits per second. That there is this huge bottleneck and there are these two worlds that need to communicate with each other is no great revelation. What I want to suggest is that they will learn how to communicate. They are already learning how to communicate. For example, the ill-fated ISDN is being superseded, but much too slowly.
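
The arithmetic behind the "two 10-gigabit worlds" and the knothole between them is easy to check. The sketch below uses only the figures quoted in the interview (a 32-bit bus at 300 MHz and a last-mile link of about 28 kilobits per second). The variable names are illustrative.

```python
BUS_WIDTH_BITS = 32            # bus width quoted in the interview
BUS_CLOCK_HZ = 300_000_000     # 300 MHz clock quoted in the interview
LAST_MILE_BPS = 28_000         # roughly 28 kilobits per second

# Peak bus throughput: bits per transfer times transfers per second.
bus_bps = BUS_WIDTH_BITS * BUS_CLOCK_HZ

# How many times narrower the last mile is than the bus.
bottleneck_ratio = bus_bps / LAST_MILE_BPS

if __name__ == "__main__":
    print(f"bus throughput: {bus_bps / 1e9:.1f} Gb/s")
    print(f"last mile is about {bottleneck_ratio:,.0f} times slower")
```

The product comes to 9.6 gigabits per second, which is the "10 gigabits" of the interview after rounding, and the last-mile link is roughly 340,000 times slower than either side of it.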

The ISDN history has been like the gestation of elephants: there is a lot of trumpeting and stamping around, and then it takes two years to get the baby elephant. However, in this case the baby elephant sort of never eventuated. ISDN has now been end run by cable modems and digital subscriber loops. When they get fiber to the premises – which is already happening at an unexpectedly rapid rate – initially to businesses, large apartment buildings and later on to individual homes, all of a sudden the one-inch knothole will be completely opened up and gigabit environments will be talking to each other.

This curve of bandwidth demand will then become steeper. I think I’m on firm ground predicting that the future is going to involve enormous increases in the bandwidth of what’s transmitted and the applications that will be enabled by that. However that’s all in glass. What about the other dimension, the wireless and cellular world? I think an awful lot of innovation is still possible there. Technically I’m not the right person to even comment on it, but my guess is that there are many clever ways of using more and more micro cells and getting more and more capacity out of the 40 gigabits of bandwidth that are now available on planet earth. It would be even more were it not for oxygen absorption. By radio transmission with clever use of spatial separation into little cells, micro cells or mini cells, spread spectrum techniques, etc., I don't think we are anywhere near seeing the end of what personal radio – meaning pagers and cell phones and so on – can do. Maybe there’s something other than those two things that I should be thinking about in trying to answer your question.

Engineering field challenges; educational philosophies

Hochfelder:

What sort of technical challenges do you think the engineers of the next generation will face?

Green:

I have a favorite hot button as a challenge for engineers. A lot of engineers are going to become educators. The challenge that those people will face will be that of keeping students in school long enough to learn things deeply enough that they can learn how to innovate at a fundamental level. It’s difficult today because students today get bright eyes about getting rich quick. They want to do a startup, do an IPO, get bought out, get the new trophy wife, get the third Mercedes and all that. It’s going to be a big challenge to make an extended stay in an educational institution so irresistibly attractive to people that they want to do it. Educators will need to make it obvious to students that they are going to be better off in the long run if they stay and get fully educated. Students leaving school early is an increasingly difficult problem. It’s not made any easier when the brightest students are seeing that the reward system is not at the frontiers of really basic looks at new phenomena, new inventions or new technical innovations, but in little product innovations. There is a huge difference between a product innovation and a truly deep innovation that changes the world. Educators will need to make their world more capable of delivering real rewards, and not just a pile of papers.

I always figured that I was doing the right thing by working on big problems rather than tiny problems. It’s important for people to realize that they only have a certain number of years of productive life on this planet and in their careers. What are you going to say when you get to be 75 years old and some guy from the IEEE asks you, “What did you accomplish? How did you change the world? Did you change the world? Did you make a lot of money? Did you have twenty-five children?” I think it’s good to look ahead and predict whether you are going to answer that you made a difference. That’s my own personal view of challenge to the engineers.

A lot of engineers don’t see it that way. My closest colleague has been very impatient with me for some years, and probably correctly so, for always talking about what the vision might be rather than getting the dang work done. I think he is insufficiently respectful of large-scale visionary thinking, and he thinks I am insufficiently respectful of the necessity of short-term goals. I like to work on problems that are at the frontier and pushing the envelope and pushing it in places where pushing it will produce a big result.

Hochfelder:

Do you have any final thoughts that you want to conclude with?

Green:

I just hope that everybody else that follows this profession will have as much fun as I’ve been having.

Hochfelder:

Thank you very much.