Oral-History:Robert Gallager

About Robert Gallager

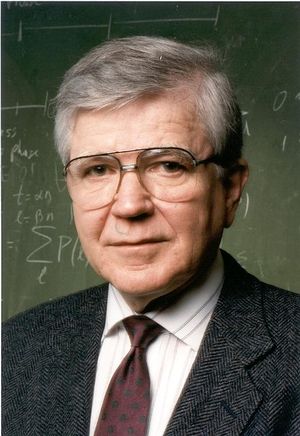

Dr. Robert G. Gallager was born in Philadelphia in 1931. He attended the University of Pennsylvania and graduated with a BSEE degree in 1953. He then pursued graduate study at the Massachusetts Institute of Technology and earned electrical engineering S.M. and Sc.D. degrees in 1957 and 1960 respectively. Gallager briefly worked for Bell Laboratories and in the U.S. Army Signal Corps before joining M.I.T.'s faculty in 1956. Gallager was named the Fujitsu Professor of Electrical Engineering, Co-director of the Laboratory for Information and Decision Systems and Co-chairman of the department overseeing control, communications, and operations research. His contributions to communications have focused on information theory and data networks. Accordingly, his work has been in fields such as coding, multi-access communication systems, distributed algorithms, routing, congestion control, and random access techniques. Gallager helped found the Codex Corporation in 1962 and continues to consult there; his studies were responsible for Codex's success with 9600 bps modems. He holds four patents, has written one textbook and co-written another, is an IEEE Fellow, and is a member of the National Academy of Engineering.

The interview begins with a description of Dr. Gallager's academic career. Gallager discusses his undergraduate education at the University of Pennsylvania and his graduate studies at M.I.T. He explains the evolution of coding theory in some detail and traces progress made in the communications and computer fields since the 1950s. Gallager describes and questions military funding and corporate research priorities in the communications field. He also discusses many communication theorists' career switch to computer science in the 1970s. Gallager explains how his own career followed this pattern, and recalls his subsequent work with computer architecture and data networks. The interview concludes with Gallager's evaluations of various top achievements in communication engineering and coding technique.

About the Interview

ROBERT GALLAGER: An interview conducted by Andrew Goldstein, Center for the History of Electrical Engineering, May 19, 1993.

Oral History # 156 For the Center for the History of Electrical Engineering, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

Robert Gallager, an oral history conducted in 1993 by Andrew Goldstein, IEEE History Center, Piscataway, NJ, USA.

INTERVIEW

Interview: Robert Gallager

Interviewer: Andy Goldstein

Date: May 19, 1993

Place: Dr. Gallager's office at MIT, Cambridge, MA

Goldstein:

I'm sitting with Dr. Gallager in his office on Wednesday, the 19th. This is at MIT in Cambridge, Mass. Good afternoon. Thank you for participating. As I explained before, I want to be sure we get down to details of your career. So if we can just start with some biographical information, leading up to your training.

Educational and Work Background

Gallager:

Okay. Well, I went to college at the University of Pennsylvania. I took up electrical engineering there. I wanted to be a mathematician at that point, but took electrical engineering primarily because I didn't have to learn any foreign languages, which I wasn't very good at. When I got into electrical engineering, I found it fascinating, and went through college the usual way, half serious, half not serious. I went to Bell Telephone Laboratories when I graduated. At that point Bell Laboratories had a fascinating program which they called Kelly College. It was a type of training program for new engineers where they sent us to school three days a week, and we worked on various projects the other two days a week. We also rotated projects every three months or so. So we would get a lot of experience doing different kinds of stuff. The classes we were taking were with some of the best people that Bell Labs had. Dave Slepian was teaching about communications theory; there was Bill Bennett teaching about communication also. There was Tukey, a famous statistician. Just many people whom I didn't know about at the time, but looking back on it now, I realize that it was probably better than any university I could have gone to for graduate school.

Goldstein:

Were these formally organized classes?

Gallager:

Formally organized classes, yes. Very much the same as in a university. Just that it was three days a week instead of five. So we'd be doing other things the other two days. Some of the courses were somewhat Mickey Mouse courses on the Bell System and things like that, things we had to know but they were slightly less interesting than the more theoretical courses. After about a year and a half of that, I was drafted into the Army, which was when I started to learn a little bit about information theory because I was a private in the Army at Fort Huachuca in Arizona. Very desolate place. The closest town was Tombstone, about 35 miles away. There was very little to do on the base. There was a large group of very good technical people there, but we were all privates. The sergeants were mostly people back from I guess it was Korea then. Most of them hadn't even been through high school. The officers had gone to southern military colleges for the most part. There were some civilians on the base who seemed to be castoffs from Fort Monmouth. You probably shouldn't write this. [Laughter] Just giving it as background. It was a very strange organization in the sense that we were for a while free to do what we wanted to do pretty much. We were trying to design battlefield surveillance systems. It was a kind of an interesting control problem, the kind that many people were working on five years ago. They call it "command and control" problems, C3 problems--command, control, and communications--which was the big thing in the armed forces quite recently with a lot of academic contracts and all sorts of things. It got started back a long, long time ago. We were doing very sophisticated things, but then the officers got hold of it, and of course it all turned into very, very primitive kinds of things. [Chuckling] So the technology sort of got lost out of it.

One of the things that happened there was that with too little to do, most of us spent a great deal of time in the library, and that was when I started to learn about information theory. I started out with the popular books of the day, which were supposed to explain information theory to people who didn't have Ph.D.'s, and I found this very frustrating. One day I picked up the book by Claude Shannon, which was the original book in the field, and found that it was just fascinating. It read like a novel. Suddenly I realized that the best way to learn something was not to read what somebody else had interpreted, but to go to the person who had done it and to read it at the source. In other words it was usually far clearer at that point. So it was a good experience for me.

But anyway, after about two years of that, I came to MIT as a graduate student, primarily because I wanted to get out of the army early. I had very little intention of having an academic career at that time. I wanted to get a master's degree perhaps and then go back to Bell Labs and pursue an honest engineering career rather than an academic career. When I got here it was a very exciting time at MIT. Claude Shannon was here then. Most of the best information theorists in the country were here. Along with that, it was just a remarkably talented group of people. There were people doing switching theory, which was very important at the time. The beginnings of computer science were going on around here, the beginnings of AI, some of the beginnings of what we now call communications biophysics, which is the thing which has grown into medical communication and things like this: studies of the ear, and studies of the speech process. Some things about image processing and things like that. All of these things were going on in a relatively small laboratory with perhaps 50 students and 20 faculty or so. Fifty graduate students, perhaps more. It was a very, very exciting time. This was when the ideas of information theory were just still being worked out. I remember about a year after I got here Bob Fano was on the faculty then. He's since retired. But he was an early worker in information theory, and he had told the students that if a student could prove the coding theorem of information theory, he would give the student a doctorate. A student came in the next day with a proof. [Laughter] Bob Fano complained and complained, and tried to get the student to do more, and tried to get the student to write it up more clearly, with relatively little success. So it stood as his doctoral thesis, and it became quite a famous piece of work because it was, in fact, the first formal proof of this critical theorem which was one of the central parts of information theory.

Goldstein:

Who was that? Do you know?

Research on Coding Theory at MIT

Gallager:

Amiel Feinstein. What other things were going on? There were people working on data compression at the time. There were a lot of ideas around for doing that. There were people working on coding theory, which was really the problem of how do you do error correction in data? Not so much the kind of coding that goes on in cryptography, but rather the kind which deals with errors in communication. You put redundancy in the data that you're sending, and then you use the redundancy to correct these errors. There was a lot of activity going on there. A lot of activity in the theoretical aspects of what are the limits in how well you can communicate data in the presence of noise. Because at that point everyone recognized that there was always going to be noise around. That was always going to be the limitation on communication. It was an era where computational techniques were very, very primitive, certainly compared with today. When I look at the computers we had when I was doing my doctoral thesis, the main computer at MIT had 32,000 words of memory in it, which is something that no self-respecting personal computer today would have. But this was the computer that did all of MIT's work. It operated at--I forget what the speed was. But it was much, much less than one operation per microsecond. Far, far slower than the slowest personal computer today. The languages then in use were very, very primitive. Anything we wanted to do which required some real computation we had to write in Assembly Language because otherwise it would take too long. It was a very different kind of age as far as computation went. The coding techniques that people were thinking of in those days almost had to be trivially simple. I was at a meeting yesterday down in New Jersey where we were working on all-optical networks. The question is how do you send data in networks at terabit per second speeds? 
Someone was describing a particular device for correcting errors that he had been working on, which looked relevant to these very, very high-speed networks. Sure enough, it was an idea that had been developed around 1960, just about the time that I was getting my doctorate. It was an idea which was of interest then because it was very, very simple. It required almost no hardware, almost no computation. It was an idea that the field passed by for about 30 years because computational techniques got so much better that nobody cared about doing things the simplest way. They could do many more computations on things. Strangely enough now, with gigabit speeds, one has to have very simple error correction again at these speeds. It's strange the way the technology keeps going back and forth, and the problems which are right for one age become wrong for another age. Then suddenly they come back again when another part of technology changes and again simplicity is required. It's a curious thing, but most of the error correction techniques being developed then were, in fact, fairly simple, fairly straightforward. The more mathematically-inclined students and faculty would develop more sophisticated things.

This process kept on for a number of years. Virtually everyone at MIT was convinced that this was the wave of the future, that somehow communications was enormously important, that the ability to be able to send data accurately was terribly important. Most of this work was supported by the military. We had a tri-services contract at MIT at the time, which meant money coming into one funnel essentially from the army and the air force and the navy. It was a large grant bloc fund. So that for the first ten years I was on the faculty, I never had to write a contract proposal or anything of that sort. All of us just did our research, did whatever we wanted to do for the most part, whatever we felt was relevant, and there was very little need to justify it, or to worry about where the money was coming from. The money was there. Of course things took much less money in those days. But it was remarkably different from today when everyone spends so much time looking for research money, trying to support one's students, worrying about the financial aspects of doing research as opposed to doing the research. It was a very different age then. Partly because the field I was in was perceived to be very important at the time. I sort of always wondered whether it was really important or not. It was a lot of fun, and I certainly enjoyed doing it. But I really had some questions about why so much need for data communication? Because not much of it was really being done. I had some questions about whether we really had a need for the coding theorem and all of these fairly exotic techniques, which were not really being picked up by practitioners. A few systems were being built in the military, very expensive systems, which would use all of this new technology. But for the most part, there wasn't a lot of it being used. 
Finally, when the space program got started, these coding techniques started to be used in space probes because there one could have an enormous amount of computational facilities on the ground. Of course one had to do something very, very simple from the satellite, and one was very limited in power because you're not going to put large batteries on these early satellites--they were very, very small--and every pound of weight that you put on these things cost an enormous amount of money. The finances of that kind of work were very, very different from the things we see today.

Goldstein:

Were the technologies not adopted because older technologies were adequate for communication needs? For instance, at Bell they simply didn't have so much data or the wires were sufficiently noise-free that they didn't need so much error correction?

Gallager:

It was just there was very little data being sent anyplace in those days.

Goldstein:

So the channel wasn't overcome?

Gallager:

Most of the use of communication systems in those days was for voice. The Bell System, of course, was almost all voice. People were starting to send small amounts of data from various experimental things for the most part. The space program gave a big boost to this because of course when you have a space probe, you're receiving it in one place, and you want to send the data someplace else. Perhaps you can do something to control the space probe. Certainly when satellites started coming along, there was a great deal of effort to control them. You needed a lot of data transmission at that point, so these applications started becoming more important. There were military applications for data very early on. In fact, when I was in the army, that's what we were working on, all of these techniques for sending data around and processing data, so that army commanders would know what was going on in the field. But what quickly became evident in the army was that the systems, the training of the officers, the whole system, just wasn't ready for such a sophisticated kind of technology. It was very slow in developing. Why people weren't using coding in civilian applications, I don't quite know. Right after I got my doctorate, I was involved in the formation of a small company called Codex Corporation, which was founded to try to develop coding technology. And for a number of years--probably six or eight years--they were building coding devices, trying to sell them. They could sell a few of them to the armed forces. What these devices were doing was correcting errors for data, but no one in the civilian world was really sending data anyplace, except telegraph and things like that which was very, very slow and not much need for reliability because it was just data that was turned into English text, and the eye is very, very good at doing its own error correction of English text (you can have an awful lot of errors in text and still be able to read it without any trouble). 
So nobody wanted to pay the money for these error-correcting devices. It was a very popular academic subject. Engineers loved it. It was lots of fun. But there wasn't much of a market.

Goldstein:

When you were at Bell, incidentally, were you in the research division or in development?

Gallager:

There wasn't as much separation then as there was later. Some of the groups I was in were pretty much development, a couple of them were very much research. One of the projects I was involved in was designing the No. 5 crossbar switch. It was a very widely used telephone switch. It had been designed earlier, but for many, many years people were improving it, making changes in it. It was a funny kind of business because if you could remove a relay from one of those systems, it was worth hundreds of thousands of dollars because there were so many of these systems being put in. They were put in everywhere so it was an enormous cost savings. It was a very carefully-engineered type system. So it was very different from the kinds of things that I did when I got to MIT, where the interest was more in one-of-a-kind types of things.

Engineering Training at the University of Pennsylvania

Goldstein:

You remind me of a question I had when you began. I was curious what you concentrated in at Penn-- what sort of background you had before entering this field which was just beginning.

Gallager:

Back then there was hardly any variation in what electrical engineers took. Electrical engineering was still a small enough field that everyone studied almost the same thing. We had a few electives, but the electives were for nontechnical things, or for learning a little more mathematics or a little more of something or other.

Goldstein:

So this included things like power, which you didn't get involved with?

Gallager:

Oh, we took courses in power. I disliked it intensely. [Chuckling] Power back then was a field that was becoming quite stagnant. There wasn't much research going on in it. It was a field where people built new equipment essentially by use of the product cycle. I don't know whether you've ever heard of this. But this problem of product cycles was that with a product cycle you take the old equipment, and every three or four years you modify it with new devices and new technology, but it's still the same old song. You never change very much. The people who do this are people who have a lot of experience, but there usually isn't much creativity involved. Fields which have only that kind of work going on tend to not attract new people, tend to not attract creative people, and they tend to become more and more stultifying. Eventually, after a long time, they almost get crushed under their own weight, and, then, of course, there's always a rebirth and an effort to renew the technology. This happened in the power field probably 15 years ago or so.

Goldstein:

So you were interested in switching, it sounds like?

Gallager:

I didn't know much about switching because we didn't learn much about it. I had heard a few things about it and found it fascinating. When I was working at Bell Labs, I learned a little more about it. I wanted to learn something about it at MIT, but when I got here I found out that there was just so much more going on in the areas of communication theory and coding and things of this sort that I just naturally gravitated there.

Changing Landscape of Engineering Education and Research

Goldstein:

The group you were with at MIT, did they tend to have backgrounds in engineering or in mathematics?

Gallager:

A mixture of things. Most of them, I guess, had been trained as engineers, but many of them had been trained in Europe where there was a much stronger background in mathematics. The people at MIT at that time had mostly been at the Radiation Lab during the war, and there was this enormous influx of physicists, mathematicians--mostly Jews driven out of Europe by the Germans. Brilliant people. There was just a rebirth of science in the US at that time. Even when I went to college, most of my textbooks were very dry and very fact-oriented. These are the formulas. These are the problems you can work out. It was all very cut and dried. There was a little bit of rebirth at that time in the colleges, but for the most part it was the graduate schools that were doing all the exciting things. The undergraduate schools were still operating in the prewar mode of old textbooks and very uninteresting stuff. Power courses particularly were just awful, nothing of any interest in them. It was all just lots of facts. It's almost the way education is becoming now. We seem to have almost moved back to that mode of training people instead of educating them. It's a sad thing to see it. Maybe I'll talk about that more.

Goldstein:

I'd be interested to know why you think that happened.

Gallager:

Well, essentially it's happening I think because of the pressure to learn too many things. Electrical engineering has expanded enormously in the past 30 years, from a relatively small field to a field which now includes computer science, which is a huge field in its own right, where people have to become adept at the design of equipment which uses all sorts of very sophisticated VLSI chips. One has to understand something about the solid-state technology that goes into that. There's all the modern communication theory, none of which existed when I was an undergraduate. There's all the modern control theory, none of which existed when I was an undergraduate. Almost everything we teach today did not exist when I was an undergraduate. What was taught in three courses when I was an undergraduate we try to teach in one course today. The result of that is since you have to teach it in so much less time, the only way to do it is to teach the basic facts. You don't try to teach the understanding; there isn't time for that. You don't teach a sense of excitement about it. You just say this is what you have to know, and this is how you use it. So we're more and more getting back into that mode. Industry is happy with that because for the most part what industry is doing today is this product-cycle work. We're trying to play catch-up to the Japanese now so there's very much an emphasis on manufacturing, on getting products out quickly. Instead of responding to that by trying to do more interesting and more creative things, we try to manufacture the old kinds of things with newer technology. Which requires less creativity from the engineers and more instant wisdom. So the pressure on universities is to produce students with this instant wisdom, who know a little bit about everything--who in four years have picked up the compressed version of what people used to learn over many more years. There's this pressure to learn more and more in less and less time.

Goldstein:

You were talking before--it's one of the things I didn't want to miss--about your uncertainty that the field was good for anything, this growing interest in communication and data coding. I wonder if your experience at Bell Labs had any role in that. Were other engineers who were academics to begin with less conscious of the role of commercial application?

Gallager:

The people that I knew at Bell Labs were mostly leaders in technology also. They were pushing for new things. We were working on electronic switching systems then, which was sort of going along parallel to the development of the computer industry. I guess I questioned what we were doing because I wondered why we were developing these very high-tech things which could be used only by the military, when I saw various civilian communication systems not really moving ahead very quickly. The students we were educating here were for the most part going out to teach in other universities, or going to the Bell Labs of the world. Not very many were going out to small companies or even medium-sized companies. Not very many were actually building things. I didn't want to build things particularly either, but I was just troubled by the fact that we were putting so much effort into this one problem, the reliable data transmission, when it seemed as if there was much more need for analog communication at the time. Everyone agreed that digital communication was coming, but the time wasn't quite right for it yet. This company which I was talking about, Codex Corporation, almost went broke in 1970 because they couldn't sell enough of their products. The military was cutting back at that time, so there wasn't a large market there. They were trying to develop early modems for the commercial market, but they weren't very far along in that yet. There was a small depression at the time with this going on. So the company almost went bankrupt, partly because of the fact that it was too early for the technology that they were into.

Goldstein:

Didn't Bell begin using pulse-code modulation in '61 or the early sixties? Does that sound right?

Gallager:

Probably about that time, but you see they weren't transmitting data. What they were starting to do was to take voice and sample it and quantize it and send it digitally. This was a somewhat different matter than trying to send digital data, because when you're sending voice digitally, you don't need error correction in it because a certain amount of noise in the voice is not particularly troublesome. You simply overdesign the system and get good signal-noise ratio. You don't need anything terribly sophisticated to do that. You need fairly sophisticated modulation techniques, but again, no one in universities was working on that. [Chuckling] That was something being done by the Bell System, something being done by operating engineers. But somehow universities tend to have "in" things in research which everyone is interested in, and other things which are somewhat dead for research which no one works on. It's probably always been that way, and probably always will to a certain extent.

Goldstein:

The picture you're painting is very much an ivory tower of academics entertaining themselves with a stimulating research problem that the rest of the world's indifferent to.

Gallager:

To a certain extent. It wasn't that the rest of the world was indifferent because at that time certainly the Defense Department felt it was very important. The university administrators felt it was very important. There was research money available for it, partly because the Defense Department felt it was important. There wasn't research money for working on these other things. It wasn't only that we were in an ivory tower, it's that the rest of the country went along with us in the sense that there weren't funds for doing other things.

Goldstein:

Do you know if the military had any specific applications in mind? You were talking about space communication as one.

Gallager:

Certainly space communication. I think the Defense Department always had plans that were somewhat more elaborate than its ability to carry them out. I don't know why that always happens, but it always has. I think the best example is Star Wars, which was a somewhat misguided system which was just too far beyond where the technology was. It's always trying to push things a little too far, and not taking care of the simple things that need to be done, but always worrying about the most exotic kinds of problems. Anyway, all of this was a lot of fun. Even though it was somewhat ivory tower, what's been interesting to me is that most of these ideas have been used extensively since. But rarely at the time when people thought they were going to be used. For many years I thought I must be very naive because I couldn't understand why all these people thought that these things were going to be used immediately because it didn't seem like they were going to be used immediately. Other problems seemed to be more important, but there wasn't any support for them. In a sense many of them were just sort of grubby problems and it's hard to get involved in a grubby problem if you're a researcher and a ton of other people were interested in it also. Particularly if there aren't experimentalists who are willing to go in to work on it, and all the different types of people that have to work on a problem before things can get done.

But what happened around 1970 is that-- I think this was a combination of a lot of different things. It was partly the Vietnam War, which turned most civilians, certainly most academics, certainly most people who thought very much about where the world was going, it taught us that we shouldn't trust the government quite as much as we had been trusting it. It taught us that it was probably wrong for the research groups at the various universities in the country to be putting so much of their attention on these military high-tech problems. All of us had always thought all along that there was sort of a spin-off to the civilian world, that we would work on these high-tech problems, the technology would get developed paid for by the Defense Department, and then eventually the applications would spin off to the civilian sector. Of course some of that has happened, a lot of it has happened, but somewhat more slowly than we thought it would. Around 1970 people--almost everyone, almost simultaneously--saw that this was not the right thing to do. At the same time, the people who had been working in communication theory for so long and building up this almost ideal theory, which at that point had got them to the point where it really could be applied, suddenly everyone decided that the theory was far ahead of practice, that there wasn't any use in doing this anymore, all these beautiful ideas were not being used, and suddenly most communication theorists started going off into computer science where the grass seemed a lot greener at the time. There seemed to be much more growth there, much more practical application of ideas--well, it was just a field which was commercially expanding very, very rapidly, whereas the communications field was expanding less rapidly, and was expanding through the government and through AT&T, but not expanding too much elsewhere. So a lot of people moved off into computer science, a lot of people moved off in other directions. 
I dabbled with computer science for a while, then got into the data networks field, still spending a fair amount of time on information theory because it's always been a fascinating topic. But always with this feeling that the theory was far ahead of the practice.

Goldstein:

That's interesting. Often you think of engineering developments being shaped by commercial needs. I can think of examples from the 19th century. The development of the transformer was a response to the need for a.c. transmission--things like this. It sounds like the commercial needs were absent in the early days of communication theory, and I'm wondering what decided the direction of research in this area.

Gallager:

I suspect that if one knew what was going on in the 19th century, one would realize there that the situation was far more chaotic than history books make it appear, also. I would imagine that when we start seeing history books being written about what was happening in the sixties and the seventies, we'll find a story which is not true, but which is far more plausible than what actually happened. What actually happened was that the people who decide where the resources should be spent--a combination of lots of different groups of people. Partly the people who worked for the Defense Department who have budgets for doing research--that's where some of the research comes from. Partly people at places like Bell Labs and large industrial research laboratories. All of those people come from a research background, and therefore they're partly motivated by the same things that drive researchers. Because of that they're not quite so capable of seeing what the real commercial needs are. The people who were involved with the actual commercial needs for the most part want something today. They don't want to have it developed about ten years hence. In fact you can't develop something ten years hence. I see people trying to do that all the time, and I don't know of a single example where it's been done successfully, except in the telephone business where they always started out ten years ahead of time developing electronic switching and things like that. The telephone system at that time was a system where things lasted for 40 years. It was a very stable business. It was a monopoly, it was clear what the telephone business was, people wanted telephones, and telephones remained about the same. Everyone got them, everyone got multiple telephones. The transmission became much better, the costs went down. There wasn't any real question about what it was that one was trying to develop.

If you look at the computer field, that's a very different story. Almost everything that you see people saying ten years ago was wrong. Historians will go through and find things written which were right, but that was not what was being said by most of the people who were guiding the field. Here's one example: There was a very large project here at MIT for developing time-sharing computers. The idea at that time was that one wanted very large computer utilities because that made sense during the sixties. It cost a lot of money to build a large computer system; therefore you wanted to have lots of people use it. The whole idea was to move people in and move people out rapidly, which led to this whole idea of time sharing. While that idea was being developed, solid-state theory was coming along. People were developing chips. Chips were turning into microprocessors. People working on that project often had personal computers sitting on their desks, and none of them realized, even with the evidence right in front of them, that time-sharing computers and computer utilities were not where the future was. The future was in personal computers, large numbers of small processors, which then had to start communicating.

Teaching Coding Theorem at MIT

Goldstein:

My question is what determined any researcher's research agenda. So my question to you would be, what raised the questions that you tackled in your early papers?

Gallager:

That's a tough question. I can give you several examples.

One of the earliest and best papers that I wrote was on something called the coding theorem. It was called a simple derivation of the coding theorem, but it was more than that. It was trying to extend the coding theorem to many kinds of channels for which it hadn't been used up until then. I mean the thing that I was trying to do was to give a guide to how to develop coding systems for, in fact, practical-type channels. Instead of just the usual channels that theoreticians dealt with, which were things like a binary symmetric channel which is a channel which operates discretely in time and you put binary inputs in, and you get binary outputs out, which isn't a real channel in a sense. It's the kind of channel that you see after you build a modulator and a demodulator and all the other things that you have to build to make such a system work. The point of the coding theorem was to get at the question of how you build the whole system as an entity. How do you get the most data through it? If you have to build a different kind of modulator or a different kind of detector or a different anything. Well anyway, the thing that motivated that work was that I was teaching a course at MIT in the very early sixties. We had decided to give a graduate course. Most of our graduate courses are 12-unit courses, and the students take three or four of those a term. We decided to give a 24-unit course which would really cover modern coding theory, modern communication theory, coding techniques. The idea was to get students into research quickly. Bob Fano had just written a book at that time, where he had actually given the proof of the coding theorem in his book.

I was teaching this course, and I was supposed to teach this. I was struggling more and more with this proof that he had, and I wasn't convinced that it was right. It was right in principle, but it was wrong in many details. As I was getting to the point where I had to present this material, I was just looking for other ways of finding out how to do it. I found this new way of doing it which in fact turned into a nice, interesting, and fairly important new field of research, because it found a new way of proving these theorems. By proving the theorems in a different way, one could prove much more general theorems and by proving much more general theorems, one could talk about the kinds of channels that one has to deal with in actual reality. One could think in terms of coding and modulation all as one kind of entity. So in that case I think the motivating force was having to teach a course in this. After that many of these topics just followed naturally one after the other. Other examples: I was consulting at this company--

Goldstein:

Codex?

Gallager:

Yes. Some of the problems we were working on there led to interesting research problems. Some problems in coding for burst-noise channels, which was something that academicians had never looked at. It's noise that comes in bursts so that the channel is clean for a long time, and then suddenly there's a very long period where it's very noisy. The kinds of error-correction codes we'd been using were all designed to rely on independent noise from time to time. They would correct the errors due to some kind of statistical regularity. With burst-noise channels, you don't have this regularity, and therefore you have to find ways of spreading the coding constraints over a much longer period of time. By spreading it over more time, you can use the good times to correct the errors over the bad times.
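The idea of spreading coding constraints over time can be illustrated with a small sketch. It assumes a 3-fold repetition code and a simple block interleaver, neither of which is named in the interview; they are chosen only because they are easy to follow, and all function names are hypothetical. A burst that would wipe out whole codewords when they are sent back to back leaves at most one error per codeword once the symbols are interleaved, so the clean stretches of the channel help correct the noisy one.

```python
def encode_repetition(bits, n=3):
    """Encode each bit as a codeword of n identical symbols."""
    return [[b] * n for b in bits]

def flatten(codewords):
    """Send codewords back to back (no interleaving)."""
    return [s for cw in codewords for s in cw]

def interleave(codewords):
    """Send the first symbol of every codeword, then the second, and so on."""
    n = len(codewords[0])
    return [cw[i] for i in range(n) for cw in codewords]

def deinterleave(stream, num_codewords):
    """Undo interleave(): regroup the stream into the original codewords."""
    n = len(stream) // num_codewords
    return [[stream[i * num_codewords + k] for i in range(n)]
            for k in range(num_codewords)]

def add_burst(stream, start, length):
    """Flip every bit in one contiguous burst (a crude burst-noise model)."""
    out = list(stream)
    for i in range(start, min(start + length, len(out))):
        out[i] ^= 1
    return out

def decode_majority(codewords):
    """Majority vote inside each codeword."""
    return [1 if 2 * sum(cw) > len(cw) else 0 for cw in codewords]

message = [1, 0, 1, 1, 0, 0, 1, 0]
codewords = encode_repetition(message)

# Without interleaving, a 6-bit burst destroys entire codewords.
plain = add_burst(flatten(codewords), start=5, length=6)
plain_decoded = decode_majority([plain[k * 3:(k + 1) * 3] for k in range(8)])

# With interleaving, the same burst touches each codeword at most once.
spread = add_burst(interleave(codewords), start=5, length=6)
spread_decoded = decode_majority(deinterleave(spread, len(codewords)))

print("no interleaving recovers message:", plain_decoded == message)
print("interleaving recovers message:   ", spread_decoded == message)
```

In this sketch any burst no longer than the number of interleaved codewords is corrected, at the cost of waiting for the whole block before decoding.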

Goldstein:

Right. You're saying that this was a consideration that emerged when you actually tried to develop--

Gallager:

When you actually tried to use the coding theory, yes. So I've given you two examples: one of which came from actual practice; one of which came from the very academic thing of trying to teach a course. Let me see if I can think of other examples.

Emerging Field of Information Theory in the 1960's

Goldstein:

How did communication among different researchers go? Now, you've described the community at MIT, which sounds very close-knit, and perhaps communication was very informal. But around that time, you know, there's the IEEE Transactions on Information Theory. Are there conferences? What's the nature of communication among them?

Gallager:

It was pretty informal through that whole community at the time. In a way, the information theory community in this country was very much centered at MIT during the sixties. The students who graduated from here went to other schools. If you look at information theorists in the country now, you can trace a remarkable number of them to MIT or indirectly to MIT. That close-knit community in fact became a much larger community as time went on.

Goldstein:

You've described what industry needs today, and what industry wants and the sort of grad students they're looking for. I'm just wondering how a student at that time, in the early sixties, how they would look on a prospective concentration in information theory. Was it the kiss of death, or was it--?

Gallager:

It depended on the student. It also depended on the time. During the '60s there was so much excitement about information theory in the academic community, that students tended to get caught up in it.

Goldstein:

Right. Even though there were no obvious commercial projects, the money was there from the military.

Gallager:

The money was there from the military. The jobs were there. It was very easy to get academic jobs because other schools wanted to have a part of the spoils, so to speak. Also other schools wanted to be able to give courses in this new area. It was an area which was obviously important at some point, obviously important in some way. I guess I should go back and correct the idea of the ivory tower because that's not quite the way it was. There was a set of ideas which I think people could perceive as being sort of the central focus of where communication was going. It was the right way to look at real communications problems. I think people involved in academic life recognized that, and I think they were correct in recognizing that. In fact history has borne that out. Most of what's being done in actual communication systems today is pretty much an outgrowth of that point of view, which came from information theorists and then filtered down to people who were actually building detectors and building the feedback loops and building all the other devices. But the point of view and how to look at those problems very much came out of the work in the sixties and the fifties, which stemmed from the work at the Radiation Lab here, and a few other laboratories--certainly Bell Labs. What other schools were important in that? Stanford was relatively important at that time. Berkeley. IBM laboratories were starting to be a force in that field. It's hard to tell exactly where all these places were. There were certainly a lot of workers in Europe fairly active at that time. But it wasn't the kind of field where the field was so large that people had to rely on papers in journals and things like that. It was a field where people sort of sent their papers to the other people who were working on similar problems, and things tended to propagate relatively quickly.

Goldstein:

During that period, this informal period, what literature did you feel responsible for keeping on top of?

Gallager:

Certainly the Information Theory Transactions; Communication Technology, pretty much. Mainly IEEE Transactions on Communications. There was a Russian journal which I tried to keep up with because the Russians were doing a lot of interesting work on information theory in those days. I guess those were the major journals. I don't think that many people read that much literature. I've been impressed as I've become older and more cynical at how little people actually read the literature. That many articles in the various transactions are hardly ever read. Many of them have very little impact. Graduate students read them more than anyone else, I think. But very often the researchers are too busy doing their own thing, too busy working on a small number of things, to actually read all the literature that's going on in the field.

Goldstein:

Was there a need or any compulsion to keep up with work in circuits or systems, that sort of thing?

Gallager:

A little bit insofar as teaching these courses. I felt some urge to keep up with some of the ideas going on in solid state and things like this. Certainly chip technology and that sort of thing, which was very relevant as far as building these digital devices was concerned. One had to have an idea of which devices were important, to have some idea of what the computational complexity of these various devices was. There was some effort to keep more or less up to speed with that, but certainly not a detailed effort to be involved with all of it.

Goldstein:

You were alluding to the introduction of data communication, the first important application for all this work that had been done. Does that begin with ARPANET, with something before that?

Gallager:

There were certainly networks, a group of networks, that were starting to become important at about the same time. There wasn't really the need for very reliable communications in those networks, which was part of the reason why the people who worked on error correction became depressed. For networks such as the ARPANET, if you have errors in this transmission, the simplest thing to do is to detect them and transmit things over again. The only time when you have to receive things right the first time, is sometimes in situations where you only have communication in one direction. A lot of those situations are military situations. You have submarines who don't want to respond, or who can't respond for one reason or another. The military applications where there's really just one-way communication. With some of the satellite, space-probe problems, it takes a very long time to reach a space probe that's way out in the other end of the solar system. So there's really no possibility of a retransmission there. In communication systems which operate in real time, you really can't do retransmission. Like in a voice system. If you go back and retransmit something, it really doesn't work for voice. It doesn't work for video. It's just for non-real time data applications that you can use error detection and retransmission.
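A minimal sketch of the detect-and-retransmit approach follows. It assumes a CRC-32 checksum (via Python's standard `zlib.crc32`) and a stop-and-wait loop over a simulated noisy channel; the interview does not say which checksum or protocol the ARPANET used, so these choices and all function names are illustrative assumptions only.

```python
import random
import zlib

def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 of the payload so the receiver can detect errors."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")

def check_frame(frame: bytes):
    """Return the payload if the checksum matches, otherwise None."""
    payload, received_crc = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") == received_crc:
        return payload
    return None

def noisy_channel(frame: bytes, flip_prob: float, rng: random.Random) -> bytes:
    """Flip each bit independently with probability flip_prob (toy channel model)."""
    out = bytearray(frame)
    for i in range(len(out) * 8):
        if rng.random() < flip_prob:
            out[i // 8] ^= 1 << (i % 8)
    return bytes(out)

def send_with_retransmission(payload, flip_prob, rng, max_tries=50):
    """Resend the same frame until the receiver's check passes."""
    frame = make_frame(payload)
    for attempt in range(1, max_tries + 1):
        received = check_frame(noisy_channel(frame, flip_prob, rng))
        if received is not None:
            return received, attempt
    raise RuntimeError("no error-free copy received within max_tries")

rng = random.Random(0)
data, tries = send_with_retransmission(b"hello network", flip_prob=0.01, rng=rng)
print(data, "delivered after", tries, "attempt(s)")
```

The loop only works because the receiver can ask again, which is the two-way, non-real-time case described above; a one-way link or a live voice call has no such option and needs error correction instead.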

Goldstein:

You just suggested a real separation between the error-correction crowd and the error-detection crowd. That's true?

Gallager:

Yes. But as it turned out, error detection is almost trivial. There's really no problem there. After one understands a little bit about error correction, one realizes that error detection is just very easy to do. So it's never been a very active area of research. It's been something which as the need arises for it, all of the techniques are essentially there. I think this was part of the reason why the effort in error correction slowed down for a while around 1970. It built up again when we had compact discs and audio, because of the very sophisticated error correction which was used there. Again this was because of the fact that it suddenly became very easy to do immense amounts of computation very, very cheaply because of the signal-processing chips in the compact disc. You can sell consumer electronics, where for twenty dollars you do things that would have cost millions of dollars back in the seventies.

Information Theory and Computer Research

Goldstein:

It sounds like you're describing a few points of contact between this information theory work and computers, computer technology. There's the issue of cheap computation, the availability of computational power. There's the need for transmission of data over networks. I wonder if you can tell me about the merging of these two areas, you know, computer research and communication.

Gallager:

Well, unfortunately there's not a lot of merging. There's a lot of merging of problems in the sense that both the computer industry and the communication industry make enormously heavy use of the microprocessors that we have all around now. And the microprocessors lead to these signal-processing chips which do amazing amounts of computation which is necessary in communication systems. They are also very, very necessary in computer systems, and of course also useful in the central processors in computer systems. So there's that kind of union. The communication that goes on in a computer system through the bus is a real communication problem. It's probably been the limiting factor in computer systems for many, many years, and it's leading to all the parallel processing which is going on in the computer industry now, where again communication is the major problem. On the other side, in the communications field, when we start building large networks, the major problem is how to design the nodes which are doing all the processing, and those are computers. So that you can't separate the two anymore. But the culture of the people who work in the computer field is very, very different from the culture of the people who work in the communication field, as different as night and day.

Goldstein:

Really! In what ways?

Gallager:

Well, it’s hard to put one's finger on it. Part of it is that the success in the communication field, most of the successes, have come out of a more scientific effort. Mathematics, physics, have all been important. It's a field where if you read Communications Transactions or the Information Theory Transactions you can hardly pass a page without seeing a theorem. In the computer technology journals, you rarely see theorems. The mode of success there has been the mode of building systems. Very little science to it. It's called "computer science," for the same reason that social science is called "social science." You call things sciences that aren't sciences. Things that are sciences are never called sciences. I'm not saying this to knock people who work in the computer field. It's a different kind of field. The problems have traditionally been the problems of trying to organize very large systems, and trying to organize very large systems where you don't a priori have any organizing principles. When you build a telephone system, the organizing principles have grown up along with the size of the telephone systems. People know how to partition the problem. You build modulation systems, you build central offices. All those things have their own theories behind them. Switching theory has been very well developed over the years. It's changed, it's been modified as the technology changes. But there is this rich, rich theoretical structure, rich conceptual structure which says how things ought to be done, which gives people a clear view of what it is they're trying to accomplish, and gives a smooth transition from very mathematical approaches to very pragmatic approaches. And the mathematics and the pragmatics all had to do with each other, and they all worked together.

In the computer field, there's a lot of computer theory that has very little to do with how computer systems are built, and my perception is that it probably won't have. Every once in a while you see things starting to grow up which might provide a liaison, but the computer field changes so fast--I mean, the technology changes so fast--that somehow the understanding never seems to have a chance to catch up. The way we build computer systems is pretty much the result of the way the actual designers design their systems. It's very much try it and see if it works. Often you don't have much time to try it because the technology will be all different in another two years. And the companies will be different. Many computer companies will go broke, and the microprocessor companies will become powerful. In another five years, who knows what there will be. That's why the cultures are so different. It certainly isn't a set of two cultures that work together easily.

Translating Theory into Practice

Goldstein:

Probing the communications culture, it seems like a lot of your work has been of a very theoretical nature, where perhaps you've derived expression for the upper bound of the probability of an error, things like this. But occasionally, I saw, you worked on specific algorithms for coding, and these seem like different tasks. I wonder how the division of labor within the field has developed.

Gallager:

Well, it's strange. I don't view them as different because to me when I'm finding upper bounds on error probability, I'm simultaneously thinking of how does one achieve those bounds? How does one build systems which will do that? So I guess for me the two have always sort of gone hand in hand.

Goldstein:

Is that a prevalent attitude?

Gallager:

Not completely, but I think it's pretty prevalent.

In the information theory community there are people who are pure mathematicians, who are really just driven by the elegance of the field, who want to work on things because they see the innate beauty of what they're working on. I think a larger number, though, sort of go back and forth between pure theory, where there certainly is beauty, but also the beauty leads into ideas of how you actually build systems. I mean, most of us who are involved in this kind of thing don't actually build the hardware ourselves. And we rarely even get to the point of worrying about the kinds of chips. There's a sort of gradation. There are these pure theoreticians who just work on the theory, but also there are a number of colleagues I have who have started their own companies, who are more entrepreneurial, who continue to do theoretical work, but who are also very involved in the implementation of science. The people who work on this theory are typically people who-- I'm trying to say this right because in the last five years there's been enormous pressure on theoreticians, an enormous sense that the theory is not necessary anymore. That people should be working on real things. That we should be educating people to work on real things. I guess the view I've come up with--I'm trying to think this through--is that yes, theoreticians should be interested in real problems. But it's not the theory which is important. The thing which is important is trying to understand what's fundamental about a problem. Being able to step back from the details, stepping back from the particular things which are necessary in an implementation today because of the devices we have today for building things, and understand what's important about building a system. Understand for a computer system what the relationship is between, for example, communication on the bus and the processing that's going on. Find the right ways of looking at those problems. 
And in a coding system, to find the right way of looking at modulation versus coding, which in a sense are really two ways of looking at the same thing. The people who are successful in this field are the ones who've learned to look at these problems in a combined way, and to be able to see what's going on. They are people who when faced with a new technological problem come up with ideas that make sense because they can see the whole picture. People who don't study theory never are able to do that, because all they learn is formulas. They learn to work out problems. They learn to simulate things. But they never get this picture of what's going on. And to me the point of theory is to see a picture, is to be able to visualize what's important in a problem. To me that's the essence of engineering, which is why I've become very unhappy when I see people increasingly going into the details of implementing things. Of course people have to worry more about manufacturing now, about reliability, about all the problems of how a company should be made to work efficiently. But if everyone does that, we lose our capability of coming up with new technology. We can come up with new technology insofar as a small variation on what we're already doing. For people who work on this product cycle viewpoint, it's enough to simply be aware of new manufacturing techniques, of new technology and new chips, of what's available. You just keep changing the product and improving it. But if we don't have people doing this underlying research, solving these basic problems, building up this technology ahead of time, building up the ideas, finding the right ways of looking at problems, then pretty soon we lose the whole thing. That's what scares me.

Goldstein:

In the 1960s or, say, right before 1970 in that area, was there the perception that some people were more theoretically-inclined than others? I'm curious about an era when there was less external application, and I wonder if there was a division, including a cultural division, within researchers?

Gallager:

There was pretty much the same division as there is now, except back then there was a better balance. The people who wanted to be practitioners, I think, were pretty much encouraged to be practitioners by industry, and they could make more money that way. They could start their own companies and very often did that. But when they went to school, they were encouraged to learn more about theoretical developments, to learn to think more abstractly. For the most part this was helpful because by helping people who are going to be practitioners to think more abstractly, you're really helping them to stand back from the problem and see it in a more general setting. So that they can move more quickly from one technology to another technology. They can leave one field to get into another field easily. Then the whole academic enterprise was slanted towards doing more theoretical work, more abstract work. The value of learning more mathematics and knowing how to use the mathematics in engineering. Whereas today there's much more of a push throughout the academic world. Oh, I was talking about the split between more practically-oriented and more theoretically-oriented engineers.

Goldstein:

I'm not sure if you're talking about in communications specifically or engineering in general.

Gallager:

I think both. I think the split was similar in the sixties. And I think the split is similar now. Certain fields have always been much more pragmatic in nature. Like the computer field is very much bifurcated between theory and practice, where there's very little communication between the theoreticians and the practitioners. Communication has always been fortunate in the sense that there is quite a bit of communication. Most of the physics-based fields have a lot of communication between the more theoretically-oriented and the more practically-oriented. Partly because to work in physics these days, you have to have so much theoretical background anyway, that the practitioners are brought up to the point of being able to communicate with the theoreticians. Whereas in some fields, such as parts of communication technology, cellular telephone systems, and things like that, one doesn't have to know that much about the field to get into one's specialty and to operate there. So there tends to be a little less contact between the academic parts of the field, or the conceptual parts of the field, and the pragmatic parts of it. But I think the switch which has happened between the sixties and the nineties is that most of the students see their future in jobs as coming mostly from being much more pragmatic. Students who would like to learn more theory often feel pressured to at least clothe their work in the jargon of practice. I think this is particularly unfortunate because when somebody's doing theoretical work and they pretend it's practical, it's the worst of both worlds. I mean it takes away the conceptual beauty of what they're doing, but at the same time if they're not really interested in practice, what they're doing is not going to be very helpful there.

Goldstein:

In your own career, can you tell me something about the evolution of your interests into data networks?

Gallager:

Yes. It came partly through my desire to work with other people more. For a while I was the only information theorist at MIT.

From Information Theory to Data Networks

Goldstein:

What happened to the strong community?

Gallager:

Well, some of them went into computer science. Some of them went off and formed their own companies and things like that. Some of them got into the various biofields. Where did the others go?

Goldstein:

I can tick off who you're thinking of. That might be interesting to track down.

Gallager:

Yes. For example, Bob Fano went off into the computer field. Peter Elias went off into the computer field. Jack Wozencraft, who was on the faculty in the early days, left MIT and then came back later. In fact he and I both got into data networks at the same time. Part of the idea was that this was an exciting new field, and it looked like there were interesting things to be done there. There were lots of opportunities. The jobs were there for students. There was lots of student interest. That was part of the reason we both got into it. It was this combination of interesting problems, good job market for students, student interest, all of these things. In information theory it was increasingly hard to find the very best students who wanted to go into the field because it was viewed as being a somewhat stagnant field at that time. Now it's viewed as being much more active, partly because many of these application areas have sort of grown enormously.

Goldstein:

It's strange, actually, that you talk about information theory as being stagnant or your moving into data networks because it would appear to me to be a logical consequence of work in information theory. Was it really a disruption, a departure from it?

Gallager:

Well, it was a disruption for me because I went into computer science for a while first. Then from computer science I went back into data networks. It was not a disruption. It was something which was interesting. I could see lots of interesting problems there. But I really felt the need to go off and start something new and different. Sometimes when you're working in a field too long, you find yourself going back and working on problems you worked on a long time ago. You forget that you worked on them a long time ago. You work on them for a while, and you suddenly at a certain point, you say, gee, I was here before once. At that time it's probably time to start working in a new area. [Chuckling] Part of the reason for getting into data networks was that it was just this exciting new field.

Goldstein:

When you were working in computer science, what sort of things were you looking into? When did that begin, and what year did you move into data networks?

Gallager:

Well, very early '70s. I probably moved into data networks around '76 or so. First I was interested in computer languages and some of the theoretical aspects of that. There was a theoretical background of lambda calculus and logic and recursive function theory that forms the theoretical background of what was going on in the computer language field. So I got into that. I found it interesting. Then as I got into it more, I realized that it really had nothing to do with computer languages at all. It was an interesting mathematical problem, but it had nothing to do with either modern languages or the way that people use language in writing programs. Then I got into computer architecture with the hope of trying to find some structure there, and found relatively quickly that it's a field which is moving so quickly due to new technology that the hope of putting structure into it was almost hopeless. The developments in architecture come from people who build systems. You take new components, and you put them together in different ways, and you get the new system out on the market very, very quickly. If you stop to think about it, you're a year behind the times. The way that that technology goes is one year behind the times means twice as slow and twice as expensive. So that you have to have enormous gains from understanding the problem better to make it worthwhile to understand it better.

I felt for a person of my nature that was just the wrong field for me. Computer networks have always had a little bit of the same flavor. It's always been hard there, with the rapid evolution of the field, to be able to put some kind of sensible structure in it. But in fact this turned out to be easier there because the problems sort of stay the same. The speeds go up, the lengths of packets go up, but there's a scaling principle that says that the underlying problems are the same as they always were. There's a problem of congestion in networks, which is a fundamental problem, which arises because most computer data is very bursty in nature. Sometimes somebody wants to send an enormous amount of data; lots of time they don't want to send any data at all. So if you have this big network with lots of inputs coming into it with the users using it very sporadically. Using it very intensively for a short period of time, and not using it at all for a long time. The problem that that brings up is that when one user starts to use a network very intensively, lots of data starts flowing through the whole network. Queues start to build up, all the other users start to get poorer service. The other users that are getting poorer service, sometimes what happens is the queues fill up, buffers overflow, sources start to send their data for a second time because they don't get acknowledgments. The whole network breaks down. Because of some users sending enormous amounts of data, all the users suffer. The problem is how do you design networks to avoid that problem? It's been the same problem since the early days of the ARPANET. It's a problem which still isn't satisfactorily solved. It's a problem we see coming up in these all-optical networks that we're thinking about now and trying to design now. In that field there is some stability there. There are fundamental problems which have to be understood.
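The collapse mechanism described above (a burst fills the queue, the buffer overflows, and sources resend what was lost) can be sketched with a toy model. This is a minimal illustration, not a model of the ARPANET: the single queue, the rule that every dropped packet is retried on the next tick, and all of the numbers are assumptions chosen for the example.

```python
def simulate(arrivals, service_rate, buffer_size):
    """Toy single-queue model: packets that find the buffer full are
    dropped and offered again on the next tick (assumed retry rule)."""
    queue = 0      # packets waiting in the buffer
    retry = 0      # dropped packets waiting to be resent
    offered = 0    # total transmissions, including retransmissions
    dropped = 0    # total buffer-overflow drops
    for new in arrivals:
        incoming = new + retry
        offered += incoming
        accepted = min(incoming, buffer_size - queue)
        retry = incoming - accepted
        dropped += retry
        queue += accepted
        queue -= min(queue, service_rate)   # link drains the queue
    return {"offered": offered, "dropped": dropped, "backlog": queue + retry}

# Same 20 packets of user data, sent smoothly versus in one burst.
steady = simulate([2] * 10, service_rate=3, buffer_size=10)
bursty = simulate([20] + [0] * 9, service_rate=3, buffer_size=10)
```

In this sketch the steady source never overflows the buffer, while the bursty source, carrying the same amount of data, puts noticeably more traffic on the link because of retransmissions.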

Goldstein:

You were just saying that there's a consistency in problems. I'm not sure if you meant throughout the brief history of data networks or between communications issues and data networks. I thought it was the latter you were saying.

Gallager:

No, the former. The problems in data networks seem to be quite different from the problems in communication.

Goldstein:

Were there any conceptual links between your work in those two stages of your career? Or maybe methodological links?

Gallager:

Well, there were certainly methodological links in terms of trying to use the same kinds of approaches. Essentially this business of trying to understand what's going on in the problem, as opposed to trying to build something, or as opposed to trying to construct a purely mathematical theory of it. Something in the middle is always what I found fascinating. My one effort to combine the two led to a very interesting paper. It was on fundamental limitations of protocols in data networks. But in retrospect, when I look at it, it was an unimportant paper. It was asking the wrong questions. [Chuckling]

Goldstein:

In what way? Tell me what got you on that topic and what you said.

ARPANET, Protocol and Overhead

Gallager:

The thing that got me on that topic was that in the early days of data networks, one of the big problems was that the amount of overhead that was being sent was enormous. The networks were expensive, and one would have liked to have sent more data through them. But all of the capability of the network was being used up in sending this protocol. In the early days of the ARPANET, some simple calculations showed that there was about one bit of data for every hundred bits of control, and that's pretty outrageous. [Chuckling]

Goldstein:

Was that because there were some real-time communications going on? I remember you wrote something about somebody just typing the same character.

Gallager:

Yes. This business of something called echoplex where you send one character, and with teletype. The ordinary way of a terminal communicating to the computer where you type a character, when the computer gets the character, it sends it back, and that's what prints it for you. Therefore you have a very simple method of error control that way. If what comes back is different from what you typed, at that point you knew that the computer had the wrong thing. You could go back and you then corrected it. What that did was that every time a character was going through the network, it would have to have a whole packet connected to it, and therefore you'd have to send this whole packet with all the control overhead for the one 8-bit character. There would have to be an acknowledgement which had to come back. The way the ARPANET worked, because they were worried about having enough storage at the other end, there would have to be another packet coming back saying: Now you can send more data. There were really four packets that went back and forth for each one character of data that comes in. Now, that was a relatively easy problem to fix. In fact it started because people wanted to find a very quick and easy way of using the ARPANET for assorted traditional data transmission from terminals.

Goldstein:

They developed a protocol without much thought?

Gallager:

Well, not without much thought. They developed a protocol which would allow them most quickly to start using the system. Part of the genius of the early ARPANET and part of its problem was that it was in a sense a big test bed. It was trying to sell the idea of data communications to everyone. By getting the universities involved in it, by getting the universities doing research on it and by getting people to use it quickly and easily, it was the best way of generating that excitement, and generating a new technology, generating the people to work on it. It was very effective in that sense. For a while it was a good idea in the sense that it kept the network filled up when there wasn't much data to send. [Laughter] But ultimately that problem had to be resolved, and it was resolved in a sensible way by not sending a single character of data; you take a whole line. When you press carriage return, at that point the packet would be sent, which was a relatively simple fix. But the problem I started to think about was how much control information do you really need as a minimum? How much of it is just the desire to do something in a simple, quick and dirty way, and how much of it is essential? That's what this paper was about, trying to figure out which parts of the protocol were really needed, and which parts were un-needed.
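The overhead arithmetic behind the character-at-a-time problem and the line-at-a-time fix can be sketched as follows. The interview gives only the four packets per exchange, the 8-bit character, and the rough one-to-a-hundred ratio; the per-packet overhead of 200 bits and the 60-character line are hypothetical values picked so the example is easy to follow, not figures from the ARPANET specification.

```python
def control_bits_per_data_bit(data_bits, packets_per_exchange, overhead_bits_per_packet):
    """Control bits sent for each bit of user data in one exchange."""
    return packets_per_exchange * overhead_bits_per_packet / data_bits

OVERHEAD_BITS = 200      # hypothetical control bits per packet
PACKETS = 4              # packets per exchange, as described in the interview
CHAR_BITS = 8            # one 8-bit character
LINE_CHARS = 60          # hypothetical line length in characters

# One packet exchange per typed character.
per_char = control_bits_per_data_bit(CHAR_BITS, PACKETS, OVERHEAD_BITS)

# One packet exchange per line, sent when carriage return is pressed.
per_line = control_bits_per_data_bit(CHAR_BITS * LINE_CHARS, PACKETS, OVERHEAD_BITS)
```

Under these assumptions, sending each character separately costs about a hundred control bits per data bit, while batching a whole line brings the ratio down to under two.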

Goldstein:

But you said it was barking up the wrong tree?

Gallager:

Well, it was barking up the wrong tree because virtually all of it was un-needed.

Goldstein:

Most of the protocol, the overhead is?

Gallager:

Yes. The amount of it that was really needed was so small that optimizing it was unimportant. The real problems in protocols are trying to do something which is simple enough that people understand it. As our networks get faster and faster, that problem becomes much more important because suddenly these systems get so complicated that people can't understand them. They tend to break down, and people can't figure out what went wrong. The ARPA Network, once the whole thing was brought down for about three days one time because of a silly programming error. But it was incredibly hard to find because the system was so complicated.

Goldstein:

What was your involvement with ARPANET?

Gallager:

Well, DARPA supported our work in data networks for a number of years. Our involvement with the actual network was not at all close. We wrote papers about networking in general, which might have had some impact on the people who were designing and changing the system. Hopefully it had some impact on the people who started to design later networks.

Goldstein:

I mean, I don't even know at a fundamental level whether you were involved in any of the design questions of ARPANET. Whether you were a user or whether you commented on it as an observer.

Gallager:

I was a user and an observer. I was not a heavy user, but I was a user. Mostly an observer. Mostly my interest was not in the ARPANET itself, but in how to build subsequent networks. The ARPANET was built - my recollection is somewhere around 1972 or so. It kept getting changed and modified as time went on. But I was never involved in the direct loop of those changes, never had terribly close association with the people who worked on doing that.

Goldstein:

Did you communicate directly with any designers of other networks? You said that you were interested in influencing subsequent designs.

Development of Commercial Networks and Ethernet

Gallager:

Let's see. Codex was building networks at that time.

Goldstein:

For whom now?

Gallager:

They were building private networks, and this was partly successful, partly not all that successful.

Goldstein:

When you say private networks, you mean for airlines or banks, things like that?

Gallager:

Yes. But they weren't so much interested in the software for those systems as for the underlying network. Putting together the systems of leased phone lines and the protocols for operating the network, and that sort of stuff. What was happening in those days-- I keep talking about people in the field not really knowing what sort of thing was going on in the field. What was happening in those days was that a lot of the theoretical development was concerned with wide-area networks which was where the most interesting theoretical problems were. Where the practical field was really growing up, was in local-area networks, which was where all of the commercial work was being done. So Ethernet was becoming very important in those days, ring networks and so forth. Those local-area networks was really where computer people became familiar with networking and began to rely on networking. I mean, the really important problems were things like file servers. How does an organization where everybody has their own personal computers, how do they share data? How do they get a hold of the files they need? How do you save money by having diskless work stations? If you have a diskless work station, you obviously need to get all of your memory somewhere else. So that was one of the main uses of local-area networks.

In a sense in those days I was working on the wrong problem again. The problem which was really pushing the commercial field was how do you build better local-area networks? Partly at that time I was getting interested in multi-access or random-access communications problems, which is pretty much what Ethernet is. But the kinds of theoretical problems we were interested in then were the questions of what's the ultimate you can do with a random-access network? My sense is that that work never had a lot of application. I think it probably won't because the way the local-area network field is going, it's going all optical at this point. The random-access aspects of it are probably not going to be the important aspects. Every time I say something like that, two years later [Laughter] something happens in the field where it turns out to be just the opposite. But my sense now is that all of this work that was done in the theoretical community on random-access communication really doesn't have much hope of application--at least in anything I can see coming up.

Goldstein:

Because it's going digital? Not because the channels are so broad that there's no conflict? Is that what you mean?

Gallager:

No, it's not that. It's that the assumptions we were using with our random-access work were pretty much that delay was relatively negligible. The reason for that was that the transmission speeds were relatively low and that the distances involved were fairly small. When the data rates get up in the gigabit region, delay becomes very important. Therefore what you need to do with a random-access system is just a very different kind of technology. The set of ideas that are important become different. You have to focus on delay much more and on collisions much less. So you wind up with different kinds of structures.
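One way to see why delay stops being negligible at gigabit rates is to compare the propagation delay across the network with the time it takes to transmit one packet. The sketch below assumes a 1 km cable, a signal speed of 2e8 m/s, and 1000-bit packets; these are illustrative values, not figures from the interview.

```python
def delay_ratio(distance_m, rate_bps, packet_bits, speed_mps=2e8):
    """Propagation delay divided by packet transmission time."""
    propagation_s = distance_m / speed_mps     # time for a bit to cross the cable
    transmission_s = packet_bits / rate_bps    # time to put one packet on the cable
    return propagation_s / transmission_s

# Same hypothetical 1 km network and 1000-bit packets at two data rates.
slow = delay_ratio(distance_m=1000, rate_bps=10e6, packet_bits=1000)  # 10 Mbps
fast = delay_ratio(distance_m=1000, rate_bps=1e9, packet_bits=1000)   # 1 Gbps
```

With these numbers the ratio is well below one at 10 Mbps, so a sender learns about a collision while its packet is still going out, and well above one at 1 Gbps, where the whole packet has left before any feedback can arrive.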

Error Correction and Layered System

Goldstein:

In the work on data networks, is error correction not an issue? Have you looked at that? Or has anybody?

Gallager: