Electronic and Computer Music

This article was initially published in Today's Engineer in April 2011.

Computers play an integral part in today’s music industry. From recording and production to composition, many of today’s popular artists use computers in their work. Although the idea may evoke images of sophisticated, high-tech machinery, neither computer music nor electronic music is a recent phenomenon. Electronic music has been produced for over a century, and music has been made with computers since before the era of rock and roll. While computers have gained widespread favor in mainstream recording and production only in the past 30 years, the genre has a rich and deep history.

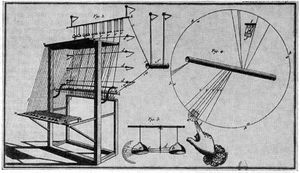

Electro-acoustic instrumentation dates back to the mid-18th century with the Denis d’or (1753) and the Clavecin électrique (1759). The Denis d’or is known only through written accounts, but diagrams of the Clavecin électrique survive. The Clavecin électrique uses a globe generator to charge a pair of bells hanging from iron bars. When a musician presses a key, a clapper oscillates between the bells and produces a particular note. These instruments were developed roughly a century before the phonautograph (1857), the earliest known sound-recording device.

Elisha Gray’s acoustic telegraph (1875) is widely considered to be the first synthesizer. Other electronic instruments soon followed. The Telharmonium, developed by Thaddeus Cahill between 1892 and 1914, was one of the first to be used in live performance: it was played in a music hall, and its music was broadcast over telephone lines. However, its enormous size (over 200 tons and 60 feet long) and its tendency to cause crosstalk on those telephone lines ultimately led it to fall out of favor. Other early electronic instruments, such as the Audion Piano (1915), the Theremin (1920), the Croix Sonore (1926) and the Hammond Organ (1934), proved more successful. These instruments opened new approaches to sound and composition that had not been possible with traditional instruments.

"Sketch of a New Esthetic of Music," published by Ferruccio Busoni in 1907, was one of the most influential writings in the development of electronic music. It discussed several newly possible approaches to music, including microtuning: the use of scales built on intervals smaller than a semitone. Futurist Luigi Russolo’s "L'Arte dei Rumori" ("The Art of Noises") also took an avant-garde approach, valuing noises such as roars, whistles and buzzing. In 1914, the first concert to put Russolo’s manifesto into practice, featuring his Intonarumori (acoustic noise-making instruments), was so poorly received that it caused a riot. These ideas later influenced avant-garde electronic composers such as Pierre Schaeffer, Edgard Varèse, John Cage, Pierre Henry, George Antheil and Karlheinz Stockhausen.
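Microtuning can be made concrete with a short worked example. The model here is an assumption chosen for illustration rather than Busoni’s own formulation. It takes an equal-tempered scale that divides the octave (a 2:1 frequency ratio) into $N$ equal steps, with the reference pitch $f_0$ set to A4 = 440 Hz. Ordinary Western tuning uses $N = 12$ semitones. A quarter-tone scale uses $N = 24$, so each of its steps is half a semitone:

$$
\begin{aligned}
f_n &= f_0 \cdot 2^{n/N} \\
N = 12:\quad f_1 &= 440 \cdot 2^{1/12} \approx 466.16\ \text{Hz} \quad \text{(one semitone above A4)} \\
N = 24:\quad f_1 &= 440 \cdot 2^{1/24} \approx 452.89\ \text{Hz} \quad \text{(one quarter tone above A4)}
\end{aligned}
$$

The quarter tone falls between A4 and the next semitone, a pitch that a conventionally tuned keyboard cannot play.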

The advent of the computer expanded the possibilities of electronic music composition. The first computer used to play music was the CSIR Mk1, developed in Sydney in the late 1940s by the Council for Scientific and Industrial Research. Designed and built by Trevor Pearcey and Maston Beard and programmed by Geoffrey Hill, the CSIR Mk1 publicly played "Colonel Bogey" in 1951. The machine, renamed CSIRAC in 1955, was programmed to accept punched paper tape encoding standard music notation. No recordings of the CSIRAC’s early performances exist. The first known recording of computer-generated music is a medley of "God Save the King," "Baa Baa Black Sheep" and "In the Mood" played by the Ferranti Mark 1 at the end of 1951.

Hired by Bell Labs in 1954, Max Mathews is widely considered one of the founding fathers of computer music. Mathews wrote MUSIC-I, the first program to produce digital audio, which ran on an IBM 704 computer. The CSIRAC and the Ferranti had produced only analog audio. With John Chowning, Mathews helped set up a computer music program using the Stanford Artificial Intelligence Laboratory’s computer system in 1964. Chowning went on to develop the FM synthesis algorithm in 1967, and in 1975 he founded the Center for Computer Research in Music and Acoustics (CCRMA, pronounced "karma"). CCRMA later employed John Pierce, a longtime Bell Labs researcher who contributed pioneering work to digital speech synthesis.

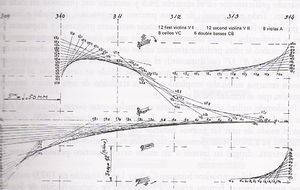

Early computers used for music could not process data fast enough to play in real time, but they could be used to generate scores. One of the first composers to use computers was Iannis Xenakis. Along with Dennis Gabor, Xenakis was among the first to work with granular synthesis. He also wrote FORTRAN programs that generated scores to be played on traditional instruments. Because these experimental scores relied on mathematical models such as set theory and stochastic processes, they demanded a great deal of mathematical precision, and computers made those calculations much simpler. Gottfried Michael Koenig also worked with mathematically abstract compositions. Koenig wrote the programs Project 1 (1964), Project 2 (1966) and SSP (1971), which used algorithmic composition to turn the results of various mathematical equations into music.

Academic interest in computer music grew in the 1970s with the International Computer Music Conference, first held in 1974, and Computer Music Journal, first published in 1977. These gave scholars venues for papers on music theory in computing and on various aspects of digital audio. Early topics included software and hardware for digital synthesizers, signal processing languages and editors, interviews with composers, computer music scores and products of interest. Both Computer Music Journal and the International Computer Music Conference remain active today and are the leading academic venues in the field.

With the introduction of the personal computer in the 1970s, computer music became affordable for hobbyists and amateurs. Many early personal computers and game systems, such as the Atari and the Commodore 64, came with sound chips. The Commodore 64’s MOS Technology SID (Sound Interface Device), designed by Robert Yannes, was a programmable chip widely used to produce video game music. Music software such as Chris Hülsbeck’s "Soundmonitor" (1986) and Karsten Obarski’s "Ultimate Soundtracker" (1987) made composing on the computer even easier. Several techno artists of the early 1990s, such as Nebula II and Urban Shakedown, were among the first to use this software for commercially released music.

The use of 1980s software and hardware in electronic music saw a resurgence in popularity in the early 2000s through artists like Bit Shifter, who uses Game Boys to create music reminiscent of vintage video games. More experimental artists such as Baseck and Rokhausen combine video game systems with other devices to create, manipulate and construct unconventional sounds and noise.

In every genre, from rock and roll and R&B to techno, noise and avant-garde classical, computers play an essential part in the production of today’s music. Nearly all of today’s music is recorded, mastered and pressed digitally, and music composed solely on and by computers is more common than ever. Vocal autotuning and synthesized accompaniment play a major role in today’s mainstream music industry.