The First PCs

Personal computers, such as today’s desktop, laptop, pocket, and wireless machines, are not a single technology but the result of hundreds or thousands of inventions, contributed by many inventors over the course of decades. The idea of a personal computer emerged in the 1950s, when journalists and commentators half-jokingly predicted that someday we would all have computers in our homes (along with other futuristic technologies like personal helicopters and nuclear-powered automobiles). Some electronics hobbyists, however, went to work building simple calculators based on the designs of the large “mainframe” computers used by scientists and businesses.

The conception of a personal computer changed little over the next two decades. Only in the early 1970s, with the introduction of the microprocessor, did the idea of the personal computer really begin to move toward reality. Minicomputers, which had appeared in the 1960s, were powerful and far smaller than mainframes, some small enough to fit on a desktop, but they were much too expensive for the average consumer. Within a few years of the microprocessor’s arrival, simpler and less expensive “microcomputers” became available, and many people became computer hobbyists. A milestone was the Altair 8800, a microcomputer kit first offered in 1975 by MITS, a company headed by Edward Roberts. Two young programmers, William Gates and Paul Allen, wrote a version of the BASIC programming language for the Altair, which MITS then sold. Gates and Allen formed Micro-Soft (now Microsoft), a company that would lead the development of personal computer software.

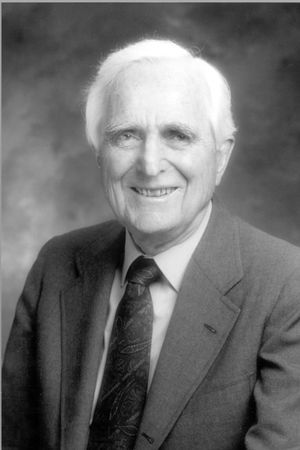

Meanwhile, two other computer enthusiasts, Steven Jobs and Stephen Wozniak, were also hard at work. The pair formed Apple Computer Company in 1976. The first Apple computer was sold as an assembled circuit board, without a case or keyboard, but in 1977 the company introduced the Apple II, which had a sleek, beige plastic case, could display color graphics, and used the 6502 microprocessor made by MOS Technology. In the early 1980s Apple designed a computer and software intended to be more “user friendly.” Called the Macintosh and introduced in 1984, it used a device called a mouse (invented in the 1960s by Douglas Engelbart) to let users point and click to execute commands such as opening a file. The Macintosh also borrowed the idea of the graphical user interface (GUI), in which “icons” on the screen represent files and disks that the user can move, copy, open, or delete using the mouse.

While the Apple II was well on its way to creating a new PC market, the mainframe computer giant IBM decided to join the PC revolution. In 1981 the company introduced a powerful desktop computer called the IBM Personal Computer, or PC (the first model was the 5150), and used its well-known brand name to capture much of the market. For software, IBM turned to Microsoft to supply MS-DOS (Microsoft Disk Operating System), which served as the machine’s basic software and managed its disk drives. Intel, now a famous firm in the personal computer chip field, made the PC’s 8088 microprocessor.

Throughout the 1980s, the battle for market share between Apple and IBM depended heavily on software. The Apple became known as a “beginner’s” computer, used for playing games, while the PC was thought to be a more “serious” machine and was purchased in large numbers by businesses and computer enthusiasts. The line between the two was never very sharp, and it began to blur when Microsoft announced Windows in 1983 and released it in 1985. Windows, which relied on a mouse and a graphical user interface, was clearly influenced by innovations at Apple. A growing emphasis on graphics (and later sound and video) drove rapid changes in microprocessors, memory, and other personal computer technologies that continue today.

By the late 1980s, Microsoft had become the largest software company in the world. IBM’s dominant position as a maker of personal computers was fading, but numerous competitors made “IBM-compatible” computers that ran Microsoft’s software, including Windows. Motorola and Intel continued to be major suppliers of successive generations of microprocessors. While Microsoft supplied Windows to any interested computer manufacturer, Apple resisted letting others use its software, and its share of the market gradually shrank. For many years afterward, the great majority of personal computers ran Windows.

As the World Wide Web became increasingly important, personal computers of all brands evolved to take advantage of networks. The Web introduced a new type of software, the browser, that expanded what a computer could do. By the end of the 20th century, the personal computer had been transformed from a difficult-to-use toy for hobbyists into a necessity of daily life.

Related: https://ethw.org/Milestones:Apple_Macintosh_Computer