Milestones:PageRank and the Birth of Google, 1996-1998

- Date Dedicated

- 2024/05/20

- Dedication #

- 256

- Location

- Stanford, CA

- IEEE Regions

- 6

- IEEE sections

- Santa Clara Valley

- Achievement date range

- 1996-1998

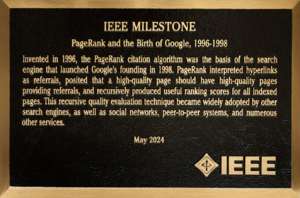

Title

PageRank and the Birth of Google, 1996-1998

Citation

Invented in 1996, the PageRank citation algorithm was the basis of the search engine that launched Google’s founding in 1998. PageRank interpreted hyperlinks as referrals, posited that a high-quality page should have high-quality pages providing referrals, and recursively produced useful ranking scores for all indexed pages. This recursive quality evaluation technique became widely adopted by other search engines, as well as social networks, peer-to-peer systems, and numerous other services.

Street address(es) and GPS coordinates of the Milestone Plaque Sites

- Site 1: Google Visitor Experience on the west side of the Gradient Canopy building, 2000 N. Shoreline Blvd, Mountain View, CA 94043 US (37.421454, -122.080537)

- Free parking at the Alta Garage at 1001 Alta Ave., which is an 8-minute walk from the Google Visitor Experience; a free shuttle bus is available from this garage

- Site 2: Gates Computer Science Building, 353 Jane Stanford Way, Stanford, CA 94305-5008 US (37.4300184, -122.1733027) (not yet installed)

- Site 3: Computer History Museum, 1401 N. Shoreline Blvd, Mountain View, CA 94043 US (37.414757, -122.077679)

Details of the physical location of the plaque

- Site 1: On the west side of the Google Gradient Canopy building: on a stanchion just outside the Cafe, to the right of the Google Store's wide entry area

- Site 2: On the 4th Floor in an Internet-focused section of a new Exhibit Area that showcases the rich history of Stanford's Computer Science Dept.

- Site 3: On the inside face of the front patio brick wall, near the museum's Main Entrance

How the plaque site is protected/secured

- Site 1: Building security; 24/7 access

- The hours of the Cafe and Google Store are included here

- Site 2: Building security; open to the public on weekdays from 8am-6pm

- Site 3: Building security; 24/7 access

Historical significance of the work

Background

Information Retrieval concerns itself with finding information that addresses a given information need. Given a query describing an information need and a corpus of documents, an information retrieval system surfaces those documents that are relevant to the information need, placing the most relevant documents towards the top of the list of results. This aspect of rank-ordering results by decreasing order of relevance is known as the ranking problem, and is a central part of research in Information Retrieval.

Prior to the inception of the World Wide Web, ranking algorithms were exclusively based on lexical features; that is, the lexical similarity between a query and the content or the metadata (such as keywords or subject descriptors) of a document. In comparison to print media, the World Wide Web was designed to be a collaborative space, where authors could create hyperlinks from web pages they created to other web pages, and could continuously revise existing web pages, including by adding additional links.

The presence of hyperlinks and the ability to revise existing web content introduced a fresh signal for ranking algorithms: peer endorsement. The idea of peer endorsement can be traced back to the sociology and the library science communities. In 1953, Leo Katz introduce a "status index" (see Ref21A) that is defined as the weighted sum of all paths between two nodes in a graph, with shorter paths having higher weight. In 1972, Eugene Garfield proposed that citations of a given research article can be viewed as an endorsement of that article, and that appropriately normalized citation counts can be used to quantify the impact of individual papers, entire journals, as well as researchers and institutions (see Ref21B).

With the advent of the web, multiple research groups came up independently with the idea of viewing hyperlinks as citations and leveraging the resulting quantitative measure as a ranking signal, to complement the existing lexical features. Massimo Marchiori proposed to use a straight count of “outer links” (that is, links from unaffiliated websites) as a ranking signal (see Ref19). Yanhong Li and Larry Rafsky suggested aggregating the anchor text of all hyperlinks to a given document into a “hyperlink vector” for that document, and then to perform traditional lexical ranking on both the content of a document and on its hyperlink vector (see Ref20). Jon Kleinberg developed the concepts of “hubs” and “authorities”: hubs are web pages containing many links to other pages that are authoritative on a particular subject, and authorities are web pages that are endorsed by many hubs as being relevant to a given subject (see Ref16, Ref17, Ref18). This definition can be formally expressed as a mutual recurrence relation, and solved using fixed-point iteration. And finally, Larry Page and Sergey Brin suggested computing a quality score for each web page as the sum of the normalized quality of the pages linking to it (see Ref13, Ref14, Ref15). This quality score, which they dubbed PageRank, had a profound impact both on academia and industry. PageRank is widely credited with being the key factor that allowed Google to surpass entrenched competitors such as AltaVista, Excite and Infoseek, and virtually every search engine adopted a variant thereof. PageRank also inspired much follow-on research, on how to compute PageRank scores for ever-larger web graphs, on how to compute PageRank scores that are personalized to the interests of particular users, on how to leverage PageRank for web page acquisition (“crawling”) in addition to result ranking, and more. There are numerous survey papers on the mathematical properties of PageRank (see Ref32, Ref33, Ref34).

The PageRank algorithm

The core idea of the PageRank algorithm is that hyperlinks present endorsements of other web pages, and that pages endorsed by many high-quality pages are themselves of high quality. The endorsement ability of each web page is distributed uniformly among all the pages it references.

Formalizing this idea, given a graph with web pages v ∈ V and hyperlinks (u,v) ∈ E where the edge set E ⊆ V × V is the set of all hyperlinks between pages in V, we could define the quality Q(v) of a web page v as:

Q(u) ⁄ out(u)| Σ |

| (u,v) ∈ E |

where out(u) = | v : (u,v) ∈ E | is the out-degree of u, i.e. the number of hyperlinks contained in web page page u.

This recurrence relation can be solved in a straightforward way, by assigning an initial value (say 1 ⁄ |V|, so the Q(v) sum up to 1, which we can view as the “charge” of the system) to the quality scores of each page and then performing power iteration. However, unfortunately this does not produce meaningful results – the solution converges to Q(v) = 0 for all v that are not part of a strongly connected and closed component. This is because not all web pages contain hyperlinks, and the quality score of such “sink” pages disappears during the process of power iteration, lowering the remaining charge during every iteration. Eventually, the entire charge of all nodes that are not part of a strongly connected and closed component leaks away.

To fix this issue, Page and collaborators assign a minimum quality score – a minimum charge – to each web page. Formally,

| Q(v)= 𝜆 (1 ⁄ | V |) + (1-𝜆) | Σ | Q(u) ⁄ out(u) |

| (u,v) ∈ E |

With this modification, every web page is guaranteed to have a score of at least 𝜆 1 ⁄ | V |, and pages with endorsements will have larger scores even if they are not part of a strongly connected and closed component. The system still “leaks charge” through its sink nodes during the process of power iteration, but that leakage is bounded to be at most (1-𝜆)(1 ⁄ | V |).

The PageRank formula has been popularly interpreted as modeling the behavior of a “random web surfer” who with probability 1-𝜆 will follow a hyperlink from their current page and with probability 𝜆 will jump to a page chosen uniformly at random. Alas, it should be emphasized that PageRank is not intended to model the web browsing behavior of users but the endorsement actions of content providers.

Scaling PageRank to billions of pages

The PageRank computation can be efficiently distributed across multiple machines by sharding the set of web pages across machines (see Ref22). Given a hyperlink from page u to page v, if u and v are mapped to the same machine, the update can be performed locally; otherwise, updates are transmitted over a network link from u’s machine to v’s machine. Updates can be streamed and thus are limited by network bandwidth but not by network latency.

There is a rich body of literature on how best to partition the web graph; in practice, the simple heuristic of mapping pages belonging to the same web site to the same partition does very well, since most hyperlinks are between two pages on the same web site (see Ref23).

Kamvar et al explored using methods other than power iteration to compute the fixed point of the PageRank equation. They proposed an algorithm called Quadratic Extrapolation that converges substantially faster than power iteration (see Ref26). Kamvar et al. also developed an ingenious way to speed up PageRank computation (see Ref25). Since most hyperlinks are local, they start by computing local PageRank scores for each web site, then scaling these local scores according to the importance of each site (which is computed using a separate, site-level PageRank computation), and finally using the resulting PageRank vector as the initialization of an ordinary PageRank computation. Since the scaled combination of local PageRank vectors is close to the final fixed-point, the global PageRank computation converges much faster.

It is straightforward to express the power iteration algorithm in a form that propagates page score changes rather than page scores. This allows for distributed implementations where updates to pages mapped to different machines are accumulated locally over several epochs before being transmitted to the peer machines (see Ref24).

PageRank for Web Crawling

Junghoo Cho, Hector Garcia-Molina and Larry Page suggested early on prioritize web pages with high PageRank when crawling the web, the intuition being that such pages are more important and more useful than others, and that such a crawl scheduling policy will maximize the utility of the fraction of the web indexed by the search service. They devised an efficient way to do so (see Ref27). Subsequently, Najork and Wiener showed that a simple breadth-first search crawl closely approximated a PageRank-based scheduling policy (see Ref28).

Topic-Sensitive and Personalized PageRank

The original PageRank paper suggested the possibility to personalize PageRank scores to individual users, by assigning higher minimum scores to known pages of interest to that user. In practice, this would be extremely expensive to compute. A more tractable approach is to compute topic-specific PageRank scores, for a manageable set of topics, and to model user interests as a linear combination of topic preferences. In this model, each page has a separate quality score for each topic. Haweliwala devised such a topic-sensitive scoring system (see Ref29, Ref30). Glen Jeh and Jennifer Widom developed this idea further, making the computation for the multiple PageRank vectors more scalable (see Ref31).

PageRank in peer-to-peer systems

The early 2000s witnessed a lot of interest in peer-to-peer (P2P) computing, that is, leveraging a set of computers that are distributed over a wide geographic area and not necessarily trusted to perform tasks that benefit from distribution. Applications of P2P computing include file-sharing (e.g. Napster), collaborative backup (e.g. Plan42), searching for extraterrestrial life (SETI), and more. There have been multiple lines of research on using the distributed computing infrastructure of P2P systems to compute PageRank scores (see Ref37), and to use the scores computed in such a fashion to rank the peers participating in a P2P network (see Ref38, Ref39, Ref40).

Surveys of papers on PageRank

The mathematical elegance and deceptive simplicity of the PageRank formulation, combined with its outsized impact on web search engines and other online services, have led to a plethora of papers analyzing its properties. Two examples of such papers are “Inside PageRank” (see Ref32) and “Deeper Inside PageRank” (see Ref33). Pavel Berkhin’s “A Survey of PageRank Computation” provides a good summary of the flurry of PageRank-inspired Research between 1998 and 2005 (see Ref34). David Gleich’s “PageRank beyond the Web” surveys applications of PageRank in fields as diverse as biology, chemistry, neuroscience, and physics (see Ref35). Sebastiano Vigna paper "Spectral Ranking" (see Ref36) provides a history of spectral ranking algorithms, of which PageRank is one exponent.

Features that set this work apart from similar achievements

Google was the first commercial search engine to leverage hyperlinks as a ranking signal; earlier search engines (e.g. AltaVista, Excite and Infoseek) based their ranking purely on the similarity between query and result text. That being said, multiple research efforts at the time investigated link-based ranking signals. Contemporary technologies similar to PageRank fall into two classes: simple link-counting as proposed by Marchiori (see Ref19) and Li and Rafsky (see Ref20), and query-dependent authority scores as proposed by Kleinberg (see Ref16, Ref17, Ref18). The PageRank algorithm improves on the former idea by assigning more weight to endorsements from high-quality web pages (making the signal more robust to adversarial behavior such as link spam). Query-dependent quality measures such as Kleinberg’s “hubs and authorities” scores are by definition computed at query time, a process that introduces substantial latencies, leading to a poor user experience. PageRank by contrast can be computed off-line (at the time of the crawl or shortly thereafter), thereby having no negative impact on query time latency.

Obstacles that needed to be overcome

As outlined above, the PageRank score of a web page can be thought of as a proxy of its quality. One of the uses of this quality estimate was to schedule Google's crawling policy, giving preference to web pages with high PageRank that had not been downloaded previously or were in need of refreshment. In order to achieve this, the PageRank computation had to be performed in parallel with the crawl, and scores had to be propagated to the frontier of the web graph (the portion of the web graph that is known but has not been explored yet). This would be straightforward if the frontier was maintained by a database, but database technology of the 1990s was not capable of coping with the scale, update frequency and query frequency that would be required to do so. Consequently, Google engineers had to devise custom disk-based algorithms to perform the PageRank computation and the crawl scheduling.

The web experienced an exponential growth phase during its first twenty years, posing another serious challenge. Since the time complexity of the PageRank computation is (at best) linear to the size of the web graph, the computational resources required to perform this computation had to grow in sync with the growth of the web. This feat required periodic reinvention of the PageRank computation infrastructure, introducing distributed processing to scale the computation, and fault-tolerance protocols to cope with unavoidable machine failures.

Significant references

Patents

/1/ Lawrence Page. Method for Node Ranking in a Linked Database. Provisional patent application serial number 60/035,205, filed January 10, 1997. Media:Ref01-Provisional-Patent-Application-60-035205.pdf

/2/ Lawrence Page. Method for Node Ranking in a Linked Database. US Patent 6,285,999, filed January 9, 1998, issued September 4, 2001. Media:Ref02-Patent-US6285999.pdf

/3/ Lawrence Page. Method for Scoring Documents in a Linked Database. US Patent 6,799,176, filed July 6, 2001, issued September 8, 2004. Media:Ref03-Patent-US6799176.pdf

/4/ Lawrence Page. Method for Node Ranking in a Linked Database. US Patent 7,058,628, filed July 2, 2001, issued June 6, 2006. Media:Ref04-Patent-US7058628.pdf

/5/ Lawrence Page. Scoring Documents In A Linked Database. US Patent 7,269,587, filed December 1, 2004, issued September 11, 2007. Media:Ref05-Patent-US7269587.pdf

/6/ Lawrence Page. Annotating links in a document based on the ranks of documents pointed to by the links. US Patent 7,908,277, filed February 5, 2007, issued March 15, 2011. Media:Ref06-Patent-US7908277.pdf

/7/ Lawrence Page. Scoring Documents In A Linked Database. US Patent 8,126,884, filed January 28, 2010, issued February 28, 2012. Media:Ref07-Patent-US8126884.pdf

/8/ Lawrence Page. Scoring Documents In A Database. US Patent 8,131,715, filed January 19, 2010, issued March 6, 2012. Media:Ref08-Patent-US8131715.pdf

/9/ Lawrence Page. Scoring Documents In A Database. US Patent 8,131,717, filed January 19, 2010, issued March 6, 2012. Media:Ref09-Patent-US8131717.pdf

/10/ Lawrence Page. Scoring Documents In A Linked Database. US Patent 8,195,651, filed February 2, 2010, issued June 5, 2012. Media:Ref10-Patent-US8195651.pdf

/11/ Lawrence Page. Scoring Documents In A Linked Database. US Patent 8,521,730, filed May 30, 2012, issued August 27, 2013. Media:Ref11-Patent-US8521730.pdf

/12/ Lawrence Page. Scoring Documents In A Linked Database. US Patent 8,725,726, filed September 14, 2012, issued May 13, 2014. Media:Ref12-Patent-US8725726.pdf

Original PageRank publications and talks

/13/ Lawrence Page, Sergey Brin, Rajeev Motwani, and Terry Winograd. The Pagerank Citation Ranking: Bringing Order to the Web. Technical Report, Dept. of Computer Science, Stanford University, January 1998. Media:Ref13-PageEtAl1998-PageRankCitationRanking.pdf

/14/ Sergey Brin and Lawrence Page. The Anatomy of a Large-Scale Hypertextual Web Search Engine. Proceedings of the 7th International World Wide Web Conference, April 1998. Winner of Seoul Test-of-time award [blog post]. Short version: Media:Ref14a-BrinPage1998-AnatomySearchEngine.pdf Long version: Media:Ref14b-BrinPage1998-AnatomySearchEngine.pdf

/15/ Larry Page. PageRank: Bringing order to the Web. Talk at Stanford, undated. [slides]

Contemporary work on link-based ranking algorithms

/16/ Jon M. Kleinberg. Authoritative Sources in a Hyperlinked Environment. Technical Report RJ 10076, IBM Research Division, May 1997. Media:Ref16-Kleinberg1997-AuthoritativeSources.pdf

/17/ Jon M. Kleinberg. Authoritative Sources in a Hyperlinked Environment. In Proceedings of ACM-SIAM Symposium on Discrete Algorithms, 668-677, January 1998. Media:Ref17-Kleinberg1998-AuthoritativeSources.pdf

/18/ Jon M. Kleinberg. Authoritative Sources in a Hyperlinked Environment. Journal of the ACM 46(5):604-632, September 1999. Media:Ref18-Kleinberg1999-AuthoritativeSources.pdf

/19/ Massimo Marchiori. The Quest for Correct Information on the Web: Hyper Search Engines. In Proceedings of the 6th International World Wide Web Conference, April 1997. (https://www.w3.org/People/Massimo/papers/WWW6/) Media:Ref19-Marchiori1997-QuestForCorrectInformation.pdf

/20/ Yanhong Li and Larry Rafsky. Beyond relevance ranking: hyperlink vector voting. In Proceedings of RIAO’97: Computer-Assisted Information Searching on Internet - Volume 2, pages 648-650, June 1997. Media:Ref20-LiRafsky1997-BeyondRelevanceRanking.pdf

/21A/ Leo Katz. Leo Katz. A new status index derived from sociometric analysis. Psychometrika, 18(1):39–43, 1953.

/21B/ Eugene Garfield. Citation analysis as a tool in journal evaluation. Science 178(1060):471-479, November 1972. Media:Ref21-Garfield1972-CitationAnalysis.pdf

Publications building on the original PageRank work

/22/ Bundit Manaskasemsak and Arnon Rungsawang. An Efficient Partition-Based Parallel PageRank Algorithm. In Proceedings of the 11th International Conference on Parallel and Distributed Systems, pages 257-263, November 2005. Media:Ref22-ManaskasemsakRungsawang2005-EfficientParallelPageRank.pdf

/23/ Ali Cevahir, Cevdet Aykanat, Ata Turk, B. Barla Cambazoglu. Site-Based Partitioning and Repartitioning Techniques for Parallel PageRank Computation. IEEE Transactions on Parallel and Distributed Systems, 22(5):786-802, May 2011. Media:Ref23-CevahirEtAl2011-SiteBasedPartitioning.pdf

/24/ Frank McSherry. A Uniform Approach to Accelerated PageRank Computation. In Proceedings of the 14th international conference on World Wide Web, pages 575-582, May 2005. Media:Ref24-McSherry2005-UniformApproach.pdf

/25/ Sepandar D. Kamvar, Taher H. Haveliwala, Christopher D. Manning, Gene H. Golub. Extrapolation methods for accelerating PageRank computations. In Proceedings of the 12th international conference on World Wide Web, pages 261-270, May 2003. Media:Ref25-KamvarEtAl-ExploitingBlockStructure.pdf

/26/ Sepandar D. Kamvar, Taher H. Haveliwala, Christopher D. Manning, Gene H. Golub. Exploiting the Block Structure of the Web for Computing PageRank. Stanford University Technical Report, 2003. Media:Ref26-KamvarEtAl2003-ExtrapolationMethods.pdf

/27/ Junghoo Cho, Hector Garcia-Molina and Lawrence Page. Efficient Crawling through URL Ordering. In Proceedings of the 7th International World Wide Web Conference, April 1998. Media:Ref27-ChoGarciaMolinaPage1998-EfficientCrawling.pdf

/28/ Marc Najork and Janet L. Wiener. Breadth-First Search Crawling Yields High-Quality Pages. In Proceedings of the 10th international conference on World Wide Web, pages 114-118, May 2001. Media:Ref28-NajorkWiener2001-BreadthFirstSearchCrawling.pdf

/29/ Taher H. Haweliwala. Topic-Sensitive PageRank. In Proceedings of the 11th International World Wide Web Conference, pages 517-526, May 2002. Media:Ref29-Haveliwala2002-TopicSensitivePageRank.pdf

/30/ Taher Haweliwala. Topic-Sensitive PageRank: A Context-Sensitive Ranking Algorithm for Web Search. IEEE Transaction on Knowledge and Data Engineering 15(4): 784-796, July/August 2003. Media:Ref30-Haveliwala2003-TopicSensitivePageRank.pdf

/31/ Glen Jeh and Jennifer Widom. Scaling Personalized Web Search. Proceedings of the 12th International Conference on World Wide Web, pages 271-279, May 2003. Media:Ref31-JehWidom2003-ScalingPersonalizedWebSearch.pdf

/32/ Monica Bianchini, Marco Gori and Franco Scarselli. Inside PageRank. ACM Transactions on Internet Technology 5(1):92-128, February 2005. Media:Ref32-BianchiniGoriScarselli2005-InsidePageRank.pdf

/33/ Amy Langville and Carl D. Meyer. Deeper Inside PageRank. Internet Mathematics 1(3):335-380, December 2003. Media:Ref33-LangvilleMeyer2003-DeeperInsidePageRank.pdf

/34/ Pavel Berkhin. A Survey on PageRank Computing. Internet Mathematics 2(1): 73-120, December 2004. Media:Ref34-Berkhin2004-SurveyPageRankComputing.pdf

/35/ David Gleich. PageRank Beyond the Web. SIAM Review 57(3):321-363, 2015. Media:Ref35-Gleich2015-PageRankBeyondTheWeb.pdf

/36/ Sebastiano Vigna. Spectral Ranking. arXiv preprint 0912.0238v15, February 2019. Media:Ref36-Vigna-SpectralRanking.pdf

/37/ Karthikeyan Sankaralingam, Simha Sethumadhavan, James C. Browne. Distributed Pagerank for P2P Systems. Proceedings of the 12th IEEE International Symposium on High Performance Distributed Computing, pages 58-68, June 2003. Media:Ref37-SankaralingamEtAl2003-DistributedPRforP2PSystems.pdf

/38/ Atsushi Yamamoto, Daisuke Asahara, Tomoko Itao, Satoshi Tanaka, Tatsuya Suda. Distributed Pagerank: A Distributed Reputation Model for Open Peer-to-Peer Networks. Proceedings of the 2004 International Symposium on Applications and the Internet Workshops, pages 389-394, January 2004. Media:Ref38-YamamotoEtAl2004-DistributedReputatioModel.pdf

/39/ Tak-Pang Lau, Dexter Siu. Distributed Ranking over Peer-to-Peer Networks. Proceedings of the 13th International World Wide Web Conference, Alternate track papers & posters, pages 356-357, May 2004. Media:Ref39 LauSiu2004 - DistributedRankingOverP2P.pdf

/40/ Josiane Xavier Parreira, Sebastian Michel, Matthias Bender, Tom Crecelius, Gerhard Weikum. P2P Authority Analysis for Social Communities. Proceedings of the 33rd International Conference on Very Large Data Bases, pages 1398–1401, September 2007. Media:Ref40-ParreiraEtAl2007-P2PAuthorityAnalysis.pdf

Supporting materials

See references

Dedication Ceremony

A Celebration of 50 Years of the Internet

- Blog with Photos from "50 Years of the Internet" Event

- Eileen Clegg's Internet Evolution Tapestry as discussed by her and Vint Cerf

Martin Wasserman Interviews re: the 50th Anniversary of the Internet

- Internet Pioneer Vint Cerf

- Internet Historian Marc Weber

- IEEE History Committee Vice Chair Brian Berg

Map