Inventing the Computer

This article was initially written as part of the IEEE STARS program.

Citation

An information-processing industry based on punched cards began in the 1890s. It grew during the first half of the 20th century, becoming of great importance to businesses and governments. Punched card equipment became increasingly sophisticated and, with the incorporation of vacuum-tube electronics in the 1940s, a new type of device ultimately emerged, which we know today as the computer. A computer performs sequences of calculations automatically, including data handling, at electronic speeds. Furthermore, the program is itself stored and accessed electronically. Devices with these capabilities have dramatically changed the world since their commercial introduction in 1951.

Introduction

There are several places where one could argue the story of computing begins. An obvious choice would be antiquity, when nascent civilizations developed aids to counting and figuring such as pebbles (Latin calculi, from which comes the modern term “calculate”), counting boards (from which comes the modern term “counter top”), and the abacus, all of which survive into the 21st century. But these devices were not “computers” as we think of that term today.

Where Our Story Begins

The word “computer” implies a machine that not only calculates but also takes over the drudgery of its sister activity, the storage and retrieval of data. Thus a more appropriate place to start the story would be the 1890s, when the American inventor Herman Hollerith developed, for the U.S. Census Bureau, the punched card and a suite of machines that sorted, retrieved, counted, and performed simple calculations on the data punched into those cards. The inherent flexibility of his system led to many applications beyond the Census. In 1896 Hollerith founded the Tabulating Machine Company to market his invention. In 1911 it was combined with other companies to form the Computing-Tabulating-Recording Company, and in 1924 the head of C-T-R, Thomas J. Watson, changed its name to the International Business Machines Corporation, today’s IBM. Businesses in the United States and around the world used punched card equipment, supplied by IBM and its competitors (especially Remington Rand), throughout the 20th century; the punched card died out only in the 1980s.

As punched card equipment was being marketed, other inventors were developing mechanical calculators that performed the basic functions of arithmetic. The Felt “Comptometer,” also a product of the late 19th century, could only add, but a skilled operator could calculate at very high speeds with it, and it found applications primarily in business and accounting. Other, more complex and costly machines could multiply and even divide, and these found use in engineering and science, especially astronomy. In the 1920s and 1930s, observatories and government research laboratories hired teams of clerks, often women, to use these machines to reduce experimental data. (The clerks were called “computers,” a name later transferred to the electronic machine, which was invented in the 1940s precisely to replace the work they were doing.)

These electromechanical machines, installed in ensembles and operated by skilled teams of people, provided a great increase in productivity for business, engineering, and science through the 1930s. They laid the foundation for the invention of the computer that followed. Many of the U.S. companies that supplied commercial computers in the 1950s, including IBM, Burroughs, and Remington Rand, were among the principal suppliers of punched card equipment and mechanical calculators in the 1930s and 1940s.

But the computer as it emerged in the late 1940s and early 1950s was more than just an extension of these machines. The first major difference was that a computer was programmable: not only could it calculate and store data, it could also perform sequences of operations, which themselves could be modified based on the outcome of an earlier calculation. In the pre-computer era, that was done by human beings who might, for example, carry decks of cards from one punched card device to another, or by the calculator operator performing one calculation if a result was positive, another if negative, and so on. The second major difference was that the computer, as it has come to be defined, operates at electronic speeds: orders of magnitude faster than the electromechanical devices of the pre-World War II era. Only when those qualities (calculation, storage, programmability, and high speed) were combined did one have a true computer, and only then can the “computer age” be said to have arrived.

Major Steps Forward

From about 1939 through about 1951 a number of devices were invented in the United States, Germany, England, and elsewhere, which offered some combination of these properties. Sometimes they lacked one or two of the attributes mentioned above, or they were severely unbalanced in one or more. For that reason there are numerous, often acrimonious, claims as to which was the “first” computer. In Germany at the onset of World War II, Konrad Zuse conceived and built a programmable machine using telephone relays, the “Z3,” which was in operation by 1941. Zuse’s colleague, Helmut Schreyer, proposed building a version using vacuum tubes, but it was never completed. In the U.K., the government built a set of electronic devices, operating at very high speeds, to unscramble encrypted messages sent by the Germans. The British “Colossus” may have the strongest claim to being the first electronic computer, but its operations were restricted to code breaking, and it had little numerical calculating ability.

These European projects had their counterparts in the United States. In Cambridge, Massachusetts, Howard Aiken conceived of a large automatic calculator, which used modified IBM punched card equipment for calculation and storage, and which was programmed by holes punched in long rolls of paper tape. The machine, called the “Harvard Mark I,” was operational by 1944. Like the Zuse machines, it did not use electronic circuits. Similar calculators were built during the war under the direction of George Stibitz at the Bell Telephone Laboratories, using electromechanical telephone relays and switches to calculate.

During World War II, IBM engineers C. D. Lake and B. M. Durfee modified IBM punched card equipment so that it could execute short sequences of calculations automatically, in effect replacing the human operators who might carry decks of punched cards from one machine to another. Several of these “Pluggable Sequence Relay Calculators” were built and heavily used, mainly at the U.S. Army’s Aberdeen (Maryland) Proving Ground. Another important modification of IBM equipment was made after the war by one of its customers, the Northrop Aircraft Company. Engineers at Northrop linked machines to one another and used punched cards to store programs as well as data. IBM later improved the arrangement and, beginning in 1949, marketed it as the “Card Programmed Calculator.” From these examples one can see that the notion of automatic computing was very much in the air.

In 1940 John V. Atanasoff, a physicist at Iowa State College, proposed an electronic device that could solve systems of linear equations by executing a sequence of operations that followed the familiar principle of Gaussian elimination. He built a modest but nearly functional prototype that he demonstrated by 1941. Like the Colossus, it lacked general-purpose programmability, but it was arguably the first machine built in the United States to compute at electronic speeds. With the U.S. entry into the war, he had to abandon the project before it could be made fully operational.

These are among the main contenders for the title of first computer, and advocates for each of them have often fought among themselves, in meetings, in journals, and even in the courtroom, for what they feel is the rightful place for that particular machine in history. The Atanasoff machine has been the subject of the most controversy, primarily because among those who visited him in Ames, Iowa, in June 1941 was John Mauchly, one of the creators of the ENIAC, described next.

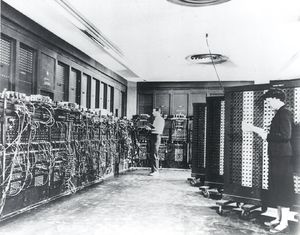

With that controversy in mind, one might well argue that the computer age began in February 1946, when the U.S. Army publicly unveiled the ENIAC (Electronic Numerical Integrator and Computer) at the Moore School of Electrical Engineering in Philadelphia. The “C” in the acronym stood for “computer,” a term deliberately chosen by its designers, J. Presper Eckert and John Mauchly, to evoke the rooms in which women “computers” operated calculating machines. With its roughly 18,000 vacuum tubes, the ENIAC was touted as being able to calculate the trajectory of a shell fired from a cannon faster than the shell itself traveled. That was a well-chosen example, as such calculations were the reason the Army spent over half a million dollars (equivalent to several million current dollars) on the risky and unproven technique of calculating with unreliable vacuum tubes. The ENIAC used tubes for both storage and calculation, and thus could solve complex mathematical problems at electronic speeds.

Eckert and Mauchly designed the ENIAC to be programmed by plugging cables between its various computing elements in different configurations, effectively rewiring the machine for each new problem. That was an inelegant method, but it worked. It was the only practical way to program a high-speed device until storage devices of commensurate speed were invented: there was little point in having a device that could calculate at electronic speeds if its instructions were fed into it at mechanical speeds. Reprogramming it to do a different job might require days, even if, once rewired, it could calculate an answer in minutes. For that reason, historians are reluctant to call the ENIAC a true “computer,” a term they reserve for machines that can be flexibly reprogrammed to solve a variety of problems.

Today’s computers do all sorts of things: they communicate, manage databases, send mail, and gather and display news, photographs, music, and movies. They also compute, that is, solve mathematical equations, typically with a spreadsheet program or an advanced mathematics software package. That last function is among the least used by most consumers, but it is the function for which the computer was invented, and from which the machine got its name.

Stored-Program Computers

The ENIAC was a remarkable machine, but if it had been only a one-time development for the U.S. Army, it would hardly be remembered. It is remembered, for at least two reasons. First, in addressing the shortcomings of the ENIAC, its designers conceived of the stored-program principle, which has been central to the design of all digital computers ever since. This principle, combined with the invention of high-speed memory devices, provided a practical alternative to the ENIAC’s cumbersome plugboard programming. By storing a program and its data in a common high-speed memory, not only could programs be executed at electronic speeds; the programs could also be operated on as if they were data. This allowed the steps of a calculation to be modified while it was being carried out, and it also paved the way for today’s high-level languages, whose programs are translated into machine instructions by compiler programs running on the computer itself.
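To make the stored-program idea concrete, here is a minimal sketch of a toy machine written in Python. The instruction set (LOAD, ADD, SUB, STORE, JUMP_IF_POSITIVE, HALT) and the encoding of each instruction as opcode × 100 + address are hypothetical and do not correspond to any historical computer. Because instructions and numbers share one memory, the program below treats one of its own instructions as data, incrementing that instruction’s address field on each pass so that it steps through a list of numbers. This is the kind of modification of a calculation’s steps “while it was being carried out” that the paragraph above describes.

```python
# A hypothetical toy stored-program machine (not any historical instruction set).
# Every memory word is an integer; an instruction is encoded as opcode * 100 + address.
HALT, LOAD, ADD, STORE, SUB, JUMP_IF_POSITIVE = 0, 1, 2, 3, 4, 5


def encode(opcode: int, address: int = 0) -> int:
    """Pack an opcode and an address into a single memory word."""
    return opcode * 100 + address


def run(memory: list[int], max_steps: int = 1000) -> list[int]:
    """Fetch, decode, and execute words from memory until HALT."""
    acc = 0  # the single accumulator register
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        opcode, address = divmod(memory[pc], 100)  # decode the fetched word
        pc += 1
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == SUB:
            acc -= memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == JUMP_IF_POSITIVE:
            if acc > 0:
                pc = address
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Program and data live in the same 100-word memory.
memory = [0] * 100
program = [
    encode(LOAD, 60),             # 0: acc <- running total
    encode(ADD, 50),              # 1: acc <- acc + next number (address field gets rewritten)
    encode(STORE, 60),            # 2: running total <- acc
    encode(LOAD, 1),              # 3: read the instruction at address 1 as ordinary data
    encode(ADD, 61),              # 4: add 1 to it, i.e. advance its address field
    encode(STORE, 1),             # 5: write the modified instruction back into memory
    encode(LOAD, 62),             # 6: acc <- numbers remaining
    encode(SUB, 61),              # 7: decrement the count
    encode(STORE, 62),            # 8: save the count
    encode(JUMP_IF_POSITIVE, 0),  # 9: loop while numbers remain
    encode(HALT),                 # 10: stop
]
memory[0:len(program)] = program
memory[50:54] = [3, 1, 4, 1]  # the numbers to sum
memory[60] = 0                # running total
memory[61] = 1                # the constant 1
memory[62] = 4                # how many numbers to sum

run(memory)
print(memory[60])  # 9
print(memory[1])   # 254: the ADD instruction now points just past the numbers
```

Many early machines lacked index registers, so modifying an instruction’s address field in this way was a common technique for stepping through a table of values; later designs added dedicated hardware for it.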

A report written by John von Neumann in 1945, the “First Draft of a Report on the EDVAC,” written after he became involved with the ENIAC project, focused on machine properties of concern to a programmer rather than on engineering problems. His report proved to be influential and led to several projects in the U.S. and Europe. Some accounts called these computers “von Neumann machines,” a misnomer since his report did not fully credit those on the ENIAC team who contributed to the concept.

In the years since, the definition of a computer has changed, so that the criterion of programmability, mentioned earlier as one of the four that define a computer, now encompasses the internal storage of that program in high-speed memory. Computers of this type are formally referred to as stored-program computers, or simply as “computers.” Computing devices having the architecture of the ENIAC or the IBM Card Programmed Calculator are now more correctly referred to as programmable calculators.

From 1945 to the early 1950s a number of “firsts” emerged that implemented this now-expanded stored-program concept. In England an experimental stored-program computer, called the Manchester “Baby,” was completed in 1948 and then used to carry out a rudimentary demonstration of the concept. It has been claimed to be the first stored-program electronic computer in the world to execute a program. The following year, under the direction of Maurice Wilkes in Cambridge, England, a computer called the EDSAC (Electronic Delay Storage Automatic Computer) was completed and began operation. Unlike the Manchester “Baby,” it was a fully functional and useful stored-program computer.

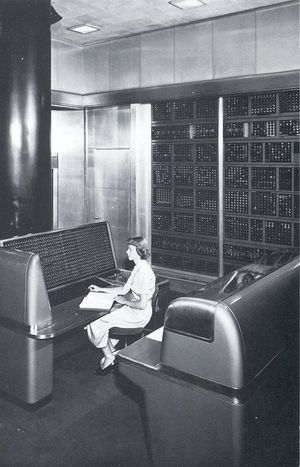

Some American efforts came to fruition at about the same time, among them the SEAC (Standards Eastern Automatic Computer), built at the U.S. National Bureau of Standards and operational in 1950. The IBM Corporation built an electronic computer, the SSEC, which contained a memory device that stored instructions that could be modified during a calculation (hence the name, which stood for Selective Sequence Electronic Calculator). The SSEC was operational in 1948. It provided IBM with basic patents on the stored-program concept and served as a training ground for early computer programmers, but it had limited influence on later computer designs. As historians note, it was not a general-purpose stored-program computer that followed the von Neumann model. IBM’s Model 701, discussed later, was that company’s first such product.

The second reason for placing the ENIAC at such a high place in history is that its creators, J. Presper Eckert and John Mauchly, almost alone in the early years, sought to build and sell a more elegant, stored-program follow-on to the ENIAC for commercial applications. The ENIAC was conceived, built, and used for scientific and military applications. The UNIVAC, the commercial computer, was conceived and marketed as a general-purpose computer suitable for any application that one could program it for. Hence the name: an acronym for “Universal Automatic Computer.”

In spite of financial difficulties for the manufacturer, Eckert and Mauchly’s UNIVAC was well received by its customers. Presper Eckert, the chief engineer, designed it conservatively, making the UNIVAC surprisingly reliable in spite of its use of vacuum tubes that had a tendency to burn out frequently. Sales were modest, but the technological breakthrough of a commercial, stored-program electronic computer was significant. Eckert and Mauchly had founded their own company to build it, but in another harbinger of the volatile computer industry that followed, the company was unable to survive on its own and was absorbed by Remington Rand in 1950.

Although the UNIVAC was not an immediate commercial success, it was clear that the electronic computer, if not following Eckert and Mauchly’s design exactly, was eventually going to replace the machines of an earlier era. That led in part to the decision by the IBM Corporation to enter the field with a large-scale electronic computer of its own, the IBM 701. For its design the company drew on the advice of von Neumann, whom it hired as a consultant. Von Neumann had proposed using a special-purpose vacuum tube developed at RCA for memory, but these tubes proved difficult to produce in quantity. The 701’s memory units were instead modified cathode-ray tubes, a technique that had been invented in England and used on the Manchester computer.

IBM initially called the 701 a “Defense Calculator,” an acknowledgement that its customers were primarily aerospace and defense companies, which had access to large amounts of government funds. Government agencies, including military ones, were also among the first customers for the UNIVAC, now being marketed by Remington Rand. The 701 was optimized for scientific rather than business applications, but it was a general-purpose computer. IBM also introduced machines that were more suitable for business applications, although it would take several years before business customers could obtain a computer that had the utility, reliability, and relatively low cost of the punched card equipment they had been depending on.

By the mid-1950s IBM and Remington Rand were joined by other vendors, while advanced research on memory devices, circuits, and above all programming was being carried out in U.S. and British universities. Turning an experimental, one-of-a-kind research project into reliable, marketable, and useful products took most of the 1950s. But once set in motion, the process was hard to stop. The “computer age” had arrived.

Acknowledgements

The author thanks members of the STARS Editorial Board and others for review and constructive criticism of this article, with special thanks to William Aspray and Emerson Pugh for helpful comments and suggestions.

Timeline

- 1939, J.V. Atanasoff conceives of electronic calculating circuits

- 1940, Bell Labs Model I: first demonstration of remote access to a calculator

- 1941, Zuse "Z3": first programmable electromechanical calculator, Berlin

- 1944, “Colossus”: British electronic code-breaking machine in use

- 1944, Harvard Mark I is unveiled, Cambridge, Massachusetts

- 1945, EDVAC Report, John von Neumann: description of the stored-program principle

- 1946, ENIAC is unveiled at Moore School, Philadelphia

- 1948, SSEC: IBM's programmable electronic "Super Calculator" is unveiled

- 1948, Manchester (U.K.) "Baby" computer: first demonstration of stored-program principle

- 1948, Card Programmed Calculator is developed at Northrop Aircraft, using IBM equipment

- 1949, EDSAC: first operational, practical stored-program computer, Cambridge, England

- 1950, SEAC: first stored-program electronic computer to operate in U.S.

- 1951, LEO: first commercial computer, for J. Lyons & Co., U.K.

- 1951, UNIVAC: first U.S. commercial stored-program computer system

- 1952, IBM 701: first commercial stored-program computer system from IBM

Bibliography

References of Historical Significance

Konrad Zuse. 1993. The Computer, My Life (translation of the original German Der Computer, Mein Lebenswerk, Berlin: Springer, 1984). Berlin: Springer.

John von Neumann. 1945. “First Draft of a Report on the EDVAC”. Philadelphia, Moore School of Electrical Engineering, University of Pennsylvania, 30 June 1945. Published in Arthur Burks and William Aspray, eds., John von Neumann's Papers on Computing and Computer Theory (MIT Press and Tomash Publishers, 1986).

References for Further Reading

Charles J. Bashe, Lyle R. Johnson, John H. Palmer, and Emerson W. Pugh. 1986. IBM’s Early Computers. Cambridge, MA: MIT Press.

Alice R. Burks and Arthur W. Burks. 1988. The First Electronic Computer: The Atanasoff Story. Ann Arbor, MI: University of Michigan Press.

Paul E. Ceruzzi. 1983. Reckoners: The Prehistory of the Digital Computer. Westport, Connecticut: Greenwood Press.

Nancy Stern. 1981. From ENIAC to UNIVAC: An Appraisal of the Eckert-Mauchly Computers. Bedford, MA: Digital Press.

About the Author

Paul E. Ceruzzi is the curator of guidance, navigation, and control at the National Air and Space Museum of the Smithsonian Institution in Washington, D.C. His work includes research, writing, planning exhibits, collecting artifacts, and lecturing on the subjects of microelectronics, computing, and control as they apply to the practice of air and space flight.

Dr. Ceruzzi attended Yale University and the University of Kansas, from which he received a Ph.D. in American Studies in 1981. Before joining the staff of the National Air and Space Museum, he taught history of technology at Clemson University in South Carolina. He is the author of numerous articles, chapters, and books, including Computing: A Concise History; A History of Modern Computing; Internet Alley: High Technology in Tysons Corner, 1945-2005; Beyond the Limits: Flight Enters the Computer Age; and Reckoners: The Prehistory of the Digital Computer.