First-Hand:McNally's Challenge, Conceptualizing the Naval Tactical Data System - Chapter 3 of the Story of the Naval Tactical Data System

By David L. Boslaugh, Capt. USN, Retired

A response to this article has been submitted here, referencing some of its claims.

Project Lamp Light

We last heard of Lieutenant Commander Irvin McNally in 1951 when he was assigned to the Navy Electronics Laboratory (NEL) in San Diego, California, where he was the lab’s radar project officer. There he had been experimenting with a rudimentary digital computer to store radar data inputs, to develop track information on the inputs, and to compute interceptor control vectors. Because of his work in radar data automation, McNally in late 1954 would find himself temporarily assigned to the Office of Naval Research to participate in a special study. In April 1954, the Secretary of the Navy had directed the Chief of Naval Research, Rear Admiral Frederick R. Furth, to establish a study group to assist in formulating a research and development program in the area of improving the combined capabilities of the services to provide continental air defense of the United States. Special emphasis was to be put on improving coordination among the services. The study was to last six months, was to be staffed by 100 military and civilian technical personnel from the three services, and was to be directed by the SAGE project managers at the MIT Lincoln Laboratory. They were to consider how the Navy could better interact with the SAGE system and provide more sea based input and support to the air defense of the Continental U.S. They were also to consider how the concepts of the SAGE system could be extended to navy combatant ships at sea. The study was named “Project Lamp Light.”

The Office of Naval Research assigned Commander Gould Hunter (promoted to captain during the study) as navy project officer, and the Navy Electronics Laboratory was requested to temporarily assign McNally as Capt. Hunter's assistant and also to be a member of the study Data Processing Working Group. Mindful that McNally would have to interact with senior officers from the other services, the Office of Naval Research arranged for McNally to be spot promoted again, this time to full commander. [Graf, R. W., Case Study of the Development of the Naval Tactical Data System, National Academy of Sciences, Committee on the Utilization of Scientific and Engineering Manpower, Jan 29, 1964, p III-3]

The study group convened in September 1954 and worked until January 1955. Its final report was issued in February 1955. This report was declassified in 2008, and can now be downloaded from the internet. It can be seen from the report that the principal focus with respect to proposed Navy research and development was the provision of multiple sources of sea based data to data processing centers ashore in order to keep track of Soviet surface ships and submarines which might be capable of launching missile attacks on the continental United States. Such data were to come from navy assets such as airborne early warning aircraft, navy picket ships, radio direction finding, new ground based long range radars, and even proposed arrays of sub-surface at-sea buoys having active and passive sonar systems. Other inputs such as sail plans and daily position reports from cooperating ships and nations would also be processed to help separate friendly ship and submarine tracks from potentially hostile ships and submarines.

Toward the end of the study, McNally became concerned that no noticeable study emphasis was being put on providing U.S. Navy combatant ships with SAGE-like automatic radar data processing capabilities, which he felt was part of the study tasking. This lower emphasis was most likely because data processing facilities of the day needed large rooms to accommodate the massive vacuum-tube based computers then in use. In critical applications such as SAGE, dual back-up computer systems were usually required, doubling the demand for space. Furthermore, the heated filaments of the vacuum tubes demanded massive amounts of electrical power, and to make the power requirement worse, more power was needed for air conditioning to carry away the heat from the filaments. To most analysts, requirements for computing system space and power made combatant ship digital data processing systems out of the question. McNally had other ideas, however.

Finally, in February 1955, he approached Nathaniel Rochester, a senior IBM engineer, and told him of his concern that, so far, the task of considering an automated navy combatant ship interconnection with SAGE had not been addressed. Rochester responded that he would be very open to a concept paper by McNally which described his ideas for navy AAW combat direction automation. [McNally, CDR Irvin L., Letter to CAPT Edward Svendsen, 23 May 1988]

As part of the study, McNally had toured SAGE sites, and he was well aware of the massive size of the SAGE computers. He felt that digital computers would be essential to automate what CIC plotting teams had been doing by hand. But computers as massive as the SAGE machines would take a special ‘command ship’ which would handle radar data plotting for an entire task force. Furthermore, in a battle this ship would most likely become the focus of enemy attack, and its loss would leave the task force blind. He felt that all combatant ships, from guided missile frigates on up, should have self contained automated radar data plotting and weapons assignment capability. Also, all the ships in a task force which were so equipped should be able to share the radar tracking and plotting load and all see the same task force battle picture.

To share the tracking load, McNally envisioned a fully automatic radio data link tying all the task force computers together. The system would automatically assign track numbers and parcel out the tracking load to participating ships. He called for special radios with digital modulator/demodulators (MODEMS) to tie the computer network together. He realized that navy ships are not only constantly moving, but also changing positions with respect to each other, so each ship’s computer system would have to know precisely where ‘own-ship’ was. This would require an automated dead reckoning navigation capability built into each ship’s computer system.
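As an illustration of what an automated dead reckoning calculation involves, the following is a minimal sketch in modern Python. It is not drawn from any NTDS program; the function name, the flat-plane approximation, and the units (nautical miles, knots, hours, heading in degrees clockwise from north) are all assumptions chosen for clarity.

```python
import math

def dead_reckon(x_nm, y_nm, heading_deg, speed_kn, hours):
    """Advance an own-ship position by dead reckoning (hypothetical helper).

    Position is (east, north) in nautical miles on a flat plane, which is an
    assumption that only holds over short distances. Heading is in degrees
    clockwise from north; speed is in knots.
    """
    distance = speed_kn * hours              # nautical miles travelled
    heading = math.radians(heading_deg)
    east = x_nm + distance * math.sin(heading)
    north = y_nm + distance * math.cos(heading)
    return east, north

# A ship steaming due east at 20 knots for half an hour moves 10 miles east.
east, north = dead_reckon(0.0, 0.0, 90.0, 20.0, 0.5)
```

Repeating such an update at short intervals from gyrocompass heading and speed log inputs would let each ship's computer keep its own position current between navigational fixes.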

Next McNally wrote the general performance requirements for two new shipboard radars that would be designed specifically to interface with the new system. There would be a 300-mile range two-dimensional set to initially detect air targets at long range, and there would be a 250-mile three-dimensional height finding radar that could feed accurate target designation coordinates to missile and gun fire control systems.

Mindful of WW II Japanese saturation air attacks, McNally called for the system in each ship to simultaneously process 1,000 target tracks, and show whether they were air, surface or submarine tracks, and to show whether they were friendly, hostile or unidentified. (This would later be reduced to 250 tracks.) He called for operator displays that would enable operators to pick off the coordinates of a raw radar blip and send it to the computer for processing. The displays would also receive processed target track information which would be shown as artificial target symbols superimposed on the raw radar display. He also called for the system to show amplifying information on a selected target track such as track number, course, speed, and altitude. The system, on operator command, was to be able to show only air tracks, only surface or submarine tracks, or any combination at any individual user console. Furthermore, on operator command the system was to show only the targets tracked by own-ship, or only targets coming in from the data link.

The above is only the beginning. The system was also to compute the relative threat of each hostile or unknown target, rank the threats, and recommend assignment of the most threatening to gun or missile systems on specific ships, or to an airborne interceptor. In the case of assigning a target to an interceptor, the system was to recommend a specific interceptor and automatically compute altitude and steering vectors for the intercept. All participating ships were to be shown each weapon/target assignment by means of ‘pairing lines’ drawn on the radar scopes.

McNally still had one overwhelming problem with his concept. How could a powerful digital computer be condensed to a size that would fit into a small compartment on a ship? He also thought he had the answer: transistors! When he had been in charge of the Bureau of Ships Radar Development Branch he had many occasions to work with Bell Telephone Laboratories. Among other things they had not only briefed him on their newly invented transistor, but also had given him one to experiment with. He would propose that these new ‘tactical’ computers be built with transistors rather than vacuum tubes, and with a few quick calculations he determined that a transistorized computer with the requisite processing power could be boiled down to something the size of, perhaps, a large refrigerator.

He called his concept the Navy Tactical Data System, and condensed the description to 15 typewritten pages. The senior MIT representative on Project Lamp Light was Dr. Jerrold Zacharias, who had been involved with the SAGE system and its massive vacuum tube computers for years. When Zacharias read McNally’s paper and of his proposed transistorized computers he remarked, “One of us is wrong, Mac.” He did endorse the paper and sent it on to Nathaniel Rochester, who also positively endorsed it. [McNally, Irvin L., Interview by D. L. Boslaugh, 20 April 1993]

OPNAV Says “Tell Us More!”

The NTDS concept paper was soon in the hands of the Chief of Naval Research, who immediately sent it on with a strong positive endorsement to Admiral Arleigh A. Burke, the Chief of Naval Operations. Burke liked the paper and directed that a task be issued to the Chief of the Bureau of Ships to expand the concept into a ‘technical and operational requirements’ document describing the proposed system in more technical detail, with particular emphasis on the state of the art of computer, transistor, radio data link, and radar display technologies that would underpin the new system. History then repeated itself; McNally soon found he had another set of orders directing him to report for duty once again to the Bureau of Ships where, in mid 1955, he was assigned to the Electronics Design and Development Division headed up by Captain W. F. “Tex” Cassidy.

McNally had a good grasp of the latest in radar technology and radio communications and a fairly good grasp of emerging transistor technology, and he proceeded rapidly at first on expanding his concept paper. But he felt uncomfortable about elaborating the details of a transistorized digital computer; he was not sure whether anyone had yet tried to transistorize a large scale digital computer, although he felt it could be done. He discussed his concern with Capt. Cassidy, who thought for a few seconds and replied, “I have just the man you need.”

Security requirements did not allow the Captain to tell McNally that Cdr. Edward Svendsen was at that moment working on a transistorized version of the Atlas II codebreaking computer, but he could tell him that Svendsen was building a transistorized large scale computer. When given the name, McNally responded, “Svendsen? I once knew an ensign named Svendsen. I taught him radar. Could it be the same one?” Cassidy responded, “Yes, I believe he is the same one, because he is also a specialist in radar and combat information center operations.” McNally was delighted he would be working with his former student. [McNally, Irvin L., Interview with D. L. Boslaugh, 20 April 1993]

Conceptualizing a New Anti-Air Battle Management System

Capt. Cassidy arranged for a common office for the two commanders, from which he had the telephone removed to minimize distractions. Within a few days Svendsen had assigned all of his normal responsibilities to assistants, and the two were ensconced in the small office calculating and writing. In addition to expanding the technical details of McNally’s concept paper, the Chief of Naval Operations wanted quantified estimates of system requirements wherever possible. They digested study reports of task force anti-air battle exercises which had measured flow paths and quantity of intra- and intership message passing needed to manage the AAW battle. They knew that modern radars were accurate to about one part in 1000 when measuring position data, which meant that ten bits of binary data would be needed to measure each radar position coordinate. They soon had estimates of how much computer memory and speed would be needed to process all the target tracks in a saturation attack on a task force.
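The ten-bit figure follows from simple arithmetic: nine bits distinguish only 512 values, while ten bits distinguish 1,024, which is the first power of two to reach 1,000. The short Python sketch below works this out; the function name is hypothetical and is used here only to illustrate the calculation.

```python
def bits_for_resolution(parts: int) -> int:
    """Smallest number of binary digits able to distinguish `parts` levels.

    Hypothetical helper for illustration: (parts - 1).bit_length() is the
    integer form of ceil(log2(parts)), avoiding floating point rounding.
    """
    if parts < 1:
        raise ValueError("parts must be a positive integer")
    return max(1, (parts - 1).bit_length())

# One part in 1000 needs ten bits, since 2**9 = 512 < 1000 <= 1024 = 2**10.
coordinate_bits = bits_for_resolution(1000)
```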

Both commanders were well versed in shipboard electronic equipment and systems, and they drew on their past system design experiences. McNally’s concept called for the tactical data system to be installed in various combatant ship classes ranging from attack carriers, to heavy cruisers, to guided missile frigates on the smaller side. The larger ships would have larger, higher capacity tactical data systems than the smaller ships; however, they wanted to use the same equipment types on all ship classes. They envisioned building large or small systems out of standardized modular equipment ‘building blocks.’ They also called for using the smallest number of module types possible. For example, many different kinds of specialists, ranging from target tracking data input operators to air intercept controllers, use radar displays in a combat information center. They called for one standard display type to serve the needs of all the specialists. They also wanted the capability to expand the system in any ship class by merely adding more standardized modules without the need to redesign the system.

Navy fighting ships and the systems therein, from main propulsion machinery to electronic systems, must be built to absorb damage and keep on fighting. McNally and Svendsen specified that no one ‘single point’ failure could be allowed to bring down the tactical data system in a ship. Therefore there must be at least two of every critical item in a ship’s system if failure of that equipment could shut the entire system down. For example, there must be at least two computers in each ship’s system. However, in normal operation both computers would always be running at the same time, and if one failed, the remaining computer would be switched to carry the total load, but with reduced system capability until the failed computer was repaired. Thus, they developed the concept of graceful system degradation in response to equipment failures. In addition to full adherence to shipboard electronics environmental militarization hardening requirements, they called for use of highly reliable electronics components.
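The idea of graceful degradation can be pictured with a small Python sketch. Everything in it is an assumption made for illustration: the task names, the load and priority numbers, the per-computer capacity, and the rule for deciding which functions are shed. It is not a description of how NTDS programs actually allocated work.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Task:
    name: str
    load: int      # hypothetical processing units required
    priority: int  # lower number = more essential

# Illustrative values only; none of these figures come from the source.
EXAMPLE_TASKS = [
    Task("target_tracking", 60, 1),
    Task("threat_evaluation", 40, 2),
    Task("intercept_control", 50, 3),
    Task("auxiliary_displays", 50, 4),
]

def tasks_to_run(tasks, computers_up, capacity_each):
    """Pick the tasks the surviving computers can carry.

    Tasks are considered from most to least essential; a task that no
    longer fits in the remaining capacity is shed.
    """
    budget = computers_up * capacity_each
    kept = []
    for task in sorted(tasks, key=lambda t: t.priority):
        if task.load <= budget:
            kept.append(task.name)
            budget -= task.load
    return kept

full = tasks_to_run(EXAMPLE_TASKS, computers_up=2, capacity_each=100)
degraded = tasks_to_run(EXAMPLE_TASKS, computers_up=1, capacity_each=100)
```

With both computers running, all four example tasks fit. With one computer down, only the two most essential tasks are kept, so the system continues to operate with reduced capability rather than stopping outright.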

It was not their intent to build a system that took the place of human judgment; rather, it was to perform the many repetitive tasks done manually by present CIC teams and aid their judgment. For example, it would not pull the firing key of a missile system, but it would point out the most threatening attackers and rank them in priority order. It would also recommend the most appropriate ship and gun or missile system to engage the target, or it would recommend the most advantaged interceptor. In the case of a proposed air intercept, it would allow the intercept controller to graphically try various intercept geometries. [Svendsen, Capt. Edward C., Interviews with D. L. Boslaugh, 20 Jan. 1995, 3 Feb. 1995] [McNally interview]

No Special Purpose Computers

Svendsen worked out the speed and memory size of the computers needed in the various ship classes. He told this writer that after he had laboriously laid out the processing load of each of the many computer program subroutines, such as target tracking, surface navigation, threat evaluation and weapons assignment, and a host of others, he arbitrarily doubled speed and memory size requirements; “And it is a good thing I did.” They were going to need a fast computer! At the time he knew most of the computer design ‘experts’ in the nation and he consulted with many of them.

Most told him that, to get the execution speed he needed, he should use special purpose ‘wired program’ machines which gave the advantage of almost zero memory access times when executing program instructions. Only the memory containing data and processing results would be made of slower random access memory. The two commanders rejected the special purpose computer idea because they could foresee the need for constant computer program changes as the system evolved, and special purpose machines needed physical reconfiguration to change computer programs, rather than just loading a new program into random access memory. They made a firm decision to base their system on general purpose, stored program machines.

That their tactical computers would use newly emerging magnetic core memories was a foregone conclusion; however, they had another conceptual problem in specifying their computers. The different ship classes would need computers of different processing capacities. At first McNally and Svendsen considered a series of machines made of standard modules to form large or small computers. Svendsen, however, realized from his computer building experiences that, even with standard modules, each computer in the series was going to have to be treated as a separate computer development with all the attendant design work and testing.

Svendsen consulted with long time friend Dr. Joseph J. Eachus who had been a World War II cryptographer both at Bletchley Park in England and later at CSAW where he had been in charge of technically specifying codebreaking machines, and later the Atlas computers. Eachus was now at the National Security Agency where he was the driving force behind the Solo transistorized codebreaking computer. Eachus proposed a solution that was simple in some respects but would pose a new technical challenge in one area. He noted that the two commanders were already calling for two computers working together in each ship class for casualty control purposes, thus they were going to need the capability to trade data and communicate with each other automatically, something that no digital computer had been designed to do yet.

Dr. Eachus reasoned that if the computers on each ship were going to need automatic inter-computer communications, why not use any number of identical computers in each ship class automatically talking to each other? For example, an aircraft carrier might need four computers, whereas a guided missile frigate might call for only two. Eachus coined the phrase “unit computer” to describe his concept. This way they would have to design, test, and manufacture only one computer type. The two commanders liked the concept and incorporated it into the technical and operational requirements document. [Svendsen interviews with D. L. Boslaugh]

How can Human Beings Talk to the Computers?

McNally had already done a lot of experimental work in devising radar displays from which human operators could enter raw video target coordinates into a digital computer, and on which symbols could be displayed representing processed target tracks. However, McNally had used only computer driven dots or small circles to represent his artificial computer-driven targets, whereas the system concept called for displaying a number of different symbol types to represent whether a particular target was friendly, hostile, or unidentified, and whether it was an air, surface or submarine target, as well as many other attributes. Also the displays would have to be able to show speed/heading vectors, weapon/target pairing lines, sector boundary lines, and other graphics. They were going to be a state-of-the-art challenge.

The operator display concept also called for auxiliary data readouts at each display station that could show amplifying information on a selected target. Amplifying information would include calculated speed, heading, and altitude. The displays would also need a series of ‘action buttons’ to convey user decisions to the computer. In addition to the foregoing, some operators would need a way to manually send information such as ship’s navigational position, electronic countermeasures bearing lines, or interceptor fuel and ammunition status to the computer program. They decided to call these devices ‘keysets.’ [Svendsen and McNally interviews with D. L. Boslaugh]

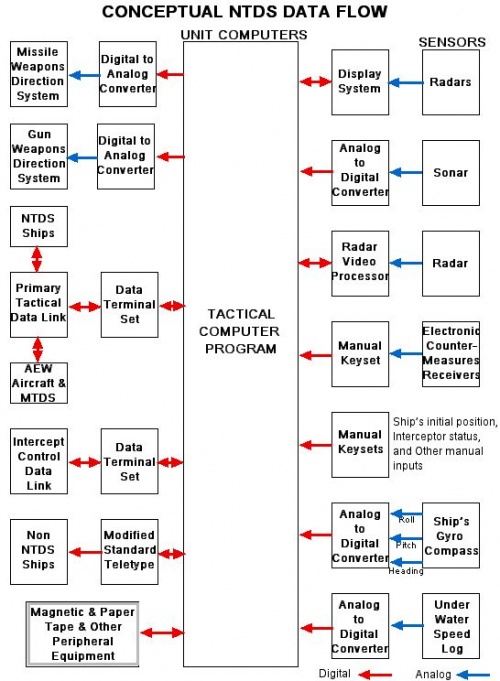

How can Digital Computers Talk to the Real World?

The computers had to communicate with more than just human operators via the display system and keysets. For example, the computer program would need ship’s heading, roll, and pitch angle inputs from the ship’s gyrocompass and stable vertical sensor, as well as ship’s speed through the water from the underwater speed log. Thus, electronic converters would be needed to change the analog ‘synchro’ voltages from these sensors to digital format. Other “A/D” converters would be needed to bring such analog signals as sonar range and bearing on submarines into NTDS. Conversely, digital-to-analog conversion devices would be needed to send processed target information to gun and missile weapons direction systems. And engagement status information would be needed back from the weapon systems. [Svendsen and McNally interviews with D. L. Boslaugh]

How will the Computers Talk to the Rest of the Task Force?

The Inter-Computer Data Link

McNally and Svendsen envisioned a primary data link that would interconnect all the computers of NTDS equipped units in a task force. Navy task forces of the mid 1950s were usually less than 20 miles in diameter, which is within the range capability of line-of-sight ultra high frequency (UHF) radios, thus they specified use of UHF radios with special “data terminal sets” (nowadays called “modulator/demodulators” or modems). The data terminal sets would include a control panel which would allow operators on the “net control ship” to assign net addresses to each participating unit. They also specified that any NTDS equipped unit should be equipped to take over as net control ship regardless of the ship’s size. This last requirement would later incur the wrath of some senior naval officers who didn’t like the idea of the CO of a guided missile frigate taking over as net control ship because they equated ‘net control’ with task force command. The requirement would stick, regardless.

The primary data link was to be capable of transmitting processed target track information as well as weapons availability and engagement status among all participating units so that all units would see the entire task force picture on their NTDS radar consoles. Once the data link was set up and running, inter-computer communications were to be fully automatic with no operator intervention needed to control the link. Furthermore, any participating unit must be able to enter or detach from the net without interrupting data link operation. [Svendsen and McNally interviews with D. L. Boslaugh]

The Interceptor Control Data Link

The two commanders were aware the Bureau of Aeronautics was working on an automatic interceptor control data link called the Discrete Address Data System (DADS) that was to be used in the new McDonnell F4H Phantom fighter. They therefore specified that the shipboard DADS terminal would be equipped with an interface that would allow it to communicate with the NTDS computer. This would allow NTDS equipped ships to automatically steer these interceptors to their targets.

The Teletype Data Link

Guided missile frigates, almost the size of cruisers, would be the smallest ships equipped with NTDS, but processed NTDS track and engagement information could be very useful to the smaller combatant ships, such as destroyers. To enable them to receive such information they specified that each NTDS equipment suite would include a standard navy communications teletype modified to communicate with the unit computers. Thus any small combatant could receive NTDS track information in a teletype printout, which could then be manually plotted.

Automatic Radar Detection and Tracking?

In his concept paper, McNally had stated that the two new NTDS compatible radars he called for would be capable of being retrofitted with automatic target detection and tracking equipment when technology allowed. In the meantime he had called for the NTDS display system to be capable of interfacing with any shipboard radar, and manual target detection and tracking would be the norm for early NTDS. In a bold step, to get a leg up on automatic target detection and tracking, the two commanders also called for the Technical and Operational Requirements document to include development of an experimental automatic radar video processor to be tested with the prototype NTDS at sea. [Svendsen and McNally interviews with D. L. Boslaugh]

Other Participating Tactical Data Systems

The Chief of Naval Operations (OPNAV) task to the Bureau of Ships called for developing a comprehensive approach to naval tactical data handling which would not be limited to just surface ships. The navy already had airborne early warning (AEW) airplanes that carried powerful surveillance radars, and could transmit their radar picture in analog form to shipboard radar scopes via special shipboard receivers. McNally and Svendsen called for equipping AEW airplanes with an analog to digital data terminal that could convert their radar picture to digital format that could be transmitted over the primary data link. They did not call for a computerized airborne tactical data system but only for a capability to transmit the AEW target data over the link in standard link data formats. We will find in a later chapter that the Bureau of Aeronautics would go far beyond this limited requirement.

The U.S. Marine Corps had its own aviation squadrons having two primary tasks: 1. ground attack in support of ground troops, and 2. anti-air protection of Marine Corps ground troops and facilities. The marines thus had ground based mobile radars and air traffic control/fighter direction facilities which the Technical and Operational Requirements document also called for digitizing and integrating with the envisioned shipboard and airborne tactical data systems. Again, the medium for connection among the Marines’ systems and the shipboard systems would be the tactical data link.

Later OPNAV would issue the requirement for the Naval Tactical Data System to be compatible with the Royal Navy’s seagoing tactical data system development called ‘Action Data Automation’ (ADA). This interoperating capability was also to be achieved by establishing common data link standards between the USN and the RN. [Svendsen and McNally interviews with D. L. Boslaugh]

OPNAV Says “Do It!”

The two commanders spent a month working on the technical and operational requirements paper, and in the meantime, while they had been calculating and writing, the Chief of Naval Operations had directed the Office of Naval Research to form a Navy Committee on Tactical Data Processing to review the document and provide recommendations to the CNO. The primary purpose of the committee was to ensure that the navy took a comprehensive and well coordinated approach to concurrently developing a shipboard tactical data system, an airborne system and a Marine Corps system. To this end the committee had members from ONR, OPNAV, the Marine Corps, the Bureau of Ships, the Bureau of Aeronautics, and the Bureau of Ordnance.

The Chief of the Bureau of Ships positively endorsed the technical and operational requirements document in late summer of 1955 and sent it on to the Committee on Tactical Data Processing. The Committee made no technical changes in the paper, and only a few editorial changes, whereupon the document was passed to OPNAV with the recommendation that the three material bureaus be tasked and funded to start design and development of the three systems.

Any navy research and development project that has the potential to result in a new device or system going to sea or into the field is assigned a “sponsor” in the Office of the Chief of Naval Operations. It is the duty of the sponsor to justify and obtain project funds from Congress, to continually update and defend the project budget, to develop plans and a budget for navy support facilities such as training schools and, in this case, computer programming centers, to obtain the manning billets that will be required by the new system, and to review the progress of the material bureaus in developing the new system.

In November 1955 the CNO tentatively approved the operational requirement for the Naval Tactical Data System and assigned sponsorship to the office of OP-34, the Director of the OPNAV Surface Type Warfare Division, Rear Admiral William D. Irvin. His was the office that sponsored combat information center improvements, search radars, and shipboard weapon systems. Captain Cassidy, in charge of the BUSHIPS Electronics Design and Development Division, was authorized to reprogram funds from his other projects, and he was able to funnel in a half million dollars to get the new program going. Then the Chief of Naval Operations threw the startup project a curve ball.

In 1955 the average length of time from approval of a new operational requirement to the start of at-sea evaluation of the new equipment or system intended to fulfill that requirement was 13 years! The CNO said he wanted the Naval Tactical Data System ready for operational evaluation in five years. McNally and Svendsen responded they could do it if allowed to take a much different management approach than the normal navy practice of the day. Much of a typical R&D project time was spent in giving frequent and endless program reviews for numerous senior officials in the navy and Department of Defense R&D management hierarchy, including the OPNAV sponsor office. Furthermore, much of a project manager’s time and effort was spent in defending his project funding from predatory fund hunters and reprogrammers in the navy, the Department of Defense, and Congress. Senior budgeteers especially seemed to think that if a project manager would not continuously fight for his funding, then the project must not be of much value to the navy.

OPNAV agreed that the NTDS project review process would be greatly streamlined; in particular, the sponsor’s representatives would not hold formal periodic reviews, but would rather stay abreast of the project by working daily with the BUSHIPS program managers. Admiral Irvin also agreed that, although BUSHIPS would develop the project budget, it would be the sponsor’s job to justify, obtain, and defend annual project funding; and, above all, to keep it stable.

The CNO, Admiral Arleigh Burke, was already sensing a growing opposition among most naval officers to the idea of a computerized AAW battle management system. In 1955 hardly anyone knew what an electronic digital computer was, and they equated the new tactical data system with taking battle management orders from a robot or a giant electronic brain. The idea did not sit well with them and many senior naval officers openly avowed they would resist the development and do everything in their power to stop it.

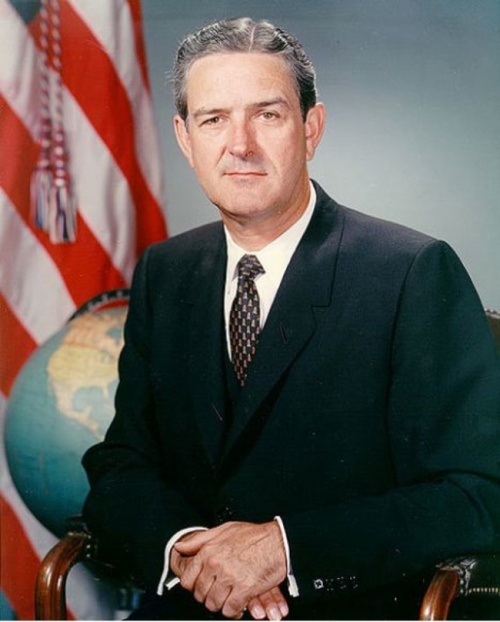

To counter this growing negative sentiment Admiral Burke let it be known that he would not tolerate any interference with or attempts to micromanage the project either within the navy or outside of it. Later, starting in early 1961, Secretary of the Navy John B. Connally also strongly seconded Burke on this position. Connally had been a task force fighter direction officer in the Pacific during WW II and had managed task force air defense against Japanese saturation Kamikaze attacks. He knew what the new system was about.

Burke also directed that he would personally review and approve all program manager assignments, including officer assignments to support NTDS in the sponsor’s office, and that a normal project tour would be at least five years in both offices. He knew how detrimental rapid project officer rotations were to a project. We were told that when Burke informed the Chief of Naval Personnel of this decision, the BUPERS chief complained that if Burke was going to do his work for him, Burke really didn’t need him as the Chief of BUPERS. History does not record the CNO’s rejoinder.

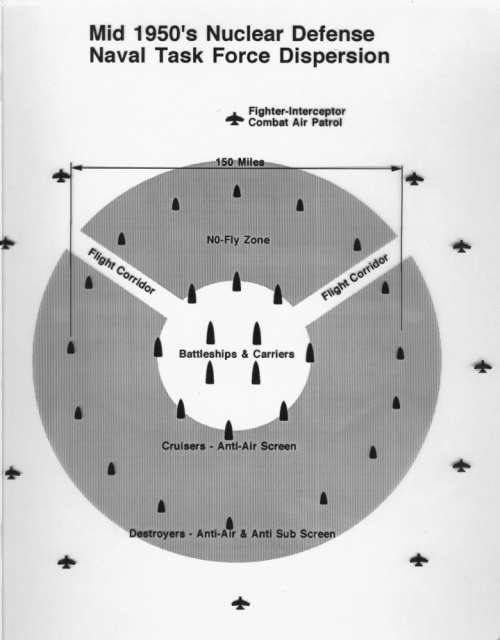

On 24 April 1956 OPNAV sent formal approval of the technical and operational requirements document. The approval letter required one change. During World War II maximum task force diameter had been 20 miles, but with the advent of the H-bomb the navy was planning to increase task force diameters to as much as 150 miles to prevent all the ships in a task force from being engulfed in one nuclear blast. And, to hedge their bets, they called for a data link range of 300 miles. This meant the primary tactical data link would have to shift from line-of-sight UHF radios to high frequency (HF) radios having the power and special antennas to propagate a ground wave out to 300 miles. [Swenson, Capt Erick N., Stoutenburgh, Capt. Joseph S., and Mahinske, Capt Edmund B., “NTDS - A Page in Naval History,” Naval Engineers Journal, Vol 100, No 3, May 1988, ISSN 0028-1425, pp 52-56] [Svendsen and McNally interviews with D. L. Boslaugh]

In the meantime, while OPNAV had been reviewing the technical and operational requirements paper, Vice Admiral Thomas S. Combs, Deputy Chief of Naval Operations for Air, had been scanning all navy research and development projects in a search for uncommitted research and development funds. He found enough, when combined with Capt. Cassidy’s half million, to fund the NTDS Engineering Test System for an initial year. [Swallow, Capt Chandler E., Letter to D. L. Boslaugh, 16 Nov. 1994]

The OPNAV approval letter also designated the Bureau of Ships as lead developing agency for the new project. In response, the Bureau set up the NTDS Branch of the Electronics Design and Development Division which was headed up by Capt. Cassidy. This division already had four other branches covering radar, communications, sonar, and special applications (which included the secret codebreaking computers). [Bureau of Ships, Technical Development Plan for the Naval Tactical Data System (NTDS) - SS 191, 1 Apr. 1964, pp 4-5]

Capt. Cassidy assigned NTDS equipment development responsibilities to three of these four branches. The Radar Branch was to develop the display subsystem and its interfaces with shipboard radars, the Communications Branch was to develop the automatic data link equipment, and the Special Applications Branch was to develop the transistorized unit computer and its peripheral equipment. Cassidy named Cdr. McNally as NTDS ‘project coordinator’ with Cdr. Svendsen to be his assistant. In an unusual twist, intended to help streamline project management, the two project coordinators were assigned not only responsibility for system development but also responsibility for production equipment procurement, shipboard installation, and lifetime maintenance. In effect, this was the partial beginning of a true project office.

The two commanders, however, were given the job only of coordinating the efforts of the equipment developing branches, without line authority over the NTDS work these branches were doing. They would provide management technical direction by making ‘recommendations’ to Capt. Cassidy. Furthermore, the project coordinators did not have control over the project funds; again, they would provide recommendations to the BUSHIPS Assistant Chief for Research and Development regarding how much funding should be allocated to the equipment development branches. [Graf, R. W., Case Study of the Development of the Naval Tactical Data System, National Academy of Sciences, Committee on the Utilization of Scientific and Engineering Manpower, Jan 29, 1964, p IV-1]

We will see in later chapters that this authority only to ‘coordinate’ and ‘recommend’ would not last long, and the NTDS Branch would eventually morph into a project office having full management, technical, and funding authority not only over NTDS development but also over all aspects of the system life cycle, becoming one of the navy’s first true project offices. To move on to the next chapter, "Building the U. S. Navy's First Seagoing Digital System", the reader may click on this link.