First-Hand: The Foundation of Digital Television: the origins of the 4:2:2 component digital standard

Contributed by Stanley Baron, IEEE Life Fellow [1]

Introduction

By the late 1970s, the application of digital technology in television production was widespread. A number of digital television products had become available for use in professional television production. These included graphics generators, recursive filters (noise reducers), time base correctors and synchronizers, and standards converters, among others.

However, each manufacturer had adopted a unique digital interface. As a result, these digital devices, when formed into a workable production system, had to be interfaced at the analog level, thereby forfeiting many of the advantages of digital processing.

Most broadcasters in Europe and Asia employed television systems based on 625/50 scanning (625 lines per picture, repeated 50 fields per second), with the PAL color encoding system used in much of Western Europe, Australia, and Asia, while France, the Soviet Union, Eastern Europe, and China used variations of the SECAM color encoding system. There were differences in luminance bandwidth: 5.0 MHz for B/G PAL, 5.5 MHz for PAL in the UK and nominally 6 MHz for SECAM. There were also legacy monochrome systems, such as 405/50 scanning in the UK and the 819/50 system in France. The color television system that was dominant in the Americas, Japan, and South Korea was based on 525/60 scanning, 4.2 MHz luminance bandwidth, and the NTSC color standard.

NTSC and PAL color coding are both linear processes. Therefore, analog signals in the NTSC format could be mixed and edited during studio processing, provided that color sub carrier phase relationships were maintained. The same was true for production facilities based on the PAL system. In analog NTSC and PAL studios it was normal to code video to composite form as early as possible in the signal chain so that each signal required only one wire for distribution rather than the three needed for RGB or YUV component signals. The poor stability of analog circuitry meant that matching separate channel RGB or YUV component signals was impractical except in very limited areas. SECAM employed frequency modulated coding of the color information, which did not allow any processing of composite signals, so the very robust SECAM composite signal was used only on videotape recorders and point to point links, with decoding to component signals for mixing and editing. Some SECAM broadcasters avoided the problem by operating their studios in PAL and recoding to SECAM for transmission.

The international community recognized that the world community would be best served if there could be an agreement on a single production or studio digital interface standard regardless of which color standard (525 line NTSC, 625 line PAL, or 625 line SECAM) was employed for transmission. The cost of implementation of digital technology was seen as directly connected to the production volume; the higher the volume, the lower the cost to the end user, in this case, the broadcasting community.

Work on determining a suitable standard was organized by the Society of Motion Picture and Television Engineers (SMPTE) on behalf of the 525/60 broadcasting community and the European Broadcasting Union (EBU) on behalf of the 625/50 broadcasting community.

In 1982, the international community reached agreement on a common 4:2:2 Component Digital Television Standard. This standard, as documented in SMPTE 125, several EBU Recommendations, and ITU-R Recommendation 601, was the first international standard adopted for interfacing equipment directly in the digital domain, avoiding the need to first restore the signal to an analog format.

The interface standard was designed so that the basic parameter values provided would work equally well in both 525 line/60 Hz and 625 line/50 Hz television production environments. The standard was developed in a remarkably short time, considering its pioneering scope, as the world wide television community recognized the urgent need for a solid basis for the development of an all digital television production system. A component-based (Y, R-Y, B-Y) system based on a luminance (Y) sampling frequency of 13.5 MHz was first proposed in February 1980; the world television community essentially agreed to proceed on a component based system in September 1980 at the IBC; a group of manufacturers supplied devices incorporating the proposed interface at a SMPTE sponsored test demonstration in San Francisco in February 1981; most parameter values were essentially agreed to by March 1981; and the ITU-R (then CCIR) Plenary Assembly adopted the standard in February 1982.

What follows is an overview of this historic achievement, providing a history of the standard's origins, explaining how the standard came into being, why various parameter values were chosen, the process that led the world community to an agreement, and how the 4:2:2 standard led to today's digital high definition production standards and digital broadcasting standards.

First considerations

It is understood that digital processing of any signal requires that the sample locations be clearly defined in time and space and, for television, processing is simplified if the samples are aligned so that they are line, field, and frame position repetitive yielding an orthogonal (rectangular grid) sampling pattern.

While the NTSC system color sub carrier frequency (fsc) was an integer sub multiple of the horizontal line frequency (fH) [fsc = (m/n) x fH], lending itself to orthogonal sampling, the PAL system color sub carrier employed a field frequency offset and the SECAM color system employed frequency modulation of the color sub carrier, which made sampling the color information contained within those systems a more difficult challenge. Further, since some European nations had adopted various forms of the PAL 625 line/50Hz composite color television standard as their broadcast standard and other European nations had adopted various forms of the SECAM 625 line/50Hz composite color television standard, the European community's search for a common digital interface standard implied that a system that was independent of the color coding technique used for transmission would be required.

Developments within the European community

In September 1972, the European Broadcasting Union (EBU) formed Working Party C, chaired by Peter Rainger, to investigate the subject of coding television systems. In 1977, based on the work of Working Party C, the EBU issued a document recommending that the European community consider a component television production standard, since a component signal could be encoded as either a PAL or SECAM composite signal just prior to transmission.

At a meeting in Montreux, Switzerland in the spring of 1979, the EBU reached agreement with production equipment manufacturers that the future of digital program production in Europe would be best served by component coding rather than composite coding, and the EBU established a research and development program among its members to determine appropriate parameter values. This launched an extensive program of work within the EBU on digital video coding for program production. The work was conducted within a handful of research laboratories across Europe and within a reorganized EBU committee structure including: Working Party V on New Systems and Services chaired by Peter Rainger; subgroup V1 chaired by Yves Guinet, which assumed the tasks originally assigned to Working Party C; and a specialist supporting committee V1 VID (Vision) chaired by Howard Jones. David Wood, representing the EBU Technical Center, served as the secretariat of all of the EBU committees concerned with digital video coding.

In 1979, EBU V1 VID proposed a single three channel (Y, R-Y, B-Y) component standard. The system stipulated a 12.0 MHz luminance (Y) channel sampling frequency and provided for each of the color difference signals (R-Y and B-Y) to be sampled at 4.0 MHz. The relationship between the luminance and color difference signals was noted sometimes as (12:4:4) and sometimes as (3:1:1). The proposal, based on the results of subjective quality evaluations, suggested these values were adequate to transparently deliver 625/50i picture quality.

The EBU Technical Committee endorsed this conclusion at a meeting in April 1980, and instructed its technical groups: V, V1, and V1 VID to support this effort.[2]

SMPTE organized for the task at hand

Three SMPTE committees were charged with addressing various aspects of world wide digital standards. The first group, organized in late 1974, was the Digital Study Group chaired by Charles Ginsburg. The Study Group was charged with investigating all issues concerning the application of digital technology to television. The second group was a Task Force on Component Digital Coding with Frank Davidoff as chairman. This Task Force, which began work in February 1980, was charged with developing a recommendation for a single worldwide digital interface standard.[3] While membership in SMPTE committees is generally open to any interested and affected party, the membership of the Task Force had been limited to recognized experts in the field. The third group was the Working Group on Digital Video Standards. This Working Group was charged with documenting recommendations developed by the Study Group or the Task Force and generating appropriate standards, recommended practices, and engineering guidelines.

In March 1977, the Society of Motion Picture and Television Engineers (SMPTE) began development of a digital television interface standard. The work was assigned by SMPTE's Committee on New Technology chaired by Fred Remley to the Working Group on Digital Video Standards chaired by Dr. Robert Hopkins.

By 1979, the Working Group on Digital Video Standards was completing development of a digital interface standard for NTSC television production. Given the state of the art at the time and the desire to develop a standard based on the most efficient mechanism, the Working Group created a standard that allowed the NTSC television video signal to be sampled as a single composite color television signal. It was agreed after a long debate on the merits of three times sub carrier (3fsc) versus four times sub carrier (4fsc) sampling that the Composite Digital Television Standard would require the composite television signal with its luminance channel and color sub carrier to be sampled at four times the color sub carrier frequency (4fsc) or 14.31818... MHz.

During the last quarter of 1979, agreement was reached on a set of parameter values, and the drafting of the Composite Digital Television Standard was considered completed. It defined a signal sampled at 4fsc with 8 bit samples. This standard seemed to resolve the problem of providing a direct digital interface for production facilities utilizing the NTSC standard.

By 1980, the Committee on New Technology was being chaired by Hopkins and the Working Group on Digital Video Standards was being chaired by Ken Davies.

Responding to communications with the EBU and so as not to prejudice the efforts being made to reach agreement on a world wide component standard, in January 1980, Hopkins put the finished work on the NTSC Composite Digital Television Standard temporarily aside so that any minor modifications to the document that would serve to meet possible world wide applications could be incorporated before final approval. Since copies of the document were bound in red binders, the standard was referred to as the "Red Book".

Seeking a Common Reference

The agenda of the January 1980 meeting of SMPTE's Digital Study Group included a discussion on a world wide digital television interface standard. At that meeting, the Study Group considered the report of the European community, and members of the EBU working parties had been invited to attend. Although I was not a member of the Study Group, I was also invited to attend the meeting.

It was recognized that while a three color representation of the television signal using Red, Green, and Blue (R, G, B) was the simplest three component representation, a more efficient, though more complex, component representation is to provide a luminance or gray scale channel (Y) and two color difference signals (R-Y and B-Y). The R-Y and B-Y components take advantage of the characteristics of the human visual system, which is less sensitive to high resolution information for color than for luminance. This allows for the use of a lower number of samples to represent the color difference signals without observable losses in the restored images. Color difference components (noted as I, Q or U, V or Dr, Db) were already in use in the NTSC, PAL, and SECAM systems to reduce the bandwidth required to support color information.

Members of the NTSC community present at the January 1980 Study Group meeting believed that the EBU V1 VID proposed 12.0 MHz, (3:1:1) set of parameters would not meet the needs for NTSC television post production particularly with respect to chroma keying, then becoming an important tool. In addition, it was argued that: (1) the sampling frequency was too low (too close to the Nyquist point) for use in a production environment where multiple generations of edits were required to accommodate special effects, chroma keying, etc., and (2) a 12.0 MHz sampling system would not produce an orthogonal array of samples in NTSC (at 12.0 MHz, there would be 762.666... pixels per line).

Orthogonal digital sampling of a television image is where each point in the resulting matrix is at right angles to the others, producing a sampling matrix that is repetitive line-to-line and field-to-field; repetitive in three dimensions: horizontally (H), vertically (V), and in time (T). While a digital sampling of an analog television signal can be achieved using any sampling frequency for the purposes of capture, storage and restoration of the original analog signal, orthogonal sampling is required to facilitate practical image processing, image manipulation, and the insertion of digitally generated image components.

The NTSC community offered for consideration a single three channel component standard based on (Y, R-Y, B-Y). This system stipulated a 4fsc (14.318 MHz) luminance sampling frequency equal to 910 x fH525, where fH525 is the NTSC horizontal line frequency. The proposal further provided for each of the color difference components to be sampled at 2fsc or 7.159 MHz. This relationship between the luminance and color difference signals was noted as (4:2:2). Adopting 4fsc as the luminance sampling frequency would facilitate transcoding of video recorded using the “single wire” NTSC composite standard with studio mixers and editing equipment based on a component video standard.

Representatives of the European television community present at the January 1980 Study Group meeting pointed to some potential difficulties with this proposal. The objections included: (1) that the sampling frequency was too high for use in practical digital recording at the time, and (2) a 14.318 MHz sampling system would not produce an orthogonal array of samples in a 625 line system (at 14.318 MHz, there would be 916.36... pixels per line).

During the January 1980 Study Group meeting discussion, I asked why the parties involved had not considered a sampling frequency that was a multiple of the 4.5 MHz sound carrier, since the horizontal line frequencies of both the 525 line and 625 line systems had an integer relationship to 4.5 MHz.

The original definition of the NTSC color system established a relationship between the sound carrier frequency (fs) and the horizontal line frequency (fH525) as fH525 = fs/286 = 15734.265... Hz, further defined the vertical field rate as fV525 = (fH525 x 2)/525 = 59.94006... Hz, and defined the color sub carrier as fsc = (fH525 x 455)/2 = 3.579545... MHz. Therefore, all the frequency components of the NTSC system could be derived as integer sub multiples of the sound carrier. [4]

The 625 line system defined the horizontal line frequency (fH625) = 15625 Hz and the vertical field rate fV625 = (fH625 x 2)/625 = 50 Hz. It was noted from the beginning that the relationship between fs and the horizontal line frequency (fH625) could be expressed as fH625 = fs/288. Therefore, any sampling frequency that was an integer multiple of 4.5 MHz (fs) would produce samples in either the 525 line or 625 line systems that were orthogonal.
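The frequency relationships described above can be checked with a few lines of exact arithmetic. The following is a minimal sketch, not part of the original papers: the names `samples_per_line` and `is_orthogonal` are hypothetical helpers introduced here, and "orthogonal" is taken simply to mean a whole number of samples per total line, as the discussion above implies.

```python
from fractions import Fraction

# Values taken from the text; Fraction keeps the arithmetic exact.
F_SOUND = Fraction(4_500_000)   # 4.5 MHz sound carrier (fs)
FH_525 = F_SOUND / 286          # 525 line horizontal frequency, ~15734.265 Hz
FV_525 = FH_525 * 2 / 525       # 525 line field rate, ~59.94 Hz
F_SC = FH_525 * 455 / 2         # NTSC color sub carrier, ~3.579545 MHz
FH_625 = F_SOUND / 288          # 625 line horizontal frequency, 15625 Hz
FV_625 = FH_625 * 2 / 625       # 625 line field rate, 50 Hz


def samples_per_line(f_sample, f_line):
    """Samples per total line period, as an exact fraction (hypothetical helper)."""
    return Fraction(f_sample) / f_line


def is_orthogonal(f_sample, f_line):
    """True when every line holds a whole number of samples (assumed criterion)."""
    return samples_per_line(f_sample, f_line).denominator == 1


# The two proposals tabled at the January 1980 meeting.
proposals = {"12.0 MHz": Fraction(12_000_000), "4fsc": 4 * F_SC}
for name, f_sample in proposals.items():
    for system, f_line in (("525", FH_525), ("625", FH_625)):
        n = samples_per_line(f_sample, f_line)
        print(f"{name} in {system} line system: {float(n):.3f} samples per line, "
              f"orthogonal={is_orthogonal(f_sample, f_line)}")
```

Each proposal yields a whole number of samples per line only in its home system, which is the objection each side raised against the other.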

I was asked to submit a paper to the Study Group and the Task Force describing the relationship. The assignment was to cover two topics. The first topic was how the 625 line/50Hz community might arrive at a sampling frequency close to 14.318 MHz. The second topic was to explain the relationship between the horizontal frequencies of the 525 line and 625 line systems and 4.5 MHz.

This resulted in my authoring a series of papers written between February and April 1980 addressed to the SMPTE Task Force explaining why 13.5 MHz should be considered the choice for a common luminance sampling frequency. The series of papers was intended to serve as a tutorial with each of the papers expanding on the points previously raised. A few weeks after I submitted the first paper, I was invited to be a member of the SMPTE Task Force. During the next few months, I responded to questions about the proposal, and I was asked to draft a standards document.

Crunching the numbers

The first paper I addressed to the Task Force was dated 11 February 1980.[5] This paper pointed to the fact that since the horizontal line frequency of the 525 line system (fH525) had been defined as 4.5 MHz/286 (or 2.25 MHz/143), and the horizontal line frequency of the 625 line system (fH625) was equal to 4.5 MHz/288 (or 2.25 MHz/144), any sampling frequency that was a multiple of 4.5 MHz/2 could be synchronized to both systems.

Since it would be desirable to sample color difference signals at less than the sampling rate of the luminance signal, then a sampling frequency that was a multiple of 2.25 MHz would be appropriate for use with the color difference components (R-Y, B-Y) while a sampling frequency that was a multiple of 4.5 MHz would be appropriate for use with the luminance component (Y).

Since the European community had argued that the (Y) sampling frequency must be lower than 14.318 MHz and the NTSC countries had argued that the (Y) sampling frequency must be higher than 12.00 MHz, my paper and cover letter dated 11 February 1980 suggested consideration of 3 x 4.5 MHz or 13.5 MHz as the common luminance (Y) channel sampling frequency (858 times the 525 line horizontal line frequency rate and 864 times the 625 line rate both equal 13.5 MHz).
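The selection argument above amounts to a small search: find the multiples of 4.5 MHz that lie strictly between the two bounds each community had argued for. The sketch below is illustrative only; the variable names are assumptions introduced here, and the bounds are the 12.0 MHz and 4fsc figures quoted in the text.

```python
from fractions import Fraction

F_SOUND = Fraction(4_500_000)        # 4.5 MHz sound carrier
FH_525 = F_SOUND / 286               # 525 line horizontal frequency
FH_625 = F_SOUND / 288               # 625 line horizontal frequency

LOWER = Fraction(12_000_000)         # NTSC side: must be higher than this
UPPER = 4 * (FH_525 * 455 / 2)       # European side: must be lower than 4fsc

# Multiples of 4.5 MHz strictly between the two bounds.
luma_candidates = [k * F_SOUND for k in range(1, 6) if LOWER < k * F_SOUND < UPPER]
print([float(f) / 1e6 for f in luma_candidates])   # candidate frequencies in MHz

luma = luma_candidates[0]
chroma = luma / 2                    # (4:2:2): color difference at half the luminance rate

for label, f_sample in (("Y", luma), ("R-Y / B-Y", chroma)):
    print(label,
          "525:", f_sample / FH_525, "samples per line,",
          "625:", f_sample / FH_625, "samples per line")
```

Under these assumptions only one candidate survives, and both the luminance and color difference rates give whole sample counts per line in both scanning systems.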

My series of papers suggested adoption of a component color system based on (Y, R-Y, B-Y) and a luminance/color sampling relationship of (4:2:2), with the color signals sampled at 6.75 MHz. In order for the system to facilitate standards conversion and picture manipulation (such as that used in electronic special effects and graphics generators), both the luminance and color difference samples should be orthogonal. The desire to be able to transcode between component and composite digital systems implied a number of samples per active line that was divisible by four.

The February 1980 note further suggested that the number of samples per active line period should be greater than 715.5 to accommodate all of the world wide community standards active line periods. While 720 samples per active line was not suggested until my next note (720 is the number found in Rec. 601 and SMPTE 125), 720 is the first value that “works.” 716 is the first number greater than 715.5 that is divisible by 4 (716 = 4 x 179), but it does not lend itself to standards conversion between 525 line component and composite color systems or provide sufficiently small pixel groupings to facilitate special effects or data compression algorithms.

Additional arguments in support of 720 were provided in notes I generated prior to IBC'80 in September. Note that 720 equals 6! [6! (6 factorial) = 6 x 5 x 4 x 3 x 2 x 1] = 2^4 x 3^2 x 5. This allows for many small factors, important for finding an economical solution to conversion between the 525 line component and composite color standards and for image manipulation in special effects and analysis of blocks of pixels for data compression. The composite 525 line digital standard had provided for 768 samples per active line. 768 = 2^8 x 3. The relationship between 768 and 720 can be described as 768/720 = (2^8 x 3)/(2^4 x 3^2 x 5) = (2^4)/(3 x 5) = 16/15. A set of 16 samples in the NTSC composite standard could be used to calculate a set of 15 samples in the NTSC component standard.
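The factor arithmetic above is easy to reproduce. The following sketch is illustrative; `prime_factors` and `divisor_count` are hypothetical helpers written for this example, not anything taken from the original notes.

```python
from fractions import Fraction
from math import factorial


def prime_factors(n):
    """Return {prime: exponent} for n by trial division (hypothetical helper)."""
    factors = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def divisor_count(n):
    """Number of divisors, a rough measure of how many block sizes divide n evenly."""
    count = 1
    for exponent in prime_factors(n).values():
        count *= exponent + 1
    return count


for samples in (716, 720, 768):
    print(samples, prime_factors(samples), "divisors:", divisor_count(samples))

# Composite (768) to component (720) samples per active line.
ratio = Fraction(768, 720)
print("768/720 =", ratio)
print("6! =", factorial(6))
```

The comparison between 716 and 720 shows the point made in the text: 716 has one large prime factor, while 720 breaks down entirely into small ones.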

Proof of Performance

At the September 1980 IBC conference, international consensus became focused on the 13.5 MHz, (4:2:2) system. However, both the 12.0 MHz and 14.318 MHz systems retained some support for a variety of practical considerations. Discussions within the Working Group on Digital Video Standards indicated that consensus could not be achieved without the introduction of convincing evidence.

SMPTE proposed to hold a “Component Coded Digital Video Demonstration” in San Francisco in February 1981 organized by and under the direction of the Working Group on Digital Video Standards to evaluate component coded systems. A series of practical tests/demonstrations were organized to examine the merits of various proposals with respect to picture quality, production effects, recording capability and practical interfacing, and to establish an informed basis for decision making.

The EBU had scheduled a series of demonstrations in January 1981 for the same purpose. SMPTE invited the EBU to hold its February meeting of the Bureau of the EBU Technical Committee in San Francisco to be followed by a joint meeting to discuss the results of the tests. It was agreed that demonstrations would be conducted at three different sampling frequencies (near 12.0 MHz, 13.5 MHz, and 14.318 MHz) and at various levels of performance.

From the 2nd through the 6th of February 1981 (approximately one year from the date of the original 13.5 MHz proposal), SMPTE conducted demonstrations at KPIX Television, Studio N facilities in San Francisco in which a number of companies participated. Each participating sponsor developed equipment with the digital interface built to the specifications provided. The demonstration was intended to provide proof of performance and to allow the international community to come to an agreement.[6]

The demonstration organizing committee had to improvise many special interfaces and interconnections, as well as create a range of test objects, test signals, critical observation criteria, and a scoring and analysis system and methodology.

The demonstrations were supported with equipment and personnel by many of the companies that were pioneers in the development of digital television and included: ABC Television, Ampex Corporation, Barco, Canadian Broadcasting Corporation, CBS Technology Center, Digital Video Systems, Dynair, Inc., KPIX Westinghouse Broadcasting, Leitch Video Ltd., Marconi Electronics, RCA Corporation and RCA Laboratories, Sony Corporation, Tektronix Inc., Thomson CSF, VG Electronics Ltd., and VGR Corporation. I participated in the demonstrations as a member of SMPTE's Working Group on Digital Video Standards, providing a Vidifont electronic graphics generator whose interface conformed to the new standard.

Figure 1 provides an overview of the equipment constructed and assembled for the demonstration at KPIX. On the left side are the racks housing a Vidifont graphics generator and a slide scanner. In the rear on the left is a component video tape recorder. At the right center are two NTSC tape recorders. Figure 2 provides a view of the control desk. The individual who is standing is the “producer,” Ken Davies, chair of SMPTE's Working Group on Digital Video Standards.

Developing an agreement

The San Francisco demonstrations proved the viability of the 13.5 MHz, (4:2:2) proposal. At a meeting in January 1981, the EBU had considered a set of parameters based on a 13.0 MHz (4:2:2) system. Additional research conducted by EBU members had shown that a (4:2:2) arrangement was needed in order to cope with picture processing requirements, such as chroma key, and the EBU members believed a 13.0 MHz system appeared to be the most economic system that provided adequate picture processing. Members of the EBU and SMPTE committees met at a joint meeting chaired by Peter Rainger in March 1981 and agreed to propose the 13.5 MHz, (4:2:2) standard as the world wide standard. By autumn 1981, NHK in Japan led by Mr. Tadokoro, had performed its own independent evaluations and concurred that the 13.5 MHz, (4:2:2) standard offered the optimum solution.

A number of points were generally agreed upon and formed the basis of a new world wide standard. They included:

- The existing colorimetry of EBU (for PAL and SECAM) and of NTSC would be retained for 625 line and 525 line signals respectively, as matrixing to a common colorimetry was considered overly burdensome;

- An 8 bit per sample representation would be defined initially, being within the state of the art, but a 10 bit per sample representation would also be specified since it was required for many production applications;

- The coded signal range should include head room (above white level) and foot room (below black level) to allow for production overshoots;

- The line length to be sampled should be somewhat wider than those of the analog systems (NTSC, PAL, and SECAM) under consideration to faithfully preserve picture edges and to avoid picture cropping;

- A bit parallel, sample multiplexed interface (e.g. transmitting R-Y, Y, B-Y, Y, R-Y, ...) was practical, but in the long term, a fully bit and word serial system would be desirable;

- The gross data rate should be recordable within the capacity of digital tape recorders then in the development stages by Ampex, Bosch, RCA, and Sony.
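Two of the points in the list above, the sample multiplexed interface and the gross data rate, can be illustrated with a short sketch. It is an illustration under stated assumptions: the word order follows the example given in the list (R-Y, Y, B-Y, Y) rather than any published word order, the function name `multiplex` is hypothetical, and the data rate counts video samples only, ignoring any synchronizing or ancillary words.

```python
F_LUMA = 13_500_000      # luminance sampling frequency, Hz
F_CHROMA = 6_750_000     # each color difference signal, Hz


def gross_bit_rate(bits_per_sample):
    """Video sample bit rate for Y plus two color difference channels."""
    words_per_second = F_LUMA + 2 * F_CHROMA
    return words_per_second * bits_per_sample


def multiplex(y, r_y, b_y):
    """Interleave samples in the order used as the example in the list above.

    Each pair of luminance samples shares one R-Y and one B-Y sample,
    which is what the (4:2:2) relationship implies.
    """
    if len(y) != 2 * len(r_y) or len(r_y) != len(b_y):
        raise ValueError("expected two Y samples per R-Y/B-Y pair")
    words = []
    for i in range(len(r_y)):
        words += [r_y[i], y[2 * i], b_y[i], y[2 * i + 1]]
    return words


print(gross_bit_rate(8) / 1e6, "Mbit/s at 8 bits per sample")
print(gross_bit_rate(10) / 1e6, "Mbit/s at 10 bits per sample")
print(multiplex([10, 11, 12, 13], [20, 21], [30, 31]))
```

The multiplexed stream runs at twice the luminance sampling frequency, since the two half rate color difference channels together contribute as many words as the luminance channel.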

The standard, as documented, provided for each digital sample to consist of at least 8 bits, with 10 allowed. The values for the black and white levels were defined, as was the range of the color signal. (R-Y) and (B-Y) became CR [= 0.713 (R-Y)] and CB [= 0.564 (B-Y)]. While the original note dated February 1980 addressed to the Task Force proposed a code of 252 (base 10) = (1111 1100) for ‘white’ at 100 IRE and a code of 72 (base 10) = (0100 1000) for ‘black’ at 0 IRE to allow capture of the sync levels, agreement was reached to better utilize the range of codes to capture the grey scale values with more precision and provide more overhead. ‘White’ was to be represented by an eight bit code of 240 (base 10) = (1111 0000) and ‘black’ was to be represented by an eight bit code of 16 (base 10) = (0001 0000). The original codes for defining the beginning and the end of picture lines and picture area were discussed, modified, and agreed upon, as well as synchronizing coding for line, field, and frame, each coding sequence being unique and not occurring in the video signal.

SMPTE and EBU organized an effort over the next few months to familiarize the remainder of the world wide television community with the advantages offered by the 13.5 MHz, (4:2:2) system and the reasoning behind its set of parameters. Members of the SMPTE Task Force traveled to Europe and to the Far East. Members of the EBU committees traveled to the then Eastern European bloc nations and to the members of the OTI, the organization of the South American broadcasters. The objective of these tours was to build a consensus prior to the upcoming discussion at the ITU in the autumn of 1981. The success of this effort could serve as a model to be followed in developing future agreements.

SMPTE 125

I was asked to draft a SMPTE standard document that listed the parameter values for a 13.5 MHz system for consideration by the SMPTE Working Group. Since copies of the document were bound in a green binder prior to final acceptance by SMPTE, the standard was referred to as the “Green Book”.

In April 1981, the draft of the standard titled “Coding Parameters for a Digital Video Interface between Studio Equipment for 525 line, 60 field Operation” was distributed to a wider audience for comment. This updated draft reflected the status of the standard after the tests in San Francisco and agreements reached at the joint EBU/SMPTE meeting in March 1981. The EBU community later requested a subtle change to the value of ‘white’ in the luminance channel, and it assumed the value of 235(base10). This change was approved in August 1981.

After review and some modification as noted above to accommodate European concerns, the “Green Book” was adopted as SMPTE Standard 125.
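The luminance code values discussed above (black and white at 72 and 252 in the February 1980 note, then 16 and 240, and finally 16 and 235) can be compared with a short sketch. Everything here is illustrative: `luma_code` and `to_bits` are hypothetical helpers, the rounding rule is an assumption, and the luminance weights 0.299, 0.587, and 0.114 are the conventional values for Y and are not quoted in this article.

```python
import math

# (black, white) eight bit codes at each stage described in the text.
LUMA_RANGES = {
    "February 1980 note": (72, 252),
    "interim agreement": (16, 240),
    "after August 1981 change": (16, 235),
}


def luma_code(level, black=16, white=235):
    """Map a luminance level (0.0 = black, 1.0 = white) to a code value.

    Rounds to the nearest code (assumed rule); levels outside 0..1 fall
    into the foot room and head room rather than being clipped here.
    """
    return black + math.floor(level * (white - black) + 0.5)


def to_bits(code):
    """Eight bit binary string for a code value."""
    return format(code, "08b")


for stage, (black, white) in LUMA_RANGES.items():
    print(f"{stage}: black {black} ({to_bits(black)}), "
          f"white {white} ({to_bits(white)}), "
          f"{white - black} steps from black to white")

# Assumed conventional luminance weights (not stated in the article).
KR, KB = 0.299, 0.114
cr_peak = 0.713 * (1 - KR)   # CR for a fully saturated red
cb_peak = 0.564 * (1 - KB)   # CB for a fully saturated blue
print(f"peak CR = {cr_peak:.4f}, peak CB = {cb_peak:.4f}")
```

Under the assumed weights, the 0.713 and 0.564 factors scale both color difference signals to a peak excursion of about half the luminance range, which suggests why two different multipliers appear.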

ITU-R Recommendation 601

The European Broadcasting Union (EBU) generated an EBU Standard containing a companion set of parameter values. The SMPTE 125 and EBU documents were then submitted to the International Telecommunication Union (ITU). The ITU, a treaty organization within the United Nations, is responsible for international agreements on communications. The ITU Radio Communications Bureau (ITU-R/CCIR) is concerned with wireless communications, including allocation and use of the radio frequency spectrum. The ITU also provides technical standards, which are called “Recommendations.”

Within the ITU, the development of the Recommendation defining the parameter values of the 13.5 MHz (4:2:2) system fell under the responsibility of ITU-R Study Group 11 on Television. The chair of Study Group 11, Prof. Mark I. Krivocheev, assigned the drafting of the document to a special committee established for that purpose and chaired by David Wood of the EBU. The document describing the digital parameters contained in the 13.5 MHz, (4:2:2) system was approved for adoption as document 11/1027 at ITU-R/CCIR meetings in Geneva in September and October 1981. A revised version, document 11/1027 Rev.1, dated 17 February 1982, and titled “Draft Rec. AA/11 (Mod F): Encoding parameters of digital television for studios” was adopted by the ITU-R/CCIR Plenary Assembly in February 1982. It described the digital interface standard for transfer of video information between equipment designed for use in either 525 line or 625 line conventional color television facilities. Upon approval by the Plenary Assembly, document 11/1027 Rev.1 became CCIR Recommendation 601.[7]

The Foundation for HDTV and Digital Television Broadcasting Services

The 4:2:2 Component Digital Television Standard allowed for a scale of economy and reliability that was unprecedented by providing a standard that enabled the design and manufacture of equipment that could operate in both 525 line/60Hz and 625 line/50Hz production environments. The 4:2:2 Component Digital Television Standard permitted equipment supplied by different manufacturers to exchange video and embedded audio and data streams and/or to record and playback those streams directly in the digital domain without having to be restored to an analog signal. This meant that the number of different processes and/or generations of recordings could be increased without the noticeable degradation of the information experienced with equipment based on analog technology. A few years after the adoption of the 4:2:2 Component Digital Television Standard, all digital production facilities were shown to be practical.[8]

A few years later when the ITU defined “HDTV,” the Recommendation stipulated: “the horizontal resolution for HDTV as being twice that of conventional television systems” described in Rec. 601and a picture aspect ratio of 16:9. A 16:9 aspect ratio picture requires one-third more pixels per active line than a 4:3 aspect ratio picture. Rec. 601 provided 720 samples per active line for the luminance channel and 360 samples for each of the color difference signals. Starting with 720, doubling the resolution to 1440, and adjusting the count for a 16:9 aspect ratio leads to the 1920 samples per active line defined as the basis for HDTV.[9] Accommodating the Hollywood and computer communities' request for “square pixels” meant that the number of lines should be 1920 x (9/16) = 1080.

Progressive scan systems at 1280 pixels per line and 720 lines per frame are also members of the “720 pixel” family. 720 pixels x 4/3 (resolution improvement) x 4/3 (16:9 aspect ratio adjustment) = 1280. Accommodating the Hollywood and computer communities' request for square pixels meant that the number of lines should be 1280 x (9/16) = 720.
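The arithmetic behind both members of the “720 pixel” family can be checked with a short sketch. The function name `hd_format` and its parameters are illustrative assumptions and do not come from any standard; the only inputs taken from the text are the 720 sample base, the resolution factors (2 and 4/3), the 4:3 and 16:9 aspect ratios, and the square pixel requirement.

```python
from fractions import Fraction

BASE_SAMPLES = 720  # luminance samples per active line in Rec. 601


def hd_format(resolution_factor, aspect=Fraction(16, 9), base_aspect=Fraction(4, 3)):
    """Return (samples_per_line, lines) for a square-pixel format derived from 720.

    Hypothetical helper for illustration only.
    """
    # Widening a 4:3 picture to 16:9 needs (16/9) / (4/3) = 4/3 more samples per line.
    widening = aspect / base_aspect
    samples = BASE_SAMPLES * Fraction(resolution_factor) * widening
    # Square pixels: the line count equals samples per line divided by the aspect ratio.
    lines = samples / aspect
    if samples.denominator != 1 or lines.denominator != 1:
        raise ValueError("format does not yield whole sample and line counts")
    return int(samples), int(lines)


print(hd_format(2))               # (1920, 1080)
print(hd_format(Fraction(4, 3)))  # (1280, 720)
```

Exact fractions are used instead of floating point so that the results are whole numbers without rounding.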

The original 720 pixel per active line structure became the basis of a family of structures (the 720 pixel family) that was adopted for MPEG-based systems, including both conventional television and HDTV systems. Therefore, most digital television systems, including digital video tape systems and DVD recordings, are derived from the format described in the original 4:2:2 standard.

The existence of a common digital component standard for both 50 Hz and 60 Hz environments as documented in SMPTE 125 and ITU Recommendation 601 provided a path for television production facilities to migrate to the digital domain. The appearance of high quality, fully digital production facilities providing digital video, audio, and metadata streams and the successful development of digital compression and modulation schemes allowed for the introduction of digital television broadcast services.

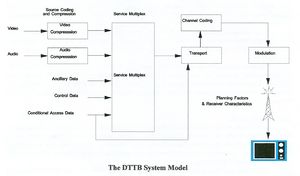

These digital television broadcast services, in turn, provided more efficient use of the spectrum, higher quality images accompanied by multichannel surround sound, the ability to include multiple supporting digital data streams in the broadcast channel, and the possibility of transmitting a theatre experience to the home ... and all of this was built on the foundation provided by the 4:2:2 Component Digital Television Standard, first described in February 1980 and adopted as an international agreement in February 1982. (A discussion of the international community’s effort to develop a standard for digital terrestrial television broadcasting (DTTB) is found in an article titled “Digital Television: The Digital Terrestrial Television Broadcasting (DTTB) Standard.” Figure 3 shows the DTTB System Model upon which the standard is based.)

In its 1982-1983 award cycle, the National Academy of Television Arts and Sciences recognized the 4:2:2 Component Digital Standard based on 13.5 MHz (Y) sampling with 720 samples per line with three EMMY awards:

The European Broadcasting Union (EBU) was recognized: “For achieving a European agreement on a component digital video studio specification based on demonstrated quality studies and their willingness to subsequently compromise on a world wide standard.”

The International Telecommunication Union (ITU) was recognized: “For providing the international forum to achieve a compromise of national committee positions on a digital video standard and to achieve agreement within the 1978-1982 period.”

The Society of Motion Picture and Television Engineers (SMPTE) was recognized: “For their early recognition of the need for a digital video standard, their acceptance of the EBU proposed component requirement, and for the development of the hierarchy and line lock 13.5 MHz demonstrated specification, which provided the basis for a world standard.”

Acknowledgments

This narrative is intended to acknowledge the early work on digital component coded television carried out over several years by hundreds of individuals, organizations, and administrations throughout the world. It is not possible in a limited space to list all of the individuals or organizations involved, but by casting a spotlight on the results of their work since the 1960's and its significance, the intent is to honor them all.

Individuals interested in the specific details of digital television standards and picture formats (e.g. 1080p, 720p, etc.) should inquire at www.smpte.org. SMPTE is the technical standards development organization (SDO) for motion picture film and television production.

End Notes

- This article builds on a prior article by Stanley Baron and David Wood, simultaneously published in the SMPTE Motion Imaging Journal, September 2005, pp. 327-334, as “The Foundations of Digital Television: the origins of the 4:2:2 DTV standard” and in the EBU Technical Review, October 2005, as “Rec. 601 the origins of the 4:2:2 DTV standard.”

- Guinet, Yves; “Evolution of the EBU's position in respect of the digital coding of television”, EBU Review Technical, June 1981, pp. 111-117.

- Davies, Kenneth; “SMPTE Demonstrations of Component Coded Digital Video, San Francisco, 1981”, SMPTE Journal, October 1981, pp. 923-925.

- Fink, Donald; “Television Engineering Handbook”, McGraw Hill [New York, 1957], p. 7-4.

- Baron, S.; “Sampling Frequency Compatibility”, SMPTE Digital Study Group, January 1980, revised and submitted to the SMPTE Task Force on Digital Video Standards, 11 February 1980. Later published in SMPTE Handbook, “4:2:2 Digital Video: Background and Implementation”, SMPTE, 1989, ISBN 0 940690 16, pp. 20-23.

- Weiss, Merrill & Marconi, Ron; “Putting Together the SMPTE Demonstrations of Component Coded Digital Video, San Francisco, 1981”, SMPTE Journal, October 1981, pp. 926-938.

- Davidoff, Frank; “Digital Television Coding Standards”, IEE Proceedings, 129, Pt.A., No.7, September 1982, pp. 403-412.

- Nasse, D., Grimaldi, J.L., and Cayet, A.; “An Experimental All Digital Television Center”, SMPTE Journal, January 1986, pp. 13-19.

- ITU Report 801, “The Present State of High Definition Television”, Part 3, “General Considerations of HDTV Systems”, Section 4.3, “Horizontal Sampling”.